For decades, structural hierarchy has been a key strategy for managing chip design complexity. It enables a divide-and-conquer approach, but no single decomposition is ideal, because each analysis needs to focus on a different aspect of the design. A system can be viewed through several equally valid hierarchies, which together form a heterarchy.

Conceptualizing Hierarchical Design

Engineers often begin with a block diagram, labeling components like CPU, encoder, or display subsystem. This diagram, rooted in printed circuit board design, resembles a pin-based hierarchy rather than a purely functional or structural decomposition. At the chip level, everything reduces to a sea of transistors, blurring traditional boundaries.

This hierarchy, inspired by PCB design (e.g., TI 7400 series logic libraries), encapsulates blocks for isolated development, minimizing interdependencies. The top level defines block interconnections, with each block undergoing similar decomposition.
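The pin-based decomposition described above can be pictured as a tree of blocks, where each block exposes pins and may instantiate other blocks. The following is a minimal sketch under that assumption; the `Block` class, its fields, and the example design are hypothetical and are not taken from any EDA tool or from the article.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    """A design block: a named box with pins and optional child instances."""
    name: str
    pins: list[str] = field(default_factory=list)
    # Instance name -> definition of the child block (definitions may be shared).
    children: dict[str, "Block"] = field(default_factory=dict)

    def leaf_count(self) -> int:
        """Number of leaf instances after the hierarchy is fully flattened."""
        if not self.children:
            return 1
        return sum(child.leaf_count() for child in self.children.values())

    def depth(self) -> int:
        """Number of hierarchy levels, counting this block as one level."""
        if not self.children:
            return 1
        return 1 + max(child.depth() for child in self.children.values())


# Hypothetical example design, invented for illustration only.
alu = Block("alu", pins=["a", "b", "y"])
regfile = Block("regfile", pins=["addr", "din", "dout"])
cpu = Block("cpu", pins=["clk", "bus"], children={"u_alu": alu, "u_rf": regfile})
encoder = Block("encoder", pins=["clk", "bus"])
soc = Block("soc", pins=["clk", "rst"],
            children={"u_cpu0": cpu, "u_cpu1": cpu, "u_enc": encoder})

print(soc.leaf_count(), soc.depth())
```

Because both CPU instances point at the same `cpu` definition, the block is described once and instantiated twice, which is the encapsulation that lets it be developed in isolation.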

Reasons for Hierarchical Design

Hierarchical design addresses several challenges:

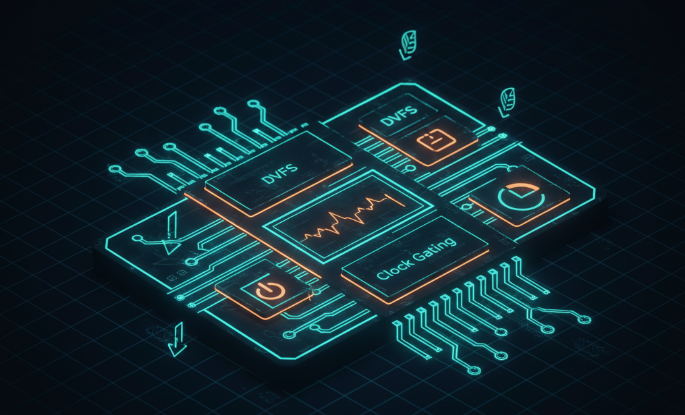

- Capacity: Large designs overwhelm algorithms, requiring decomposition to reduce runtime and resource demands.

- Parallel Engineering: Multiple teams work simultaneously on different blocks, often across global locations.

- Reuse: Standard cell libraries and IP blocks enable reuse, enhancing efficiency.

- Data Management: Managing vast design data requires structured organization.

- Repetitive Structures: Memory, multi-core arrays, and interfaces benefit from repeated patterns.

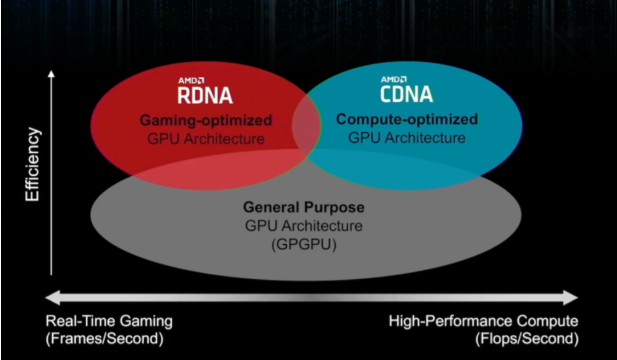

- Mixed Domains: Analog and digital blocks use different tools, necessitating separation.

Capacity Challenges

As designs grow, algorithms take longer to execute. Decomposition reduces execution time and memory usage. Synopsys' Jim Schultz notes that large designs may take days or weeks for physical implementation, and errors are costly. Hierarchy allows closing one design segment at a time, managing resource constraints.

Hierarchical analysis requires accurate boundary conditions for each block, with top-level cross-boundary analysis. Synopsys' Rimpy Chugh explains that flat analysis is accurate but slow, while a black-box approach sacrifices accuracy for speed. Retaining interface logic in abstract models balances both, enabling IP-level abstraction for SoC integration.
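One way to picture an abstract model that retains interface logic is as a pruned copy of the block: keep everything between the ports and the first register stage, and drop the register-to-register logic inside. The sketch below assumes a toy netlist representation (a `kind` map and a `fanout` map, both hypothetical) and is a simplified illustration, not the algorithm of any particular tool.

```python
from collections import deque


def interface_cells(kind, fanout):
    """Return the cells kept in a simplified interface-logic abstraction.

    kind:   cell name -> "in", "out", "reg" or "comb"
    fanout: cell name -> list of cells it drives
    """
    # Build the reverse graph so output ports can be traced backward.
    fanin = {name: [] for name in kind}
    for src, dsts in fanout.items():
        for dst in dsts:
            fanin[dst].append(src)

    def sweep(starts, edges):
        seen = set(starts)
        queue = deque(starts)
        while queue:
            node = queue.popleft()
            if kind[node] == "reg":
                continue  # keep the boundary register, but do not pass through it
            for nxt in edges.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    inputs = [n for n, k in kind.items() if k == "in"]
    outputs = [n for n, k in kind.items() if k == "out"]
    return sweep(inputs, fanout) | sweep(outputs, fanin)


# Hypothetical block: in -> g1 -> r1 -> g2 -> r2 -> g3 -> r3 -> g4 -> out
kind = {"a": "in", "g1": "comb", "r1": "reg", "g2": "comb", "r2": "reg",
        "g3": "comb", "r3": "reg", "g4": "comb", "y": "out"}
fanout = {"a": ["g1"], "g1": ["r1"], "r1": ["g2"], "g2": ["r2"],
          "r2": ["g3"], "g3": ["r3"], "r3": ["g4"], "g4": ["y"]}

kept = interface_cells(kind, fanout)
dropped = set(kind) - kept
print(sorted(kept), sorted(dropped))
```

In this toy block the internal cells `g2`, `r2`, and `g3` are dropped, while the logic that determines timing at the boundary is preserved for top-level analysis.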

Parallel Engineering

Distributed teams work on separate hierarchy levels, often globally, creating coordination challenges. Keysight's Simon Rance highlights the importance of stable hierarchies, as late changes are difficult. Teams may resort to workarounds, leading to suboptimal solutions, especially in smaller chips.

Reuse and Standardization

Hierarchy supports both top-down and bottom-up design. Cadence's Brian LaBorde compares transistors forming logic gates to cells forming organs in biology. Standard cell libraries and macros enable reuse, with virtual partitions defined in layout databases.

Data Management

Designs generate vast amounts of data, and standards such as ISO 26262 or MIL-STD-882 require traceability. Rance notes that documentation, verification, and test plans must be hierarchically organized to enable failure analysis, with all metadata linked so that it can be traced.

Repetitive Structures

Designs with repeated elements, like memory or CPU arrays, face unique challenges. Ansys' Marc Swinnen explains that a 4x4 CPU grid may have identical cores but varying boundary conditions, requiring individual optimization due to parasitic effects or thermal issues, balancing reuse and customization.
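To see why identical cores in a grid do not share identical surroundings, it can help to enumerate which sides of each core face a neighbor. The sketch below does this for a rectangular array; the function names and the idea of using neighbor presence as a stand-in for "boundary conditions" are assumptions made for illustration, since real parasitic and thermal context depends on far more than adjacency.

```python
from collections import defaultdict


def boundary_signature(row, col, rows, cols):
    """Return which sides (north, south, west, east) face another core."""
    return (row > 0, row < rows - 1, col > 0, col < cols - 1)


def group_by_context(rows, cols):
    """Group grid positions that share the same neighbor signature."""
    groups = defaultdict(list)
    for r in range(rows):
        for c in range(cols):
            groups[boundary_signature(r, c, rows, cols)].append((r, c))
    return dict(groups)


groups = group_by_context(4, 4)

# Histogram: number of neighbors -> how many cores have that many.
neighbor_histogram = defaultdict(int)
for signature, cores in groups.items():
    neighbor_histogram[sum(signature)] += len(cores)

print(len(groups), dict(neighbor_histogram))
```

Even under this crude model, a 4x4 array of one core design yields several distinct contexts (corners, edges by side, and interior), which hints at why a single optimized instance cannot always be stamped out unchanged.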

Mixed Domains

Analog and digital domains require distinct tools. Siemens' Ron Press notes that hierarchical DFT, such as core-level scan chains, simplifies testing by isolating cores. This enables plug-and-play integration and independent pattern generation, which streamlines the workflows of distributed teams.

Challenges of Structural Hierarchy

Hierarchy introduces overhead, particularly at block boundaries. Synopsys' Schultz highlights the challenge of defining constraints accurately and pushing them to block boundaries, limiting cross-boundary optimization. Siemens' Press notes that early top-level decisions, like pin allocation for DFT, can reduce efficiency if misaligned with core needs.

Poor partitioning can cause congestion, especially in SoCs, where communication across blocks creates feedthroughs, complicating timing closure. Ansys' Swinnen explains that logical connections at boundaries lack physical wires, while feedthroughs have physical wires but no logical representation, creating modeling challenges.
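A rough feel for how feedthroughs arise can be had from a one-dimensional model: if blocks abut in a row, any connection between two non-adjacent blocks has to pass through the blocks in between. The sketch below counts those crossings. The row layout, block names, and net list are hypothetical, and a real floorplan is two-dimensional with routing choices this model ignores.

```python
def feedthrough_counts(row_order, nets):
    """Count nets that pass through each block without connecting to it.

    row_order: block names in left-to-right abutment order
    nets:      list of (block_a, block_b) two-pin connections
    """
    position = {name: i for i, name in enumerate(row_order)}
    counts = {name: 0 for name in row_order}
    for src, dst in nets:
        lo, hi = sorted((position[src], position[dst]))
        # Every block strictly between the endpoints carries a feedthrough.
        for name in row_order[lo + 1:hi]:
            counts[name] += 1
    return counts


# Hypothetical floorplan row and connectivity.
row = ["cpu", "noc", "dsp", "ddr"]
nets = [("cpu", "noc"), ("cpu", "ddr"), ("cpu", "dsp"), ("noc", "ddr")]

counts = feedthrough_counts(row, nets)
print(counts)
```

Each counted crossing is a physical wire inside a block that has no counterpart in that block's logical netlist, which is the modeling mismatch described above.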

Logical vs. Physical Hierarchy

Logical hierarchies from RTL synthesis must map to physical partitions. Schultz notes that suboptimal logical hierarchies, where blocks communicate across distant partitions, require RTL restructuring, necessitating collaboration between logical and physical design teams.
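The cost of a poorly matched logical hierarchy can be approximated by counting connections whose endpoints land in different physical partitions. The sketch below compares two groupings of the same modules; the module names, partition labels, and nets are invented for illustration, and the cut count is only a proxy for the timing and congestion effects a real flow would evaluate.

```python
def cross_partition_nets(assignment, nets):
    """Return the nets whose two endpoints sit in different partitions."""
    return [(a, b) for a, b in nets if assignment[a] != assignment[b]]


# Hypothetical module-to-module connections.
nets = [("fetch", "decode"), ("decode", "alu"), ("alu", "lsu"),
        ("lsu", "cache"), ("fetch", "cache")]

# Grouping inherited from the RTL hierarchy (assumed).
rtl_grouping = {"fetch": "P0", "decode": "P1", "alu": "P0",
                "lsu": "P1", "cache": "P0"}

# Grouping after a hypothetical RTL restructuring.
restructured = {"fetch": "P0", "decode": "P0", "alu": "P0",
                "lsu": "P1", "cache": "P1"}

before = cross_partition_nets(rtl_grouping, nets)
after = cross_partition_nets(restructured, nets)
print(len(before), len(after))
```

Regrouping modules so that tightly connected logic shares a partition reduces the number of boundary crossings, which is the kind of change that calls for cooperation between the RTL and physical design teams.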

Arteris' Frank Schirrmeister adds that NoC integration at the top level may require RTL refactoring for power domains, ideally automated to avoid manual module changes.

Data Tracking and Revisions

Rance emphasizes the complexity of revision control for hierarchical documentation. Multiple hierarchy versions, created for analyses like PPA optimization, require meticulous tracking to maintain design integrity.

Global Analyses

Some analyses, like thermal modeling, defy hierarchical simplification. Schirrmeister notes that thermal analysis requires chip-wide evaluation, correlating hotspots with functional data, a complex task across the design lifecycle.

Alternative Hierarchies

Functional hierarchies, like requirement tracking systems, decompose high-level tasks into simpler ones, defining logic and verification tests. Temporary hierarchies, such as clock trees or power distribution, emerge during design. Physical layout hierarchies start with floorplanning, followed by block placement and routing, with 3D-ICs adding vertical dimensions.

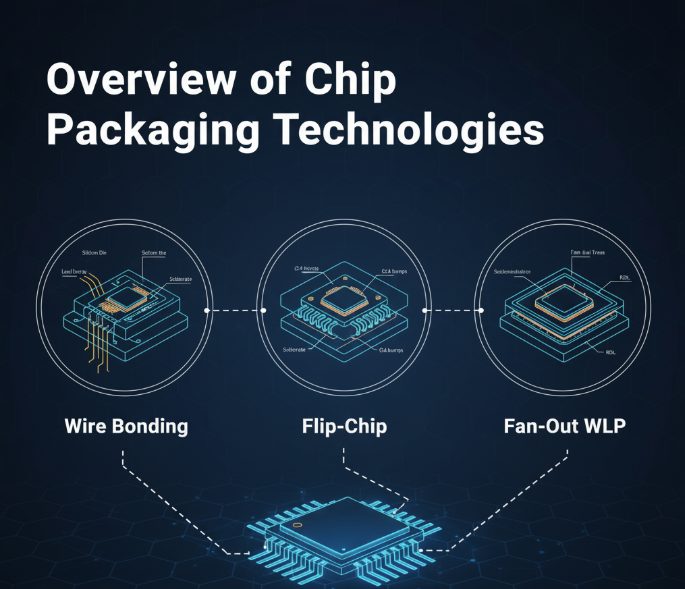

Cadence's LaBorde notes that 2.5D and 3D systems, using chiplets, reduce the strategic importance of traditional hierarchies, as macros represent distinct chips connected by bumps rather than symbolic pins.

Conclusion

Despite its imperfections, structural hierarchy remains a proven method for managing chip design complexity. It complicates some processes and limits optimization at block boundaries, but tools mitigate these shortcomings. The lost optimization potential is a small price for the productivity gains hierarchy enables.

ALLPCB