The general rule is: the lower the resistance value, the better. In practice, many designers set the resistor's maximum voltage drop to about 100 mV at the highest current as a compromise. Regardless of the chosen value and associated drop, the "optimal" value of the resistor remains subject to debate and depends on the priorities for current sensing.

Is selecting a current-sense resistor straightforward? The basic idea is appealing: measure the voltage across a known resistor and apply Ohm's law, I = V/R, to determine current. It seems simple, but there are subtleties that must be acknowledged. For very low resistances, typically in the 100 milliohm range and below, four-wire Kelvin sensing with a high-impedance measurement stage is commonly used so that lead and contact resistance do not add to the measured drop (source: Vishay Intertechnology via Eastern States Components).

Trade-offs Between Resistance and Voltage Drop

Choosing the resistor value is not trivial. A larger resistance increases the voltage drop across the resistor, which improves signal amplitude, signal-to-noise ratio, and resolution. However, a larger resistance also wastes power and can affect circuit stability by introducing additional series resistance between the supply and the load, and it increases self-heating of the resistor.

Therefore, lower resistance values are usually preferred. Many designers adopt the practical compromise of limiting the maximum voltage drop to around 100 mV at full current. Even so, the "best" value depends on the sensing priorities for the application and remains open to discussion.
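As a rough illustration of the 100 mV rule of thumb, the sketch below sizes a resistor from the full-scale current and reports the dissipation at that current. The function name `size_sense_resistor` and the example currents are assumptions made for illustration, not values from any datasheet.

```python
def size_sense_resistor(i_max_a: float, v_drop_max_v: float = 0.100):
    """Return (resistance in ohms, dissipation in watts) at full current.

    Uses R = V_drop / I_max and P = V_drop * I_max. The 100 mV default
    is the rule-of-thumb drop discussed in the text, not a hard limit.
    """
    if i_max_a <= 0 or v_drop_max_v <= 0:
        raise ValueError("current and voltage drop must be positive")
    r_ohm = v_drop_max_v / i_max_a
    p_w = v_drop_max_v * i_max_a
    return r_ohm, p_w


# Hypothetical full-scale currents, chosen only to show the trend.
for i_max in (1.0, 10.0, 50.0):
    r, p = size_sense_resistor(i_max)
    print(f"{i_max:5.1f} A -> {r * 1e3:6.2f} mOhm, {p:5.2f} W at full current")
```

With the drop fixed, dissipation scales directly with full-scale current, so high-current designs often accept a smaller drop and a weaker signal to keep resistor heating manageable.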

IR Voltage Drop and Self-Heating

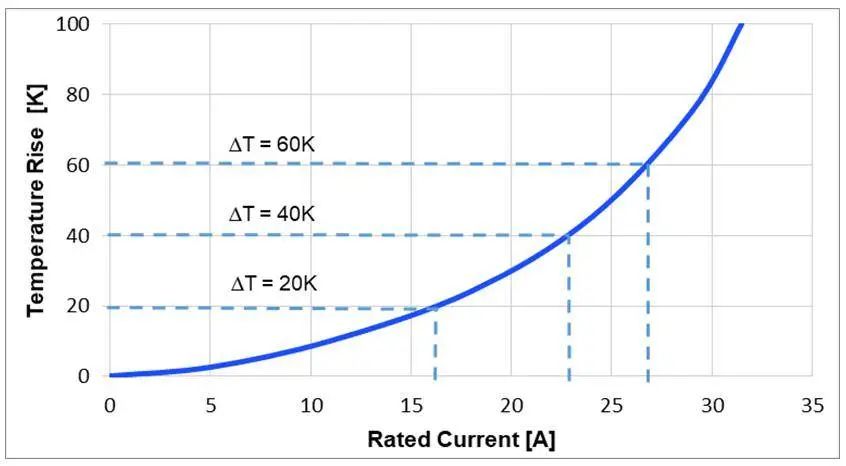

An obvious issue is the IR voltage drop. A related and unavoidable consequence is self-heating of the resistor. Even when the nominal resistance is only a few milliohms, dissipation follows P = I²R and grows quickly with current: 10 A through a 10 mΩ resistor dissipates 1 W. This dissipation raises the resistor temperature and changes its resistance, which in turn distorts the measured current value.

To address this, except in milliamp or microamp applications where self-heating is negligible, designers must use the supplier-provided temperature coefficient of resistance (TCR) data to analyze resistance change. Note that this analysis can be iterative: resistance change can affect current (depending on what drives the current into the load), which then affects self-heating and thus resistance again.
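The iterative analysis described above can be sketched as a simple fixed-point loop. This is a minimal model under stated assumptions: a linear TCR, a single lumped thermal resistance `theta_c_per_w` from resistor to ambient (a hypothetical parameter; real values depend on the part, the PCB copper, and airflow), and a caller-supplied function `current_for` that says how the current responds to the sense resistance.

```python
def settle_self_heating(r_ref_ohm, tcr_ppm_per_c, theta_c_per_w,
                        t_ambient_c, current_for, t_ref_c=25.0,
                        tol_ohm=1e-12, max_iter=200):
    """Iterate resistance -> current -> power -> temperature -> resistance.

    current_for(r) returns the current in amperes for a given sense
    resistance, which models whatever drives current into the load.
    Returns (settled resistance, resistor temperature, current).
    """
    r = r_ref_ohm
    for _ in range(max_iter):
        i = current_for(r)
        # Lumped thermal model (assumption): rise = theta * I^2 * R.
        temp_c = t_ambient_c + theta_c_per_w * i * i * r
        # Linear TCR model (assumption): R = R_ref * (1 + TCR * dT).
        r_new = r_ref_ohm * (1.0 + tcr_ppm_per_c * 1e-6 * (temp_c - t_ref_c))
        if abs(r_new - r) < tol_ohm:
            return r_new, temp_c, current_for(r_new)
        r = r_new
    raise RuntimeError("self-heating iteration did not converge")


# Case 1: a current source forces 10 A regardless of the sense resistance.
r_cc, t_cc, i_cc = settle_self_heating(
    r_ref_ohm=0.010, tcr_ppm_per_c=200.0, theta_c_per_w=50.0,
    t_ambient_c=25.0, current_for=lambda r: 10.0)
print(f"constant current: {r_cc * 1e3:.4f} mOhm at {t_cc:.1f} C")

# Case 2: a 12 V source feeds a 1.19 ohm load through the sense resistor.
r_vs, t_vs, i_vs = settle_self_heating(
    r_ref_ohm=0.010, tcr_ppm_per_c=200.0, theta_c_per_w=50.0,
    t_ambient_c=25.0, current_for=lambda r: 12.0 / (1.19 + r))
print(f"voltage driven:   {r_vs * 1e3:.4f} mOhm, {i_vs:.4f} A")
```

In the constant-current case the resistance drift feeds straight back into dissipation, while in the voltage-driven case a rising sense resistance slightly reduces the current, which is why the source of the current matters to the analysis.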

Current-Sense Accuracy

Estimating overall current-sense accuracy requires accounting for three factors: initial resistor tolerance, TCR error from environmental temperature variations, and TCR error from self-heating. Suppliers provide precision metal foil resistors with very low TCR to address these needs.
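A first-pass error budget for these three factors might look like the sketch below. The function name, the example temperature figures, and the choice to report both a worst-case sum and a root-sum-square (RSS) figure are assumptions for illustration. RSS treats the terms as independent and random, which is optimistic for self-heating because that error is systematic.

```python
import math


def sense_error_budget(tolerance_pct, tcr_ppm_per_c,
                       ambient_swing_c, self_heating_rise_c):
    """Combine the three resistor error terms, all expressed in percent."""
    ppm_to_pct = 1e-4  # 1 ppm = 1e-6 = 1e-4 percent
    ambient_pct = tcr_ppm_per_c * ppm_to_pct * ambient_swing_c
    heating_pct = tcr_ppm_per_c * ppm_to_pct * self_heating_rise_c
    return {
        "tolerance_pct": tolerance_pct,
        "ambient_tcr_pct": ambient_pct,
        "self_heating_tcr_pct": heating_pct,
        "worst_case_pct": tolerance_pct + ambient_pct + heating_pct,
        "rss_pct": math.sqrt(tolerance_pct ** 2 + ambient_pct ** 2
                             + heating_pct ** 2),
    }


# Hypothetical case: 1% part, 200 ppm/C, 40 C ambient swing, 50 C rise.
budget = sense_error_budget(1.0, 200.0, 40.0, 50.0)
for name, value in budget.items():
    print(f"{name:>22}: {value:.2f} %")
```

In this hypothetical case the two TCR terms together exceed the initial tolerance, so buying a tighter-tolerance part without also addressing TCR would improve the budget only modestly.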

Manufacturers achieve low TCR by using specialized alloy formulations and production techniques that minimize temperature dependence. For example, the Bourns CRL2010-FW-R015E is a 15 mΩ, 1% device with a TCR of ±200 ppm/°C (see the application note "Designer's Guide to Chip Resistors, Chip Diodes and Power Chokes for Power Supplies and DC/DC Converters"). Alloy formulations can be counterintuitive: copper is sometimes added even when it produces a nominally higher TCR because it improves overall heat dissipation and reduces self-heating.

For metrology-grade measurements, resistors with the lowest TCR are accompanied by a full resistance-vs-temperature characteristic curve, often showing a parabolic shape that depends on the alloy composition. High-precision analysis must also consider TCR nonlinearity (see Vishay technical note "Non-Linearity of Resistance/Temperature Characteristics: Its Influence on Performance of Precision Resistors") and the TCR of resistor terminations and leads.
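To see why a single TCR number can mislead when the characteristic is parabolic, the sketch below models the resistance change as a second-order polynomial in temperature and computes the average ("chord") TCR over two different intervals. The coefficients `alpha` and `beta` are hypothetical and chosen only for illustration. Real values come from the supplier's curve.

```python
def delta_r_ppm(temp_c, alpha_ppm_per_c, beta_ppm_per_c2, t_ref_c=25.0):
    """Resistance change in ppm relative to t_ref_c, quadratic model."""
    dt = temp_c - t_ref_c
    return alpha_ppm_per_c * dt + beta_ppm_per_c2 * dt * dt


def chord_tcr_ppm_per_c(t_lo_c, t_hi_c, alpha_ppm_per_c, beta_ppm_per_c2,
                        t_ref_c=25.0):
    """Average TCR (slope of the chord) between two temperatures."""
    hi = delta_r_ppm(t_hi_c, alpha_ppm_per_c, beta_ppm_per_c2, t_ref_c)
    lo = delta_r_ppm(t_lo_c, alpha_ppm_per_c, beta_ppm_per_c2, t_ref_c)
    return (hi - lo) / (t_hi_c - t_lo_c)


# Hypothetical coefficients for a downward-opening parabola.
ALPHA = 0.5    # ppm/C, linear term (assumed)
BETA = -0.04   # ppm/C^2, quadratic term (assumed)

cold = chord_tcr_ppm_per_c(-55.0, 25.0, ALPHA, BETA)
hot = chord_tcr_ppm_per_c(25.0, 125.0, ALPHA, BETA)
print(f"cold chord TCR: {cold:+.2f} ppm/C, hot chord TCR: {hot:+.2f} ppm/C")
```

With these assumed coefficients the chord TCR changes sign between the cold and hot intervals, so the effective TCR depends on which part of the temperature range the resistor actually operates in.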

Certain applications do not require high accuracy and can tolerate standard resistors and coarse measurements. However, in many cases reasonable consistency, accuracy, and attention to self-heating are required because TCR effects can produce significant errors in inferred current values.

Choosing a Current-Sense Strategy

Designers must decide whether to accept a lower-cost sense resistor and manage the resulting TCR drift, or to use a higher-cost, very low-TCR device. Alternatives include choosing a resistor with a higher power rating and larger physical size for better dissipation, or adding a small heatsink to the resistor. Whichever approach is chosen should be justified at design review, where these trade-offs are commonly challenged.

Although the relationship V = I·R may seem trivial, its consequences can be significant. In systems with large battery packs, photovoltaic arrays, or motor drives, inaccurate current readings can cause unexplained behavior, substandard performance and efficiency, and in some cases safety risks.

Have issues been encountered due to current-sense resistor TCR, ambient temperature changes, or resistor self-heating? How were these resolved in design? Was a non-resistive current-sensing solution ultimately adopted?

ALLPCB
