Overview

Recently, Yann LeCun reshared two long papers that examine whether large language models (LLMs) can plan and reason, and the origin of so-called emergent capabilities. The authors argue that LLMs do not possess inherent planning or reasoning abilities, and that apparent emergent skills largely stem from in-context learning.

Do LLMs actually reason?

The question of whether large language models can reason or plan has attracted renewed attention. One paper asserted that "autoregressive LLMs cannot form plans (and cannot truly reason)." A second paper, highlighted by LeCun, examined emergent capabilities and argued, on the basis of more than 1,000 experiments, that what is often called emergence is primarily in-context learning.

Findings from recent research

Subbarao Kambhampati and colleagues argue that claims about LLMs being able to reason and plan do not hold up under careful testing, even as the research community takes the question seriously. Their earlier work evaluating GPT-3 concluded that LLMs perform poorly on planning and on reasoning about actions and change. They developed a scalable evaluation framework for this kind of reasoning, provided test cases more complex than those in prior benchmarks, and evaluated several distinct aspects of reasoning about actions and change. GPT-3 (davinci), InstructGPT-3 (text-davinci-002), and BLOOM (176B) all showed weak performance on these tasks.

The team extended that research to newer models, including GPT-4, to see whether the latest state-of-the-art models show progress on planning and reasoning. They generated instances similar to those used in international planning competitions and evaluated LLMs in two modes: autonomous and heuristic. Results indicate that the LLMs' autonomous ability to generate executable plans is very limited; GPT-4's average success rate across domains was around 12%.

However, in the heuristic mode there was more promise. The researchers showed that LLM-generated plans can improve the search process of an underlying symbolic planner, and that external verifiers can provide feedback to refine plans and prompt the LLM to produce better plans.
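The verifier-in-the-loop arrangement described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the simplified Blocks World encoding, the `verify` and `refine` functions, and the `propose` callback (a stand-in for an LLM call) are all assumptions made for this example.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, str]  # block -> what it sits on ("table" or another block)
Move = Tuple[str, str]  # (block to move, destination)


def is_clear(state: State, block: str) -> bool:
    """A block is clear when no other block sits on it."""
    return block not in state.values()


def verify(state: State, goal: State, plan: List[Move]) -> Optional[str]:
    """Simulate the plan. Return None on success, else a feedback message."""
    state = dict(state)  # simulate on a copy so the caller's state is untouched
    for step, (block, dest) in enumerate(plan, start=1):
        if block not in state:
            return f"step {step}: unknown block {block}"
        if not is_clear(state, block):
            return f"step {step}: {block} is not clear"
        if dest != "table" and (
            dest not in state or dest == block or not is_clear(state, dest)
        ):
            return f"step {step}: cannot place {block} on {dest}"
        state[block] = dest
    unmet = sorted(b for b, on in goal.items() if state.get(b) != on)
    return f"goal not reached for: {', '.join(unmet)}" if unmet else None


def refine(
    propose: Callable[[Optional[str]], List[Move]],  # hypothetical LLM wrapper
    state: State,
    goal: State,
    max_rounds: int = 3,
) -> Optional[List[Move]]:
    """Ask for a plan, verify it, and feed any error back to the proposer."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        plan = propose(feedback)
        feedback = verify(state, goal, plan)
        if feedback is None:
            return plan
    return None  # no valid plan within the round budget
```

In this sketch the correctness guarantee comes entirely from `verify`, which simulates every step; the proposer only supplies candidates. That matches the article's point that the LLM acts as an idea generator while a sound external component decides what counts as a valid plan.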

Why apparent reasoning may be misleading

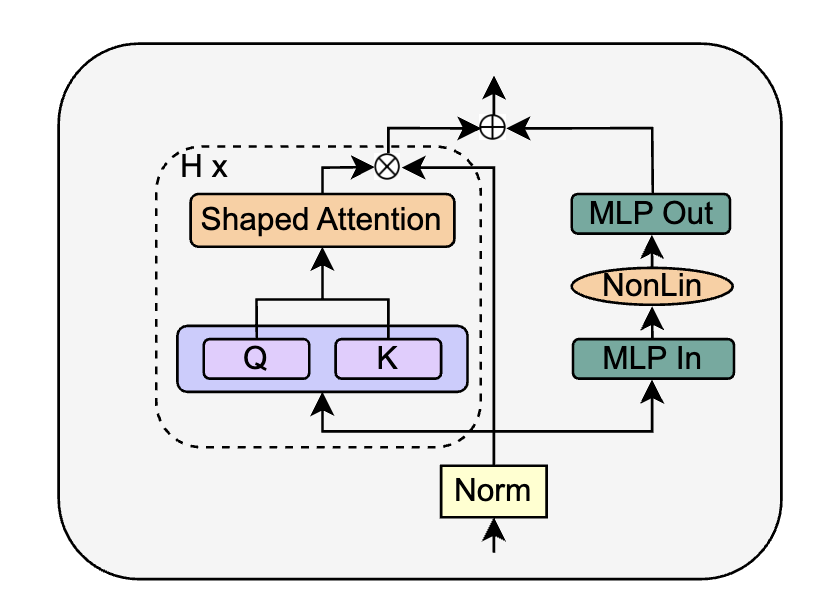

The paper includes a diagram suggesting that LLMs often appear to reason because tasks are simple and the questioner already knows the answer. For competition-grade planning and reasoning tasks, such as Blocks World problems from the International Planning Competition (IPC), LLM performance is unsatisfactory.

Preliminary results show some improvement in plan accuracy from GPT-3 to GPT-3.5 to GPT-4. GPT-4 reached about 30% empirical accuracy in Blocks World, although performance in other domains remained low. The researchers suggest that strong performance on many planning tasks may reflect large-scale training that lets models "memorize" planning content.

To reduce the effectiveness of approximate retrieval, that is, recall of memorized planning content, the team obfuscated the action and object names in planning problems so the model could not rely on what it had memorized. Under these conditions, GPT-4's empirical performance dropped sharply.
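To make the obfuscation idea concrete, here is a minimal sketch that consistently renames actions and objects in a PDDL-like problem string. The function names, the eight-letter random tokens, and the whole-word matching rule are assumptions for illustration; the article does not describe the exact scheme the researchers used.

```python
import random
import re
import string
from typing import Dict, Iterable


def build_mapping(names: Iterable[str], seed: int = 0) -> Dict[str, str]:
    """Map each known name to a unique random lowercase token."""
    rng = random.Random(seed)  # seeded so the renaming is reproducible
    mapping: Dict[str, str] = {}
    used = set()
    for name in sorted(set(names)):
        token = "".join(rng.choices(string.ascii_lowercase, k=8))
        while token in used:  # regenerate on the (unlikely) collision
            token = "".join(rng.choices(string.ascii_lowercase, k=8))
        used.add(token)
        mapping[name] = token
    return mapping


def obfuscate(text: str, mapping: Dict[str, str]) -> str:
    """Replace whole-word occurrences of each mapped name in one pass."""
    if not mapping:
        return text
    # Longest names first, and treat '-' as part of a word so that
    # "pick-up" is matched as a unit and "stack" is not hit inside "unstack".
    alternatives = "|".join(
        re.escape(name) for name in sorted(mapping, key=len, reverse=True)
    )
    pattern = re.compile(r"(?<![\w-])(" + alternatives + r")(?![\w-])")
    return pattern.sub(lambda m: mapping[m.group(1)], text)
```

Because the same mapping is applied everywhere, the problem keeps its logical structure while losing the surface vocabulary a model may have seen during training. A plan produced for the obfuscated problem can be translated back with the inverse mapping before it is checked.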

Attempts to improve planning ability

The team explored two approaches to overcome the limitation that LLMs cannot autonomously plan:

- Fine-tuning the model. After fine-tuning, the research team did not observe an increase in genuine planning capability. They argue that any apparent improvement from fine-tuning may merely convert planning into memory-based retrieval rather than demonstrating autonomous planning.

- Iterative prompting to refine initial plans. Continually prompting an LLM to generate improvement suggestions essentially asks the model to produce guesses, or relies on a human to select which guesses improve the plan. This does not show that the model itself has acquired planning abilities.

The researchers found that many high-profile papers claiming LLM planning ability do not withstand scrutiny, because they either operate in domains where subgoal interactions can safely be ignored, or they delegate the reasoning to humans through iterative prompting and correction. Without such assumptions or safeguards, plans produced by LLMs may look plausible to lay users but fail during execution. A practical example is the proliferation of LLM-generated travel guides, which readers may mistake for executable plans and which often lead to disappointing results.

In summary, the authors concluded that none of the work they examined or verified provides convincing evidence that LLMs reason or plan in the conventional sense. What appears as reasoning or planning is often large-scale retrieval. LLMs are good at generating ideas for tasks that involve reasoning, which can be used to support planning, but this should not be conflated with autonomous reasoning or planning capability.

Emergence or in-context learning?

A second paper investigated the role of in-context learning in apparent emergent capabilities. The researchers found that LLMs can perform surprisingly well on some tasks they were not explicitly trained for, and this has influenced directions in NLP research. However, they also noted potential confounding factors in evaluations, such as prompt engineering. In-context learning and instruction following are examples of mechanisms that can produce apparent capabilities as model size increases.

The research team conducted a comprehensive study, controlling for potential evaluation biases. They evaluated 18 models ranging from 60 million to 175 billion parameters across 22 tasks. After more than 1,000 experiments, they presented evidence that observed emergent capabilities are primarily due to in-context learning. They did not find evidence that LLMs possess reasoning ability independent of in-context effects.

Experimental methodology

The researchers examined several issues:

- To eliminate the influence of in-context learning and instruction tuning, they tested zero-shot settings and used models that were not instruction-tuned.

- They explored the interaction between in-context learning and instruction tuning to determine whether instruction-tuned models' extra capabilities reflect reasoning or simply better in-context learning. They compared models without instruction tuning to models with varying degrees of instruction tuning across multiple scales.

- They manually inspected models for functional language ability, formal language ability, and whether models could memorize tasks.

The experimental design deliberately avoided triggering in-context learning. Instruction tuning can convert training instructions into exemplars, which may induce in-context learning. To avoid that, the team used non-instruction-tuned models.
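The difference between a zero-shot prompt and a few-shot prompt, which is what separates the "no in-context learning" setting from the usual one, can be illustrated with a small sketch. The prompt layout and the `build_prompt` helper are assumptions for this example and are not the templates used in the paper.

```python
from typing import Sequence, Tuple


def build_prompt(
    question: str,
    options: Sequence[str],
    exemplars: Sequence[Tuple[str, str]] = (),
) -> str:
    """Build a prompt; with no exemplars it is zero-shot, otherwise few-shot."""
    parts = []
    # Each solved exemplar is a (question, answer) pair shown before the query.
    for ex_question, ex_answer in exemplars:
        parts.append(f"Question: {ex_question}\nAnswer: {ex_answer}")
    # The actual query always comes last and leaves the answer open.
    parts.append(
        f"Question: {question}\nOptions: {', '.join(options)}\nAnswer:"
    )
    return "\n\n".join(parts)


zero_shot = build_prompt("Is the sky green?", ["yes", "no"])
few_shot = build_prompt(
    "Is the sky green?",
    ["yes", "no"],
    exemplars=[("Is water wet?", "yes"), ("Is fire cold?", "no")],
)
```

Only `few_shot` contains solved examples the model can imitate. Evaluating a non-instruction-tuned model on `zero_shot` alone removes both routes to in-context learning that the article describes.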

The models evaluated came from four families: GPT, T5, Falcon, and LLaMA. These were chosen because GPT and LLaMA have previously exhibited emergent behavior, Falcon ranks highly among LLMs, and T5 is an encoder-decoder model whose instruction-tuned version (FLAN) was trained on many instruction datasets. Within GPT, the team used both instruction-tuned and non-instruction-tuned variants; for T5 they evaluated T5 and FLAN-T5; for Falcon they tested both tuned and non-tuned versions; LLaMA lacked an instruction-tuned variant, so only the non-tuned version was used. The team also evaluated GPT-3 text-davinci-003, an InstructGPT model fine-tuned with reinforcement learning from human feedback, a technique that has been shown to improve performance.

One T5 variant was intentionally chosen with fewer than 1B parameters as a control, since emergence had not been observed in models that small.

The team evaluated 12 selected models from the T5 and GPT families on all 22 tasks. Every setting used the same two prompt strategies: closed and adversarial prompts. Each experiment was run three times to account for variability, and the results were averaged. Experiments ran on NVIDIA A100 GPUs with temperature set to 0.01 and batch size 16. For the GPT-3 175B models (davinci, text-davinci-001, and text-davinci-003), they used the official API with temperature 0 and a single run to ensure reproducibility and minimize hallucinations.

They also selected six models from LLaMA and Falcon and evaluated them on a subset of four tasks chosen to include two tasks previously identified as emergent and two identified as non-emergent. Those experiments also used closed and adversarial prompts and were repeated three times.

For tasks with variable numbers of options, the baseline was built by repeatedly sampling options and averaging scores.
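A sampling-based random baseline of this kind can be sketched as below. The function name, the number of trials, and the uniform sampling over each example's options are assumptions; the article only says that options were sampled repeatedly and the scores averaged.

```python
import random
from typing import Sequence


def random_baseline(
    option_counts: Sequence[int],  # number of answer options per example
    gold_indices: Sequence[int],   # index of the correct option per example
    trials: int = 1000,
    seed: int = 0,
) -> float:
    """Estimate the accuracy of uniform random guessing by simulation."""
    if not option_counts or len(option_counts) != len(gold_indices):
        raise ValueError("need equally sized, non-empty inputs")
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # One trial: guess uniformly at random for every example.
        correct = sum(
            rng.randrange(n) == gold
            for n, gold in zip(option_counts, gold_indices)
        )
        total += correct / len(option_counts)
    return total / trials  # mean accuracy over all trials
```

For examples with 2, 4, 4, and 5 options, the estimate should land close to the analytic value (1/2 + 1/4 + 1/4 + 1/5) / 4 = 0.3. Simulation is mainly convenient when scoring is more involved than a simple match, since the same loop works with any per-example score.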

Results

First, the team asked which capabilities truly emerge in the absence of in-context learning and instruction tuning. They reported the performance of a 175B GPT-3 model under zero-shot, non-instruction-tuned conditions. Across the evaluated models, when in-context learning was absent the models consistently lacked the reported emergent capabilities.

Using BERTScore accuracy (BSA) and exact match accuracy (EMA) under few-shot and zero-shot settings, they compared instruction-tuned and non-instruction-tuned models. Instruction-tuned models perform as reported in prior literature under few-shot conditions. However, non-instruction-tuned models under zero-shot conditions, representing results without in-context learning influence, generally performed at or near the random baseline for many tasks.
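Of the two metrics, exact match accuracy is simple enough to sketch directly. The normalization used here (lowercasing, stripping punctuation, collapsing whitespace) is an assumption, since the article does not say how answers were normalized. BERTScore accuracy needs an embedding model and is not shown.

```python
import string
from typing import Sequence

# Translation table that deletes ASCII punctuation characters.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def normalize(text: str) -> str:
    """Lowercase, drop ASCII punctuation, and collapse whitespace."""
    return " ".join(text.lower().translate(_PUNCT_TABLE).split())


def exact_match_accuracy(
    predictions: Sequence[str], references: Sequence[str]
) -> float:
    """Fraction of predictions equal to their reference after normalization."""
    if not predictions or len(predictions) != len(references):
        raise ValueError("need equally sized, non-empty inputs")
    matches = sum(
        normalize(pred) == normalize(ref)
        for pred, ref in zip(predictions, references)
    )
    return matches / len(predictions)
```

Comparing this score against a random-guessing baseline for the same task is what lets the authors say a model performs "at or near" chance.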

Second, they investigated whether instruction tuning reveals genuine reasoning ability or simply enables more effective in-context learning. The researchers hypothesize that instruction tuning teaches a model to map instructions to exemplars, and that these exemplars then engage in-context learning. On this view, the gains from instruction tuning come from in-context learning rather than from a separate reasoning ability.

Their experiments, including analyses of T5 family models across different settings, support the conclusion that instruction tuning mainly improves models by enabling or enhancing in-context learning rather than by conferring independent reasoning ability.

Conclusion

Overall, the two papers question claims that LLMs autonomously reason or plan. Evidence from extensive experiments suggests that much of what is called emergence is attributable to in-context learning and instruction tuning, and that models often rely on memorized content or external validation and iteration rather than genuine autonomous planning. LLMs can generate useful ideas and heuristics that support human reasoning and symbolic planners, but current evidence does not show that they reason or plan in the conventional, autonomous sense.

ALLPCB
