Overview

Tesla has rolled out FSD V12.1.2 Beta, an end-to-end version of its Full Self-Driving software, to users in the US, drawing significant attention. This article explains what an end-to-end autonomous driving system is and how it differs from a conventional modular stack.

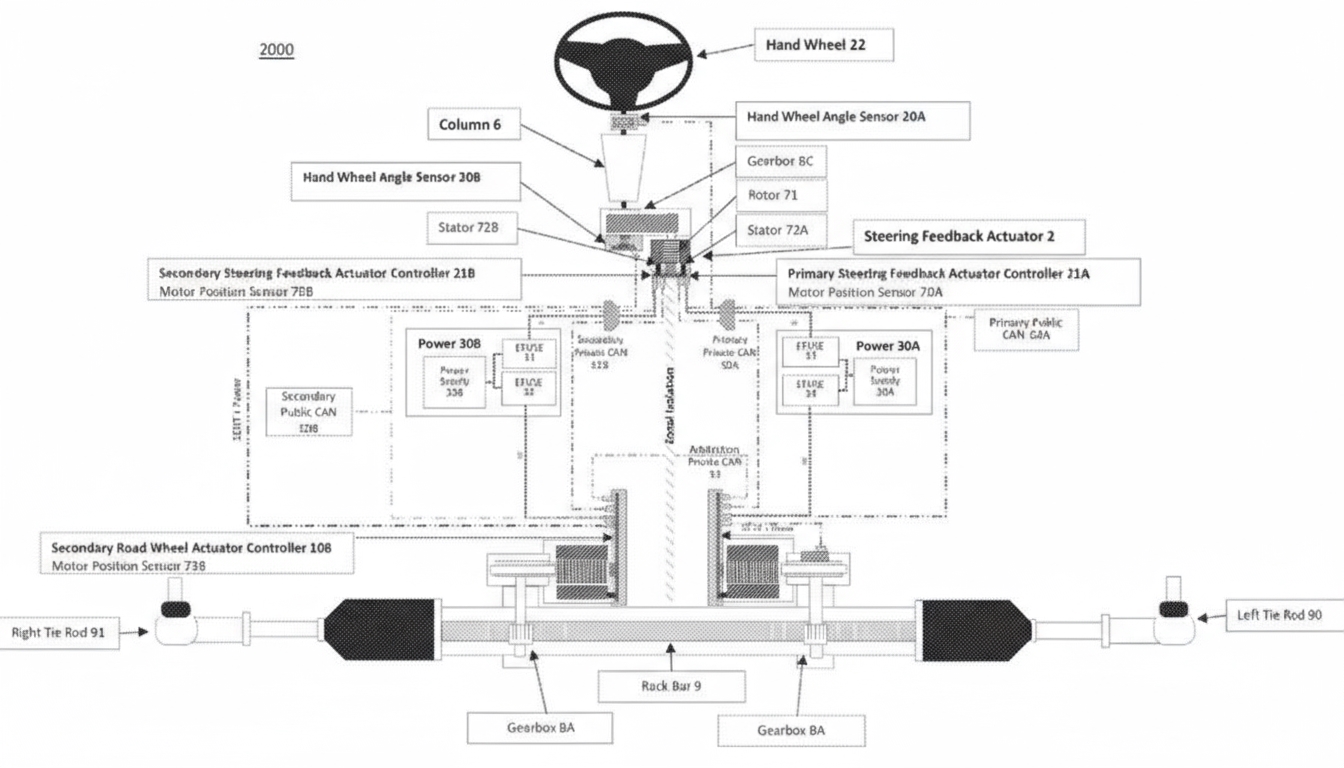

Conventional autonomous driving architectures are modular: separate perception, planning, and control modules interact through well-defined interfaces. Tesla's end-to-end large model instead merges perception and planning/control into a single integrated neural network.

The end-to-end approach is implemented entirely with a full-stack neural network: sensor data are fed directly into the network and the outputs are steering, braking, and acceleration commands.
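To make this input/output contract concrete, here is a toy sketch of a policy that maps a sensor feature vector straight to steering, braking, and throttle, with no hand-written perception or planning stage in between. The class name, layer sizes, random weights, and the tanh/sigmoid squashing of the outputs are all assumptions for illustration; a production system takes multi-camera video and is vastly larger.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class ControlCommand:
    steering: float  # normalized to [-1, 1]
    brake: float     # normalized to [0, 1]
    throttle: float  # normalized to [0, 1]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyEndToEndPolicy:
    """Hypothetical toy policy: sensor features -> one hidden layer -> control commands.

    Weights are random here; in practice they would be learned from driving data.
    """

    def __init__(self, n_inputs: int, n_hidden: int = 16, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 0.1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.w2 = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(3)]

    def forward(self, sensor_features: list) -> ControlCommand:
        # Hidden layer with ReLU activation.
        hidden = [max(0.0, sum(w * x for w, x in zip(row, sensor_features))) for row in self.w1]
        # One raw output per control channel.
        steer_raw, brake_raw, throttle_raw = (
            sum(w * h for w, h in zip(row, hidden)) for row in self.w2
        )
        # Squash each output into its normalized range.
        return ControlCommand(
            steering=math.tanh(steer_raw),
            brake=sigmoid(brake_raw),
            throttle=sigmoid(throttle_raw),
        )


policy = TinyEndToEndPolicy(n_inputs=8)
print(policy.forward([0.2] * 8))
```

The point of the sketch is structural: everything between raw input and actuator command is a learned function, so there is no intermediate interface for an engineer to hand-tune.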

1. Tesla Autonomy System Evolution

The main milestones from 2021 through the planned 2023/2024 transition are:

- 2021: Introduction of HydraNet

Tesla initially used Mobileye systems but shifted to an in-house solution built around HydraNet, a multi-task learning architecture. HydraNet runs a single neural network with a shared backbone and multiple task-specific heads, so many perception tasks, such as object and environment detection, share one computation. Planning and control remained separate components: on the algorithm side, Tesla iteratively refined its planner from classical A* search to route-guided A* and then to Monte Carlo tree search (MCTS) variants.
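The planner history starts from classical A* search. As a reference point only, here is textbook A* on a small 2D occupancy grid; the grid, unit step costs, and Manhattan-distance heuristic are illustrative assumptions, and a production driving planner works with far richer vehicle models and cost functions.

```python
import heapq


def astar(grid, start, goal):
    """Classical A* on a 4-connected grid (0 = free, 1 = blocked).

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible and consistent for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
```

Search-based planners like this need explicit costs and rules, which is exactly the handcrafted layer the later end-to-end transition aims to replace.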

- 2022: Introduction of Occupancy Networks

Tesla introduced Occupancy Networks, which predict which parts of the 3D space around the vehicle are occupied, to strengthen the perception module and improve 3D scene understanding. HydraNet gained a new lane-marking detection head, and the planner was updated to use the Occupancy Network outputs.
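Occupancy Networks describe the scene as occupied or free volume rather than only as a list of recognized objects. The sketch below is a hypothetical illustration of how a planner might consume such output: a sparse voxel map of occupancy probabilities and a check of whether a candidate path passes through occupied space. The class, the 0.5 m voxel size, and the 0.5 threshold are assumptions and do not reflect Tesla's actual output format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class OccupancyGrid:
    """Hypothetical sparse 3D occupancy map: voxel index -> occupancy probability."""

    voxel_size_m: float = 0.5
    probs: dict[tuple[int, int, int], float] = field(default_factory=dict)

    def voxel_of(self, x: float, y: float, z: float) -> tuple[int, int, int]:
        # Map a point in meters to the integer index of the voxel containing it.
        s = self.voxel_size_m
        return (int(x // s), int(y // s), int(z // s))

    def occupancy(self, x: float, y: float, z: float) -> float:
        # Voxels never written to are treated as free (0.0) in this sketch.
        return self.probs.get(self.voxel_of(x, y, z), 0.0)


def path_is_clear(grid: OccupancyGrid, waypoints, threshold: float = 0.5) -> bool:
    # Only the waypoints themselves are checked; a real check would sweep the
    # vehicle's full footprint between waypoints.
    return all(grid.occupancy(x, y, z) <= threshold for x, y, z in waypoints)


grid = OccupancyGrid()
# Mark one voxel ahead as occupied, regardless of what kind of object fills it.
grid.probs[grid.voxel_of(3.0, 0.0, 0.5)] = 0.9
straight = [(float(x), 0.0, 0.5) for x in range(6)]
shifted = [(float(x), 2.0, 0.5) for x in range(6)]
print(path_is_clear(grid, straight), path_is_clear(grid, shifted))  # False True
```

Because the check only asks "is this space occupied?", the planner can avoid an obstacle even if no detection head has a class for it.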

- 2023/2024: Transition to end-to-end learning

Tesla planned to move from this architecture to a fully end-to-end deep learning system. The key step is converting the planner to rely entirely on deep learning and training the whole network with joint loss functions. The end state removes manual rules and handcrafted code, allowing the model to generalize better to novel scenarios.
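A hedged sketch of what a "joint" loss means: a single scalar that sums a planning term (here, imitation of a human trajectory) and an auxiliary perception term (here, a per-voxel occupancy loss). The specific terms, the `w_plan` and `w_occ` weights, and all function names are assumptions for illustration, not Tesla's published objective.

```python
import math


def imitation_loss(pred_traj, expert_traj):
    """Mean squared distance between predicted and human-driven (x, y) waypoints."""
    sq = [(px - ex) ** 2 + (py - ey) ** 2
          for (px, py), (ex, ey) in zip(pred_traj, expert_traj)]
    return sum(sq) / len(sq)


def occupancy_bce(pred_probs, labels, eps=1e-7):
    """Binary cross-entropy for a hypothetical auxiliary occupancy head."""
    total = 0.0
    for p, y in zip(pred_probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)


def joint_loss(pred_traj, expert_traj, occ_pred, occ_labels, w_plan=1.0, w_occ=0.5):
    """One scalar objective combining planning and perception terms.

    This function only computes the scalar. In a real network trained by
    backpropagation, minimizing one combined loss is what lets gradients from the
    planning term also update layers shared with perception.
    """
    return (w_plan * imitation_loss(pred_traj, expert_traj)
            + w_occ * occupancy_bce(occ_pred, occ_labels))


loss = joint_loss([(0.0, 0.0), (1.0, 0.1)], [(0.0, 0.0), (1.0, 0.0)], [0.9, 0.2], [1, 0])
print(round(loss, 4))
```

In a modular stack each module is trained against its own loss in isolation; summing the terms is what couples them into one optimization problem.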

An end-to-end large model essentially compresses massive driving video datasets into network parameters, analogous to generative language models compressing internet-scale text into a model. This enables efficient storage and application of driving knowledge. A full-stack neural FSD is a product of the software 2.0 era, driven entirely by data. The quality and scale of training data become decisive factors for end-to-end network performance.

2. Challenges and Advantages of End-to-End Learning

End-to-end learning presents challenges such as reduced interpretability, although the outputs of intermediate components can still be visualized. To improve generalization, Tesla trains the system by large-scale imitation of human driving behavior, using 10 million driving video clips.
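Imitation of driver behavior is, at its core, supervised learning on (observation, action) pairs taken from human driving, often called behavior cloning. The toy sketch below fits a linear steering policy to synthetic demonstrations by stochastic gradient descent. The two features (lane offset and heading error), the "human" rule used to generate the data, and the hyperparameters are all hypothetical; Tesla's system learns from video with a large neural network, not from two hand-picked numbers.

```python
import random


def behavior_clone(demos, lr=0.1, epochs=200, seed=0):
    """Fit a linear policy action = w . obs + b to (obs, action) pairs by SGD on squared error."""
    rng = random.Random(seed)
    w = [0.0] * len(demos[0][0])
    b = 0.0
    data = list(demos)
    for _ in range(epochs):
        rng.shuffle(data)
        for obs, action in data:
            err = sum(wi * xi for wi, xi in zip(w, obs)) + b - action
            # Gradient step on 0.5 * err**2 for each weight and the bias.
            w = [wi - lr * err * xi for wi, xi in zip(w, obs)]
            b -= lr * err
    return w, b


# Hypothetical demonstrations: the "driver" steers 0.8 * lane_offset - 0.3 * heading_error.
rng = random.Random(42)
demos = []
for _ in range(100):
    lane_offset, heading_err = rng.uniform(-1, 1), rng.uniform(-1, 1)
    demos.append(([lane_offset, heading_err], 0.8 * lane_offset - 0.3 * heading_err))

w, b = behavior_clone(demos)
print([round(x, 3) for x in w], round(b, 3))
```

Because these synthetic demonstrations are noise-free and exactly linear, the learned weights come out very close to the 0.8 and -0.3 used to generate them. Real driving data is noisy and often admits several valid actions for the same situation, which is one reason data quality matters so much.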

- Advantages

  - Higher technical ceiling: the end-to-end structure allows all components to be optimized jointly toward one objective, seeking a global rather than per-module optimum.

  - Data-driven handling of long-tail problems: large datasets help cover more corner cases and improve adaptability.

  - Elimination of cumulative module errors: a full-stack neural architecture passes complete intermediate information forward instead of hand-defined module outputs, reducing the error accumulation that occurs between modules.

- Disadvantages

  - Lack of interpretability: the internal workings of end-to-end models are harder to explain, complicating system understanding.

  - Requires massive, high-quality data: training demands significant compute, data, AI talent, and funding; data quality critically impacts model performance.
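The cumulative-error point can be made concrete with a deliberately simple model: if every module in a pipeline must be correct, its errors are independent, and nothing downstream corrects them, overall reliability is the product of the per-stage accuracies. The stage names and the 95% figures are hypothetical. Real module errors are neither independent nor all-or-nothing, and an end-to-end network has its own error rate, so this only shows why chaining stages compounds errors.

```python
from math import prod


def pipeline_reliability(stage_accuracies):
    """Probability that every stage is correct, assuming independent errors
    that propagate uncorrected to the final output."""
    return prod(stage_accuracies)


# Hypothetical per-stage accuracies: perception, prediction, planning.
modular = pipeline_reliability([0.95, 0.95, 0.95])
print(f"{modular:.3f}")  # 0.857
```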

Tesla has invested heavily in training the end-to-end FSD: the company spent about one quarter (roughly three months) training it on 10 million video clips, and the quality and quantity of those clips are decisive for system performance. Tesla plans to raise its training compute to 100 EFLOPS (exaFLOPS) by the end of 2025, roughly an order of magnitude more than many other manufacturers.
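To give a sense of scale for the "100E" compute target, read here as 100 exaFLOPS (10^20 floating-point operations per second), the arithmetic below converts a sustained throughput into total operations over a training period. The `utilization` parameter is an assumption: real clusters run below peak, and headline FLOPS figures depend on the numeric precision being counted.

```python
EXA = 10**18
SECONDS_PER_DAY = 24 * 60 * 60


def total_flop(eflops: float, days: float, utilization: float = 1.0) -> float:
    """Floating-point operations delivered by a cluster sustaining `eflops` exaFLOP/s
    at the given fraction of peak for `days` days."""
    return eflops * EXA * SECONDS_PER_DAY * days * utilization


print(f"{total_flop(100, 1):.2e}")  # 8.64e+24
```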

Summary

Tesla's end-to-end approach has attracted wide attention. Key industry concerns are interpretability and the large, high-quality data requirements. The approach offers improvements in overall performance and adaptability, while its drawbacks include reduced transparency and high data and compute thresholds. The path is clear, but implementing it at scale is challenging.

ALLPCB