Overview

Neural radiance fields (NeRF) and 3D Gaussian splatting (3DGS) have recently shown promising results in visual SLAM. However, most existing methods rely on RGB-D sensors and target indoor scenes. Robust reconstruction in large-scale outdoor environments remains underexplored. This article summarizes LSG-SLAM, a stereo-camera 3DGS-based large-scale visual SLAM system designed to address those limitations. Extensive evaluation on EuRoC and KITTI demonstrates improved tracking stability and reconstruction quality in large-scale scenarios.

Key ideas

Visual SLAM is a core spatial-intelligence technology for autonomous robots and embodied AI. From a map-representation perspective, SLAM systems can be grouped into sparse, dense, implicit neural, and explicit volumetric approaches. Traditional sparse and dense SLAM focus on geometric mapping and rely heavily on hand-crafted features. They typically store only the locally observed parts of a scene during reconstruction. Implicit neural representations, notably NeRF, learn via differentiable rendering and can synthesize high-quality novel views. However, per-pixel ray tracing remains a rendering bottleneck, and scene features embedded implicitly in MLPs can suffer from catastrophic forgetting and are difficult to edit.

By contrast, 3D Gaussian splatting represents scenes explicitly with Gaussian points. Rasterization of 3D primitives enables high-fidelity scene capture and faster rendering. SplaTAM improves rendering quality by removing view-dependent appearance and using isotropic Gaussian points. MonoGS applies a map-centric approach with dynamic allocation of Gaussians to model arbitrary spatial distributions. However, prior methods share three limitations: they use simple constant-velocity motion models for pose priors, which are prone to drift under large viewpoint changes; they lack explicit loop-closure modules to remove accumulated error; and they have been evaluated mainly in small-scale indoor environments.
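To make the constant-velocity prior concrete, here is a minimal sketch in Python with NumPy. It assumes camera-to-world poses stored as 4x4 homogeneous matrices. The function and variable names are illustrative and are not taken from SplaTAM, MonoGS, or LSG-SLAM.

```python
import numpy as np

def constant_velocity_prior(T_prev2: np.ndarray, T_prev1: np.ndarray) -> np.ndarray:
    """Predict the next camera-to-world pose by replaying the last relative motion."""
    # Relative motion between the two most recent frames.
    delta = np.linalg.inv(T_prev2) @ T_prev1
    # Assumption of the model: the same motion repeats for the next frame.
    return T_prev1 @ delta

def translation(x: float, y: float, z: float) -> np.ndarray:
    """Helper (illustrative): a pure-translation 4x4 pose."""
    T = np.eye(4)
    T[:3, 3] = [x, y, z]
    return T

# The camera moved 1 m forward between the last two frames,
# so the prior places the next frame another 1 m ahead.
T0 = translation(0.0, 0.0, 0.0)
T1 = translation(0.0, 0.0, 1.0)
T2_pred = constant_velocity_prior(T0, T1)
```

The prediction is only as good as the assumption that motion repeats. At low frame rates or during sharp turns the true inter-frame motion can differ substantially from the previous one, which is the drift problem described above.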

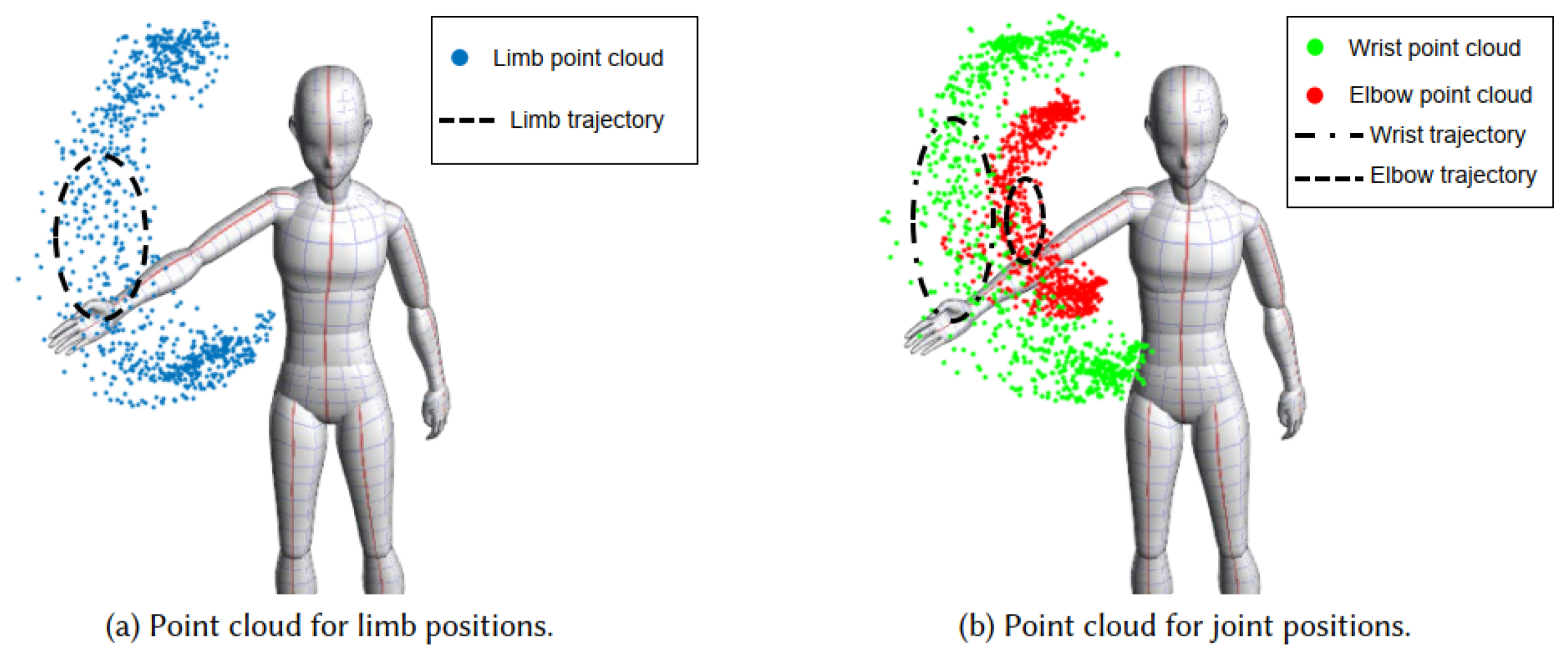

This work develops LSG-SLAM, a large-scale stereo visual SLAM based on 3DGS. It is the first 3DGS-SLAM system designed specifically for large-scale outdoor scenes. Key strategies include a multimodal approach for robust pose tracking under large inter-frame viewpoint changes and a combination of rendering loss with feature-alignment warping constraints for pose optimization. Rendering loss reduces errors caused by feature detection and matching failures, while feature-alignment constraints mitigate misleading effects from appearance similarity. These choices enable operation at low frame rates and with limited data. For scalable mapping in unbounded scenes with limited memory, LSG-SLAM uses continuous 3DGS submaps and performs careful loop detection across submaps. Loop constraints are estimated by rasterizing Gaussians and minimizing the difference between rendered frames and query keyframes, using the same losses as in tracking. A submap-based structural refinement stage further improves reconstruction quality after global pose graph and point cloud adjustments.
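The combination of a rendering loss with a feature-alignment warping term can be sketched as follows. This is an illustrative formulation under stated assumptions, not the loss defined in the paper. It assumes an L1 photometric term, matched keypoints with known depth in a reference frame, a pinhole intrinsic matrix `K`, and a hypothetical weight `w_feat`. A real system would evaluate these terms inside a differentiable pipeline and optimize the pose; here they are only evaluated.

```python
import numpy as np

def rendering_loss(rendered: np.ndarray, observed: np.ndarray) -> float:
    """Mean absolute photometric difference between rendered and observed images."""
    return float(np.mean(np.abs(rendered - observed)))

def warp_points(uv_ref, depth_ref, K, T_cur_ref):
    """Back-project reference pixels with depth, move them to the current frame, re-project."""
    ones = np.ones((uv_ref.shape[0], 1))
    rays = (np.linalg.inv(K) @ np.hstack([uv_ref, ones]).T).T    # N x 3, z = 1
    pts_ref = rays * depth_ref[:, None]                          # 3D points in reference frame
    pts_cur = pts_ref @ T_cur_ref[:3, :3].T + T_cur_ref[:3, 3]   # 3D points in current frame
    proj = pts_cur @ K.T
    return proj[:, :2] / proj[:, 2:3]

def feature_alignment_loss(uv_ref, depth_ref, uv_cur, K, T_cur_ref) -> float:
    """Mean pixel distance between warped reference features and their matches."""
    residual = warp_points(uv_ref, depth_ref, K, T_cur_ref) - uv_cur
    return float(np.mean(np.linalg.norm(residual, axis=1)))

def tracking_loss(rendered, observed, uv_ref, depth_ref, uv_cur, K, T_cur_ref, w_feat=0.1):
    """Weighted sum of both terms; w_feat is a hypothetical weight."""
    return rendering_loss(rendered, observed) + w_feat * feature_alignment_loss(
        uv_ref, depth_ref, uv_cur, K, T_cur_ref)

# Toy example: identical images, but the pose guess is off by 0.1 m along x.
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 24.0], [0.0, 0.0, 1.0]])
image = np.zeros((48, 64))
uv_ref = np.array([[10.0, 12.0], [40.0, 30.0], [50.0, 20.0]])
depth_ref = np.array([2.0, 2.0, 2.0])
T_guess = np.eye(4)
T_guess[0, 3] = 0.1
feat = feature_alignment_loss(uv_ref, depth_ref, uv_ref, K, T_guess)
total = tracking_loss(image, image, uv_ref, depth_ref, uv_ref, K, T_guess)
```

In this toy case the photometric term is zero because the two images are identical, so it cannot detect the pose error. The feature term still reports a 5-pixel misalignment, which illustrates how a geometric constraint can help where appearance alone is ambiguous.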

Contributions

- Developed the first 3DGS-based large-scale stereo visual SLAM system, improving tracking stability, map consistency, scalability, and reconstruction quality.

- Leveraged efficient 3DGS rendering for novel-view synthesis to improve both local and global image and feature matching, thereby enhancing tracking and loop-closure performance.

- Proposed a submap-based structural refinement method that follows global pose graph and point cloud adjustment to improve reconstruction fidelity.

- Validated the approach extensively; results indicate up to 70% improvement in tracking accuracy and 50% gain in reconstruction quality compared with state-of-the-art 3DGS-based SLAM methods.

System architecture

LSG-SLAM is a stereo SLAM system that simultaneously tracks camera poses and reconstructs the scene with 3D Gaussian points. Figure 2 illustrates the overall architecture. The main components are simultaneous tracking and mapping with continuous GS submaps, loop detection, and structural optimization.
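The bookkeeping behind continuous submaps can be sketched with a small data structure. The article does not state how LSG-SLAM decides when to start a new submap, so the travel-distance rule, the `max_travel_m` threshold, and all class names below are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass, field
import numpy as np

@dataclass
class Submap:
    index: int
    anchor_position: np.ndarray                  # position of the first keyframe
    keyframe_ids: list = field(default_factory=list)

class SubmapManager:
    """Starts a new submap once the camera is far enough from the current anchor."""

    def __init__(self, max_travel_m: float = 30.0):
        self.max_travel_m = max_travel_m         # hypothetical threshold
        self.submaps = []

    def add_keyframe(self, keyframe_id: int, position) -> Submap:
        position = np.asarray(position, dtype=float)
        needs_new = (
            not self.submaps
            or np.linalg.norm(position - self.submaps[-1].anchor_position) > self.max_travel_m
        )
        if needs_new:
            self.submaps.append(Submap(len(self.submaps), position))
        self.submaps[-1].keyframe_ids.append(keyframe_id)
        return self.submaps[-1]

# Ten keyframes spaced 10 m apart along a straight road.
manager = SubmapManager(max_travel_m=30.0)
for kf_id in range(10):
    manager.add_keyframe(kf_id, [10.0 * kf_id, 0.0, 0.0])
```

Only the active submap needs to hold its Gaussians in memory for tracking and mapping, which is the property that lets a submap design scale to long trajectories.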

Experiments

LSG-SLAM was evaluated on two well-known stereo datasets: EuRoC and KITTI. The EuRoC MAV dataset contains indoor and outdoor scenes with large viewpoint and illumination changes. The large-scale KITTI dataset covers urban, rural, and highway scenes.

EuRoC evaluation

Tracking performance

Compared with other 3DGS methods, LSG-SLAM significantly improves tracking accuracy at low image frame rates. Existing 3DGS-based systems have clear limitations: SplaTAM and MonoGS rely on constant-velocity motion priors and are prone to drift, while Photo-SLAM depends on ORB reprojection errors and can fail in low-texture, high-motion scenarios.

LSG-SLAM uses a multimodal pose prior to handle large viewpoint changes and combines rendering loss with feature-alignment warping constraints for pose optimization. Rendering loss reduces errors from non-repeatable feature extraction and impacts from low-texture regions, while alignment constraints lessen the impact of large similar-appearance regions. After loop-closure optimization, LSG-SLAM achieves trajectory accuracy comparable to ORB-SLAM3 and achieves a higher reconstruction success rate in challenging scenes.

Mapping quality

Rendering comparisons show that LSG-SLAM outperforms SplaTAM and MonoGS. Even without structural refinement, the method attains more accurate tracking, reducing map-structure errors. Adding the structural refinement module yields significant reconstruction improvements, indicating that ellipsoids better capture complex texture detail than spheres. A scale-regularization loss further increases peak signal-to-noise ratio (PSNR) relative to the original 3D Gaussian point cloud approach.
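For reference, PSNR between a rendered image and a ground-truth image follows the standard definition, 10·log10(MAX² / MSE). The sketch below assumes images normalized to [0, 1]; the function name is illustrative.

```python
import numpy as np

def psnr(rendered: np.ndarray, reference: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means the render is closer to the reference."""
    diff = rendered.astype(np.float64) - reference.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

# A render that is uniformly off by 0.1 has MSE = 0.01, i.e. 20 dB.
reference = np.zeros((4, 4))
rendered = np.full((4, 4), 0.1)
psnr_db = psnr(rendered, reference)
```

Because the scale is logarithmic, a gain of a few dB corresponds to a large reduction in mean squared error.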

KITTI evaluation

Tracking performance

On KITTI, LSG-SLAM outperforms traditional and learning-based methods in pose estimation accuracy. Representative 3DGS methods cannot process entire sequences due to memory limits. By using continuous GS submaps with loop-closure, LSG-SLAM reconstructs large-scale scenes under limited resources. LSG-SLAM also avoids long training times required by some learning-based systems.

Mapping quality

The structural refinement module significantly improves rendering quality. Direct optimization of anisotropic Gaussian ellipsoids can cause numerical instability. Isotropic Gaussian spheres converge faster and are more stable in early optimization. LSG-SLAM first reconstructs the scene with isotropic spheres to obtain a good initialization, then converts spheres to ellipsoids during structural refinement to capture surface detail. This two-stage process increases robustness to floating artifacts and improves rendering fidelity.
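The sphere-to-ellipsoid conversion can be illustrated with the standard 3DGS covariance parameterization, Σ = R S Sᵀ Rᵀ. The sketch below assumes an isotropic Gaussian is stored as a single radius and is promoted to three equal per-axis scales with an identity rotation, so the shape is unchanged at the moment of conversion. How LSG-SLAM actually performs this step is not described in the article, so the function names and the initialization are assumptions.

```python
import numpy as np

def quat_to_rotmat(q: np.ndarray) -> np.ndarray:
    """Rotation matrix from a (w, x, y, z) quaternion."""
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def sphere_to_ellipsoid(radius: float):
    """Promote an isotropic Gaussian to anisotropic parameters without changing its shape."""
    scales = np.full(3, radius)                # equal scale on every axis
    quat = np.array([1.0, 0.0, 0.0, 0.0])      # identity rotation
    return scales, quat

def covariance(scales: np.ndarray, quat: np.ndarray) -> np.ndarray:
    """3D covariance from per-axis scales and a rotation: R S S^T R^T."""
    R = quat_to_rotmat(quat)
    S = np.diag(scales)
    return R @ S @ S.T @ R.T

# Stage 1 result: a sphere of radius 0.5.
scales, quat = sphere_to_ellipsoid(0.5)
cov_initial = covariance(scales, quat)

# Stage 2 (illustrative): refinement is free to flatten the Gaussian onto a surface.
cov_refined = covariance(np.array([0.5, 0.5, 0.05]), quat)
```

Starting refinement from a shape-preserving conversion means the optimizer begins at the stable isotropic solution and only departs from it where the image evidence supports anisotropy.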

Conclusion

LSG-SLAM is a stereo visual SLAM system that applies 3D Gaussian splatting to large-scale scene reconstruction. Its main components are simultaneous tracking and mapping with continuous submaps, loop detection, and structural optimization. LSG-SLAM improves tracking stability, mapping consistency, scalability, and reconstruction quality, and achieves state-of-the-art performance compared with traditional and learning-based baselines.

ALLPCB
