Overview

As of 2025, artificial intelligence is no longer just a buzzword in research circles. It has entered everyday life through applications such as image generation, code writing, autonomous driving, and medical diagnosis, and nearly every industry is now discussing or adopting it.

Today's large-model era did not arrive overnight. Its development traces a path of breakthroughs, controversies, pauses, and revivals. From the 1956 Dartmouth conference to the global competition around models with hundreds of billions of parameters, AI history is a story of how humans have modeled, extended, and redefined intelligence.

This article reviews 10 pivotal moments that helped move AI from theoretical ideas to a core technology driving many industries today.

Dartmouth Conference (1956)

The 1956 Dartmouth conference is widely regarded as the founding moment of artificial intelligence. Organized by pioneers John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, it gathered researchers who set out to explore machine intelligence.

Over several weeks that summer, participants discussed, debated, and collaborated, helping to establish AI as a formal discipline. The proposal for the workshop had introduced the term "artificial intelligence," and participants worked to define the field, set research goals, and outline directions such as problem solving, learning, neural networks, and symbolic reasoning.

The conference opened the door to decades of AI research and innovation and, just as importantly, formed a community convinced that machines could replicate aspects of human cognition.

Perceptron (1957)

In 1957, Frank Rosenblatt developed the perceptron, one of the earliest artificial neural networks. The perceptron is a simplified model inspired by biological neurons, designed for binary classification.

Although structurally simple, the perceptron introduced the idea of adjusting a model's parameters from training data. This train-then-predict paradigm later became central to machine learning, and the perceptron itself is a direct ancestor of modern neural networks and deep learning.

Despite its limitations, most notably its inability to learn problems that are not linearly separable, such as XOR, which Marvin Minsky and Seymour Papert analyzed in their 1969 book Perceptrons, the perceptron spurred research into more complex models and influenced later work in image recognition, speech processing, and natural language understanding.
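Both the train-then-predict idea and the linear-separability limit can be seen in a few lines. The sketch below applies the classic perceptron update rule (w ← w + η(y − ŷ)x) to two toy Boolean datasets. The function names, learning rate, and epoch count are illustrative assumptions, not Rosenblatt's original setup (his Mark I perceptron was custom hardware).

```python
from typing import List, Tuple

Sample = Tuple[List[float], int]

def predict(weights: List[float], bias: float, x: List[float]) -> int:
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0

def train_perceptron(data: List[Sample], lr: float = 0.1, epochs: int = 50):
    """Perceptron learning rule: shift weights toward each misclassified example."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

def accuracy(data: List[Sample], weights: List[float], bias: float) -> float:
    correct = sum(predict(weights, bias, x) == y for x, y in data)
    return correct / len(data)

AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

# AND is linearly separable and reaches 1.0; XOR cannot reach 1.0
for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b = train_perceptron(data)
    print(name, accuracy(data, w, b))
```

On AND the rule converges to a separating line, as the perceptron convergence theorem guarantees for linearly separable data. On XOR no single line separates the two classes, so at least one example always stays misclassified no matter how long training runs.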

ELIZA (1966)

ELIZA, developed at MIT by computer scientist Joseph Weizenbaum and published in 1966, was one of the first widely recognized conversational programs. Its best-known script, DOCTOR, simulated a Rogerian psychotherapist by matching keywords in the user's input and rearranging the words into follow-up questions.
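ELIZA's core trick, matching a pattern in the input and reassembling the captured words into a question, can be sketched in a few lines. The rules, the pronoun-reflection table, and the function names below are hypothetical simplifications; the original program was written in MAD-SLIP and used ranked keywords with decomposition and reassembly rules rather than Python regular expressions.

```python
import re

# Hypothetical rules in the spirit of the DOCTOR script: each pattern captures
# part of the input, which is echoed back inside a follow-up question.
RULES = [
    (re.compile(r"i am (.*)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"i feel (.*)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Why do you say your {0}?"),
]

# Swap first- and second-person words so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())

def respond(text: str) -> str:
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."  # default reply when no rule matches

for line in ["I am worried about my exams.", "My mother ignores me", "The weather is nice"]:
    print(">", line)
    print(respond(line))
```

No understanding is involved: the program never models what "exams" or "mother" mean, which is exactly the gap between appearance and substance that Weizenbaum wanted to expose.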

Weizenbaum intended ELIZA to show how superficial communication between humans and machines was, yet many users attributed human-like understanding to it. He noted that some subjects were very hard to convince that ELIZA was not human.

Although elementary by modern standards, ELIZA showed the potential of natural language processing and demonstrated that a computer could produce apparently human responses, stimulating interest in conversational AI.

Expert Systems (1970s)

In the 1970s, AI research focused heavily on symbolic methods and logical reasoning. Expert systems such as Dendral (begun at Stanford in 1965) and MYCIN (developed at Stanford in the early 1970s) were early demonstrations of AI providing domain-specific expertise.

Dendral analyzed mass spectrometry data to infer molecular structures of organic compounds, showing AI could mimic expert reasoning in chemistry. MYCIN focused on medical diagnosis, identifying bacterial infections and recommending antibiotic treatments.

These systems showed that AI could encode the knowledge of human specialists in a narrow domain, laying groundwork for applications in medicine, law, finance, and engineering.

Deep Blue Defeats Garry Kasparov (1997)

In 1997, IBM's Deep Blue defeated reigning world chess champion Garry Kasparov 3.5 to 2.5 in a six-game match, showing that a machine could outperform the best human player in a complex strategic game that demands position evaluation and decision making. The milestone attracted global attention and marked a major achievement for AI in game playing.

Machine Learning Emergence (1990s–2000s)

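In his 1997 textbook Machine Learning, Tom Mitchell gave a widely cited definition: a computer program is said to learn from experience E with respect to some class of tasks T and performance measure P if its performance at tasks in T, as measured by P, improves with experience E. The definition captured a broader shift in AI during this period, away from hand-coded rules and toward data-driven algorithms that adapt and improve as they see more examples.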

Rise of Deep Learning (2012)

Deep learning trains neural networks with many layers, typically using backpropagation to compute how each weight should change. In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized backpropagation for training multi-layer networks, overcoming the perceptron's inability to learn nonlinear decision boundaries, and Hinton and his collaborators went on to lay much of the groundwork for training deep networks.
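As a minimal sketch of what backpropagation adds, the network below has one hidden layer of sigmoid units and is trained on XOR, a problem a single perceptron cannot solve. It assumes squared-error loss, per-example gradient updates, and arbitrary choices of four hidden units, a learning rate of 0.5, and a fixed random seed; none of these settings come from the 1986 work, and practical deep-learning code relies on a framework's automatic differentiation rather than hand-written gradients.

```python
import math
import random
from typing import List, Tuple

# XOR truth table: not linearly separable, so a single perceptron cannot fit it.
XOR: List[Tuple[List[float], float]] = [
    ([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0),
]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(hidden: int = 4, lr: float = 0.5, epochs: int = 5000, seed: int = 0) -> List[float]:
    """Train a 2-input, one-hidden-layer, 1-output sigmoid network on XOR.

    Returns the summed squared-error loss recorded during each epoch.
    """
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for x, y in XOR:
            # Forward pass
            h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            out = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            epoch_loss += 0.5 * (out - y) ** 2
            # Backward pass: chain rule through the output sigmoid, then each hidden sigmoid
            delta_out = (out - y) * out * (1.0 - out)
            delta_hidden = [delta_out * w2[j] * h[j] * (1.0 - h[j]) for j in range(hidden)]
            # Gradient-descent updates after each example (stochastic gradient descent)
            for j in range(hidden):
                w2[j] -= lr * delta_out * h[j]
                w1[j][0] -= lr * delta_hidden[j] * x[0]
                w1[j][1] -= lr * delta_hidden[j] * x[1]
                b1[j] -= lr * delta_hidden[j]
            b2 -= lr * delta_out
        losses.append(epoch_loss)
    return losses

losses = train_xor()
print(f"first epoch loss: {losses[0]:.4f}, last epoch loss: {losses[-1]:.4f}")
```

The hidden layer lets the network carve the input space with more than one line, and backpropagation supplies the gradient for every weight, including those feeding the hidden units, which is exactly what the single-layer perceptron rule could not do.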

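In 2012, AlexNet, developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, won the ImageNet competition by a wide margin, cutting the top-5 error rate to about 15% against roughly 26% for the runner-up. Trained on GPUs, it demonstrated the practical power of large convolutional neural networks on image tasks and became a catalyst for the deep learning revolution.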

Generative Adversarial Networks (2014)

In 2014, Ian Goodfellow and colleagues proposed generative adversarial networks (GANs), which pit two networks against each other: a generator that produces synthetic samples and a discriminator that tries to tell them apart from real data. GANs transformed generative modeling by producing strikingly realistic synthetic data, and they have had significant impact on image synthesis, video generation, and data augmentation.
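The adversarial game between generator and discriminator is usually written as the minimax objective from the original 2014 GAN paper. Here D(x) is the discriminator's estimated probability that x is real, G(z) maps a noise vector z to a synthetic sample, p_data is the data distribution, and p_z is the noise prior:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes V up by assigning high probability to real data and low probability to generated data; the generator pushes V down by producing samples that D mistakes for real ones. In practice the generator is often trained to maximize log D(G(z)) instead, an alternative the same paper suggests because it gives stronger gradients early in training.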

AlphaGo (2016)

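Developed by DeepMind, AlphaGo defeated Lee Sedol, one of the world's top professional Go players, 4 games to 1 in March 2016. The victory was a landmark because Go's vast search space makes brute-force search impractical and was long thought to demand human intuition and strategy. By combining deep neural networks with Monte Carlo tree search, AlphaGo showed that AI could tackle challenges once thought uniquely human.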

Transformer and Large Models (2017–2025)

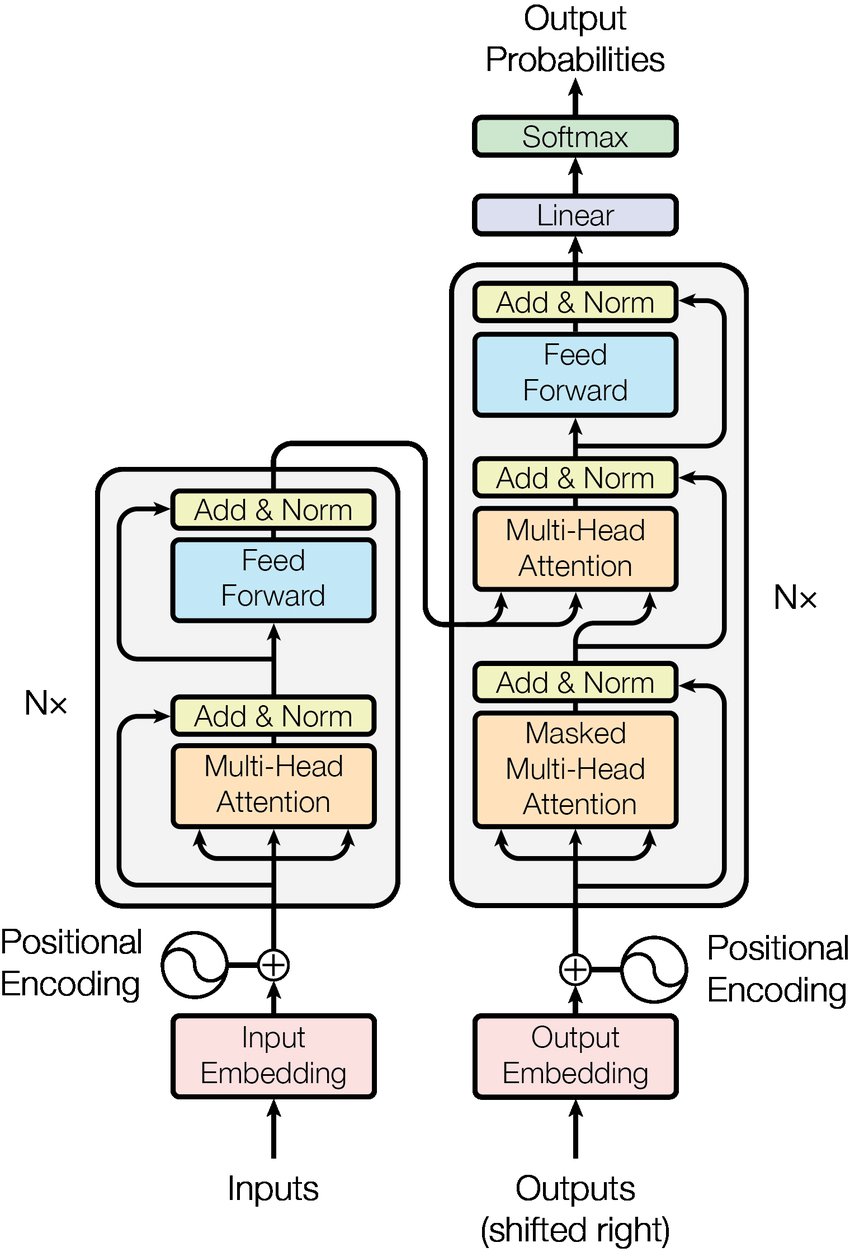

Today's large language models build on the Transformer architecture introduced in 2017, whose self-attention mechanism lets every token attend directly to every other token. This handles long-range dependencies far better than earlier recurrent models and enables better understanding and generation of long text. In 2018, models such as GPT-1 and BERT established the pretrain-then-fine-tune paradigm, in which models first learn general language abilities from massive unlabeled corpora and are then adapted to specific tasks.
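The core of self-attention is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, as defined in the 2017 Transformer paper. The pure-Python sketch below computes it for a made-up three-token sequence. For brevity it uses the token vectors directly as queries, keys, and values, omitting the learned projection matrices, multiple heads, and masking that a real Transformer uses.

```python
import math
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]

def softmax(row: List[float]) -> List[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix):
    """Compute softmax(Q K^T / sqrt(d_k)) V; return (output, attention weights)."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # every query scored against every key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Toy sequence of three 4-dimensional token vectors (values are arbitrary).
# Self-attention: queries, keys, and values all come from the same sequence X.
X = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 1.0, 0.0, 0.0],
]
output, weights = scaled_dot_product_attention(X, X, X)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of the weight matrix is a probability distribution over all positions, and each output vector is the corresponding weighted mix of value vectors. Because every position is scored against every other position in a single step, the distance between two tokens does not limit how directly they can interact.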

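GPT-3 (2020), with 175 billion parameters, showed capabilities that smaller models lacked, such as performing new tasks from an instruction or a few examples in the prompt (zero-shot and few-shot learning), basic reasoning, and code generation, suggesting that scale alone can produce qualitative changes.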

In 2023, GPT-4 added multimodal input, accepting images as well as text, and used reinforcement learning from human feedback (RLHF) to improve safety and helpfulness. Other major model families appeared around the same time, including Anthropic's Claude and Google's Gemini series, along with openly released models such as Meta's LLaMA. The large-model ecosystem in the Chinese market has also evolved rapidly, with offerings such as Baidu's Wenxin Yiyan (ERNIE Bot), Alibaba's Tongyi Qianwen (Qwen), and ByteDance's Doubao, and several Chinese models and startups have drawn attention for releasing open weights with competitive performance.

From the Dartmouth idea in 1956 to large models deployed across industries by 2025, AI's development has been nonlinear, marked by setbacks and leaps. These 10 moments represent collective advances in understanding intelligence and expanding technical capability. Looking back helps explain current trends and may hint at where the next major shift could occur.

ALLPCB
