Introduction

With rapid advances in artificial intelligence, optical character recognition (OCR) has evolved from traditional pattern recognition to end-to-end solutions based on deep learning. The PP-OCRv5 model from the PaddlePaddle team improves on both accuracy and efficiency. Running it through the Intel OpenVINO toolkit adds hardware-accelerated inference, which makes the combination a good fit for a variety of document processing scenarios.

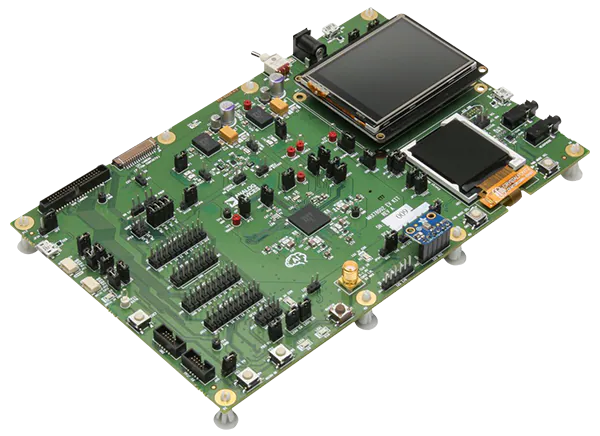

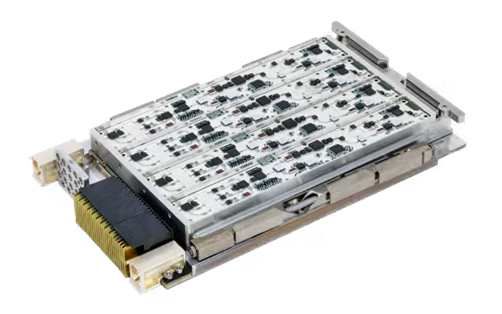

Modular Mini-PC Overview

The modular mini-PC is a DIY mini desktop with a drawer-style design: assembly, upgrades, and maintenance only require plugging modules in and out. Pairing compute modules of different processing capability with different I/O modules yields configurations for a wide range of scenarios.

If performance is insufficient, the compute module can be upgraded to increase processing power. If I/O interfaces do not match requirements, the I/O module can be replaced to adjust functionality without rebuilding the entire system.

All validation steps in this article were performed on a modular mini-PC equipped with an Intel Core i5-1165G7 processor.

Implementation Steps

1. Preparation

Install Miniconda and create a virtual environment:

    conda create -n PP-OCRv5_OpenVINO python=3.11  # Create virtual environment
    conda activate PP-OCRv5_OpenVINO               # Activate virtual environment
    python -m pip install --upgrade pip            # Upgrade pip to the latest version
    pip install -r requirements.txt                # Install required packages

2. Model Deployment

Install PaddlePaddle and PaddleOCR:

    pip install paddlepaddle   # Install PaddlePaddle
    pip install paddleocr      # Install PaddleOCR
    pip install onnx==1.16.0   # Install ONNX 1.16.0

3. Download PP-OCRv5_server Pretrained Models
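
Before downloading the models, it may be worth confirming that the packages from the previous step are present in the active environment. The following is a minimal sketch using only the Python standard library; `check_packages` and `REQUIRED` are hypothetical names introduced here, and the only version pin taken from this article is onnx 1.16.0.

```python
import sys
from importlib import metadata

# Package names as installed with pip in this article; None means "any version".
REQUIRED = {"paddlepaddle": None, "paddleocr": None, "onnx": "1.16.0"}


def check_packages(required, lookup=metadata.version):
    """Return a {package: status} report for the given requirements."""
    report = {}
    for name, pinned in required.items():
        try:
            found = lookup(name)
        except metadata.PackageNotFoundError:
            report[name] = "missing"
            continue
        if pinned is not None and found != pinned:
            # Installed, but not the version pinned in the article.
            report[name] = f"{found} (expected {pinned})"
        else:
            report[name] = found
    return report


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name, status in check_packages(REQUIRED).items():
        print(f"{name}: {status}")
```

A "missing" entry or a version mismatch here usually means the commands were run outside the PP-OCRv5_OpenVINO environment.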

    # Download and extract the PP-OCRv5_server_det pretrained model
    wget https://paddle-model-ecology.bj.bcebos.com/paddlex/official_inference_model/paddle3.0.0/PP-OCRv5_server_det_infer.tar && tar -xvf PP-OCRv5_server_det_infer.tar
    # Download and extract the PP-OCRv5_server_rec pretrained model
    wget https://paddle-model-ecology.bj.bcebos.com/paddlex/official_inference_model/paddle3.0.0/PP-OCRv5_server_rec_infer.tar && tar -xvf PP-OCRv5_server_rec_infer.tar
    # Download and extract the PP-LCNet_x1_0_doc_ori pretrained model (used here as PP-OCRv5_server_cls)
    wget https://paddle-model-ecology.bj.bcebos.com/paddlex/official_inference_model/paddle3.0.0/PP-LCNet_x1_0_doc_ori_infer.tar && tar -xvf PP-LCNet_x1_0_doc_ori_infer.tar

4. Export PP-OCRv5_server Models to ONNX
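
The three export commands in this step differ only in their input and output directories, so they can also be generated from a small table. The sketch below is one way to do that, assuming the `paddlex` command is on the PATH; `EXPORTS` and `export_command` are hypothetical names introduced here, while the flags and directory names are the ones used in this article. It only prints the commands unless `--run` is passed.

```python
import subprocess
import sys

# (extracted Paddle model directory, ONNX output directory), as used in this article.
EXPORTS = [
    ("PP-OCRv5_server_det_infer", "PP-OCRv5_server_det_onnx"),
    ("PP-OCRv5_server_rec_infer", "PP-OCRv5_server_rec_onnx"),
    ("PP-LCNet_x1_0_doc_ori_infer", "PP-OCRv5_server_cls_onnx"),
]


def export_command(paddle_dir, onnx_dir):
    """Build the paddlex argument list for one Paddle-to-ONNX export."""
    return [
        "paddlex",
        "--paddle2onnx",
        "--paddle_model_dir", f"./{paddle_dir}",
        "--onnx_model_dir", f"./{onnx_dir}",
    ]


if __name__ == "__main__":
    run = "--run" in sys.argv[1:]  # dry run by default
    for paddle_dir, onnx_dir in EXPORTS:
        cmd = export_command(paddle_dir, onnx_dir)
        print(" ".join(cmd))
        if run:
            # check=True stops at the first failed export.
            subprocess.run(cmd, check=True)
```

Keeping the directory pairs in one list makes it harder to mistype a path when a model is added or swapped.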

    # Export PP-OCRv5_server_det to ONNX
    paddlex --paddle2onnx --paddle_model_dir ./PP-OCRv5_server_det_infer --onnx_model_dir ./PP-OCRv5_server_det_onnx
    # Export PP-OCRv5_server_rec to ONNX
    paddlex --paddle2onnx --paddle_model_dir ./PP-OCRv5_server_rec_infer --onnx_model_dir ./PP-OCRv5_server_rec_onnx
    # Export PP-OCRv5_server_cls to ONNX
    paddlex --paddle2onnx --paddle_model_dir ./PP-LCNet_x1_0_doc_ori_infer --onnx_model_dir ./PP-OCRv5_server_cls_onnx

5. Run the Script
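
Before running the pipeline, the exported files can be sanity-checked with the onnx package installed in step 2. The sketch below is not part of the PP-OCRv5_OpenVINO project: `format_dims` and `describe_inputs` are hypothetical helpers, and the file name `inference.onnx` in the cls directory is assumed by analogy with the det and rec paths used in this article.

```python
from pathlib import Path

# Output directories from the export step.
ONNX_DIRS = [
    "PP-OCRv5_server_det_onnx",
    "PP-OCRv5_server_rec_onnx",
    "PP-OCRv5_server_cls_onnx",
]


def format_dims(dims):
    """Render (dim_value, dim_param) pairs; unnamed dynamic dims become '?'."""
    parts = []
    for value, param in dims:
        if param:
            parts.append(param)        # named dynamic dimension
        elif value > 0:
            parts.append(str(value))   # fixed dimension
        else:
            parts.append("?")          # unnamed dynamic dimension
    return "[" + ", ".join(parts) + "]"


def describe_inputs(path):
    """Validate an ONNX file and return one 'name: shape' line per graph input."""
    import onnx  # imported lazily so the helper above works without onnx

    model = onnx.load(str(path))
    onnx.checker.check_model(model)  # raises if the graph is malformed
    lines = []
    for tensor in model.graph.input:
        dims = [(d.dim_value, d.dim_param)
                for d in tensor.type.tensor_type.shape.dim]
        lines.append(f"{tensor.name}: {format_dims(dims)}")
    return lines


if __name__ == "__main__":
    for directory in ONNX_DIRS:
        path = Path(directory) / "inference.onnx"
        if not path.exists():
            print(f"{path}: not found")
            continue
        for line in describe_inputs(path):
            print(f"{path}: {line}")
```

A file that is reported as not found, or that fails `check_model`, points to a problem in the export step rather than in the inference script.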

To get started quickly with the PP-OCRv5_OpenVINO project, run the following:

    # Run the Python script to invoke inference
    python main.py --image_dir images/handwrite_en_demo.png \
        --det_model_dir PP-OCRv5_server_det_onnx/inference.onnx \
        --det_model_device CPU \
        --rec_model_dir PP-OCRv5_server_rec_onnx/inference.onnx \
        --rec_model_device CPU

The program will print the recognized text results to the console.
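
The contents of main.py are not reproduced in this article. As a rough illustration of what loading an exported model with OpenVINO can look like, the sketch below reads the detection model and compiles it for a device, falling back to CPU when the requested device is not reported. It assumes a recent `openvino` Python package that supports `import openvino as ov`; `pick_device` and `compile_onnx` are hypothetical helpers, not functions from the project.

```python
from pathlib import Path


def pick_device(requested, available):
    """Return the requested device if OpenVINO reports it, else 'CPU'.

    Matches both exact names ('GPU') and indexed names ('GPU.0').
    """
    for name in available:
        if name == requested or name.startswith(requested + "."):
            return requested
    return "CPU"


def compile_onnx(model_path, requested="CPU"):
    """Read an ONNX file and compile it for the chosen device."""
    import openvino as ov  # imported lazily; assumed to be installed

    core = ov.Core()
    device = pick_device(requested, core.available_devices)
    model = core.read_model(model_path)  # OpenVINO reads ONNX files directly
    return core.compile_model(model, device), device


if __name__ == "__main__":
    path = Path("PP-OCRv5_server_det_onnx/inference.onnx")
    if path.exists():
        compiled, device = compile_onnx(str(path), requested="GPU")
        print("compiled for", device)
        for port in compiled.inputs:
            print(port.get_any_name(), port.get_partial_shape())
    else:
        print(f"{path} not found; run the export step first")
```

Whether main.py accepts device names other than CPU for `--det_model_device` and `--rec_model_device` is not stated here, so the fallback logic above applies only to direct use of the OpenVINO API.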

Video link: OpenVINO document OCR with PP-OCRv5 on a modular mini-PC

Conclusion

This article outlined the deployment process for an intelligent document information extraction solution based on PP-OCRv5 and OpenVINO. The PP-OCRv5 update improves both accuracy and speed. When combined with OpenVINO hardware acceleration, the solution enables efficient document processing. This approach is particularly suitable for enterprise scenarios that require processing large volumes of documents, such as invoice recognition, contract review, and archive digitization.

ALLPCB
