Introduction

With the arrival of the 5G era, edge computing has become a new growth area for autonomous driving systems. By some estimates, more than 60% of data and applications will be generated and processed at the edge in future deployments.

What is edge computing

Edge computing is a computing model that performs processing at the network edge. It handles data in two directions: downstream data on behalf of cloud services and upstream data on behalf of Internet-of-Things services. The "edge" is a relative concept. It refers to any compute, storage, or network resource along the path from data sources to cloud computing centers, and depending on application needs and scenarios, it can be one or more resource nodes along that path. Essentially, edge computing extends cloud computing beyond the data center. It is realized through three main deployment forms: edge cloud, edge network, and edge gateway.

The edge layer sits between the cloud and field devices. Downward, it supports connections to a variety of field devices, and upward, it can interface with the cloud. The edge layer typically consists of edge nodes (hardware entities that host edge workloads) and edge managers (software that centrally manages edge nodes). Edge nodes generally provide compute, network, and storage resources. These resources can be exposed directly through invocation interfaces, for example for downloading code, configuring network policies, and performing database operations. Alternatively, they can be packaged into functional modules that the edge manager composes through model-driven service orchestration, which enables integrated development and agile deployment of edge services.
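The node/manager split described above can be illustrated with a small in-memory model. This is a minimal sketch under stated assumptions, not a real edge platform API. The classes `EdgeNode`, `Module`, and `EdgeManager`, their resource fields, and the "most free CPU that fits" placement rule are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeNode:
    """Hypothetical edge node: a hardware entity exposing compute and storage."""
    name: str
    cpu_cores: int
    storage_gb: int
    deployed: list = field(default_factory=list)


@dataclass
class Module:
    """Hypothetical functional module packaged from node resources."""
    name: str
    cpu_cores: int
    storage_gb: int


class EdgeManager:
    """Hypothetical edge manager that tracks nodes and places modules centrally."""

    def __init__(self):
        self.nodes = {}

    def register(self, node):
        self.nodes[node.name] = node

    def free(self, node):
        """Return (free CPU cores, free storage in GB) left on a node."""
        used_cpu = sum(m.cpu_cores for m in node.deployed)
        used_disk = sum(m.storage_gb for m in node.deployed)
        return node.cpu_cores - used_cpu, node.storage_gb - used_disk

    def deploy(self, module):
        """Place a module on the node with the most free CPU that can still fit it."""
        candidates = []
        for node in self.nodes.values():
            cpu, disk = self.free(node)
            if cpu >= module.cpu_cores and disk >= module.storage_gb:
                candidates.append((cpu, node.name))
        if not candidates:
            raise RuntimeError(f"no node can host {module.name}")
        _, best = max(candidates)
        self.nodes[best].deployed.append(module)
        return best

    def compose(self, service):
        """Deploy every module of a composed service; returns module name -> node name."""
        return {m.name: self.deploy(m) for m in service}


manager = EdgeManager()
manager.register(EdgeNode("rsu-01", cpu_cores=4, storage_gb=64))
manager.register(EdgeNode("mec-01", cpu_cores=16, storage_gb=512))
plan = manager.compose([Module("fusion", 8, 32), Module("map-cache", 2, 100)])
print(plan)  # {'fusion': 'mec-01', 'map-cache': 'mec-01'}
```

Both modules land on `mec-01` in this example. The `fusion` module needs more cores than `rsu-01` has, and `map-cache` needs more storage than `rsu-01` offers. A production orchestrator would also weigh latency, network policy, and affinity, which this sketch ignores.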

Why edge computing matters for autonomous driving

Large-scale AI models and centralized data analytics are usually hosted in the cloud because the cloud offers abundant compute resources. However, relying solely on remote cloud processing is often infeasible for autonomous vehicles. Vehicles generate large volumes of time-sensitive data during operation. Transmitting all of this data over the core network to a remote cloud introduces high latency and cannot meet real-time processing requirements. Core network bandwidth is also insufficient for many vehicles to send large data volumes simultaneously, and network congestion can destabilize data transfer and jeopardize driving safety.
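The latency and bandwidth argument can be made concrete with back-of-the-envelope arithmetic. The model below is a minimal sketch. It treats response time as upload time plus network round trip plus processing time, and it assumes a shared link is split evenly among vehicles. Every figure (payload size, link capacity, vehicle counts, round-trip and compute times) is hypothetical and illustrative, not measured data.

```python
def per_vehicle_mbps(link_mbps, vehicles):
    """Bandwidth each vehicle gets if a link is shared evenly (a simplification)."""
    return link_mbps / vehicles


def response_ms(payload_mb, uplink_mbps, rtt_ms, compute_ms):
    """Upload time + network round trip + processing time, in milliseconds."""
    upload_ms = payload_mb * 8 * 1000 / uplink_mbps  # megabytes -> megabits, s -> ms
    return upload_ms + rtt_ms + compute_ms


# Hypothetical figures, chosen only to illustrate the arithmetic.
PAYLOAD_MB = 1.0
# Remote cloud: backhaul shared by many vehicles, long round trip.
cloud = response_ms(PAYLOAD_MB, per_vehicle_mbps(1000, 50), rtt_ms=60, compute_ms=20)
# Nearby edge node: local link shared by fewer vehicles, short round trip.
edge = response_ms(PAYLOAD_MB, per_vehicle_mbps(1000, 10), rtt_ms=10, compute_ms=30)
print(f"cloud: {cloud:.0f} ms, edge: {edge:.0f} ms")  # cloud: 480 ms, edge: 120 ms
```

With these assumed numbers, upload time dominates the cloud path, and it grows as more vehicles share the same link. That growth is the effect the paragraph above attributes to limited core network bandwidth.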

Edge computing targets localized services with strict real-time requirements and high network load. This makes it well suited to small-scale local intelligence, data preprocessing, and running integrated algorithm models close to the data source. Applying edge computing to autonomous driving helps address challenges in environmental data acquisition and processing.

Edge computing characteristics relevant to autonomous driving

- Proximity: Edge nodes are close to data sources, enabling efficient capture and analysis of critical information and direct device access. This facilitates edge intelligence and enables specialized application scenarios.

- Low latency: Edge services are near the devices that generate data, significantly reducing latency compared with cloud-only solutions. This is crucial for autonomous driving feedback loops.

- Locality: Edge systems can operate in isolation from the rest of the network, enabling localized and relatively independent processing. This improves local data security and reduces dependence on network quality.

- Location awareness: When the edge is part of a wireless network, local services can determine device locations with limited information, supporting location-based applications.

Trends in edge computing

Edge computing is evolving toward heterogeneous computing, edge intelligence, edge-cloud collaboration, and integration with 5G. Heterogeneous computing assembles compute units with different instruction sets and architectures to satisfy diverse edge workloads. It supports flexible allocation of computing resources to meet fragmented industry requirements and improve utilization and scheduling of compute power.

Reference architectures for edge computing expose open interfaces at each layer, enabling full-stack openness. Vertical management services, data lifecycle management, and security services enable intelligent end-to-end lifecycle operations.

Edge computing hardware foundations

- Edge servers: Edge servers and edge data centers are the primary compute carriers for edge workloads and can be deployed in operator facilities. Edge environments vary, and edge workloads have specific latency, bandwidth, GPU, and AI requirements. Edge servers should therefore support remote automated operation and strong management and operations-and-maintenance (O&M) capabilities, including state collection, remote control, and remote management interfaces.

- Integrated edge appliances: In autonomous driving, integrated edge appliances combine compute, storage, networking, virtualization, and environmental controls into rugged industrial PCs to support vehicle systems reliably in operational environments.

- Edge access network: This covers the network infrastructure between vehicle systems and edge computing systems, including campus networks, access networks, and edge gateways. It must provide converged access, low latency, high bandwidth, massive connectivity, and strong security.

- Edge internal network: The network inside an edge system connects servers and external networks. It emphasizes simplified architecture, full-featured functions, and reduced performance loss, enabling edge-cloud collaboration and centralized control.

- Edge interconnect network: This includes networks connecting edge systems to cloud systems (public, private, telecom cloud, or user-built cloud), other edge systems, and data centers. It supports diverse connectivity and low-latency cross-domain communication.

Because edge deployments are distributed and numerous but each site is relatively small, single-point management is insufficient. An intelligent cross-domain management and orchestration system in the cloud is typically required to centrally manage edge network infrastructure within a domain and support automated, efficient configuration of network and compute resources through edge-cloud collaboration.

How edge computing and autonomous driving combine

Higher-level autonomous driving requires more than single-vehicle intelligence. Two primary edge-enabled capabilities are collaborative perception and task offloading.

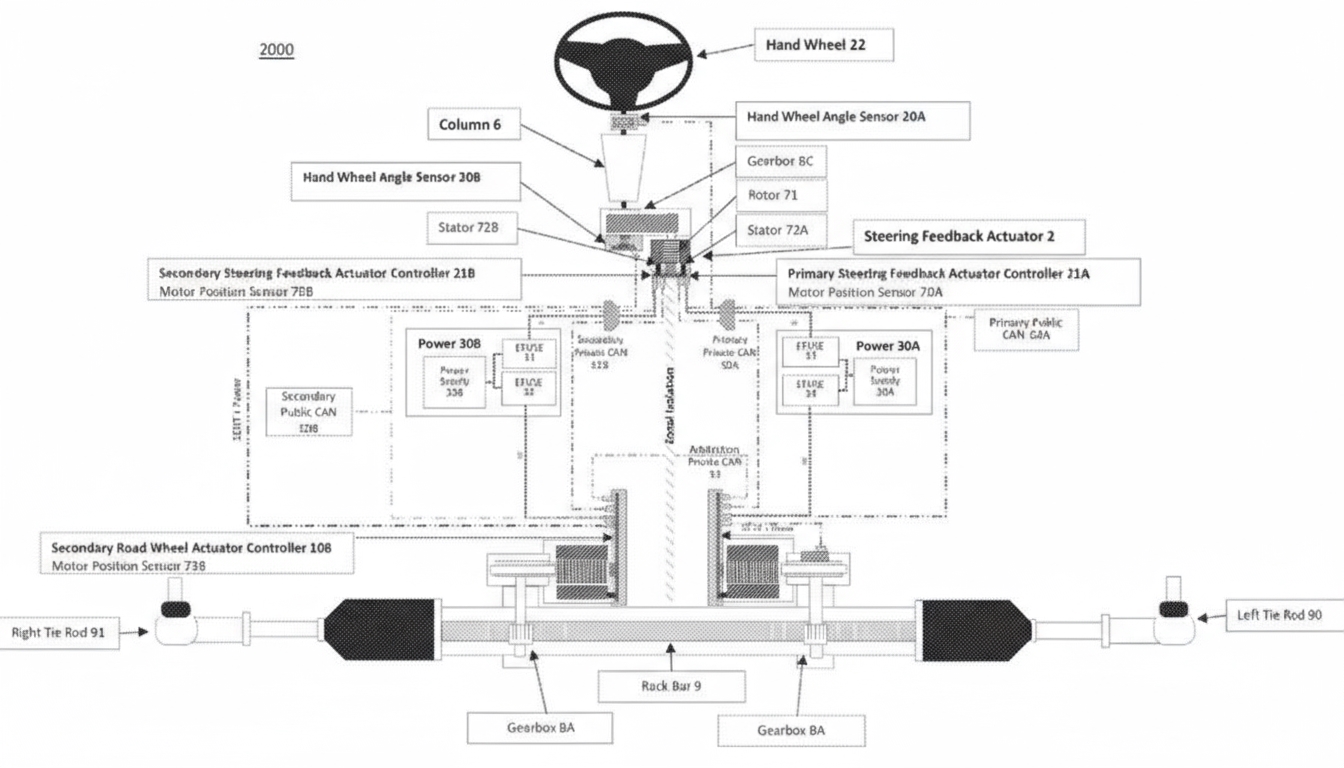

Collaborative perception lets vehicles obtain sensor information from other edge nodes to expand their perception range and improve the completeness of environmental data. A vehicle equipped with lidar and cameras can also use V2X to perceive roadside and traffic information. This gives it richer data than on-board sensors alone and enables perception beyond line of sight. Vehicles can interact with roadside edge nodes and nearby vehicles to extend their perception capability, achieving vehicle-to-vehicle and vehicle-to-infrastructure collaboration. Cloud centers aggregate data from distributed edge nodes to perceive the state of the traffic system. Using big data and AI algorithms, they then issue scheduling commands to edge nodes, traffic signals, and vehicles to improve system efficiency. In adverse weather or at complex intersections, V2X data can provide real-time road and traffic information that enables predictive decision making and accident avoidance.
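The core step of collaborative perception is bringing another node's detections into the vehicle's own coordinate frame and merging them with what the vehicle already sees. The sketch below has a few stated assumptions:

- The roadside unit's pose relative to the vehicle (offset `dx`, `dy` and heading `yaw`) is known.
- The vehicle frame has x pointing forward and y pointing left.
- Duplicates are removed with a simple same-label, within-radius rule.

The names `Detection`, `to_vehicle_frame`, and `fuse` are hypothetical. Real systems typically use tracking and proper data association rather than this nearest-neighbour shortcut.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical detected object; position in metres in some sensor's frame."""
    x: float
    y: float
    label: str


def to_vehicle_frame(det, dx, dy, yaw):
    """2D rigid transform: rotate by yaw (radians), then translate by (dx, dy)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return Detection(c * det.x - s * det.y + dx, s * det.x + c * det.y + dy, det.label)


def fuse(own, remote, radius=1.5):
    """Keep all own detections; add remote ones not within `radius` m of a same-label own one."""
    fused = list(own)
    for r in remote:
        duplicate = any(
            o.label == r.label and math.hypot(o.x - r.x, o.y - r.y) <= radius
            for o in own
        )
        if not duplicate:
            fused.append(r)
    return fused


own = [Detection(10.0, 0.0, "car")]
rsu_raw = [Detection(-5.0, 9.5, "car"), Detection(2.0, -15.0, "pedestrian")]
# Hypothetical RSU pose in the vehicle frame: 20 m ahead, 5 m left, rotated 90 degrees.
remote = [to_vehicle_frame(d, 20.0, 5.0, math.pi / 2) for d in rsu_raw]
fused = fuse(own, remote)
print([(d.label, round(d.x, 1), round(d.y, 1)) for d in fused])
```

In this example, the RSU's car maps to roughly (10.5, 0.0). That is within the radius of the vehicle's own car detection, so it is treated as the same object. The pedestrian at roughly (35.0, 7.0) is new, which is how the vehicle's view is extended beyond its own sensors.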

As sensing capability increases, autonomous vehicles generate large volumes of raw sensor data every day. These data require local real-time processing, fusion, and feature extraction, including deep-learning-based detection and tracking. V2X, high-definition 3D maps, real-time modeling and localization, path planning, and driving strategy adjustments all require continuous real-time processing on the vehicle. A powerful and reliable edge compute platform is therefore needed. Supporting heterogeneous compute platforms improves execution efficiency and reduces power consumption and cost by matching each workload to appropriate hardware.

Autonomous driving edge architectures rely on edge-cloud collaboration and LTE/5G communication infrastructure. Edge components include in-vehicle units, roadside units (RSUs), and MEC (multi-access edge computing) servers. In-vehicle units handle perception, planning, and control, but they depend on RSUs or MEC servers for additional road and pedestrian information. Some functions are better suited to, or must be handled by, cloud infrastructure, such as remote vehicle control, simulation and validation, node management, and long-term data storage.
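The task offloading mentioned earlier can be framed as choosing the tier (vehicle, RSU, MEC server, or cloud) that finishes a task soonest within its deadline. The sketch below is minimal and rests on several simplifying assumptions:

- Completion time is upload time plus round trip plus compute time.
- The time to return results is ignored.
- Each tier's compute throughput, uplink rate, and round-trip time are fixed numbers.

All tier figures and the names `Tier`, `completion_ms`, and `choose_tier` are hypothetical and illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    """Hypothetical execution tier; uplink_mbps == 0 means the task runs locally."""
    name: str
    gflops: float       # sustained compute available to this task
    uplink_mbps: float  # vehicle-to-tier data rate
    rtt_ms: float       # network round-trip time to the tier


def completion_ms(tier, task_gflop, input_mb):
    """Estimated finish time: compute, plus upload and round trip if offloaded."""
    compute_ms = task_gflop * 1000 / tier.gflops
    if tier.uplink_mbps == 0:
        return compute_ms
    upload_ms = input_mb * 8 * 1000 / tier.uplink_mbps
    return upload_ms + tier.rtt_ms + compute_ms


def choose_tier(tiers, task_gflop, input_mb, deadline_ms):
    """Return (tier name, latency) of the fastest tier meeting the deadline, or None."""
    options = [(completion_ms(t, task_gflop, input_mb), t.name) for t in tiers]
    feasible = [o for o in options if o[0] <= deadline_ms]
    if not feasible:
        return None
    latency, name = min(feasible)
    return name, latency


# Hypothetical tiers, for illustration only.
TIERS = [
    Tier("vehicle", gflops=50, uplink_mbps=0, rtt_ms=0),
    Tier("rsu", gflops=200, uplink_mbps=100, rtt_ms=5),
    Tier("mec", gflops=1000, uplink_mbps=200, rtt_ms=15),
    Tier("cloud", gflops=10000, uplink_mbps=20, rtt_ms=80),
]
print(choose_tier(TIERS, task_gflop=10, input_mb=0.5, deadline_ms=100))  # ('mec', 45.0)
print(choose_tier(TIERS, task_gflop=1, input_mb=4, deadline_ms=100))     # ('vehicle', 20.0)
```

Under these assumptions, a compute-heavy task with a small input is worth offloading to the MEC server. A light task with a large input stays on the vehicle, because uploading the input would take longer than computing locally. The cloud loses in both cases because of its longer round trip and narrower uplink.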

Key advantages for autonomous driving

Edge computing enables load consolidation, heterogeneous computing, real-time processing, connectivity, and security optimization for autonomous driving systems.

1. Load consolidation

Different workloads such as ADAS, IVI, digital instrument clusters, head-up displays, and rear entertainment systems can run on the same hardware platform through virtualization. Virtualization and hardware abstraction simplify cloud-driven orchestration, deep learning model updates, and software and firmware upgrades for the whole vehicle system.

2. Heterogeneous computing

Different autonomous driving tasks are mapped to appropriate hardware based on performance and energy efficiency. For example, GPS-based localization and path planning, deep-learning-based object recognition, image preprocessing and feature extraction, sensor fusion, and tracking each benefit differently from CPU, GPU, or DSP implementations. GPUs excel at the convolutional computations used in recognition and tracking. CPUs handle logic-heavy control tasks efficiently and with lower energy use. DSPs offer advantages in signal processing and feature extraction. Heterogeneous architectures improve performance and energy efficiency while reducing latency, and unified upper-layer software interfaces hide the diversity of the underlying hardware.
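The idea of a unified interface that hides hardware diversity can be sketched as a single dispatch function backed by a preference table. The table follows the assignments stated in the paragraph above: GPU for recognition and tracking, DSP for preprocessing and feature extraction, and CPU for planning and control. Sensor fusion is left out because the text does not assign it a unit. The table itself, the task names, and the rule of falling back to the CPU when the preferred unit is absent are hypothetical.

```python
from enum import Enum


class Unit(Enum):
    CPU = "cpu"
    GPU = "gpu"
    DSP = "dsp"


# Illustrative preference table based on the strengths described in the text.
PREFERRED = {
    "object_recognition": Unit.GPU,
    "tracking": Unit.GPU,
    "image_preprocessing": Unit.DSP,
    "feature_extraction": Unit.DSP,
    "path_planning": Unit.CPU,
    "control": Unit.CPU,
}


def dispatch(task, available):
    """Unified entry point: use the preferred unit if present, else fall back to the CPU.

    Assumes every platform has a CPU, so the fallback is always valid.
    """
    unit = PREFERRED.get(task, Unit.CPU)
    return unit if unit in available else Unit.CPU


platform = {Unit.CPU, Unit.GPU}  # hypothetical platform without a DSP
print(dispatch("tracking", platform))            # Unit.GPU
print(dispatch("feature_extraction", platform))  # Unit.CPU
```

Callers only name the task, so the same application code can run on platforms with different accelerator mixes. That is the point of the unified upper-layer interface.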

3. Real-time processing

Autonomous driving requires strict real-time performance because only a few seconds or less may be available for braking or collision avoidance. The end-to-end response includes cloud processing, vehicle-to-cloud negotiation, on-vehicle computation, and braking actuation. Edge platforms must meet fine-grained timing requirements for perception, fusion, and trajectory planning. Low-latency 5G connectivity is also critical. 5G ultra-reliable low-latency communication (URLLC) targets user-plane latencies as low as 1 ms in some scenarios and supports flexible priority-based resource allocation to ensure fast transmission of control signals.
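Why milliseconds matter can be shown with basic kinematics. Before braking begins, the vehicle travels at constant speed for the whole response time, covering distance $v \cdot t$. Under constant deceleration $a$, it then needs a further $v^2 / (2a)$ to stop. The helpers below are a minimal sketch of those two formulas. The 100 km/h speed, 100 ms latency, and 7 m/s² deceleration in the example are hypothetical inputs, not figures from this article.

```python
def distance_during_latency_m(speed_kmh, latency_ms):
    """Distance covered before braking begins: d = v * t."""
    return speed_kmh / 3.6 * latency_ms / 1000  # km/h -> m/s, ms -> s


def stopping_distance_m(speed_kmh, latency_ms, decel_mps2):
    """Latency distance plus braking distance v^2 / (2a) under constant deceleration."""
    v = speed_kmh / 3.6
    return v * latency_ms / 1000 + v * v / (2 * decel_mps2)


print(f"{distance_during_latency_m(100, 100):.2f} m")  # 2.78 m
print(f"{stopping_distance_m(100, 100, 7.0):.2f} m")   # 57.89 m
```

At 100 km/h, every additional 100 ms of end-to-end latency adds roughly 2.8 m of travel before the brakes engage. This is why shortening the processing and communication path matters for collision avoidance.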

4. Connectivity

Edge computing for vehicles depends on wireless communications such as V2X (vehicle-to-everything), which provides communication between autonomous vehicles and other elements of the intelligent transportation system. V2X enables cooperation between vehicles and edge nodes. The main V2X technologies are DSRC (dedicated short-range communication) and cellular V2X. DSRC supports low-latency, high-rate, point-to-point or point-to-multipoint communication. Cellular networks, led by 5G, provide large network capacity and wide coverage, making them suitable for V2I communication and for connections between vehicles and edge servers.

5. Security optimization

Edge security combines cloud and edge defense-in-depth measures to strengthen infrastructure, network, application, and data protections against threats. Edge distribution of compute and storage close to data sources reduces latency and network load while improving data security and privacy. Deploying edge compute on mobile telecom equipment near vehicles, such as base stations and roadside units, enables local processing, encryption, decision making, and reliable real-time communication.

Summary

Edge computing plays a critical role in environmental perception and data processing for autonomous driving. Vehicles can expand their perception by consuming data from edge nodes, and they can offload compute tasks to edge nodes to work around on-board resource constraints. Compared with cloud-only solutions, edge computing avoids long-distance data transfers and their associated latency, which gives vehicles faster response times and reduces backbone network load. Incorporating edge computing into each stage of autonomous driving development is an important option for ongoing optimization and deployment.

ALLPCB