Overview

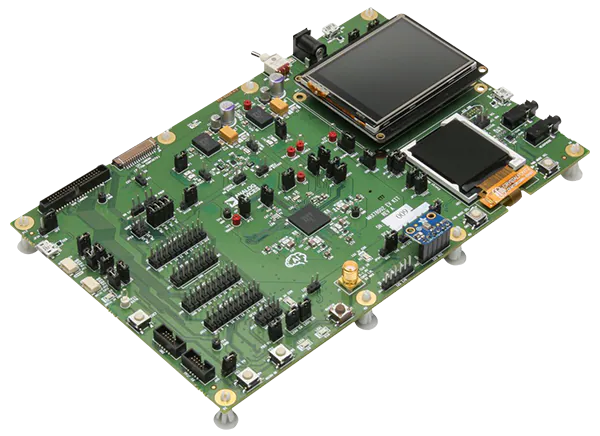

DeepSeek-R1 is an inference model developed by DeepSeek. It uses reinforcement learning fine-tuning to improve inference performance and is designed for complex reasoning tasks such as mathematics, code, and natural language reasoning. This article describes how to run DeepSeek-R1 offline on EASY-EAI-Orin-Nano (RK3576). The RK3576 offers competitive edge AI efficiency and cost-effectiveness suitable for on-device inference.

Development environment setup

RKLLM-Toolkit installation

This section explains how to install RKLLM-Toolkit using pip. Follow the steps below to install the RKLLM-Toolkit toolchain.

Install miniforge3

To avoid conflicts between multiple Python versions, use miniforge3 to manage the Python environment. Check whether miniforge3 and conda are installed; skip this step if already present.

wget -c https://mirro [rs](https://www.elecfans.com/tags/rs/).bfsu.edu.cn/github-release/conda-forge/miniforge/LatestRelease/Miniforge3- [Linux](https://www.elecfans.com/v/tag/538/)-x86_64.shInstall miniforge3:

chmod 777 Miniforge3-Linux-x86_64.shbash Miniforge3-Linux-x86_64.shCreate RKLLM-Toolkit Conda environment

Enter the Conda base environment:

source ~/miniforge3/bin/ [ac](https://www.hqchip.com/app/1703) [ti](https://www.elecfans.com/tags/ti/) vateCreate a Python 3.8 Conda environment named RKLLM-Toolkit (Python 3.8 is the recommended version):

conda create -n RKLLM-Toolkit python=3.8Activate the RKLLM-Toolkit Conda environment:

conda activate RKLLM-ToolkitInstall RKLLM-Toolkit

Within the RKLLM-Toolkit Conda environment, use pip to install the provided wheel packages. The installer will download required dependencies automatically

pip3 install nvidia_cublas_cu12-12.1.3.1-py3-none-manylinux1_x86_64.whlpip3 install torch-2.1.0-cp38-cp38-manylinux1_x86_64.whlpip3 install rkllm_toolkit-1.1.4-cp38-cp38-linux_x86_64.whlIf some files download slowly during installation, you can manually download packages from the Python package index

https://pypi.org/If the commands above run without errors, the installation is successful.

DeepSeek-R1 model conversion

This chapter explains how to convert the DeepSeek-R1 large language model to the RKLLM format.

Model and script download

Two types of model files are provided: the original Hugging Face model and the converted NPU model.

Model conversion

After downloading, place the model and conversion scripts in the same directory.

In the RKLLM-Toolkit environment, run the conversion commands provided with the toolkit to perform the model conversion.

After conversion, a file named deepseek_w4a16.rkllm will be generated as the NPU-optimized model file.

DeepSeek-R1 model deployment

This chapter explains how to run the RKLLM-format NPU model on EASY-EAI-Orin-Nano hardware.

Copy the example project files into an NFS-mounted directory. If you are unsure how to construct the directory, refer to the Getting Started/Development Environment/NFS setup and mount documentation.

Important: Copy the source directory and model locally on the board (for example, to /userdata). Running a large model directly from an NFS mount can cause slow initialization.

Enter the example directory on the development board and run the build script as shown:

cd /userdata/deepseek-demo/./build.shRun the example and test

Enter the deepseek-demo_release directory and run the sample program:

cd deepseek-demo_release/ulimit -HSn 102400 ./deepseek-demo deepseek_w4a16.rkllm 256 512You can now test a conversation. For example, input: "The two legs of a right triangle are 3 and 4, what is the hypotenuse?" The model will respond accordingly.

RKLLM algorithm example

The example source is located at rkllm-demo/src/main.cpp. The operation flow is shown in the example code.

#include#include#include#include "rkllm.h"#include#include#include#include#define PROMPT_TEXT_PREFIX "<|im_start|>system You are a helpful assistant. <|im_end|> <|im_start|>user"#define PROMPT_TEXT_POSTFIX "<|im_end|><|im_start|>assistant"using namespace std;LLMHandle llmHandle = nullptr;void exit_handler(int signal){ if (llmHandle != nullptr) { { cout << "程序即将退出" << endl; LLMHandle _tmp = llmHandle; llmHandle = nullptr; rkllm_destroy(_tmp); } } exit(signal);}void callback(RKLLMResult *result, void *userdata, LLMCallState state){ if (state == RKLLM_RUN_FINISH) { printf("\n"); } else if (state == RKLLM_RUN_ERROR) { printf("\run error\n"); } else if (state == RKLLM_RUN_GET_LAST_HIDDEN_LAYER) { /* ================================================================================================================ If using GET_LAST_HIDDEN_LAYER, the callback interface returns a memory pointer: last_hidden_layer, the token count: num_tokens, and the hidden layer size: embd_size. These three parameters can be used to retrieve data from last_hidden_layer. Note: retrieve the data within the current callback; if not retrieved in time, the pointer will be freed on the next callback. ===============================================================================================================*/ if (result->last_hidden_layer.embd_size != 0 && result->last_hidden_layer.num_tokens != 0) { int data_size = result->last_hidden_layer.embd_size * result->last_hidden_layer.num_tokens * sizeof(float); printf("\ndata_size:%d",data_size); std::ofstream outFile("last_hidden_layer.bin", std::binary); if (outFile.is_open()) { outFile.write(reinterpret_cast(result->last_hidden_layer.hidden_states), data_size); outFile.close(); std::cout << "Data saved to output.bin successfully!" << std::endl; } else { std::cerr << "Failed to open the file for writing!" << std::endl; } } } else if (state == RKLLM_RUN_NORMAL) { printf("%s", result->text); }}int main(int argc, char **argv){ if (argc < 4) { std::cerr << "Usage: " << argv[0] << " model_path max_new_tokens max_context_len\n"; return 1; } signal(SIGINT, exit_handler); printf("rkllm init start\n"); // Set parameters and initialize RKLLMParam param = rkllm_createDefaultParam(); param.model_path = argv[1]; // Set sampling parameters param.top_k = 1; param.top_p = 0.95; param.temperature = 0.8; param.repeat_penalty = 1.1; param.frequency_penalty = 0.0; param.presence_penalty = 0.0; param.max_new_tokens = std::atoi(argv[2]); param.max_context_len = std::atoi(argv[3]); param.skip_special_token = true; param.extend_param.base_domain_id = 0; int ret = rkllm_init(&llmHandle, ¶m, callback); if (ret == 0){ printf("rkllm init success\n"); } else { printf("rkllm init failed\n"); exit_handler(-1); } string text; RKLLMInput rkllm_input; // Initialize infer parameter structure RKLLMInferParam rkllm_infer_params; memset(&rkllm_infer_params, 0, sizeof(RKLLMInferParam)); // Initialize all contents to 0 rkllm_infer_params.mode = RKLLM_INFER_GENERATE; while (true) { std::string input_str; printf("\n"); printf("user: "); std::getline(std::cin, input_str); if (input_str == "exit") { break; } text = input_str; rkllm_input.input_type = RKLLM_INPUT_PROMPT; rkllm_input.prompt_input = (char *)text.c_str(); printf("robot: "); rkllm_run(llmHandle, &rkllm_input, &rkllm_infer_params, NULL); } rkllm_destroy(llmHandle); return 0;} ALLPCB

ALLPCB