Introduction

Technological progress is driving product innovation and creating new challenges for designers, especially in the automotive sector. OEMs are working to upgrade L2 ADAS implementations to L3 and L4, with longer-term aims toward fully autonomous systems. L3 passenger cars are already operating in multiple regions, and L4 robotaxi trials are running in several cities. However, numerous commercial, logistical, and regulatory challenges must be resolved before these vehicles reach mass-market deployment.

From Prototype to Production

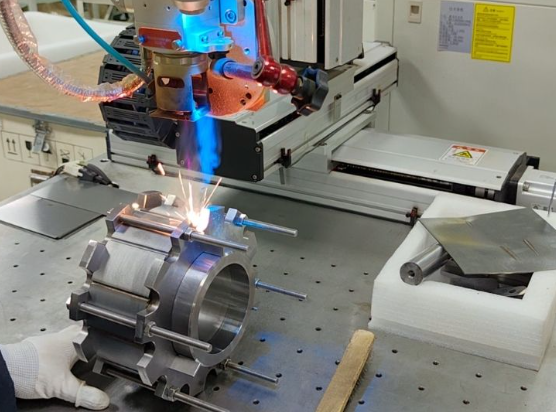

One major challenge for automakers is bridging the gap between proof-of-concept demonstrations and robust, repeatable, large-scale production. Production systems must be stable, safe, reliable, and cost-effective.

Two basic approaches exist for system development. The top-down approach integrates as many sensors and as much hardware and software as possible into the vehicle, then uses fusion and simplification to meet performance, cost, and weight targets. The bottom-up approach proceeds in stages: complete one level of autonomous capability, collect data, and resolve the outstanding challenges before advancing to the next level. The bottom-up approach is increasingly favored by major OEMs.

Sensor Suite and Perception

Advanced ADAS and autonomous driving require accurate perception of the surrounding environment and appropriate actions based on what the vehicle "sees." The more accurate the perception, the better the driving decisions and safety. Accordingly, an early design decision is the number and types of sensors mounted around the vehicle. Common sensing modalities are cameras (image sensors), millimeter-wave radar, and lidar; each has advantages and limitations.

Camera and lidar processing have largely moved to centralized domain controllers, while many current millimeter-wave radar solutions still perform front-end processing and send detected objects to the domain controller for late fusion rather than centralized raw-data processing. This article emphasizes the rationale for centralized processing of 4D imaging millimeter-wave radar and discusses technical advantages offered by the Ambarella CV3 family in this area.

Front-End Processing vs Centralized Domain Processing

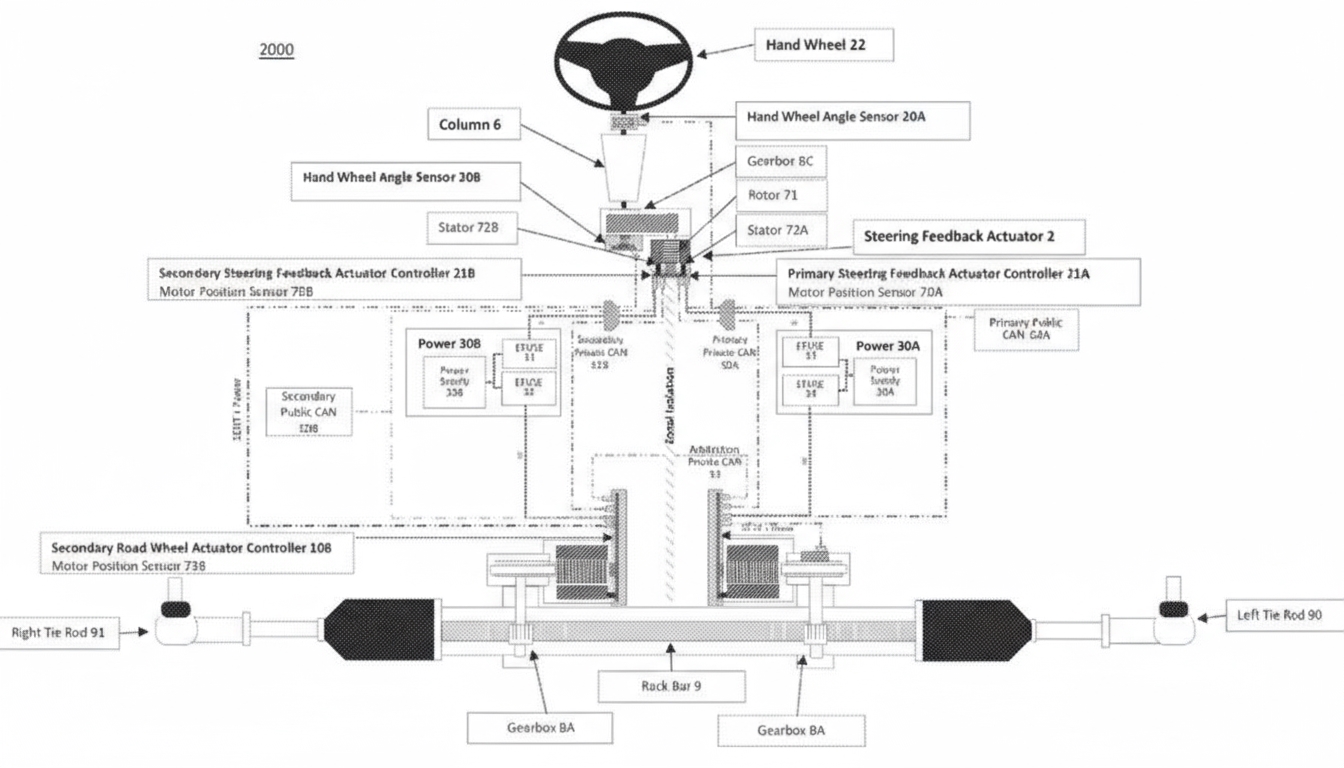

After defining the vehicle's sensor requirements, a sequence of architectural decisions follows, including whether to process sensor data at the front end or centrally in a domain controller.

Traditional 3D radar is lower cost but has limited perception capability. In a typical architecture, the millimeter-wave radar front end performs the computation that generates detected targets, and the domain controller then performs late fusion of those targets with camera-based perception. Because pre-processing at the radar front end discards raw information, traditional 3D radar contributes less to overall perception, and as vision-based perception advances, 3D radar risks becoming marginalized.
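To make the late-fusion limitation concrete, the following is a minimal sketch of object-level fusion, assuming each sensor has already reduced its data to a short object list. The function name `late_fuse`, the dictionary keys, and the 2 m association gate are hypothetical choices for illustration, not part of any specific radar or domain-controller API.

```python
import math

def late_fuse(radar_objs, camera_objs, gate_m=2.0):
    """Greedy nearest-neighbour association of two object lists.

    Each radar object carries a position and speed; each camera object
    carries a position and class label. Only these summaries are
    available at this stage: the raw radar returns are already gone.
    """
    fused = []
    unused = list(camera_objs)
    for r in radar_objs:
        # Closest remaining camera object, or None if none are left.
        best = min(unused, key=lambda c: math.dist(r["xy"], c["xy"]), default=None)
        if best is not None and math.dist(r["xy"], best["xy"]) <= gate_m:
            unused.remove(best)
            label = best["label"]
        else:
            label = "unknown"
        fused.append({"xy": r["xy"], "speed_mps": r["speed_mps"], "label": label})
    # Camera-only objects keep their label but have no radar velocity.
    fused.extend({"xy": c["xy"], "speed_mps": None, "label": c["label"]} for c in unused)
    return fused

radar = [{"xy": (20.0, 0.5), "speed_mps": 3.0}]
camera = [{"xy": (20.5, 0.4), "label": "car"}, {"xy": (5.0, -3.0), "label": "pedestrian"}]
fused = late_fuse(radar, camera)
```

In this sketch a weak radar return that never crossed the front-end detection threshold cannot be recovered during fusion, which is the information loss described above.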

Advances in millimeter-wave radar have produced 4D imaging radar with significant improvements over traditional 3D radar: an added height dimension, longer range, denser point clouds, better angular resolution, more reliable detection of static objects, and lower false-positive and false-negative rates. These gains typically come from more sophisticated modulation schemes and more complex point-cloud and tracking algorithms, so 4D imaging millimeter-wave radar often requires dedicated radar-processing silicon to achieve high performance. Some market designs add DSPs or FPGAs in the radar module for front-end computation; these can improve performance over 3D radar but increase cost and complicate widespread deployment.
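As an illustration of what the added height dimension provides, here is a small sketch that assumes a 4D detection is reported as range, azimuth, elevation, and Doppler velocity. The `RadarDetection` class, its field names, and the angle conventions are assumptions for this example rather than any vendor's data format.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class RadarDetection:
    range_m: float        # distance to the target
    azimuth_rad: float    # horizontal angle, 0 = straight ahead
    elevation_rad: float  # vertical angle, 0 = level with the sensor
    doppler_mps: float    # radial velocity, negative = approaching

    def to_cartesian(self):
        """Convert the spherical measurement to (x, y, z) in metres."""
        horizontal = self.range_m * math.cos(self.elevation_rad)
        x = horizontal * math.cos(self.azimuth_rad)   # forward
        y = horizontal * math.sin(self.azimuth_rad)   # lateral
        z = self.range_m * math.sin(self.elevation_rad)  # height
        return (x, y, z)

# A static return 100 m ahead, 2 degrees above the sensor axis.
det = RadarDetection(100.0, 0.0, math.radians(2.0), -5.0)
x, y, z = det.to_cartesian()
```

With elevation available, a return roughly 3.5 m above the sensor axis at 100 m can be treated as an overhead structure instead of an obstacle in the lane, a distinction that a radar without height information cannot make.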

Centralized processing offers advantages because raw sensor data from all modalities are merged at a single point, preserving key information during fusion. Without front-end compute, radar modules become simpler, which reduces their size, power, and cost. In addition, because many radars are mounted behind the vehicle bumper, simpler and cheaper modules limit repair costs after minor collisions.

Centralized processing also lets developers dynamically adjust the relative weighting of radar and camera data in real time, optimizing perception across weather, road, and driving conditions. For example, at highway speeds in poor weather, millimeter-wave radar can dominate perception; in dense urban environments at low speeds, cameras are more important for lane markings, traffic signs, and scene understanding; lidar contributes to general obstacle detection and night-time AEB. Dynamic sensor configuration can save compute resources, reduce energy use, and improve perception and safety.
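The condition-dependent weighting described above can be sketched as follows. This is a toy heuristic under stated assumptions: the function `sensor_weights`, the 130 km/h speed cap, the `visibility` score in [0, 1], and all coefficients are hypothetical values chosen for illustration, not a production fusion policy.

```python
def sensor_weights(speed_kph, visibility):
    """Return normalized radar/camera weights for the current conditions.

    speed_kph:  vehicle speed; higher speed favours radar.
    visibility: 0.0 (very poor weather) to 1.0 (clear); lower favours radar.
    """
    visibility = min(max(visibility, 0.0), 1.0)
    speed_factor = min(max(speed_kph, 0.0), 130.0) / 130.0
    radar = 0.2 + 0.5 * speed_factor + 0.3 * (1.0 - visibility)
    camera = 0.2 + 0.5 * (1.0 - speed_factor) + 0.3 * visibility
    total = radar + camera
    return {"radar": radar / total, "camera": camera / total}

highway_rain = sensor_weights(speed_kph=120.0, visibility=0.2)
urban_clear = sensor_weights(speed_kph=30.0, visibility=1.0)
```

In this sketch radar dominates in the highway, poor-weather case and the camera dominates in the slow, clear urban case. A real system would more likely learn such weights or apply them inside the fusion network than hard-code them.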

Comparison: Front-End vs Centralized Processing of 4D Imaging Radar

Front-end processed imaging radar

- Compute is limited by the power budget of the radar module; adding compute raises power consumption, so radar data density and sensitivity are constrained.

- Fixed compute modes must be provisioned for worst-case scenarios, which may be unnecessary in common situations.

- Higher front-end radar cost, because data processing is performed at the sensor node.

- Sensor fusion is limited to object-level fusion.

Centralized domain-controller radar processing

- Stronger and more efficient centralized compute improves angular resolution, data density, and sensitivity.

- Compute resources can be dynamically allocated across multiple radars according to the scenario, improving utilization and perception results.

- Lower radar front-end cost, since front-end modules are purely sensors without compute units.

- Enables deep fusion between 4D radar data and camera data.
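The dynamic-allocation point in the comparison above can be illustrated with a short sketch. It assumes a single shared compute budget in arbitrary units and a per-scenario demand table; the names `allocate_compute`, `HIGHWAY`, and `PARKING` and the demand values are hypothetical.

```python
def allocate_compute(budget, demand):
    """Split a shared compute budget across radars in proportion to demand."""
    if not demand:
        return {}
    total = sum(demand.values())
    if total <= 0:
        # No stated preference: share the budget equally.
        share = budget / len(demand)
        return {name: share for name in demand}
    return {name: budget * d / total for name, d in demand.items()}

# Hypothetical relative demands per driving scenario.
HIGHWAY = {"front": 6, "rear": 2, "corner_left": 1, "corner_right": 1}
PARKING = {"front": 1, "rear": 1, "corner_left": 4, "corner_right": 4}

highway_plan = allocate_compute(100.0, HIGHWAY)
parking_plan = allocate_compute(100.0, PARKING)
```

A front-end architecture cannot rebalance in this way, because each module's compute is fixed at design time and sized for its own worst case.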

Power, Thermal, and OTA Considerations

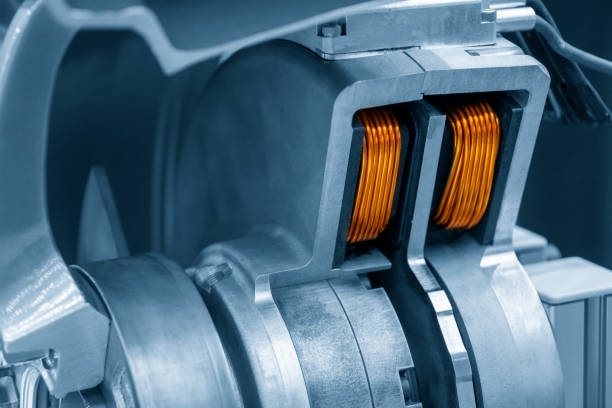

With the growth of electric vehicles, energy efficiency and maximizing range per charge are key considerations for every vehicle subsystem. Centralized AI domain-control SoCs can offer energy advantages. Multi-chip domain controllers can consume significant power, shortening vehicle range. Excess SoC heat may require active cooling or even liquid cooling in some architectures, increasing vehicle size, cost, and weight and reducing battery range.

AI-based driving software is rapidly becoming a system-critical element. How AI is implemented affects SoC selection and the time and cost of system development. Run-time efficiency matters: the hardware must execute modern neural networks with low energy consumption and without sacrificing accuracy. Mature middleware, device drivers, and AI toolchains are equally important, because they shorten development time and reduce risk.

After vehicles ship, software must be updated to fix issues or add features. A single-domain-controller centralized architecture simplifies over-the-air updates compared with updating many separate front-end modules individually, which is more expensive and complex. This OTA approach increases the importance of system-level cybersecurity in design.

SoC Requirements and 4D Imaging Radar

SoC selection affects many aspects of the vehicle design and overall autonomous capability. The Ambarella CV3-AD685 is positioned as a high-compute centralized domain-control AI SoC targeted at L3/L4 driver assistance systems and supports centralized processing and deep fusion of raw 4D imaging millimeter-wave radar data.

Traditional 4D imaging radar uses fixed modulation schemes that require trade-offs in performance. Emerging 4D radar designs combine sparse antenna arrays with AI algorithms that dynamically learn and adapt to the environment, such as virtual aperture imaging (VAI) techniques. This approach breaks the modulation-induced performance trade-offs and can improve 4D imaging radar resolution by orders of magnitude. As a result, angular resolution, system accuracy, and overall performance improve while antenna counts, form factor, power budget, data-transfer requirements, and cost are reduced.
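The link between aperture and angular resolution can be shown with the standard diffraction-limited approximation, in which beamwidth in radians is roughly wavelength divided by aperture width. This is a generic rule of thumb and not a description of how VAI works; the function name `beamwidth_deg` and the example aperture sizes are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def beamwidth_deg(freq_hz, aperture_m):
    """Approximate angular resolution (degrees) as wavelength / aperture."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    return math.degrees(wavelength / aperture_m)

# 77 GHz automotive band, two hypothetical effective aperture widths.
small = beamwidth_deg(77e9, 0.10)
large = beamwidth_deg(77e9, 0.20)
```

Doubling the effective aperture halves the approximate beamwidth. This is why techniques that enlarge the effective aperture without adding physical antennas can improve angular resolution while keeping antenna count and module size down.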

CV3 supports direct transmission of RAW radar data from sensor front ends to the domain controller and performs necessary radar computations on-chip, including high-quality point-cloud generation, processing, and tracking. The CV3-AD685 includes dedicated 4D imaging radar processing hardware to efficiently handle concurrent data streams from multiple radars.

CV3-AD685 SoC Architecture

The SoC integrates a neural vector processor (NVP), a general vector processor (GVP) for accelerating general machine vision algorithms and millimeter-wave radar processing, a high-performance image signal processor (ISP), 12 Arm Cortex-A78AE cores and multiple Cortex-R52 CPUs, stereo vision and dense optical-flow engines, and a GPU for 3D rendering such as surround view. The CV3-AD architecture is designed specifically for automotive driver-assistance workloads and differs from general-purpose GPU-centric architectures, which are optimized for broad parallel compute. For driver-assistance tasks, the CV3 architecture is positioned as delivering higher efficiency and lower power consumption than many competing general-purpose designs.

Conclusion

Recent years have seen substantial advances in driver-assistance technology. Several advanced driver-assistance functions are now mainstream, and new capabilities continue to emerge. The current industry challenge is moving L3 and L4 prototypes into mass production.

Key to that progress are sensor selection, vehicle architecture, and domain-controller SoC choice. Using processors designed for centralized sensor fusion, along with AI-driven innovations such as sparse millimeter-wave radar arrays, enables centralized processing of millimeter-wave radar data and deep fusion with camera data. These improvements in performance and sensitivity can reduce reliance on lidar, lower system cost, and improve environmental perception capabilities.

ALLPCB