Introduction to Memory Hierarchy

The memory hierarchy in a computer system is a structured organization of storage layers, each with different access speeds, capacities, and costs. Arranging these layers from small and fast to large and slow lets a system offer storage that behaves as if it were both fast and large, at a reasonable overall cost.

Levels of the Memory Hierarchy

The memory hierarchy typically consists of the following levels:

1. Registers

Registers are the fastest storage units in a computer and reside inside the CPU itself, where they hold the current instruction, operands, addresses, and intermediate results. Their capacity is extremely limited, but they can be accessed faster than any other level. Because registers keep the data currently in use or frequently accessed close at hand, they reduce how often the CPU must access the slower layers of memory.

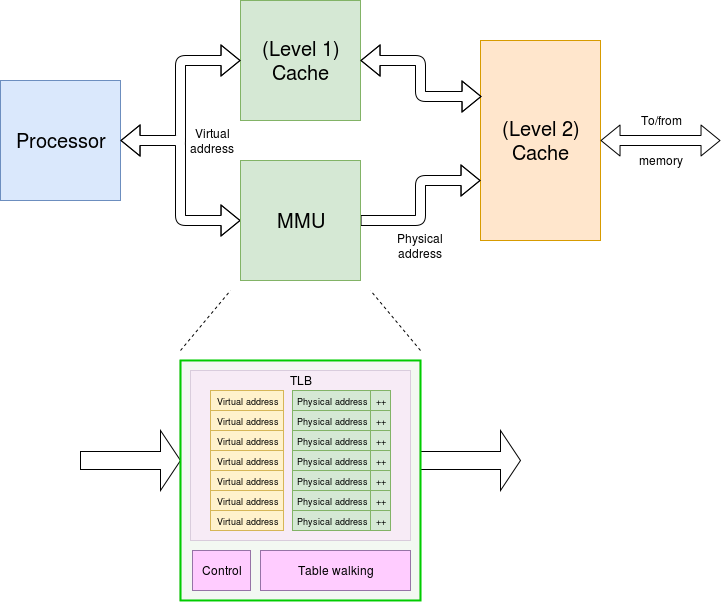

2. Cache Memory

Cache memory resides between the CPU and main memory. It often includes multiple levels, such as L1, L2, and L3 caches, with L1 being the smallest and fastest. Cache memory is larger than the register file but much smaller than main memory. Its purpose is to keep copies of recently and frequently accessed data and instructions so that most requests avoid the longer latency of main memory.

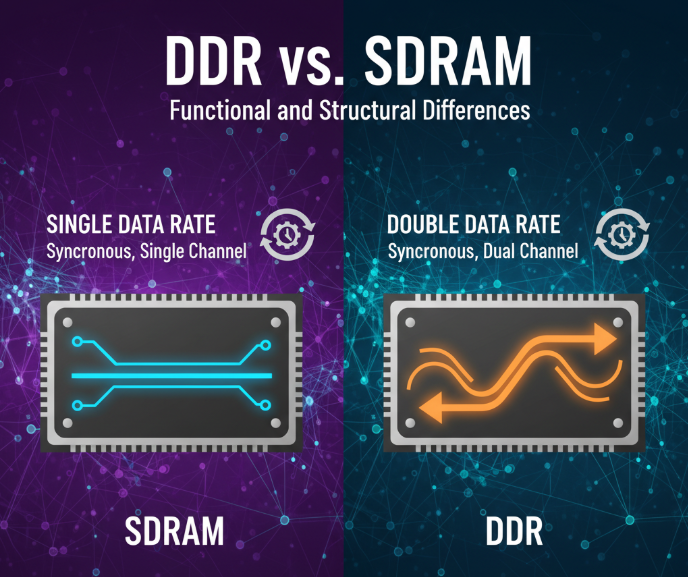
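
How a cache decides whether a request is a hit can be sketched with a tiny simulator. The Python model below is a minimal sketch under simplifying assumptions: a single direct-mapped cache with a hypothetical 8 lines of 16 bytes each, no distinction between reads and writes, and no timing. The class name `DirectMappedCache` is illustrative; real L1/L2/L3 caches are usually set-associative and much larger.

```python
from typing import List, Optional


class DirectMappedCache:
    """Toy direct-mapped cache: each block maps to exactly one line."""

    def __init__(self, num_lines: int, block_size: int) -> None:
        self.num_lines = num_lines
        self.block_size = block_size
        # One tag per line; None means the line is empty (invalid).
        self.tags: List[Optional[int]] = [None] * num_lines
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, load the block, replacing the old one."""
        block = address // self.block_size
        index = block % self.num_lines   # which line the block must use
        tag = block // self.num_lines    # identifies which block occupies that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.misses += 1
        self.tags[index] = tag  # stands in for fetching the block from main memory
        return False


if __name__ == "__main__":
    cache = DirectMappedCache(num_lines=8, block_size=16)
    # Walk a 128-byte array twice, one byte at a time.
    for _ in range(2):
        for addr in range(128):
            cache.access(addr)
    print(f"hits={cache.hits} misses={cache.misses}")
```

Running it prints `hits=248 misses=8`: only the first byte of each 16-byte block misses on the first pass (spatial locality), and the second pass hits every time because the whole array fits in the cache (temporal locality).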

3. Main Memory

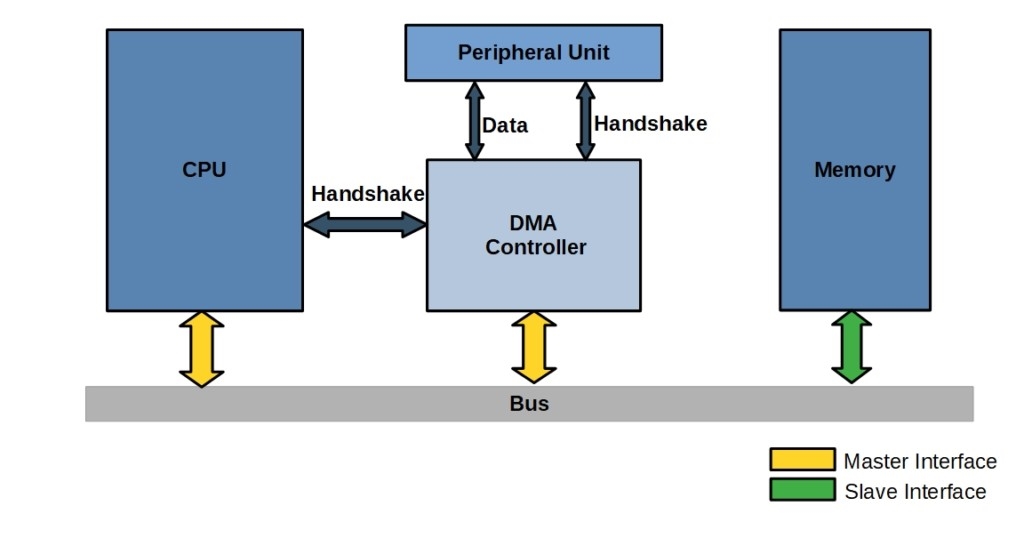

Main memory, typically composed of dynamic random-access memory (DRAM), serves as the primary working storage for the operating system and for the programs and data currently in use. It offers a much larger capacity than cache, but its access time is longer: DRAM is slower than the static RAM (SRAM) normally used for caches, and requests must travel from the CPU over the memory bus.

4. Secondary Storage

Secondary storage includes devices such as hard drives, solid-state drives (SSDs), and optical discs. It is non-volatile and is used for long-term storage of data and programs. Secondary storage offers the highest capacity, but its access speed is orders of magnitude lower than that of main memory. The CPU cannot operate on this data directly; it must first be transferred into main memory.
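
The requirement that data be copied into main memory before use can be illustrated with a toy model. In this Python sketch, a hypothetical `MainMemory` class holds a fixed number of page frames and, on the first access to a page, copies the whole page from a `bytes` object standing in for secondary storage. The 4096-byte page size and least-recently-used (LRU) eviction are assumptions for illustration; real operating systems rely on paging hardware and more elaborate replacement policies.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # hypothetical page size in bytes


class MainMemory:
    """Toy model: pages are copied from storage into RAM before the CPU reads them."""

    def __init__(self, storage: bytes, capacity_pages: int) -> None:
        self.storage = storage              # stands in for an SSD or hard drive
        self.capacity_pages = capacity_pages
        self.frames = OrderedDict()         # page number -> page contents, LRU first
        self.transfers = 0                  # number of page loads from storage

    def read_byte(self, address: int) -> int:
        page, offset = divmod(address, PAGE_SIZE)
        if page in self.frames:
            self.frames.move_to_end(page)   # mark as most recently used
        else:
            if len(self.frames) >= self.capacity_pages:
                self.frames.popitem(last=False)  # evict the least recently used page
            start = page * PAGE_SIZE
            self.frames[page] = self.storage[start:start + PAGE_SIZE]  # the slow transfer
            self.transfers += 1
        return self.frames[page][offset]


if __name__ == "__main__":
    disk = bytes(i % 256 for i in range(4 * PAGE_SIZE))  # 4 pages of fake data
    ram = MainMemory(disk, capacity_pages=2)
    for addr in (0, 100, PAGE_SIZE + 1, 2 * PAGE_SIZE + 2, 0):
        ram.read_byte(addr)
    print("page transfers:", ram.transfers)
```

The second read hits a page that is already resident, so only four of the five reads trigger a transfer from storage; the final read of address 0 has to reload its page because it was evicted to make room.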

Reasons for the Hierarchical Design

The hierarchical design of memory serves several purposes:

1. Access Speed

Different memory levels have varying access speeds. Faster memory stores frequently used data to minimize the need for accessing slower layers, reducing CPU idle time and improving system performance.
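
This benefit is commonly quantified with the average memory access time (AMAT) of a two-level arrangement, where $t_{\text{hit}}$ is the access time of the faster level, $m$ is the fraction of accesses it misses, and $t_{\text{penalty}}$ is the extra time needed to fetch the data from the slower level:

```latex
\text{AMAT} = t_{\text{hit}} + m \cdot t_{\text{penalty}}
```

With hypothetical values $t_{\text{hit}} = 1$ ns, $m = 0.05$, and $t_{\text{penalty}} = 100$ ns, AMAT $= 1 + 0.05 \times 100 = 6$ ns, which is far closer to the speed of the fast level than to the roughly 100 ns it would cost to go to the slower level on every access.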

2. Capacity

As storage capacity increases, access speed typically decreases. Faster memory levels are smaller in capacity to maintain high speeds, while slower levels compensate with larger capacities to meet storage demands.

3. Cost

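Faster memory is generally more expensive per bit, while slower memory is more economical. By combining a small amount of fast, expensive memory with a large amount of slow, inexpensive memory, the hierarchy can approach the performance of the fastest level at close to the cost of the cheapest, provided most accesses are served by the faster levels.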
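
For two levels, this trade-off is often expressed as the average cost per bit, where $C_1$ and $C_2$ are the costs per bit of the fast and slow levels and $S_1$ and $S_2$ are their sizes:

```latex
C_{\text{avg}} = \frac{C_1 S_1 + C_2 S_2}{S_1 + S_2}
```

When the slow level is much larger ($S_2 \gg S_1$), $C_{\text{avg}}$ approaches $C_2$, so the system costs roughly as much per bit as its cheapest memory, while performance stays close to that of the fast level as long as most accesses hit it.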

Speed Relationships in the Hierarchy

The speed of memory access decreases as we move from registers to secondary storage. Below is a summary of the speed relationships:

1. Registers

Registers, located within the CPU, operate at the same frequency as the CPU and offer the fastest access speeds, often within a single CPU cycle.

2. Cache Memory

Cache memory, positioned closer to the CPU than main memory, provides faster access. Although it is slower than registers, it hides much of main memory's latency: when the requested data is already in the cache, the CPU does not need to wait for main memory at all.

3. Main Memory

Main memory's access speed is lower than that of cache because of its physical distance from the CPU and its reliance on bus communication. Its capacity, however, is much larger than that of cache memory.

4. Secondary Storage

Secondary storage, such as hard drives and SSDs, is the slowest layer in the hierarchy. Data must be transferred to main memory for CPU processing, adding significant latency.
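
These speed relationships can be combined into a rough estimate of the average access time for a multi-level hierarchy. The Python sketch below assumes each request checks the levels in order, from fastest to slowest, and pays the latency of every level it visits until it finds the data. The `Level` class is hypothetical, and the latencies and hit rates in the example are illustrative values chosen for easy arithmetic, not measurements of any real system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Level:
    name: str
    latency_ns: float  # time to access this level (hypothetical)
    hit_rate: float    # fraction of requests reaching this level that it satisfies


def average_access_time(levels: List[Level]) -> float:
    """Expected access time when requests probe levels in order until a hit.

    Equivalent to t1 + m1 * (t2 + m2 * (t3 + ...)); the last level must hold everything.
    """
    if levels[-1].hit_rate != 1.0:
        raise ValueError("the last level must satisfy every request")
    total = 0.0
    reach = 1.0  # probability that a request gets as far as the current level
    for level in levels:
        total += reach * level.latency_ns  # every request reaching this level pays its latency
        reach *= 1.0 - level.hit_rate      # only misses continue to the next level
    return total


if __name__ == "__main__":
    # Illustrative numbers only, not measurements.
    hierarchy = [
        Level("L1 cache", latency_ns=1.0, hit_rate=0.95),
        Level("L2 cache", latency_ns=4.0, hit_rate=0.80),
        Level("main memory", latency_ns=80.0, hit_rate=1.0),
    ]
    print(f"with caches: {average_access_time(hierarchy):.1f} ns")
    print(f"memory only: {average_access_time(hierarchy[-1:]):.1f} ns")
```

With these assumed values the caches bring the average down to about 2.0 ns, compared with 80.0 ns if every request went straight to main memory. Adding a secondary-storage level below main memory would follow the same pattern, but even a tiny miss rate at main memory would add noticeably to the average because of the much larger latency.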

Conclusion

The memory hierarchy is designed to balance performance, capacity, and cost by optimizing the organization of different types of memory. This layered structure ensures efficient use of fast memory while accommodating the storage needs of modern computing systems.

ALLPCB