Overview

Large AI models are advancing at unprecedented speed, driving the shift to an intelligent world. Compute, algorithms, and data are the three pillars of AI. While compute and algorithms are tools, the scale and quality of data ultimately determine the capabilities of AI. Data storage converts information into corpora and knowledge bases and, together with compute, is becoming foundational infrastructure for large AI models.

High-reliability, high-performance shared storage is becoming the preferred data foundation for databases such as Oracle. Looking ahead, enterprise data storage trends span the following areas.

Large AI models require more efficient collection and preprocessing of massive raw data, higher-performance training data loading and model checkpointing, and more timely, accurate domain inference knowledge bases. New AI data paradigms such as near-memory computing and vector storage are rapidly developing.

Big data applications have evolved from historical reporting and trend prediction to supporting real-time precise decision-making and automated intelligent decisions. Data paradigms exemplified by near-memory computing will significantly improve analytics efficiency in lakehouse platforms.

Distributed databases built on open-source foundations are taking on increasingly critical roles in enterprises. New high-performance, high-reliability architectures that combine distributed databases with shared storage are emerging.

Multi-cloud has become the new normal for enterprise data centers, with on-premises data centers and public clouds complementing each other. Cloud deployment is moving from closed full-stack models toward open decoupling to enable multi-cloud application deployment with centralized data and resource sharing.

The concentration of enterprise private data in large AI models increases data security risks. Building a comprehensive data security framework that includes storage-native security is urgent.

AI is driving a shift in data center compute and storage architectures from CPU-centric to data-centric models, and new system architectures and ecosystems are being rebuilt.

AI techniques are increasingly integrated into storage products and management systems, improving storage infrastructure SLAs.

1. Large AI Models

The arrival of large AI models has accelerated AI development beyond previous expectations. Storage, as the primary carrier of data, must evolve in three respects: governance of massive unstructured data, an order-of-magnitude performance improvement, and storage-native security. Beyond scaling capacity to the exabyte level, storage systems will need to deliver aggregate bandwidth on the order of hundreds of GB/s and tens of millions of IOPS to achieve 10x or greater performance gains.
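To see why aggregate bandwidth matters for training, the sketch below estimates how long a single checkpoint write takes at a given sustained bandwidth. The model size (175 billion parameters) and the figure of 14 bytes per parameter for weights plus optimizer state are hypothetical assumptions chosen for illustration, not numbers from this document; metadata and file-system overheads are ignored.

```python
def checkpoint_write_seconds(params_billion: float, bytes_per_param: float,
                             bandwidth_gb_s: float) -> float:
    """Seconds to write one checkpoint at a sustained aggregate bandwidth.

    Uses decimal units: 1e9 parameters * bytes_per_param bytes / 1e9 = GB.
    """
    checkpoint_gb = params_billion * bytes_per_param
    return checkpoint_gb / bandwidth_gb_s


# Hypothetical model: 175B parameters, 14 bytes per parameter (assumption).
for bandwidth in (10, 100, 200):
    seconds = checkpoint_write_seconds(175, 14, bandwidth)
    print(f"{bandwidth:>4} GB/s -> {seconds:7.1f} s per checkpoint")
```

Under these assumptions a 2,450 GB checkpoint takes about 245 s at 10 GB/s but only about 12 s at 200 GB/s, which is time the accelerators would otherwise spend waiting on storage.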

Enterprises using large models, HPC, and big data require rich raw data from the same sources: operational transaction logs, experimental data, and user behavior. Building large models on the same data infrastructure used for HPC and big data is the most cost-effective approach, enabling a single dataset to serve multiple environments.

All-flash storage will provide substantial performance improvements and accelerate model development and deployment. Data-centric architectures enable decoupling and interconnection of hardware resources to accelerate on-demand data movement. Emerging techniques such as data fabric, vector storage, and near-memory computing will lower the barriers to data integration and use, improving resource efficiency and reducing the difficulty of adopting large models in industry. Storage-native security frameworks will protect enterprise private data assets and support safer use of large models.

2. Big Data

Big data applications have progressed through three phases: traditional reporting, predictive analytics, and proactive decision-making.

Traditional data warehouse era: Enterprises built subject-oriented, time-variant datasets in warehouses for accurate historical description and reporting, suitable for TB-scale structured data.

Traditional data lake era: Enterprises used Hadoop-based data lakes to process structured and semi-structured data and to predict future trends from historical data. This phase produced a siloed architecture with separate data lakes and data warehouses, requiring data movement between them and preventing real-time and proactive decisions.

Lakehouse era: Enterprises began optimizing the IT stack to enable real-time and proactive decisions by converging lake and warehouse capabilities. Core measures include joint innovation with storage vendors to decouple compute and storage in the big data stack and to share a single data copy between data lake storage and warehouse services, eliminating data movement and enabling real-time, proactive analytics.

3. Distributed Databases

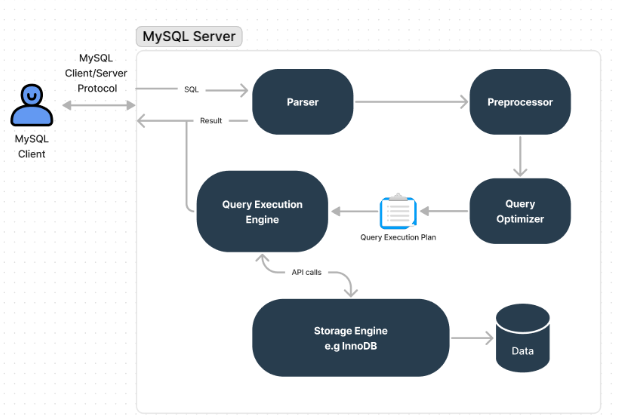

Open-source databases such as MySQL and PostgreSQL rank among the most popular databases globally and are reshaping enterprise core systems. To keep business operations stable, distributed database architectures that separate compute from storage are becoming the de facto standard.

Major banks and cloud database vendors have adopted compute-storage separation for next-generation core systems. Products including Amazon Aurora, Alibaba PolarDB, Huawei GaussDB, and Tencent TDSQL have shifted toward this architecture, which is now the prevailing approach for distributed database deployments.
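A toy model helps show why compute-storage separation supports stable operations: compute nodes hold no durable state, so a failed node can be replaced by attaching a new one to the same shared storage rather than rebuilding or copying its data. The class and function names below are hypothetical, and the sketch deliberately ignores caching, logging, replication, and concurrency control, all of which production systems must handle.

```python
from dataclasses import dataclass, field


@dataclass
class SharedStorage:
    """Hypothetical shared storage pool holding committed pages by page ID."""
    pages: dict[int, bytes] = field(default_factory=dict)

    def write(self, page_id: int, data: bytes) -> None:
        self.pages[page_id] = data

    def read(self, page_id: int) -> bytes:
        return self.pages[page_id]


@dataclass
class ComputeNode:
    """Stateless compute node: all durable data lives in shared storage."""
    name: str
    storage: SharedStorage
    alive: bool = True

    def _check_alive(self) -> None:
        if not self.alive:
            raise RuntimeError(f"{self.name} is down")

    def commit(self, page_id: int, data: bytes) -> None:
        self._check_alive()
        self.storage.write(page_id, data)  # nothing durable is kept locally

    def query(self, page_id: int) -> bytes:
        self._check_alive()
        return self.storage.read(page_id)


def fail_over(failed: ComputeNode, replacement_name: str) -> ComputeNode:
    """Mark a node failed and attach a replacement to the same storage (no data copy)."""
    failed.alive = False
    return ComputeNode(replacement_name, failed.storage)


storage = SharedStorage()
primary = ComputeNode("primary", storage)
primary.commit(1, b"balance=100")
standby = fail_over(primary, "standby")
print(standby.query(1))
```

The design choice being illustrated is that recovery cost depends on attaching compute, not on the volume of data, because the single authoritative copy never leaves shared storage.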

4. Cloud-native and Multi-cloud

Enterprise cloud infrastructure has moved from single-cloud to multi-cloud. No single cloud can satisfy all application and cost requirements, so many enterprises adopt multi-cloud architectures combining multiple public clouds and private clouds.

Key capabilities for multi-cloud infrastructure fall into two categories. The first enables cross-cloud data mobility, such as cross-cloud tiering and backup, allowing data to reside on the most cost-effective storage. The second is cross-cloud data management, which provides global data views to guide placement and scheduling of data to applications that extract the most value.
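Cross-cloud tiering can be sketched as a cost-based placement rule: among the tiers that meet an application's latency requirement, pick the one with the lowest expected monthly cost for the data's size and read volume. The tier names, prices, and latencies below are hypothetical placeholders, not real cloud pricing, and a real policy engine would weigh more factors (egress, compliance, durability).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    storage_per_gb_month: float   # hypothetical price per GB-month
    retrieval_per_gb: float       # hypothetical price per GB read back
    first_byte_latency_ms: float  # hypothetical access latency


def monthly_cost(tier: Tier, size_gb: float, reads_gb_per_month: float) -> float:
    """Storage cost plus retrieval cost for one month."""
    return (tier.storage_per_gb_month * size_gb
            + tier.retrieval_per_gb * reads_gb_per_month)


def place(tiers: list[Tier], size_gb: float, reads_gb_per_month: float,
          max_latency_ms: float) -> Tier:
    """Cheapest tier that still satisfies the application's latency requirement."""
    eligible = [t for t in tiers if t.first_byte_latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no tier satisfies the latency requirement")
    return min(eligible, key=lambda t: monthly_cost(t, size_gb, reads_gb_per_month))


TIERS = [
    Tier("on_prem_flash", 0.10, 0.0, 1),
    Tier("public_cloud_object", 0.02, 0.01, 50),
    Tier("cloud_archive", 0.004, 0.05, 3_600_000),
]

# Hot data read heavily with a 100 ms latency budget.
print(place(TIERS, 1000, 20_000, 100).name)
# Cold data that can tolerate up to a day of retrieval latency.
print(place(TIERS, 1000, 10, 86_400_000).name)
```

With these placeholder numbers, heavily read data stays on flash because retrieval charges dominate, while rarely read data that tolerates slow access lands on the archive tier.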

Open, decoupled architectures that allow hardware resources and data to be shared across clouds and to move as needed are required to realize multi-cloud benefits.

5. Unstructured Data

Technologies such as 5G, cloud computing, big data, AI, and high-performance data analysis are driving rapid growth of enterprise unstructured data: video, audio, images, and documents are growing from petabytes to exabytes. Examples include a genomic sequencer producing 8.5 PB per year, a large operator processing 15 PB per day, a remote sensing satellite collecting 18 PB per year, and an autonomous vehicle generating 180 PB per year for training.
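Converting the annual and daily volumes above into average sustained ingest rates shows what they imply for storage bandwidth. The sketch assumes decimal units (1 PB = 10^15 bytes) and a 365-day year, and it computes averages only; real ingest is bursty, so peak rates can be considerably higher.

```python
SECONDS_PER_DAY = 24 * 3600
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY
BYTES_PER_PB = 10**15  # decimal petabyte
BYTES_PER_GB = 10**9   # decimal gigabyte


def avg_gb_per_s(petabytes: float, period_seconds: int) -> float:
    """Average sustained rate (GB/s) needed to absorb `petabytes` within the period."""
    return petabytes * BYTES_PER_PB / period_seconds / BYTES_PER_GB


# Volumes quoted in the text above.
workloads = {
    "genomic sequencer (8.5 PB/year)": avg_gb_per_s(8.5, SECONDS_PER_YEAR),
    "large operator (15 PB/day)": avg_gb_per_s(15, SECONDS_PER_DAY),
    "remote sensing satellite (18 PB/year)": avg_gb_per_s(18, SECONDS_PER_YEAR),
    "autonomous vehicle training (180 PB/year)": avg_gb_per_s(180, SECONDS_PER_YEAR),
}
for name, rate in workloads.items():
    print(f"{name:<45} {rate:8.2f} GB/s")
```

At 15 PB per day, the operator alone accumulates 15 × 365 = 5,475 PB, roughly 5.5 EB, in a year, which is the petabyte-to-exabyte shift described above.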

Enterprises must first ensure capacity: storing more data at the lowest cost, smallest footprint, and lowest power. Second, data must be able to move: efficient, policy-driven data flow both between and within data centers. Finally, data must be usable: mixed workloads of video, audio, images, and text must meet application requirements.

6. Storage-native Security

Data is the foundation of AI, and protecting it is critical. According to the Splunk 2023 State of Security report, over 52% of organizations experienced malicious attacks that exposed data and 66% faced ransomware, underscoring the growing importance of data security.

Data interacts with storage throughout its lifecycle: creation, collection, transmission, use, and destruction. As the final repository and "vault" for data, storage provides near-data protection and media-level control that are essential for data protection, backup and recovery, and secure data destruction.

Storage-native security strengthens protection through architectural and design principles and includes two aspects: device-level security capabilities and data protection mechanisms provided by storage systems.

7. All-flash Deployments

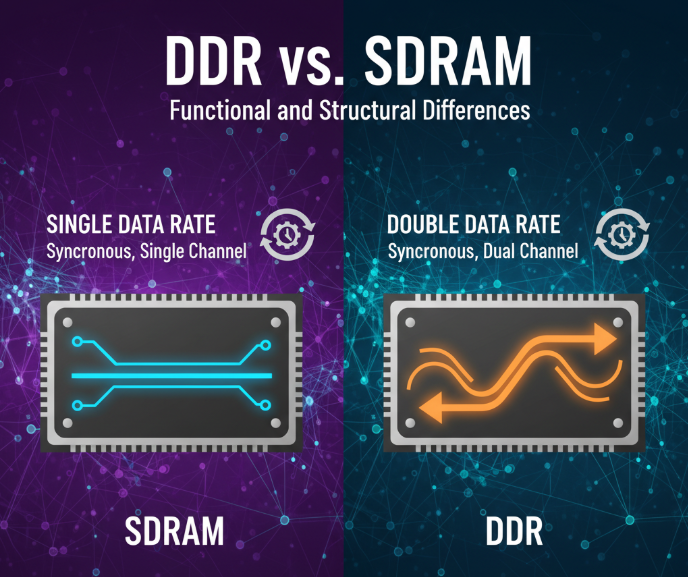

By 2022, SSD market share and shipments were more than double those of HDDs, with SSDs accounting for more than 65% of the market. The industry is moving toward widespread flash adoption.

Enterprise SSD costs are largely determined by NAND dies. Increases in 3D NAND layer counts and the adoption of QLC have driven down all-flash BOM costs. Mainstream 3D NAND now reaches 176 layers in production, with roadmaps above 200 layers, nearly doubling layer counts since 2018. In terms of cell types, TLC has become mainstream for enterprise SSDs, and QLC SSDs are increasingly used.
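A first-order way to see why layer count and cell type drive NAND cost: bits per unit of die area scale roughly with the number of layers times the bits stored per cell. This is a simplifying assumption that ignores cell pitch, peripheral circuitry, and yield, and the 200-layer value below is a hypothetical point on the roadmap mentioned above, not a specific product.

```python
# Bits stored per NAND cell for each cell type.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def relative_density(layers: int, cell_type: str,
                     base_layers: int = 176, base_cell: str = "TLC") -> float:
    """First-order bit density relative to a baseline: layers x bits per cell.

    Simplifying assumption: ignores cell pitch, peripheral circuitry, and yield.
    """
    return (layers * BITS_PER_CELL[cell_type]) / (base_layers * BITS_PER_CELL[base_cell])


for layers, cell in [(176, "TLC"), (176, "QLC"), (200, "TLC"), (200, "QLC")]:
    print(f"{layers}-layer {cell}: {relative_density(layers, cell):.2f}x")
```

Moving from TLC to QLC at the same layer count adds one third more bits per die. Each extra bit per cell also doubles the number of voltage states a cell must distinguish (8 for TLC, 16 for QLC), which is why QLC trades endurance and write performance for density.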

8. Data-centric Architecture

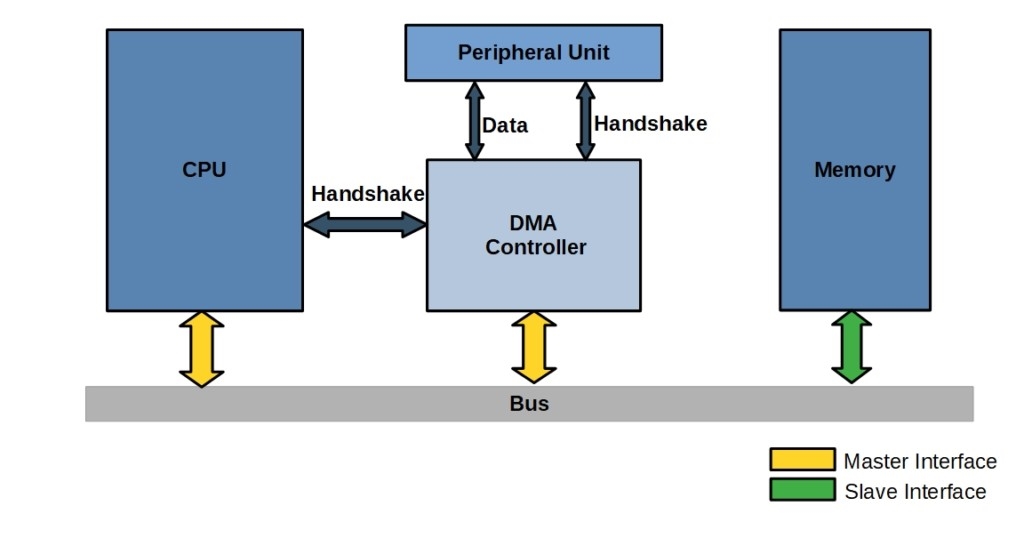

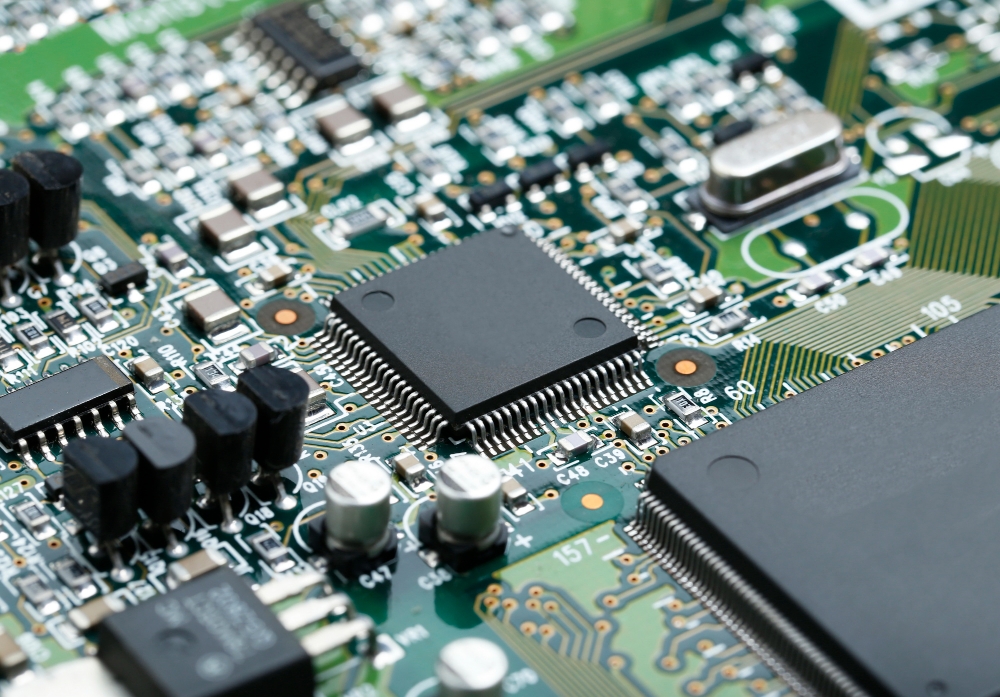

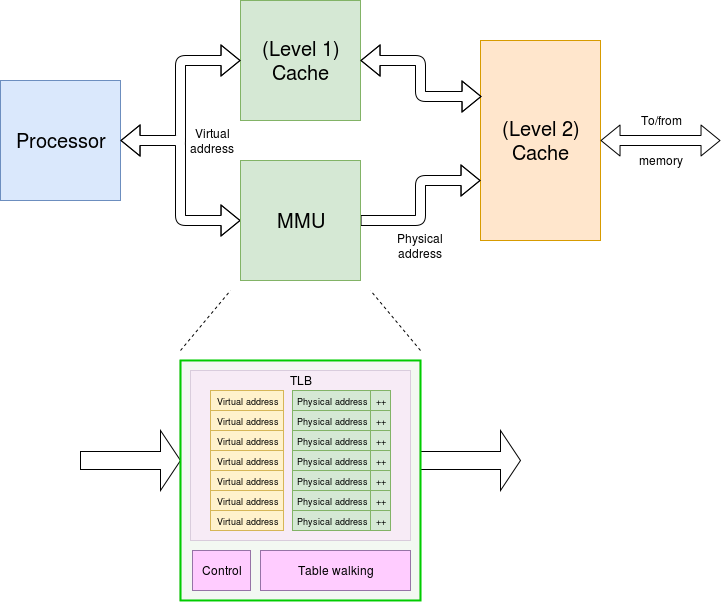

AI and real-time analytics demands have diversified compute beyond CPUs to include CPU+GPU+NPU+DPU. As CPU performance scaling slows and server architectures evolve toward composable designs, storage architectures will also shift to data-centric composable models to boost system performance.

Future storage systems will integrate diverse processors (CPU, DPU), memory pools, flash pools, and capacity disk pools connected by new data buses. This will enable data to be placed directly into memory or flash on arrival, avoiding CPU bottlenecks in data access.

9. AI-enabled Storage

AI techniques can predict trends in performance, capacity, and component failures to reduce incident rates. In complex fault scenarios, storage management systems augmented by large models can accelerate interactive diagnostics and assist operators in rapid problem localization, substantially shortening mean time to repair.
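Capacity trend prediction can be illustrated with the simplest possible model: an ordinary least-squares linear fit of used capacity over time, extrapolated to estimate when a pool fills up. This is a stand-in for the richer AI models a storage management system may use; the function names and the sample usage data are hypothetical.

```python
from typing import Optional


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct time points")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(days: list[float], used_tb: list[float],
                    capacity_tb: float) -> Optional[float]:
    """Days after the last sample until the linear trend reaches capacity.

    Returns None if usage is flat or shrinking (no projected fill date).
    """
    slope, intercept = fit_line(days, used_tb)
    if slope <= 0:
        return None
    full_day = (capacity_tb - intercept) / slope
    return max(0.0, full_day - days[-1])


# Hypothetical weekly samples of used capacity (TB) on a 600 TB pool.
sample_days = [0, 7, 14, 21, 28]
sample_used = [400, 414, 428, 442, 456]
print(days_until_full(sample_days, sample_used, 600))
```

Here usage grows by 2 TB per day, so the pool is projected to fill 72 days after the last sample; an operator alert could fire when that estimate drops below the lead time needed to add capacity.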

10. Storage Energy Efficiency

Under carbon neutrality goals, green low-carbon operation is a key direction for data centers. Storage accounts for more than 30% of data center IT energy consumption, so reducing storage energy use is essential for achieving net-zero emissions.

Consolidating protocols and eliminating isolated silos improves utilization. A single storage system supporting file, object, and HDFS protocols can meet diverse workloads and consolidate different storage types. Resource pooling increases utilization efficiency.

About 83% of storage energy is consumed by media. For equivalent capacity, SSDs consume 70% less energy and use 50% less space than HDDs. Deploying high-capacity SSDs and high-density disk enclosures increases capacity per watt and reduces processing and storage energy per unit of data, enabling more capacity in less space.
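The percentages above can be combined into a back-of-envelope estimate of the savings from replacing HDDs with SSDs. The sketch assumes that all media energy comes from HDDs that are replaced at equal capacity, that non-media energy (controllers, networking) is unchanged, and that storage accounts for exactly 30% of IT energy (the text says "more than 30%"); these are simplifying assumptions, not measured results.

```python
STORAGE_SHARE_OF_IT = 0.30     # "more than 30%" per the text; 0.30 used as a floor
MEDIA_SHARE_OF_STORAGE = 0.83  # share of storage energy consumed by media
SSD_MEDIA_SAVING = 0.70        # SSD vs HDD energy at equal capacity, per the text


def storage_energy_saving(media_share: float, media_saving: float) -> float:
    """Fraction of storage energy saved if media energy falls by `media_saving`."""
    return media_share * media_saving


def it_energy_saving(storage_share: float, media_share: float,
                     media_saving: float) -> float:
    """The same saving expressed as a fraction of total data center IT energy."""
    return storage_share * storage_energy_saving(media_share, media_saving)


print(f"storage energy saved: "
      f"{storage_energy_saving(MEDIA_SHARE_OF_STORAGE, SSD_MEDIA_SAVING):.1%}")
print(f"IT energy saved:      "
      f"{it_energy_saving(STORAGE_SHARE_OF_IT, MEDIA_SHARE_OF_STORAGE, SSD_MEDIA_SAVING):.1%}")
```

Under these assumptions, 0.83 × 0.70 ≈ 58% of storage energy, or 0.30 × 0.581 ≈ 17% of total IT energy, would be saved.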

ALLPCB
