Overview

Arm KleidiAI is a software library designed to improve AI performance on Arm CPUs. This article explains how to run the KleidiAI microkernel in a bare-metal environment and compares code generation across several C/C++ toolchains to identify more efficient builds.

Scope and tools

The benchmarks cover three compilers at several optimization levels. The work uses components from Arm Development Studio, including a Fixed Virtual Platform (FVP) and a license for Arm Compiler for Embedded (AC6). The article also describes how to inspect optimizations that the compiler performed or missed.

Setting up the bare-metal project

The three toolchains evaluated are:

- Arm Compiler for Embedded (AC6)

- Arm GNU Toolchain (GCC)

- Arm Toolchain for Embedded (ATfE), a next-generation Arm embedded compiler that was in beta at the time of writing

To run the KleidiAI kernel in a bare-metal project, follow the guidance from the Kleidi documentation. This work started from C++ example projects in Arm Development Studio: startup_Armv8-Ax1_AC6_CPP for AC6, startup_Armv8-Ax1_GCC_CPP for GCC, and a port included in the ATfE test package. Each project implements the same functionality but requires tweaks to Makefiles and linker scripts.

Toolchain fixes and changes

After pasting the code from the Kleidi guide, the following simple changes were required so all three projects run correctly:

- Include float.h to define FLT_MAX

- Add the include path for KleidiAI headers

- Change the target architecture to armv8.2-a+dotprod+i8mm
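The first and third fixes can be sanity-checked from C. The sketch below assumes that FLT_MAX is needed for clamp bounds; the article only states that float.h is required. The type and helper names are hypothetical. The `__ARM_FEATURE_DOTPROD` and `__ARM_FEATURE_MATMUL_INT8` macros are ACLE feature-test macros that a compiler defines when the selected target architecture enables those extensions.

```c
#include <assert.h>
#include <float.h>    /* FLT_MAX is declared here */
#include <stdbool.h>

/* Hypothetical type: lower and upper bound applied to kernel outputs. */
typedef struct {
    float min;
    float max;
} clamp_range_t;

/* Hypothetical helper: bounds wide enough to leave finite results unclamped. */
clamp_range_t unclamped_range(void) {
    clamp_range_t range = { -FLT_MAX, FLT_MAX };
    return range;
}

/* Hypothetical helper: scalar clamp, shown only to illustrate the bounds. */
float clamp_f32(float value, clamp_range_t range) {
    assert(range.min <= range.max);
    if (value < range.min) return range.min;
    if (value > range.max) return range.max;
    return value;
}

/* True only if the build enabled both extensions, for example through
 * a target architecture of armv8.2-a+dotprod+i8mm. */
bool build_enables_dotprod_and_i8mm(void) {
#if defined(__ARM_FEATURE_DOTPROD) && defined(__ARM_FEATURE_MATMUL_INT8)
    return true;
#else
    return false;
#endif
}
```

Calling `build_enables_dotprod_and_i8mm()` early in `main()` is one way to catch a Makefile that still targets the original architecture setting.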

Running the code requires an Arm core that supports the i8mm extension. This extension is optional across Armv8.2-A through Armv8.5-A and mandatory from Armv8.6-A onward in implementations that support Advanced SIMD. Arm Neoverse V1 is a suitable choice. The FVP configuration used was -C cluster0.NUM_CORES=1 -C bp.secure_memory=false -C cache_state_model=0.

A read-modify-write sequence in the startup code that sets SMPEN caused problems on the Neoverse V1 FVP. The startup code was adapted from Cortex-A startup sequences, so removing that sequence resolved the issue for this evaluation. A full review of startup code against Neoverse requirements would be appropriate in a production port, but removing the sequence was sufficient for these experiments.

Some code was added to populate matrices with random data. This is not strictly necessary because memory already contained repeating nonzero patterns, but random initialization was useful for consistent benchmarking.
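The article does not show its random fill code. A minimal sketch, assuming float matrices and a fixed seed so that every toolchain build sees identical input, could look like the following. The function names are hypothetical, and the generator is a standard xorshift32, which may differ from the one actually used.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One xorshift32 step. The state must be nonzero. */
static uint32_t xorshift32(uint32_t *state) {
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Hypothetical helper: fills dst with values in [-1, 1).
 * The same seed always produces the same sequence. */
void fill_random_f32(float *dst, size_t count, uint32_t seed) {
    assert(dst != NULL || count == 0);
    uint32_t state = (seed != 0u) ? seed : 1u;   /* avoid the all-zero state */
    for (size_t i = 0; i < count; ++i) {
        uint32_t bits24 = xorshift32(&state) >> 8;            /* 0 .. 2^24 - 1 */
        dst[i] = (float)bits24 * (2.0f / 16777216.0f) - 1.0f;
    }
}
```

A small generator like this avoids depending on the C library's rand(), whose implementation differs between ArmCLib, newlib, and picolibc.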

Project-specific fixes included:

- ATfE project: RAM size in the linker script was set to 0x80000, which is too small and causes heap/stack collisions. The solution is to set a larger RAM range in the linker script, consistent with the FVP default RAM.

- GCC project: .init_array was assigned to address 0x80100000, which conflicted with .eh_frame. Removing that manual address assignment resolved the conflict.

After these changes, all three toolchains were able to run the KleidiAI kernel on bare metal. The next step was performance testing.

Benchmark method and results

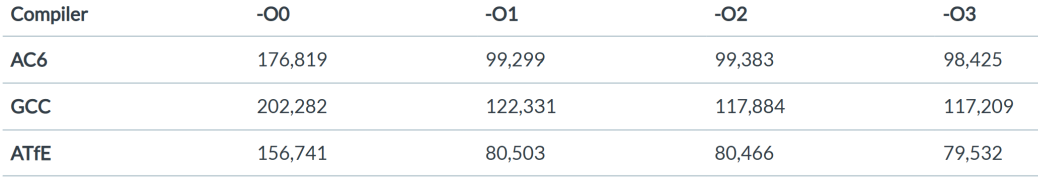

The FVP cycle counter was used as the performance metric. While not perfect, it is sufficient for this comparison because all three compilers ran the same workload and any measurement errors should be consistent across runs. The cycle counts were recorded at -O0, -O1, -O2, and -O3 while performing processor startup, matrix setup, and executing the KleidiAI kernel.
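The article does not say how the FVP cycle counter was read. One possible in-program approach on AArch64 is the PMU cycle counter register PMCCNTR_EL0, sketched below. The `BARE_METAL_PMU` macro and all function names are hypothetical; without that macro the code falls back to `clock()` so that it still builds on a host machine. Depending on the exception level and security state, additional PMU filter setup may be needed, which this sketch omits.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#if defined(__aarch64__) && defined(BARE_METAL_PMU)
#define USE_PMU_CYCLE_COUNTER 1
#else
#define USE_PMU_CYCLE_COUNTER 0
#include <time.h>   /* host fallback only */
#endif

/* Enables the PMU cycle counter (no-op in the host fallback). */
void enable_cycle_counter(void) {
#if USE_PMU_CYCLE_COUNTER
    uint64_t pmcr;
    __asm__ volatile("mrs %0, pmcr_el0" : "=r"(pmcr));
    pmcr |= (1u << 0) | (1u << 2);  /* E: enable counters, C: reset cycle counter */
    __asm__ volatile("msr pmcr_el0, %0" : : "r"(pmcr));
    __asm__ volatile("msr pmcntenset_el0, %0" : : "r"((uint64_t)1 << 31));
    __asm__ volatile("isb");
#endif
}

/* Returns the current cycle count, or clock() ticks in the host fallback. */
uint64_t read_cycle_counter(void) {
#if USE_PMU_CYCLE_COUNTER
    uint64_t cycles;
    __asm__ volatile("isb\n\tmrs %0, pmccntr_el0" : "=r"(cycles));
    return cycles;
#else
    return (uint64_t)clock();
#endif
}

/* Runs the workload once and returns the elapsed count. */
uint64_t measure_cycles(void (*workload)(void)) {
    assert(workload != NULL);
    uint64_t start = read_cycle_counter();
    workload();
    uint64_t end = read_cycle_counter();
    return end - start;
}

/* Stand-in workload for illustration: sums 0..999 into a global. */
volatile uint32_t sample_sink;

void sample_workload(void) {
    uint32_t sum = 0;
    for (uint32_t i = 0; i < 1000u; ++i) {
        sum += i;
    }
    sample_sink = sum;
}
```

Measuring deltas this way makes it possible to time individual regions of the program rather than only the whole run.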

Two observations stand out. First, most performance gains appear at -O1. The -O2 and -O3 levels provide smaller incremental improvements, with GCC showing a somewhat larger benefit at higher optimization levels. This is expected because the KleidiAI kernel already contains extensive hand-written assembly; the surrounding code is short and simple, so aggressive compiler optimizations have limited additional benefit.

Second, ATfE appears noticeably faster than AC6 and GCC. That advantage prompted a deeper investigation into where the gains originate.

Deeper analysis

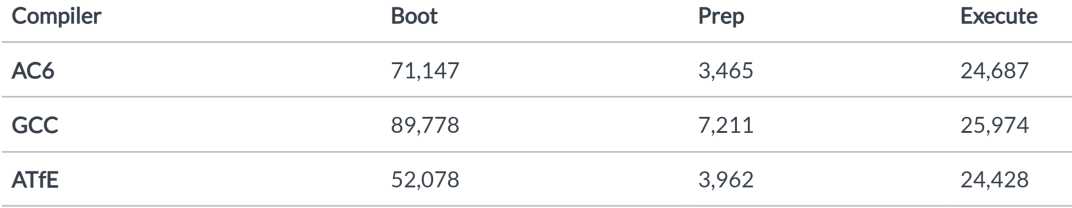

To simplify analysis, measurements focused on the -O1 optimization level, since most gains were observable there. The code was separated into three parts to improve analysis granularity:

- Startup: all startup code up to entry of main()

- Prepare: allocate memory for matrices and fill them with random data

- Execute: run the KleidiAI kernel

Cycle counts were recorded for each stage.

Execution time of the KleidiAI kernel was similar across compilers: ATfE was slightly ahead of AC6 by about 1%, and GCC was a bit behind. Re-running this test at -O2 and -O3 showed GCC closing the gap and occasionally overtaking at -O3, consistent with higher-level optimizations producing further wins.

In the Prepare stage, ATfE and AC6 were close while GCC lagged. At -O2 and -O3 GCC reduced some of the difference, suggesting that different compilers enable different optimizations at different levels.

The primary reason ATfE reduced overall elapsed time was faster startup. One hypothesis is that ATfE uses a lighter C library (picolibc) in the runtime setup, compared with ArmCLib used with AC6 or newlib used with GCC. In this benchmark, the test project contains a small amount of application code, so startup overhead is a large fraction of total runtime. For larger workloads, startup time would represent a smaller proportion of total run time, reducing the apparent advantage.
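The effect of workload size on the startup share is simple arithmetic: the share is the startup cycle count divided by the total across all three stages. The sketch below uses a hypothetical struct and function, and any numbers passed to it are illustrative rather than measurements from the article.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical container for per-stage cycle counts. */
typedef struct {
    uint64_t startup;
    uint64_t prepare;
    uint64_t execute;
} stage_cycles_t;

/* Fraction of total cycles spent in startup, in the range [0, 1]. */
double startup_share(stage_cycles_t cycles) {
    uint64_t total = cycles.startup + cycles.prepare + cycles.execute;
    assert(total > 0);
    return (double)cycles.startup / (double)total;
}
```

With made-up counts of 100 startup, 100 prepare, and 200 execute cycles, startup accounts for a quarter of the run. Keeping startup fixed and growing execute to 7800 cycles drops the share to 1.25%, so a faster C library initialization would barely register in the total.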

Analyzing compiler optimizations

To see which optimizations ATfE applied or missed, the -Rpass and -Rpass-missed options are useful. Each option accepts =.* for all passes or =<pass> for a specific pass. For example, -Rpass=inline shows which calls were inlined, while -Rpass-missed=inline reports which calls were not inlined. -Rpass-missed is particularly valuable because it reveals how source code might be refactored to enable more optimizations.
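A small file is enough to try the inlining remarks. The sketch below is not taken from the KleidiAI sources: the file name and function names are hypothetical, and the command in the comment omits the target and include flags that a real bare-metal build would need.

```c
/* Hypothetical file name: remarks_demo.c
 * With a Clang-based toolchain, inlining remarks can be requested with:
 *   clang -O1 -Rpass=inline -Rpass-missed=inline -c remarks_demo.c
 */
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Small helper: a typical inlining candidate (linear congruential step). */
static uint32_t next_random(uint32_t *state) {
    assert(state != NULL);
    *state = *state * 1664525u + 1013904223u;
    return *state;
}

/* Caller: the remark, if any, is reported at the call site in this loop. */
uint32_t sum_of_randoms(uint32_t seed, uint32_t count) {
    uint32_t state = seed;
    uint32_t sum = 0;
    for (uint32_t i = 0; i < count; ++i) {
        sum += next_random(&state);
    }
    return sum;
}
```

Whether the call is actually inlined depends on the compiler version and optimization level, which is exactly what the remarks are meant to reveal.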

A quick review of ATfE optimization reports at -O0, -O1, -O2, and -O3 showed the following patterns:

Even at -O0 the compiler inlined some Arm C Language Extensions (ACLE) intrinsics, such as vaddq_s16, since those map to single instructions and inlining avoids call overhead without a meaningful code-size tradeoff.
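For readers unfamiliar with ACLE, the following sketch shows vaddq_s16 in use. The wrapper function is hypothetical and not part of KleidiAI. A scalar fallback is included, as an assumption for portability, so the same file builds on hosts without Advanced SIMD.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#if defined(__ARM_NEON)
#include <arm_neon.h>
#endif

/* Hypothetical wrapper: adds two arrays of exactly 8 int16_t lanes. */
void add_i16x8(const int16_t *a, const int16_t *b, int16_t *out) {
    assert(a != NULL && b != NULL && out != NULL);
#if defined(__ARM_NEON)
    int16x8_t va = vld1q_s16(a);         /* load 8 lanes */
    int16x8_t vb = vld1q_s16(b);
    vst1q_s16(out, vaddq_s16(va, vb));   /* one vector ADD, then store */
#else
    /* Scalar fallback: add in unsigned arithmetic, then narrow.
     * Common compilers wrap on the narrowing conversion. */
    for (size_t i = 0; i < 8; ++i) {
        out[i] = (int16_t)(uint16_t)((uint16_t)a[i] + (uint16_t)b[i]);
    }
#endif
}
```

On an Arm build, the body reduces to two loads, one vector add, and one store once the intrinsics are inlined.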

At -O1 the compiler performed a large number of function inlines, especially for small functions like the random number generator. It also hoisted expressions or instructions out of loops when they do not change per iteration.

At -O2 the compiler began loop vectorization, with some vectorizations deferred to -O3. The compiler uses heuristics to weigh the cost and benefit of each optimization. As with inlining, different loops may use different vectorization strategies even at the same optimization level.
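A loop of the following shape is a typical vectorization candidate. This is an illustrative sketch rather than KleidiAI code, and the function name is hypothetical. With a Clang-based compiler, -Rpass=loop-vectorize and -Rpass-missed=loop-vectorize report whether the loop was vectorized.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* dst[i] += scale * src[i] for each element.
 * restrict tells the compiler the arrays do not overlap, which removes
 * one common obstacle to vectorization. */
void scale_accumulate_u32(uint32_t *restrict dst, const uint32_t *restrict src,
                          uint32_t scale, size_t count) {
    assert((dst != NULL && src != NULL) || count == 0);
    for (size_t i = 0; i < count; ++i) {
        dst[i] += scale * src[i];
    }
}
```

Removing the restrict qualifiers and comparing the remarks is a quick way to see how aliasing assumptions influence the compiler's decision.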

At -O3 the compiler unrolled some loops.
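Loop unrolling replicates the loop body so that fewer branch and counter updates execute per element. The sketch below writes out by hand what an unroll factor of 4 looks like; the functions are hypothetical and the factor the compiler actually picks may differ. With a Clang-based compiler, -Rpass=loop-unroll reports the loops it unrolled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Straightforward loop: one add and one branch per element. */
uint32_t sum_rolled(const uint32_t *values, size_t count) {
    assert(values != NULL || count == 0);
    uint32_t sum = 0;
    for (size_t i = 0; i < count; ++i) {
        sum += values[i];
    }
    return sum;
}

/* Same result, unrolled by 4 with a remainder loop for leftover elements. */
uint32_t sum_unrolled_by_4(const uint32_t *values, size_t count) {
    assert(values != NULL || count == 0);
    uint32_t sum = 0;
    size_t i = 0;
    for (; i + 4 <= count; i += 4) {
        sum += values[i] + values[i + 1] + values[i + 2] + values[i + 3];
    }
    for (; i < count; ++i) {
        sum += values[i];
    }
    return sum;
}
```

Unsigned integers are used so that both versions give bit-identical results regardless of the order of additions.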

The hoisting mechanism is worth illustrating. Consider this simplified excerpt from a KleidiAI source file:

    for (size_t dst_row_idx = 0; dst_row_idx < dst_num_rows; ++dst_row_idx) {
        for (size_t dst_byte_idx = 0; dst_byte_idx < dst_num_bytes_per_row; ++dst_byte_idx) {
            const size_t block_idx = dst_byte_idx / block_length_in_bytes;
            const size_t nr_idx = block_idx % nr;
            const size_t n0_idx = dst_row_idx * nr + nr_idx;
        }
    }

The compiler recognizes that the multiplication in n0_idx need not be inside the inner loop because dst_row_idx and nr are constant within that inner loop:

    src/kai_rhs_pack_nxk_qsi4cxp_qs4cxs1s0.c:96:47: remark: hoisting mul [-Rpass=licm]
       96 |             const size_t n0_idx = dst_row_idx * nr + nr_idx;
          |                                               ^

The compiler hoists the multiplication to the outer loop, producing code similar to:

    for (size_t dst_row_idx = 0; dst_row_idx < dst_num_rows; ++dst_row_idx) {
        const size_t hoist_temp = dst_row_idx * nr;
        for (size_t dst_byte_idx = 0; dst_byte_idx < dst_num_bytes_per_row; ++dst_byte_idx) {
            const size_t block_idx = dst_byte_idx / block_length_in_bytes;
            const size_t nr_idx = block_idx % nr;
            const size_t n0_idx = hoist_temp + nr_idx;
        }
    }

Developers can apply such transformations manually, but doing so can harm readability and maintainability. Because the compiler applies them automatically, developers can focus on functional clarity.
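The equivalence of the two loop forms can be checked with a small harness. The functions below are hypothetical wrappers around the excerpted loops; each sums every n0_idx so the loops have an observable result.

```c
#include <assert.h>
#include <stddef.h>

/* Original form: the multiplication sits inside the inner loop. */
size_t checksum_original(size_t dst_num_rows, size_t dst_num_bytes_per_row,
                         size_t block_length_in_bytes, size_t nr) {
    assert(block_length_in_bytes > 0 && nr > 0);
    size_t checksum = 0;
    for (size_t dst_row_idx = 0; dst_row_idx < dst_num_rows; ++dst_row_idx) {
        for (size_t dst_byte_idx = 0; dst_byte_idx < dst_num_bytes_per_row; ++dst_byte_idx) {
            const size_t block_idx = dst_byte_idx / block_length_in_bytes;
            const size_t nr_idx = block_idx % nr;
            const size_t n0_idx = dst_row_idx * nr + nr_idx;
            checksum += n0_idx;
        }
    }
    return checksum;
}

/* Hoisted form: the loop-invariant product is computed once per row. */
size_t checksum_hoisted(size_t dst_num_rows, size_t dst_num_bytes_per_row,
                        size_t block_length_in_bytes, size_t nr) {
    assert(block_length_in_bytes > 0 && nr > 0);
    size_t checksum = 0;
    for (size_t dst_row_idx = 0; dst_row_idx < dst_num_rows; ++dst_row_idx) {
        const size_t hoist_temp = dst_row_idx * nr;
        for (size_t dst_byte_idx = 0; dst_byte_idx < dst_num_bytes_per_row; ++dst_byte_idx) {
            const size_t block_idx = dst_byte_idx / block_length_in_bytes;
            const size_t nr_idx = block_idx % nr;
            const size_t n0_idx = hoist_temp + nr_idx;
            checksum += n0_idx;
        }
    }
    return checksum;
}
```

Both functions return the same value for any valid arguments, which is the property the compiler must prove before it hoists the multiplication.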

ATfE -Rpass output contains extensive information on applied and missed optimizations. These reports help developers understand how the compiler transforms code and guide source changes that enable further optimization. This is a large topic and will be explored further in follow-up posts.

Conclusion

Arm Development Studio provides a suitable toolset for experimenting with KleidiAI on bare metal, including example projects, an FVP for testing, and AC6 licensing. When evaluating compiler performance, it is important to gather relevant data for all runtime phases. For example, a superficial conclusion that "ATfE builds are about 20% faster than AC6 builds" is easy to reach if startup-heavy benchmarks are used. ATfE makes heuristic decisions about optimizations based on estimated costs and benefits and provides options to inspect both applied and missed optimizations. Use of those reports can guide source changes that enable additional compiler optimizations.

ALLPCB
