RDMA: Redefining Remote Memory Access

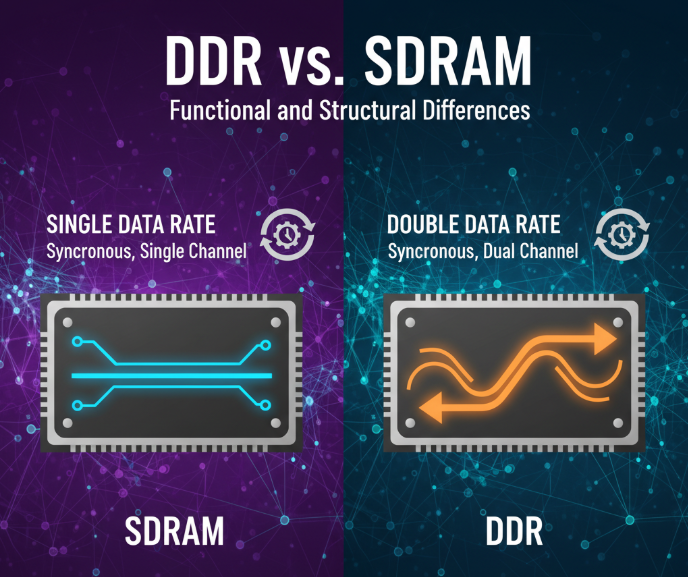

Remote Direct Memory Access (RDMA) is a high-speed networking technology that has fundamentally changed how programs access memory on remote computing nodes. Its performance advantage comes from bypassing the operating system kernel, including the socket layer and the TCP/IP stack, on the data path. This reduces the CPU overhead associated with kernel operations and lets nodes read and write each other's memory directly through their Network Interface Cards (NICs), which are called Host Channel Adapters (HCAs) in InfiniBand terminology.

RDMA Hardware Implementations: InfiniBand, RoCE, and iWARP

RDMA is primarily implemented using three technologies: InfiniBand, RoCE, and iWARP. Of these, InfiniBand and RoCE are the most widely adopted and are known for their high performance. They are particularly effective in bandwidth- and latency-sensitive scenarios such as large-scale model training, where RDMA's low latency and high efficiency make it possible to build high-performance networks and significantly improve data transmission.

Inside InfiniBand: High-Bandwidth Networking at Its Peak

InfiniBand currently supports mainstream 100G and 200G transmission speeds, with Enhanced Data Rate (EDR, 100G) and High Data Rate (HDR, 200G) being common terms. While InfiniBand offers excellent performance, its high cost often limits its use in general applications. However, it remains essential in supercomputing centers at universities and research institutions, where it supports critical computational tasks.

Unlike traditional switched networks, InfiniBand networks adopt a "fat-tree" topology to ensure seamless communication between any two computing nodes. This topology includes a core layer responsible for traffic forwarding and a leaf layer that connects to compute nodes. The high cost of implementation arises from the need for 36-port aggregation switches, with half of the ports connecting to compute nodes and the other half to core switches. Each cable costs around USD 13,000, and redundancy is required to maintain lossless communication.
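The port split described above determines how large such a network can grow. The following is a minimal sizing sketch, assuming a two-tier leaf/core fat-tree built entirely from identical switches in which every leaf has one uplink to every core switch; the function name and the returned keys are illustrative, not part of any vendor tool.

```python
def two_tier_fat_tree(ports_per_switch: int) -> dict:
    """Size a two-tier fat-tree in which each leaf splits its ports evenly.

    Assumption: half of each leaf's ports face compute nodes, the other half
    face core switches, and each leaf has exactly one link to every core switch.
    """
    if ports_per_switch <= 0 or ports_per_switch % 2 != 0:
        raise ValueError("ports_per_switch must be a positive even number")

    down_ports = ports_per_switch // 2   # leaf ports facing compute nodes
    up_ports = ports_per_switch // 2     # leaf ports facing core switches
    core_switches = up_ports             # one uplink per core switch
    max_leaves = ports_per_switch        # each core port serves one leaf

    return {
        "nodes_per_leaf": down_ports,
        "core_switches": core_switches,
        "max_leaf_switches": max_leaves,
        "max_compute_nodes": max_leaves * down_ports,
        "leaf_to_core_cables": max_leaves * up_ports,
    }


if __name__ == "__main__":
    for key, value in two_tier_fat_tree(36).items():
        print(f"{key}: {value}")
```

With 36-port switches this model gives 18 compute nodes per leaf and at most 648 compute nodes in total, which shows why switch and cable counts grow quickly as the cluster scales.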

InfiniBand delivers unmatched bandwidth and low latency. According to data from Wikipedia, its latency is significantly lower than that of Ethernet (100 nanoseconds versus 230 nanoseconds), making it a core technology in top global supercomputers, adopted by companies such as Microsoft and NVIDIA and by U.S. national laboratories.

Exploring RoCE: A Cost-Effective RDMA Alternative

RoCE (RDMA over Converged Ethernet) provides RDMA capabilities over Ethernet. While not low-cost per se, it is often chosen as a more economical route to RDMA deployment, and its rapid development has made it a competitive substitute for InfiniBand, particularly in cost-sensitive scenarios. However, achieving a truly lossless network with RoCE remains challenging, and the total network cost typically remains above 50% of that of InfiniBand.

Enabling Large-Scale Model Training with GPUDirect RDMA

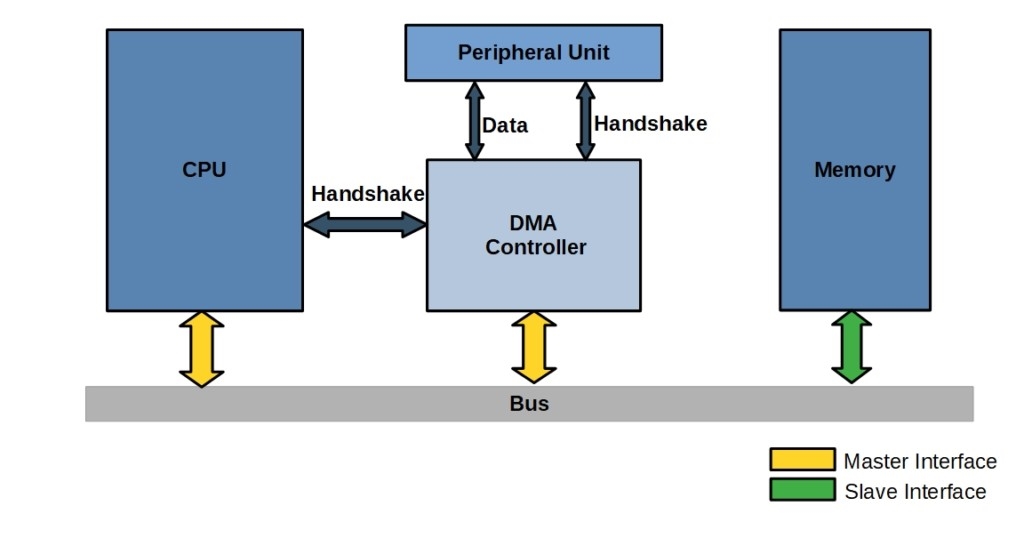

In large-scale model training, communication costs between nodes are critical. GPUDirect RDMA, a solution combining InfiniBand and GPU technologies, enables direct GPU-to-GPU data exchange across nodes, bypassing the CPU and system memory. This is particularly advantageous since large models are typically stored in GPU memory. Unlike traditional approaches that copy model data to the CPU for inter-node transfer, GPUDirect RDMA allows direct GPU communication, significantly improving efficiency and performance.
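The difference between the two data paths can be illustrated with a deliberately simple timing model. This is only a sketch: it assumes the staging copies and the network transfer run one after another with no overlap, that the network link is the bottleneck on the direct path, and the bandwidth figures in the usage lines are hypothetical placeholders rather than measurements.

```python
def staged_transfer_seconds(size_gb: float, pcie_gb_per_s: float,
                            network_gb_per_s: float) -> float:
    """Estimate transfer time when data is bounced through host memory."""
    copy_out = size_gb / pcie_gb_per_s     # sender: GPU memory -> host memory
    on_wire = size_gb / network_gb_per_s   # host memory -> remote host memory
    copy_in = size_gb / pcie_gb_per_s      # receiver: host memory -> GPU memory
    return copy_out + on_wire + copy_in


def direct_transfer_seconds(size_gb: float, network_gb_per_s: float) -> float:
    """Estimate transfer time when the NIC reads and writes GPU memory directly."""
    return size_gb / network_gb_per_s


if __name__ == "__main__":
    # Hypothetical inputs: 10 GB payload, 25 GB/s host copies, 12.5 GB/s network.
    staged = staged_transfer_seconds(10, 25, 12.5)
    direct = direct_transfer_seconds(10, 12.5)
    print(f"staged: {staged:.2f} s, direct: {direct:.2f} s")
```

Real systems pipeline these stages, so the gap is usually smaller than this model suggests, but the sketch shows where the extra copies add time.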

Strategic Network Configuration for Large-Scale Models

Optimizing performance in large-model applications hinges on precise configuration, particularly between GPUs and InfiniBand NICs. NVIDIA's DGX systems illustrate a 1:1 pairing strategy between GPUs and InfiniBand NICs. A typical node may include nine NICs: one for storage and eight for GPUs. While ideal, this configuration is costly. A more cost-effective approach is a 1:4 NIC-to-GPU ratio.

Both GPUs and NICs connect via PCIe switches, each of which generally supports two GPUs. Ideally, each GPU would have dedicated access to a NIC. When two GPUs share a NIC and a switch, they compete for the same bandwidth, which can create performance bottlenecks.

The number of NICs directly affects contention and communication efficiency. A single 100 Gbps NIC provides roughly 12 GB/s of bandwidth (100 Gbit/s divided by 8 is 12.5 GB/s at raw line rate), and aggregate bandwidth scales nearly linearly with additional NICs. For example, pairing eight H100 GPUs with eight 400G InfiniBand NDR NICs yields exceptional data transfer performance.
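The bandwidth arithmetic behind these configurations can be sketched in a few lines. The example below assumes raw line rate with no encoding or protocol overhead (100 Gbit/s is 12.5 GB/s before overhead, consistent with the roughly 12 GB/s figure above) and perfectly linear scaling across NICs; the function names are illustrative.

```python
def gbps_to_gbytes_per_s(link_gbps: float) -> float:
    """Convert a link rate in gigabits per second to gigabytes per second."""
    return link_gbps / 8


def node_bandwidth(num_gpus: int, num_nics: int, link_gbps: float) -> dict:
    """Aggregate and per-GPU network bandwidth for one node.

    Assumption: bandwidth scales linearly with the NIC count and is shared
    evenly among the GPUs behind each NIC.
    """
    total = num_nics * gbps_to_gbytes_per_s(link_gbps)
    return {
        "gpus_per_nic": num_gpus / num_nics,
        "total_gb_per_s": total,
        "per_gpu_gb_per_s": total / num_gpus,
    }


if __name__ == "__main__":
    print(gbps_to_gbytes_per_s(100))   # one 100 Gbps NIC at raw line rate
    print(node_bandwidth(8, 8, 400))   # 1:1 pairing, eight 400G NICs
    print(node_bandwidth(8, 2, 400))   # 1:4 NIC-to-GPU ratio
```

Under these assumptions, the 1:1 pairing with 400G NICs gives each GPU 50 GB/s, while the 1:4 ratio leaves each GPU with 12.5 GB/s, which makes the cost of NIC sharing concrete.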

Equipping each GPU with a dedicated NIC is the optimal setup to minimize contention and maximize communication efficiency and overall system performance.

Rail-Optimized Topology for High-Performance Model Networks

Designing a high-performance rail-style topology is critical for large-scale model training. This approach is an evolution of the traditional fat-tree architecture used in high-performance computing (HPC).

The illustrated architecture contrasts the basic fat-tree layout with the rail-optimized version. Two MQM8700 HDR switches form the network core, connected via four HDR cables for low-latency, high-bandwidth communication. Each DGX GPU node is equipped with nine InfiniBand NICs (labeled HCAs), one dedicated to storage and eight to model training.

The wiring strategy connects HCA1, HCA3, HCA5, and HCA7 to the first HDR switch, while HCA2, HCA4, HCA6, and HCA8 link to the second. This symmetrical design ensures multipath, high-efficiency data transport for large-scale parallel computation.

To maintain an unblocked and optimized network, each DGX node's eight NICs are directly linked to individual leaf switches, eight in total. HCA1 connects to switch 1, HCA2 to switch 2, and so forth. Two spine switches interconnect the four blue-marked leaf switches via 80 cables. The leaf switches are positioned in the lower layer and physically connected to compute nodes.
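The two wiring schemes described in this section can be written down as simple mappings. The sketch below is illustrative only: node numbers, the `HCA<k>` labels, and the function names are assumptions used to express the rules stated in the text (odd and even HCAs split across two switches in the basic layout, and HCA k of every node attached to leaf switch k in the rail-optimized layout).

```python
def two_switch_wiring(num_hcas: int = 8) -> dict:
    """Basic layout: odd-numbered HCAs go to switch 1, even-numbered to switch 2."""
    return {f"HCA{k}": 1 if k % 2 == 1 else 2 for k in range(1, num_hcas + 1)}


def rail_wiring(num_nodes: int, num_hcas: int = 8) -> dict:
    """Rail-optimized layout: HCA k of every node connects to leaf switch k."""
    return {
        (node, f"HCA{k}"): k
        for node in range(1, num_nodes + 1)
        for k in range(1, num_hcas + 1)
    }


def rail_members(wiring: dict, leaf_switch: int) -> list:
    """List the (node, HCA) pairs attached to one leaf switch, i.e. one rail."""
    return sorted(port for port, leaf in wiring.items() if leaf == leaf_switch)


if __name__ == "__main__":
    print(two_switch_wiring())
    print(rail_members(rail_wiring(num_nodes=4), leaf_switch=3))
```

In the rail-optimized mapping, every port on a given leaf switch carries the same HCA index, so GPUs with the same position in different nodes reach each other through a single leaf switch.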

This setup offers excellent scalability and ultra-low latency, eliminating data transfer bottlenecks. Each NIC can directly communicate with any other in the network at maximum speed, allowing seamless, real-time remote memory access and enhancing GPU collaboration in large-scale parallel computing.

Choosing Between InfiniBand and RoCE

In high-performance, lossless network environments, the decision to adopt InfiniBand or RoCE should align closely with the application's requirements and existing infrastructure. Both technologies offer low latency, high throughput, and minimal CPU overhead, making them highly suitable for HPC deployments.

ALLPCB
