Overview

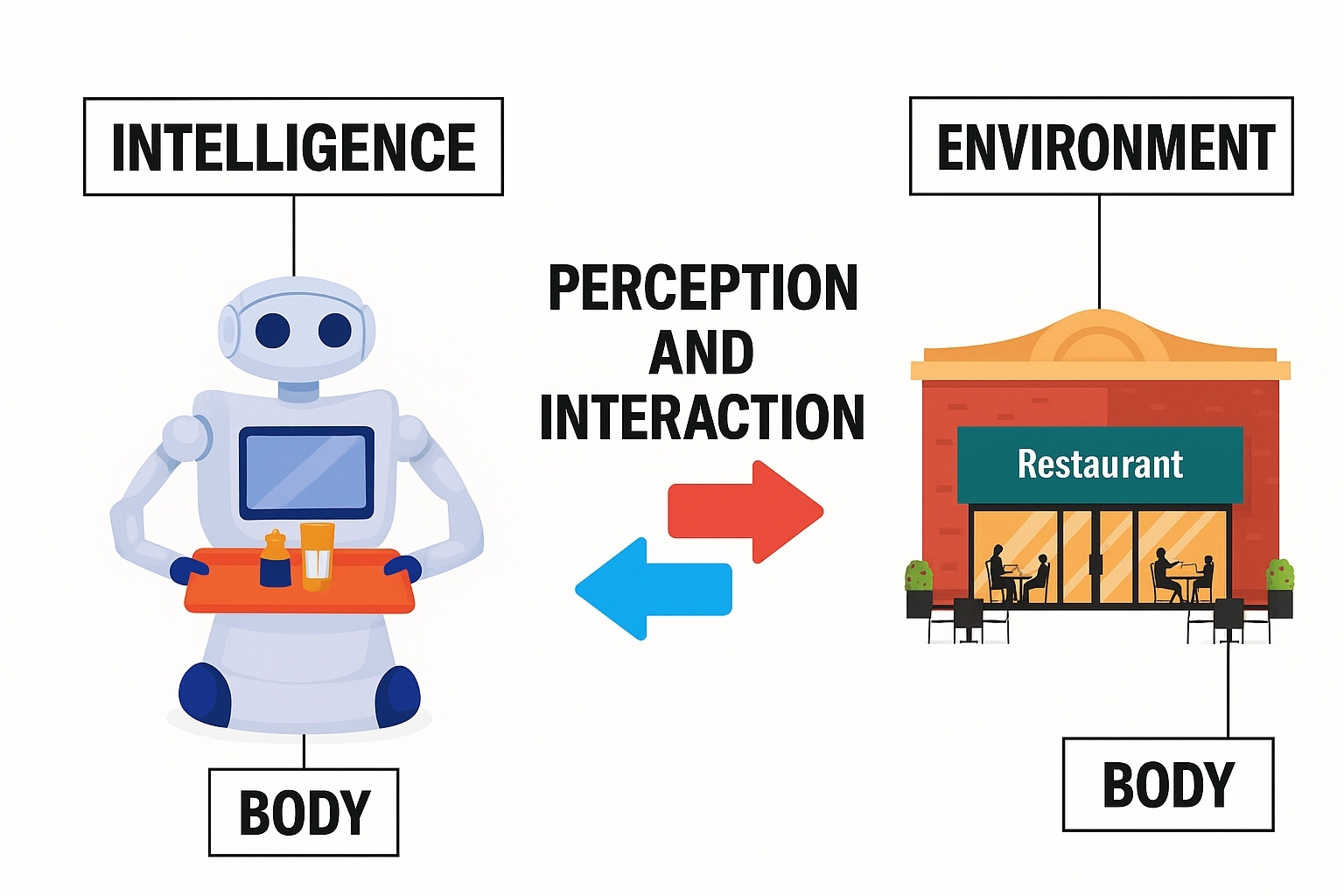

Edge AI deploys artificial intelligence algorithms and models on edge devices close to the data source, so that data can be processed, analyzed, and acted on locally without being transmitted to remote cloud servers. The goal is to move AI capability from the cloud down to the devices where the data is generated.

Distributed Architecture

Edge AI uses a distributed computing architecture that shifts compute tasks from a centralized cloud to individual edge devices. Edge devices can include smartphones, smart cameras, industrial sensors, smart home devices, and so on. These devices have varying levels of compute capability and can process collected data locally, reducing dependence on cloud compute resources. Data collection, preprocessing, analysis, and decision making occur near the data source, avoiding the network latency and bandwidth costs of sending large volumes of data to the cloud. For example, in smart surveillance, a camera can analyze video locally to detect anomalous behavior and raise an alert immediately without uploading all video to the cloud.

Model Lightweighting

Because edge devices have limited compute, storage, and power budgets, large, complex AI models typically cannot run efficiently on them. Models therefore need to be made lighter through model compression techniques such as pruning and quantization. Model compression in general reduces the number or size of parameters, lowering storage and compute cost. Pruning removes unimportant neurons or connections to improve runtime efficiency. Quantization converts floating-point parameters to lower-precision representations, such as 8-bit integers, reducing compute and memory use.
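The two techniques can be illustrated with a minimal, framework-free sketch. It assumes symmetric 8-bit quantization with a single scale per weight list and magnitude-based pruning; the function names and sample weights are hypothetical and are not taken from any particular toolkit.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the quantized integers."""
    return [v * scale for v in q]


def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights for illustration only.
weights = [0.82, -0.03, 0.40, -1.27, 0.01, 0.65]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
pruned = prune_by_magnitude(weights, sparsity=0.5)
```

Each quantized weight fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Production toolchains typically add per-channel scales, calibration data, and fine-tuning after pruning, none of which is shown here.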

Edge Device Deployment

Deploying lightweight models to edge devices requires consideration of the device hardware architecture, operating system, and development environment. Different edge devices may use different processor architectures, such as ARM or x86, and models must be optimized and adapted for these architectures to ensure efficient execution. Corresponding applications or software frameworks must be developed to invoke and manage AI models on the device.

Data Collection and Preprocessing

Edge devices collect data via various sensors such as cameras, microphones, temperature sensors, and accelerometers. Raw sensor data often contains noise, redundancy, and inconsistencies, so preprocessing is required. Preprocessing includes data cleaning, feature extraction, and normalization. Data cleaning removes noise and outliers. Feature extraction derives useful features from raw data to reduce dimensionality. Normalization maps data to a specific range to improve model convergence and accuracy.
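The three preprocessing steps can be sketched as follows, using only the Python standard library. The median-based outlier rule, the chosen features, and the temperature values are illustrative assumptions; a real device would pick rules suited to its sensor.

```python
from statistics import mean, median, pstdev


def remove_outliers(samples, max_dev):
    """Data cleaning: drop samples farther than max_dev from the median."""
    m = median(samples)
    return [x for x in samples if abs(x - m) <= max_dev]


def extract_features(samples):
    """Feature extraction: summarize a window with a few statistics."""
    return {
        "mean": mean(samples),
        "std": pstdev(samples),
        "peak": max(abs(x) for x in samples),
    }


def min_max_normalize(values, lo, hi):
    """Normalization: map values from the range [lo, hi] to [0, 1]."""
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical temperature readings in degrees Celsius; 85.0 is a glitch.
raw = [21.0, 21.4, 20.8, 85.0, 21.2]
clean = remove_outliers(raw, max_dev=5.0)
features = extract_features(clean)
normalized = min_max_normalize(clean, lo=0.0, hi=50.0)
```

Here the window of readings is reduced to three features, which is the dimensionality reduction the text describes, and the cleaned values are scaled with fixed bounds so that the same mapping can be applied to later windows.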

Real-Time Inference and Decision Making

Preprocessed data is fed to AI models deployed on edge devices for real-time inference. Models analyze inputs and produce outputs that the device uses to make decisions and perform actions. For example, in autonomous driving, an on-board compute platform processes data from cameras, radar, and LiDAR, performs environment perception, object detection, and path planning, and then controls vehicle speed, steering, and braking based on inference results.

Cloud Collaboration and Updates

Although edge AI emphasizes local processing, edge devices may still collaborate with the cloud when needed. For complex problems or large-scale analysis, a device can upload selected data to the cloud for further processing. The cloud can also push updated AI models, algorithms, and knowledge bases to edge devices to remotely update and improve system performance and adaptability.
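One possible way to decide when to involve the cloud is sketched below. It assumes the local model reports a confidence score and that model versions are dotted number strings; the function names and the 0.8 threshold are hypothetical, and the actual upload and download steps are omitted.

```python
def route_result(label, confidence, threshold=0.8):
    """Keep confident results on the device; defer uncertain ones to the cloud."""
    if confidence >= threshold:
        return ("local", label)
    return ("cloud", None)  # caller would upload the selected sample


def needs_update(local_version, cloud_version):
    """Return True if the cloud offers a newer model version (e.g. '1.10.0')."""
    def parse(version):
        return tuple(int(part) for part in version.split("."))

    return parse(cloud_version) > parse(local_version)


decision_confident = route_result("person", 0.93)
decision_uncertain = route_result("person", 0.41)
update_available = needs_update("1.9.0", "1.10.0")
```

Comparing versions as integer tuples rather than strings avoids treating "1.10.0" as older than "1.9.0".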

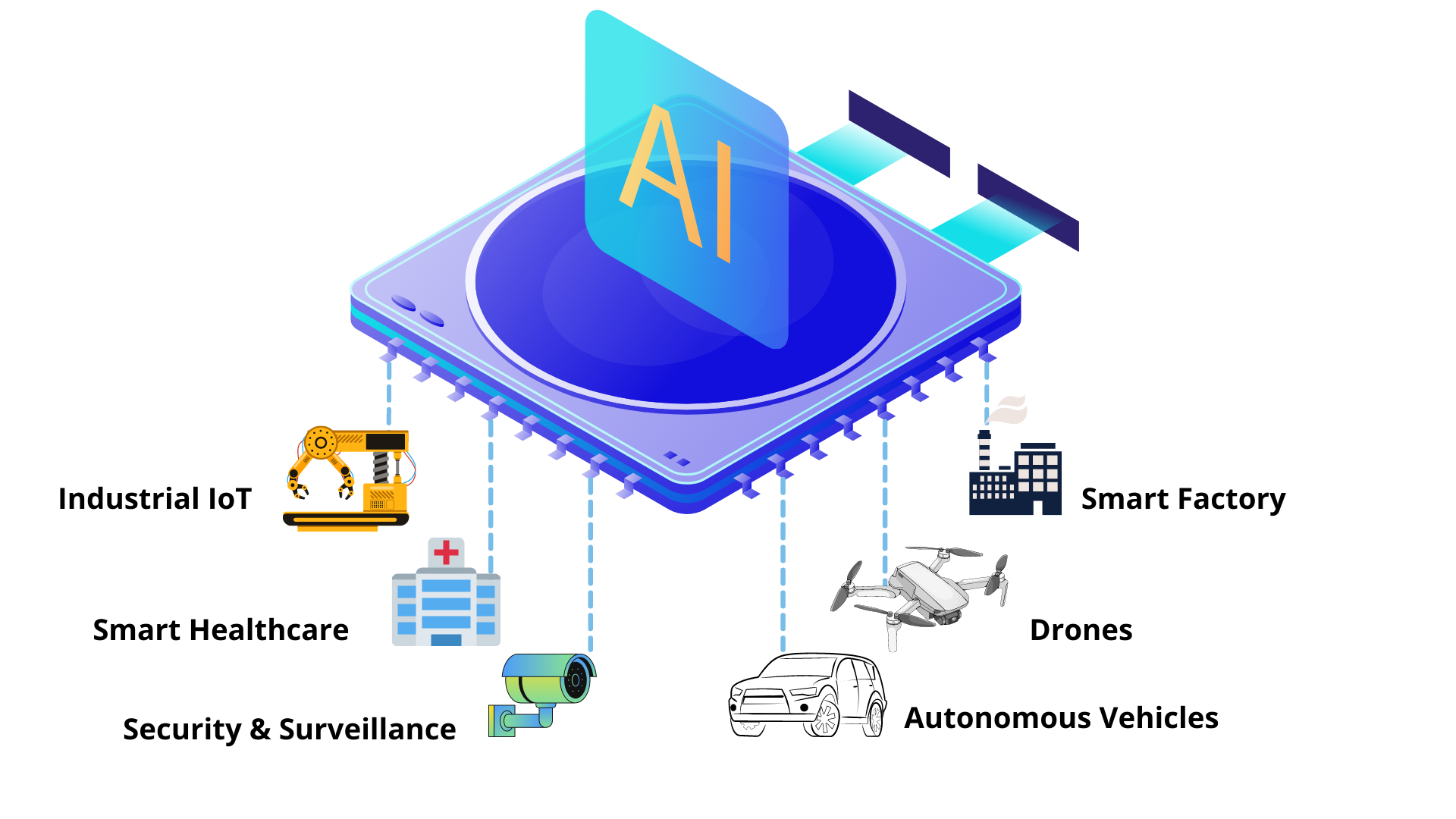

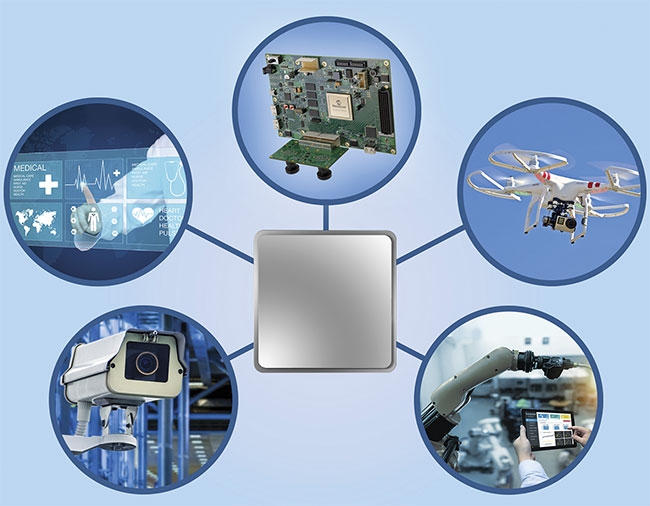

Common Edge Device Types

Edge AI depends on a variety of devices with different characteristics and functions to suit diverse applications. Common device categories include:

Smart Terminal Devices

Examples: smartphones, smart cameras, smart wearables. Smartphones provide strong compute power, multiple sensors (cameras, microphones, accelerometers, gyroscopes), and robust connectivity (Wi-Fi, 4G/5G). They can run edge AI applications such as image recognition, voice interaction, and real-time health monitoring. For example, a phone that identifies landmarks in photos is running edge AI on the device.

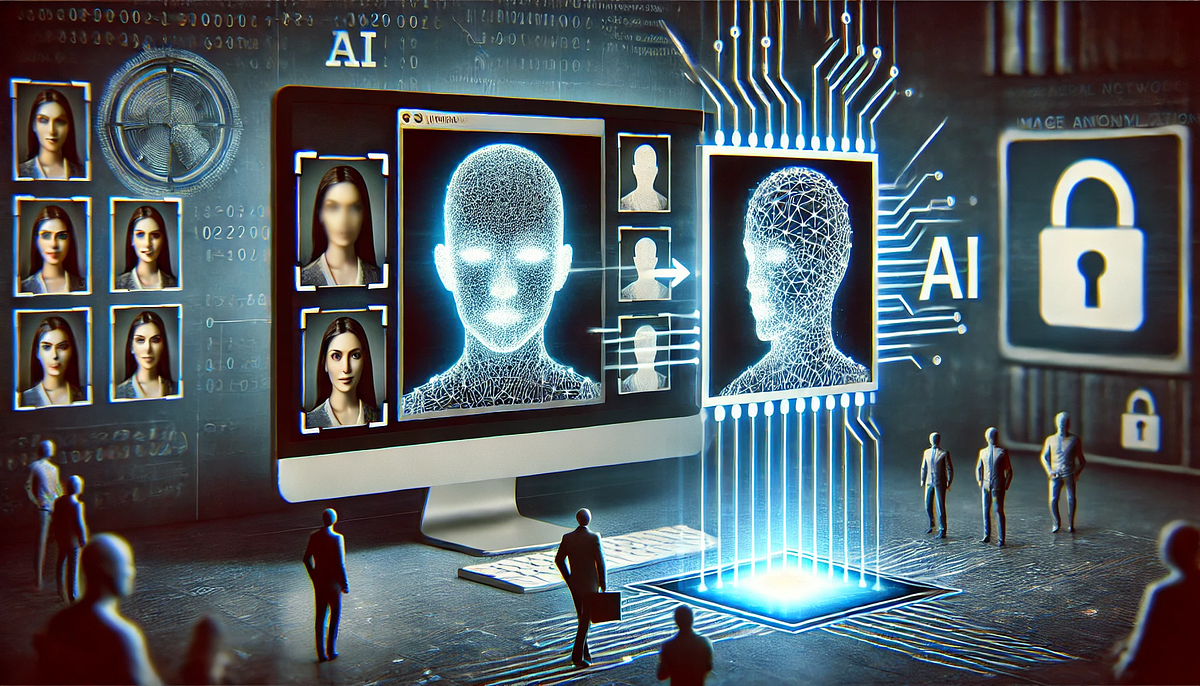

Smart Cameras

Smart cameras embed image sensors and some compute capability to capture and process images locally. They are widely used in surveillance and intelligent transportation. In security, smart cameras can perform face recognition and behavior analysis, detecting running or loitering and issuing alerts. In traffic, they can identify vehicles and count traffic flow.

Wearables

Examples: smartwatches and fitness bands. Wearables are compact and worn close to the body, and are typically equipped with low-power processors and sensors for heart rate and sleep monitoring. They are mainly used for health monitoring and activity tracking. A smartwatch can monitor heart rate, blood pressure, and sleep quality in real time and apply edge AI algorithms for preliminary analysis and health suggestions.

Industrial Devices

Examples: industrial sensors, industrial gateways, industrial robot controllers. Industrial sensors collect parameters in real time such as temperature, pressure, flow, and vibration; some have local processing capabilities. In industrial automation, sensors can analyze data with edge AI for real-time equipment monitoring and fault prediction. For instance, vibration analysis can detect potential faults early and avoid unplanned downtime.
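As a rough illustration of vibration-based monitoring, the sketch below compares the root-mean-square (RMS) level of a window of samples with a baseline recorded on healthy equipment. The RMS rule, the ratio limit of 2.0, and the sample values are assumptions made for this example; real fault prediction usually relies on richer features or a trained model.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a window of vibration samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def check_vibration(window, baseline_rms, ratio_limit=2.0):
    """Flag the window if its RMS exceeds ratio_limit times the baseline."""
    level = rms(window)
    return level > ratio_limit * baseline_rms, level


# Hypothetical accelerometer windows (arbitrary units).
healthy = [0.1, -0.1, 0.1, -0.1]
worn = [0.5, -0.5, 0.5, -0.5]

baseline = rms(healthy)
healthy_alarm, _ = check_vibration(healthy, baseline)
worn_alarm, worn_level = check_vibration(worn, baseline)
```

Because the check needs only a few arithmetic operations per window, it can run on a sensor with modest local processing capability.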

Industrial Gateways

Industrial gateways bridge on-site equipment and the cloud, offering compute and communication capabilities and connecting devices that use different industrial protocols. They can preprocess and run edge compute on collected data, integrating data from multiple devices for analysis and remote monitoring. In factories, gateways can gather data from production lines and apply edge AI to optimize processes and improve efficiency.

Industrial Robot Controllers

Robot controllers manage motion and operations of industrial robots and require high compute performance and real-time response. Combined with edge AI, controllers can give robots smarter perception and decision making. On assembly lines, robots can use edge AI to recognize part shapes and positions and automatically adjust actions to improve accuracy and throughput.

Smart Home Devices

Examples: smart speakers and smart appliances. Smart speakers integrate microphone arrays, speakers, and speech recognition chips, and use edge AI to recognize and process voice commands locally. Users can control home devices by voice, such as turning lights on or adjusting the thermostat. Smart appliances like refrigerators, air conditioners, and washing machines include sensors and microprocessors to monitor operating status and environment and use edge AI for intelligent control and optimization. For example, a smart refrigerator can suggest shopping lists based on stored food, and a smart air conditioner can adjust its operation based on occupancy and indoor/outdoor temperatures.

Intelligent Transportation

Examples: in-vehicle compute platforms and roadside units. In-vehicle platforms offer high performance and low latency to meet the needs of autonomous driving. They process sensor data in real time for perception, object detection, path planning, and decision control to ensure safe driving. Roadside devices such as intelligent traffic signals and roadside units collect traffic flow and vehicle speed and can communicate with vehicles. Edge AI enables real-time traffic monitoring and optimized control, such as adjusting signal timing based on traffic flow and providing route guidance to vehicles.
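Adjusting signal timing to traffic flow can be sketched as a simple proportional split of a fixed cycle. The approach names, the 90-second cycle, and the 10-second minimum green time are hypothetical values chosen for illustration; deployed controllers use considerably more elaborate logic.

```python
def allocate_green_times(vehicle_counts, cycle_s=90, min_green_s=10):
    """Split a signal cycle across approaches in proportion to vehicle counts.

    Every approach gets at least min_green_s; the remaining time is shared
    according to each approach's share of the total count.
    """
    spare = cycle_s - len(vehicle_counts) * min_green_s
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(vehicle_counts.values())
    if total == 0:
        share = spare / len(vehicle_counts)
        return {name: min_green_s + share for name in vehicle_counts}
    return {
        name: min_green_s + spare * count / total
        for name, count in vehicle_counts.items()
    }


# Hypothetical counts reported by a roadside unit for two approaches.
plan = allocate_green_times({"north_south": 30, "east_west": 10})
```

With these counts the busier approach receives 62.5 seconds of green time and the other 27.5 seconds, which together fill the 90-second cycle.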

Edge AI Environment Setup and Model Deployment

Hardware selection: Choose appropriate edge hardware based on model size and compute requirements. For models with high compute needs, select edge compute boxes with accelerators such as GPUs or TPUs. For simpler tasks, embedded processors such as the Arm Cortex series may suffice.

Operating system and development environment: Install a suitable operating system on the edge device, such as Linux or Android, and configure the development environment including compilers and debugging tools. Install inference frameworks that support edge model execution, such as TensorFlow Lite, PyTorch Mobile, or ONNX Runtime, which enable efficient deployment of trained models on edge devices.

Model conversion: Convert trained models into formats supported by the edge device. For example, a model trained with TensorFlow can be converted to .tflite using the TensorFlow Lite Converter for execution within TensorFlow Lite.

Model deployment: Transfer the converted model files to the edge device's storage over a wired connection such as USB or Ethernet, or a wireless connection such as Wi-Fi or Bluetooth.

System integration: Integrate the deployed model with other software modules on the edge device to form a complete pipeline for data collection, preprocessing, model inference, and result output. For example, implement data acquisition programs that stream sensor data to the model for inference and control actuators based on inference outcomes.
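The pipeline described in the system integration step can be sketched end to end as below. The model is a stub standing in for a real inference call, and the temperature readings, the fan actuator, and the 0.75 threshold are all hypothetical.

```python
def preprocess(reading_c, lo=0.0, hi=100.0):
    """Clamp a temperature reading to [lo, hi] and scale it to [0, 1]."""
    clamped = max(lo, min(hi, reading_c))
    return (clamped - lo) / (hi - lo)


def stub_model(x):
    """Placeholder for a deployed model; returns an overheat score in [0, 1]."""
    return x  # assumption: the score equals the normalized temperature


def decide(score, threshold=0.75):
    """Turn the inference result into an actuator command."""
    return "fan_on" if score >= threshold else "fan_off"


def run_pipeline(readings, model=stub_model):
    """Data collection -> preprocessing -> inference -> result output."""
    return [decide(model(preprocess(r))) for r in readings]


# Hypothetical sensor readings in degrees Celsius.
actions = run_pipeline([42.0, 68.5, 81.0, 120.0])
```

On a real device, `stub_model` would be replaced by a call into the installed inference framework, and the returned commands would be passed to the actuator driver.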

ALLPCB
