Introduction

Embodied intelligence has gained significant attention recently. What exactly is embodied intelligence, and which categories and core technologies does it include? This article provides a concise overview.

What Does "Embodied Intelligence" Mean?

"Intelligence" here refers to AI. The term "embodied" originates in philosophy and cognitive science. Embedded in the English word "embodied" is the idea of "having a body" or "bringing into a body."

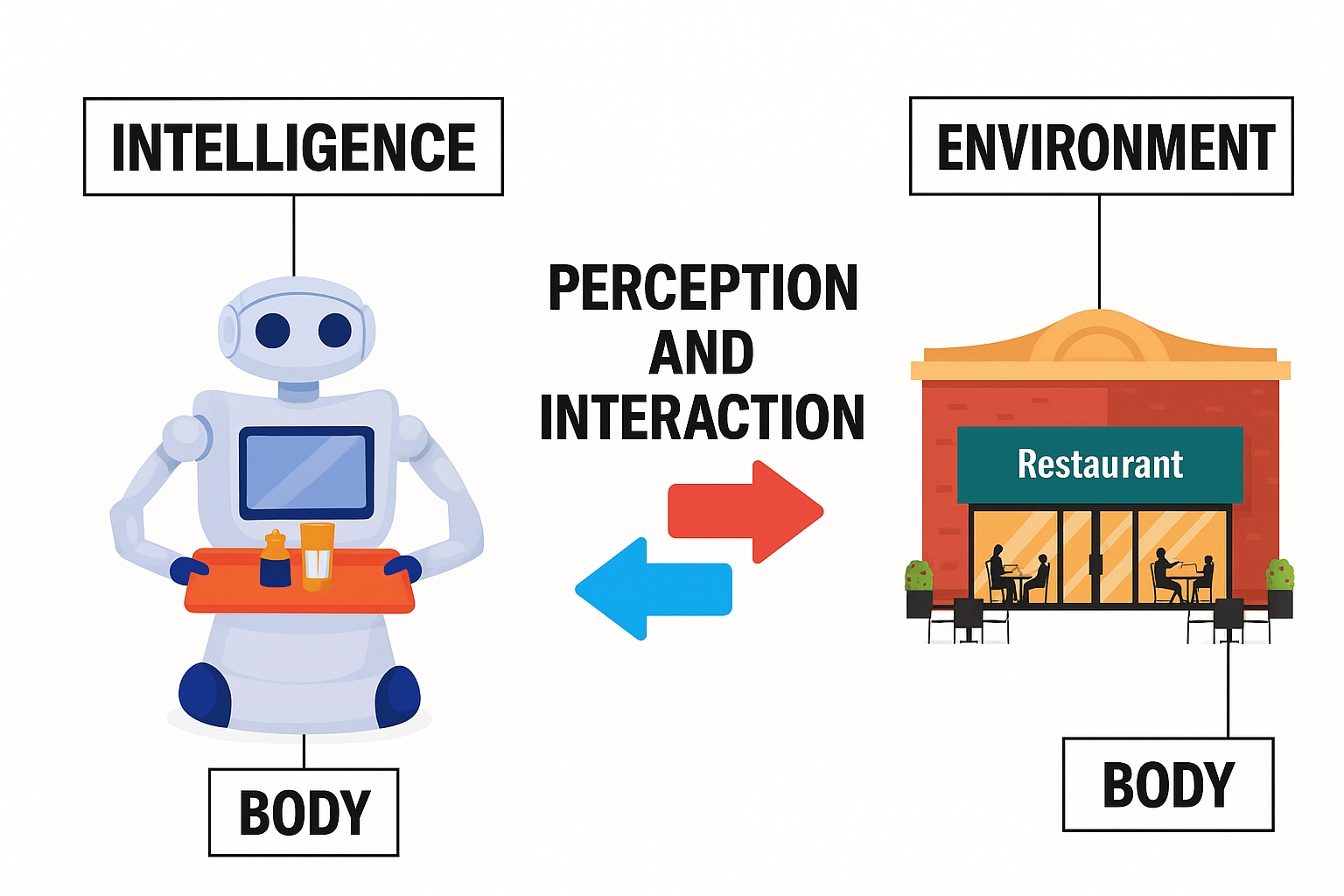

Embodied intelligence therefore means giving intelligence a physical body. More precisely, besides having a physical body, the key characteristic is the ability to interact with the environment and continuously adapt and optimize through that interaction.

In other words, embodied intelligence is an intelligence system that perceives and acts through a physical body. The physical agent interacts with its environment to gather information, understand situations, make decisions, and act, thereby producing intelligent and adaptive behavior.
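
The perceive, decide, and act cycle described above can be sketched as a small loop. This is only an illustrative toy under stated assumptions: `LineWorld`, `decide`, and `run` are hypothetical names invented for this example, and the "body" is reduced to a position on a line.

```python
class LineWorld:
    """Hypothetical 1-D environment: the agent tries to reach `goal`."""

    def __init__(self, position: int = 0, goal: int = 5) -> None:
        self.position = position
        self.goal = goal

    def sense(self) -> int:
        # Perception: signed offset from the agent to the goal.
        return self.goal - self.position

    def apply(self, action: int) -> None:
        # Action: moving changes the state that will be sensed next.
        self.position += action


def decide(offset: int) -> int:
    # Decision: step toward the goal, or stay put once it is reached.
    if offset > 0:
        return 1
    if offset < 0:
        return -1
    return 0


def run(world: LineWorld, max_steps: int = 20) -> int:
    """Repeat perceive -> decide -> act until the goal is reached."""
    steps = 0
    while steps < max_steps:
        action = decide(world.sense())
        if action == 0:
            break
        world.apply(action)
        steps += 1
    return steps


world = LineWorld()
steps = run(world)
```

The point of the sketch is the closed loop: each action changes what the agent perceives next, which is the interaction that separates embodied from disembodied systems.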

The three essential elements of embodied intelligence are the body, the brain, and the environment. As Fei-Fei Li has noted, the essence of "embodied" is not the body itself but the requirement and functionality of interacting with and acting in the environment.

Distinguishing Embodied and Disembodied Intelligence

Large models and virtual agents used on phones or computers (for example ChatGPT and DeepSeek) excel at processing text, images, and video and can communicate and output information effectively. However, phones and computers have limited perception and virtually no ability to act in the physical world, so they cannot interact with the environment. This type of intelligence is disembodied intelligence, where intelligence and body are decoupled.

If a physical robot carries an AI "brain" but only has perception (cameras, sensors) without actuation (no arms, legs, or wheels), or vice versa, it is not embodied intelligence. True embodied intelligence requires an AI brain plus a body with both perception and action capabilities that can interact with the external environment in real time.

History and Development

Embodied intelligence is not new. Its conceptual roots trace back to 1950, when Alan Turing published "Computing Machinery and Intelligence," outlining two possible AI development paths: one abstract, like the intelligence needed for chess, and another emphasizing strong perceptual abilities and the capacity to act and learn in the real world. These two paths correspond roughly to disembodied and embodied intelligence.

For many decades, AI research focused mainly on disembodied approaches due to technological limitations. Robotics followed a largely separate technical path with limited intelligence, weak actuation, and poor perception.

In 1986, Rodney Brooks proposed a new view that intelligence need not rely on complex symbolic representations and reasoning; agents could generate intelligent behavior through direct physical interaction with the environment. His work gave important theoretical support to embodied intelligence, and his robots demonstrated autonomous navigation and interaction in complex environments. Brooks is often regarded as a founding figure for embodied intelligence.

Development of embodied intelligence has accelerated since the start of the 21st century as information technology, electronics, sensor technology, and mechanical engineering matured. Two key trends enabled this acceleration:

- The emergence of powerful large models and agents capable of efficiently learning from and processing large amounts of perceptual data.

- The limitations of traditional automation, which could only execute rigid programs, motivated integrating general AI capabilities into physical systems to expand their applicability.

Recent public demonstrations, from robot performances and robot marathons to major AI and robotics conferences, have brought embodied systems into wider public view.

Categories of Embodied Intelligence

Embodied intelligence covers many categories. Functionally, systems can be industrial robots, service robots, or special-purpose robots. Morphologically, they can be humanoid robots, wheeled robots, legged robots, and more. Common forms include:

Humanoid Robots

Humanoid robots are currently the most visible and widely discussed category. Their human-like structure lets them grip handles, climb stairs, and operate tools, which eases integration into environments built for people. They also have interaction advantages, using gestures and expressions to communicate, which can improve user acceptance. Current use cases include home service, medical care, industrial tasks, logistics sorting, and retail services. Humanoid robot competitions in sports and locomotion also serve as technical benchmarks for teams and products.

Wheeled Robots

Wheeled robots move primarily on wheels and are common in warehousing, logistics, inspection, and security. Many designs combine wheels with robotic arms, known as wheeled-arm robots. Their advantages include higher travel speeds and efficient material handling. These robots typically have strong environment perception for autonomous navigation and obstacle avoidance.

Legged Robots

Quadruped robots, often called robot dogs, belong to the legged robot category. Legged designs mimic animal locomotion, whether that of four-legged mammals, insects, or reptiles, and offer superior terrain adaptability, flexibility, and stability, enabling movement over rugged terrain or debris for exploration and rescue tasks. They can also serve as robotic companions or assistive devices in domestic and special-needs contexts.

Autonomous Vehicles, Drones, and Unmanned Vessels

Autonomous cars, drones, and unmanned vessels are also forms of embodied intelligence. They use sensors (cameras, radar, etc.) to perceive the environment in real time and apply AI algorithms for data processing and decision-making to achieve autonomous driving, navigation, and obstacle avoidance. Beyond these categories, many bio-inspired and specialized morphologies exist for different use scenarios.

Core Technologies

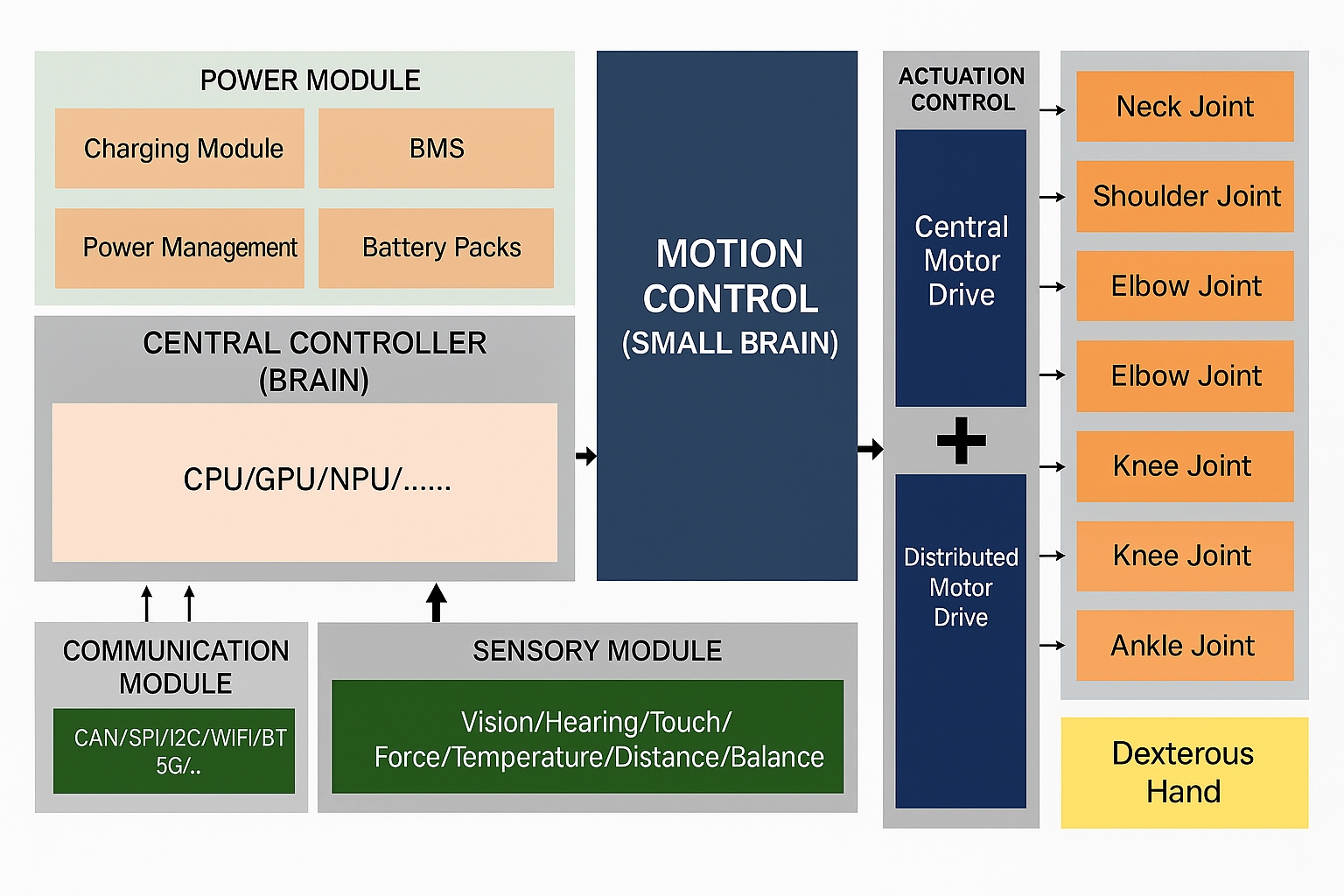

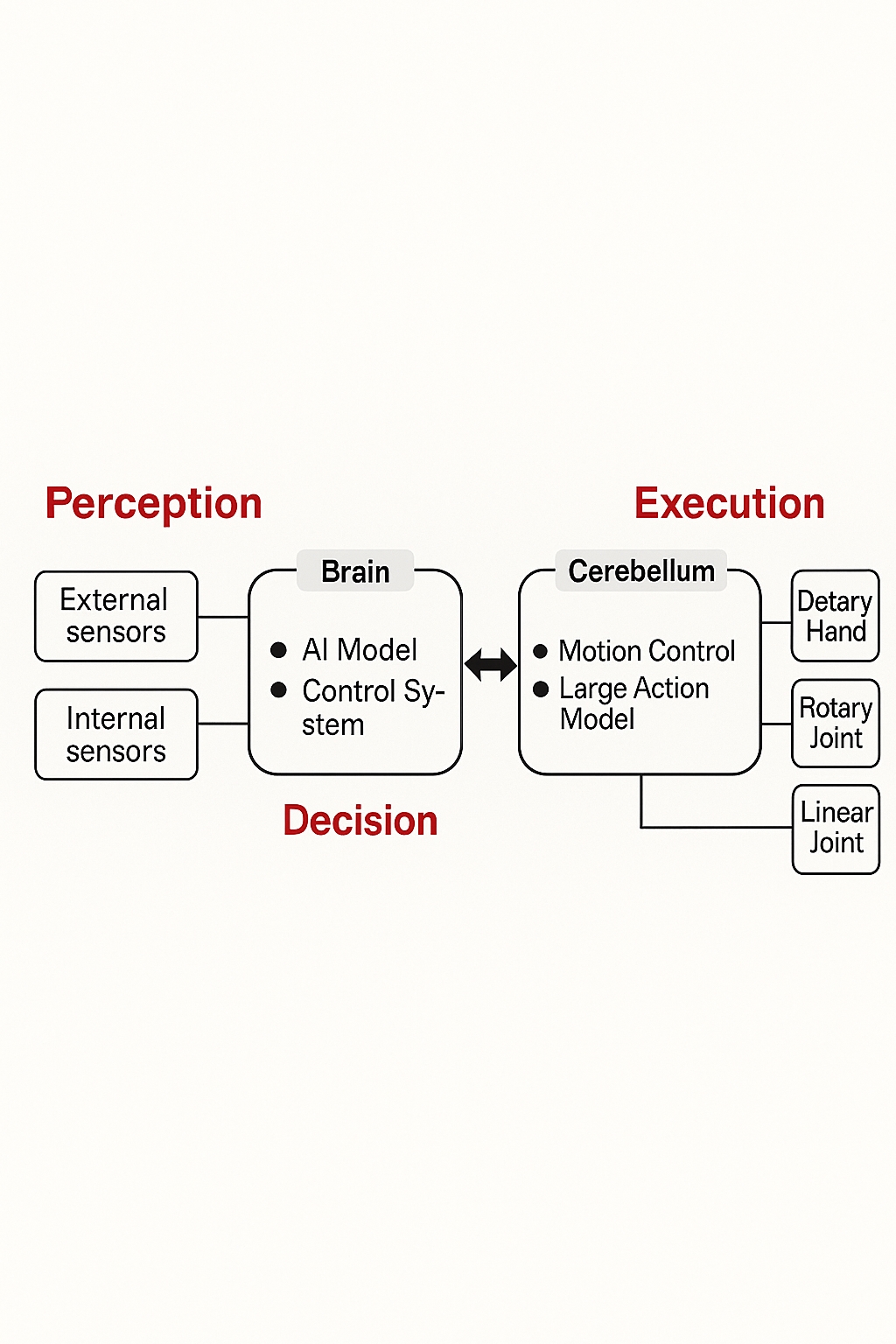

The technology stack of embodied intelligence is often divided by function into environment perception, motion control, and human-machine interaction modules. A more conceptual division, followed in the rest of this section, splits a typical architecture into three core parts: body, brain, and cerebellum.

Body

The body comprises the mechanical structure (head, torso, limbs, joints, dexterous hands, and other components) together with sensors, actuators, drive and power systems, and communications. The mechanical system defines structural strength, mobility, and appearance. Sensors capture external and internal state information and include cameras, microphones, pressure sensors, and joint angle sensors. Drive systems use motors, hydraulics, and similar actuators; energy sources include lithium batteries and fuel cells.

Key technical areas for the body include mechanical design, lightweight materials, precision actuators, power solutions, thermal management, and robust communications.

Brain

The brain handles perception, understanding, and planning, typically driven by large language models and vision-language-action (VLA) models. Perception fuses multimodal sensor data to track position, posture, and motion state in real time so that instability can be detected and avoided. The choice of decision-making algorithm depends on the system architecture; common techniques include reinforcement learning, which learns through trial-and-error interaction, and imitation learning, which mimics human motion.
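
As one small illustration of fusing sensor data to track posture, the sketch below uses a complementary filter, a common lightweight technique chosen here as an assumption; the article does not say which fusion method any particular robot uses. The function names, the pitch sign convention, the gyro bias, and the sensor readings are all hypothetical.

```python
import math


def accel_pitch(ax: float, az: float) -> float:
    """Pitch (rad) implied by the gravity direction seen by an accelerometer.

    Assumed convention: a stationary sensor tilted by `p` reads
    ax = sin(p), az = cos(p) in units of g.
    """
    return math.atan2(ax, az)


def complementary_filter(pitch_prev: float, gyro_rate: float,
                         ax: float, az: float,
                         dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (smooth, but drifts) with the
    accelerometer pitch (noisy, but drift-free)."""
    gyro_estimate = pitch_prev + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, az)


# Hypothetical scenario: the robot stands still at a 0.1 rad tilt while the
# gyro reports a constant 0.01 rad/s bias that would otherwise accumulate.
true_pitch = 0.1
pitch = 0.0
for _ in range(500):
    pitch = complementary_filter(
        pitch,
        gyro_rate=0.01,
        ax=math.sin(true_pitch),
        az=math.cos(true_pitch),
        dt=0.01,
    )
```

In this toy setup the estimate settles close to the true tilt instead of drifting with the gyro bias. Real systems typically fuse many more signals and often use more elaborate estimators.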

Algorithmic approaches include hierarchical decision models, which decompose tasks into layers with multiple neural networks combined via pipelines, and end-to-end models, which map task goals directly to action commands with a single network. The brain requires substantial compute, so systems often split processing between cloud-based and local on-board computation to meet latency, bandwidth, and reliability requirements.
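
The structural difference between the two approaches can be sketched with plain functions standing in for neural networks. Everything here is a hypothetical illustration: the stage names (`perceive`, `plan`, `control`), the observation keys, and the 0.1 step limit are assumptions made for the example, not part of any real system.

```python
from typing import Callable, Dict, List

Observation = Dict[str, float]
Action = List[float]


def hierarchical_policy(stages: List[Callable]) -> Callable[[Observation, str], Action]:
    """Chain separate stage models; each consumes the previous stage's output."""
    def policy(obs: Observation, goal: str) -> Action:
        data = (obs, goal)
        for stage in stages:
            data = stage(data)
        return data
    return policy


# Stand-ins for separately trained networks (hypothetical).
def perceive(inputs):
    obs, goal = inputs
    return {"distance": obs["target_x"] - obs["robot_x"], "goal": goal}


def plan(scene):
    # Choose a bounded sub-goal step toward the target.
    return {"step": max(-0.1, min(0.1, scene["distance"]))}


def control(subgoal) -> Action:
    # Turn the sub-goal into a low-level command.
    return [subgoal["step"]]


def end_to_end_policy(obs: Observation, goal: str) -> Action:
    """Stand-in for a single network mapping observation and goal to an action."""
    return [max(-0.1, min(0.1, obs["target_x"] - obs["robot_x"]))]


obs = {"robot_x": 0.0, "target_x": 0.5}
layered = hierarchical_policy([perceive, plan, control])
action_layered = layered(obs, "reach target")
action_direct = end_to_end_policy(obs, "reach target")
```

Both policies produce the same command here. The difference is that the layered version exposes intermediate results that can be inspected or swapped out, while the end-to-end version hides them inside one model.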

Cerebellum

The cerebellum converts decisions into concrete actions via motion control and action generation. It uses motion control algorithms and feedback control systems to offload low-level control from the brain. Key technologies include model predictive control (MPC), force and compliance control, and real-time response optimization.
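
To make the idea of a feedback control loop concrete, here is a minimal sketch of a proportional-derivative (PD) controller driving a single joint toward a target angle. PD control is used because it is much simpler than MPC or compliance control; it is not meant to represent those methods. The joint model (unit inertia, no friction), the gains, and the function names are assumptions chosen for illustration.

```python
def pd_torque(target: float, angle: float, velocity: float,
              kp: float = 25.0, kd: float = 10.0) -> float:
    """Feedback law: push toward the target, damp according to velocity."""
    return kp * (target - angle) - kd * velocity


def simulate_joint(target: float = 1.0, steps: int = 300, dt: float = 0.01) -> float:
    """Simulate a hypothetical joint with unit inertia under PD control."""
    angle, velocity = 0.0, 0.0
    for _ in range(steps):
        torque = pd_torque(target, angle, velocity)
        velocity += torque * dt  # unit inertia: acceleration equals torque
        angle += velocity * dt
    return angle


final_angle = simulate_joint()
```

The brain only has to supply `target`; the loop measures the current state at every step and corrects the command, which is the kind of low-level work the cerebellum layer takes off the brain.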

Technical Challenges

Despite strong interest, embodied intelligence faces significant challenges.

Technical Complexity

Many subfields of embodied systems are hard to implement. For perception, reliably understanding external information in dynamic, cluttered, or low-light environments with occlusion and noise is challenging. For motion control, integrating mechanics, dynamics, and control theory to produce stable, flexible movement across scenarios is difficult. Public demonstrations of robots stumbling, falling, or behaving unpredictably highlight remaining problems across the perception, decision, and execution stages.

Data Requirements

Like AI-generated content (AIGC) systems, embodied intelligence requires large datasets. Collecting real-world embodied data is costly, and the data is often insufficient in quantity and diversity. To address this, industry frequently uses simulated environments for large-scale data collection. Simulated data is less realistic but cheaper and larger in scale, making it useful for early-stage development.

Safety and Security

Public concerns include misuse by malicious actors, privacy violations, and speculative fears about consciousness or autonomy turning harmful. No system is absolutely secure; large-scale adoption of embodied systems will require robust safety, security, and privacy safeguards.

Funding and Talent

Embodied intelligence development is capital intensive. Sustained research and high-quality teams require long-term funding and talent recruitment. High market interest attracts investment, but when development stalls or commercialization proves difficult, startups may fail, setting the wider ecosystem back.

Other unresolved issues include toolchains, standardization, ethics, and energy efficiency. These problems require long-term research and iterative development. Caution and realistic expectations are advisable for this emerging field.

Conclusion

Embodied intelligence combines physical bodies with AI capabilities to interact with the real world. It draws on advances in sensing, actuation, control, and large-scale AI models. While the market outlook is large, the field faces substantial technical, data, safety, and resource challenges that will require time and coordinated effort across industry, academia, and policy.

According to industry estimates, the global AI robot market reached USD 14.3 billion in 2023 and is projected to reach about USD 82.47 billion by 2032, with a compound annual growth rate of 21.5%.
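
The quoted growth rate can be checked against the two market figures. The short calculation below assumes the compounding period is the nine years from 2023 to 2032; the variable names are illustrative.

```python
start_value = 14.3   # USD billions, 2023 (figure quoted above)
end_value = 82.47    # USD billions, 2032 projection (figure quoted above)
years = 2032 - 2023  # assumed compounding period

# Compound annual growth rate: (end / start) ** (1 / years) - 1
cagr = (end_value / start_value) ** (1 / years) - 1
cagr_percent = round(cagr * 100, 1)
```

Under that assumption the result rounds to 21.5%, which matches the stated rate.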

ALLPCB
