Overview

In 2023, breakthroughs in large models and the rise of generative AI are driving a new phase of innovation in the AI industry and prompting shifts in compute architectures.

According to the report "2023–2024 China AI Compute Development Assessment", the global AI hardware market (servers) is expected to grow from $19.5 billion in 2022 to $34.7 billion in 2026, a five-year CAGR of 17.3%. In China, the AI server market is estimated to reach $9.1 billion in 2023, up 82.5% year over year, and $13.4 billion in 2027, a five-year CAGR of 21.8%. The Chinese compute market, especially intelligent computing, is expanding rapidly.

1. CPU + GPU as the Primary Heterogeneous AI Computing Model

In the large-model era, building and fine-tuning generative AI foundation models to meet application requirements will reshape the infrastructure market and create new development opportunities. An application-oriented, system-level approach is expected to guide compute upgrades.

From a technical perspective, heterogeneous computing remains a key trend in chip development. Heterogeneous computing combines different types of processors (such as CPUs, GPUs, ASICs, FPGAs, and NPUs) in a single system and has them work together on specific tasks, which improves performance and efficiency and makes better use of diverse compute resources. For example, GPUs can accelerate model training, especially for large models; CPUs can handle data preprocessing, model tuning, control, and resource coordination; and FPGAs can accelerate real-time inference, particularly in edge deployments.

IDC research shows that, as of October 2023, the Chinese market generally views "CPU+GPU" as the main heterogeneous combination for AI computing.

(Source: "2023–2024 China AI Compute Development Assessment")

2. Three Major Challenges for AI Chips in the Large-Model Era

The rising demand for AI compute creates opportunities for Chinese chip vendors. IDC expects AI chip shipments in China to reach 1.335 million units in 2023, up 22.5% year over year.

At the same time, several development challenges have emerged for China's AI chips in the large-model era.

First, there is a significant performance gap with leading international AI chips. For example, Nvidia's latest H200 GPU delivers nearly five times the performance of its A100 GPU. In large-model cluster training, most Chinese AI chips achieve less than 50% of those performance levels, and only a few approach A100/A800 performance. This implies a gap of roughly three generations in large-model training capability.

Second, the software and ecosystem disadvantage is significant. Nvidia's CUDA ecosystem has benefited from 17 years of development and over $10 billion of investment, and it now supports over 3 million developers globally, making it a de facto standard. In contrast, Chinese AI chip vendors collectively hold under 10% market share, and their software stacks and ecosystems are fragmented and incompatible.

Third, manufacturing capacity constraints and limited access to the key technologies needed to move to advanced process nodes are also restricting AI chip development.

3. Addressing Three Hurdles for Heterogeneous Compute

Based on the current situation, Lin Yonghua, Deputy Director and Chief Engineer at the Beijing Academy of Artificial Intelligence, identifies three major constraints for heterogeneous compute in the large-model era.

Constraint 1: Different compute types cannot be pooled for training

Heterogeneous mixed distributed training today faces several challenges: incompatible software and hardware stacks across device architectures; potential differences in numerical precision; inefficient communication between architectures; and mismatched compute and memory capacities that make load balancing difficult.

These challenges cannot all be solved at once. So far, researchers have experimented with heterogeneous training on devices of the same architecture but different generations, or on different devices with compatible architectures; training across different architectures is left for future work. FlagScale is a framework that supports pooled training across multiple vendors' heterogeneous compute resources. It currently implements heterogeneous pipeline parallelism and heterogeneous data parallelism.

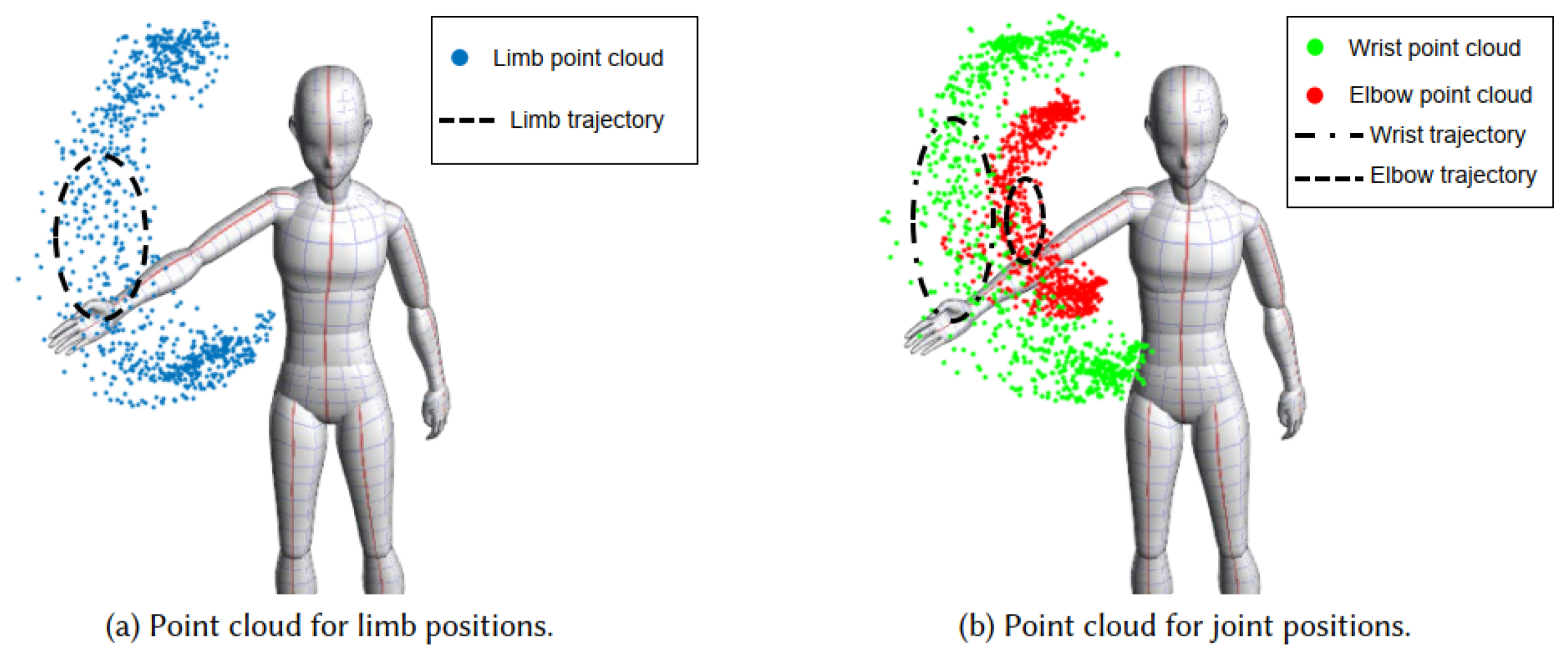

Heterogeneous pipeline parallelism: In this mode, pipeline parallelism can be mixed with data parallelism, tensor parallelism, and sequence parallelism to achieve efficient training. Based on memory use characteristics in backpropagation, this mode places devices with larger memory earlier in the pipeline and devices with smaller memory later, assigning network layers according to device compute to balance load.
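As a rough illustration of the placement rule described above, the following Python sketch orders devices by memory and then assigns layers in proportion to compute. The `Device` fields, the `plan_pipeline` function, and the relative-compute figures are hypothetical names chosen for this example and are not taken from FlagScale; a real planner would also need to account for activation sizes and communication cost.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    memory_gb: float    # device memory capacity
    rel_compute: float  # relative throughput (hypothetical unit)


def plan_pipeline(devices, num_layers):
    """Return [(device_name, layer_count), ...] in pipeline-stage order."""
    if num_layers < len(devices):
        raise ValueError("need at least one layer per pipeline stage")

    # Larger-memory devices go to earlier stages, as described in the text.
    stages = sorted(devices, key=lambda d: d.memory_gb, reverse=True)

    # Every stage gets one layer; the rest are shared in proportion to compute.
    total = sum(d.rel_compute for d in stages)
    spare = num_layers - len(stages)
    ideal = [spare * d.rel_compute / total for d in stages]
    counts = [1 + int(x) for x in ideal]

    # Hand out layers lost to rounding, largest fractional share first.
    leftover = num_layers - sum(counts)
    by_fraction = sorted(
        range(len(stages)),
        key=lambda i: ideal[i] - int(ideal[i]),
        reverse=True,
    )
    for i in by_fraction[:leftover]:
        counts[i] += 1

    return [(d.name, c) for d, c in zip(stages, counts)]


plan = plan_pipeline(
    [Device("small", 32, 1.0), Device("big", 80, 2.0)],
    num_layers=32,
)
```

Under these assumed figures, the 80 GB device is placed first and receives 21 of the 32 layers, while the 32 GB device receives 11.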

Heterogeneous data parallelism: In this mode, tensor parallelism, pipeline parallelism, and sequence parallelism can be mixed for large-scale efficient training. Devices with larger compute and memory handle larger micro-batch sizes, while devices with less compute and memory handle smaller micro-batches, enabling load balancing across devices.
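The load-balancing idea above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `split_batch`, `combine_means`, and the relative-compute weights are hypothetical and do not reflect FlagScale's actual interface. The second function reflects a general point about uneven batches: when replicas process different numbers of samples, their mean gradients have to be weighted by sample count to reproduce the mean over the whole batch.

```python
def split_batch(global_batch, rel_compute):
    """Split a global batch across replicas in proportion to relative compute."""
    total = sum(rel_compute.values())
    ideal = {name: global_batch * c / total for name, c in rel_compute.items()}
    sizes = {name: int(x) for name, x in ideal.items()}

    # Assign samples lost to rounding, largest fractional share first.
    leftover = global_batch - sum(sizes.values())
    order = sorted(ideal, key=lambda n: ideal[n] - sizes[n], reverse=True)
    for name in order[:leftover]:
        sizes[name] += 1
    return sizes


def combine_means(local_means, sizes):
    """Weight per-replica mean gradients by sample count (scalar stand-in)."""
    total = sum(sizes.values())
    return sum(local_means[n] * sizes[n] for n in sizes) / total


# Hypothetical example: one replica with three times the throughput of the other.
sizes = split_batch(16, {"fast": 3.0, "slow": 1.0})
global_mean = combine_means({"fast": 1.0, "slow": 5.0}, sizes)
```

Here the faster replica takes 12 of the 16 samples and the slower one takes 4, so the combined mean is weighted 12:4 rather than averaged equally.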

FlagScale's heterogeneous mixed training experiments on Nvidia and Tianshu Zhixin (Iluvatar CoreX) clusters show good returns: in three configurations, performance approached or even exceeded the theoretical upper bound, indicating that heterogeneous mixed training loses little efficiency.

Lin Yonghua explains that FlagScale is implementing pooled training between Nvidia clusters and Tianshu Zhixin clusters, and plans to support pooled training across more Chinese vendors' compute clusters. The goal is to standardize communication libraries for heterogeneous chips and enable high-speed interconnectivity.

She notes that chip generations will often be mixed during iterative upgrades. Continued work on compatibility for mixed heterogeneous training and flexible combination of commercial resources in the same data center can help maximize performance and efficiency.

Constraint 2: CUDA dependence makes operator libraries hard to adapt across hardware

The software ecosystem for Chinese AI chips is weak, and mainstream AI frameworks primarily support Nvidia chips. Chinese AI chips must adapt to multiple frameworks, and each framework upgrade requires the adaptation work to be repeated. Each AI chip vendor also has its own low-level software stack, and these stacks are incompatible with one another.

Under large-model demands, these issues have three consequences: operators and optimizations required for large models are missing, so models either fail to run or run inefficiently; architectural and software implementation differences lead to precision discrepancies; and porting large-model training to Chinese AI chips is costly.
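Precision discrepancies of the kind mentioned above are commonly checked by running the same operator on a reference implementation and on the target stack, then comparing the outputs within a tolerance. The sketch below is only an illustration using the Python standard library: it simulates a lower-precision implementation by rounding intermediate values to float32, and the function names and tolerance values are assumptions rather than any vendor's or framework's test procedure.

```python
import math
import struct


def to_f32(x):
    """Round a Python float (float64) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def softmax(xs, cast=float):
    """Numerically stable softmax; `cast` models the storage precision."""
    m = max(xs)
    exps = [cast(math.exp(x - m)) for x in xs]
    s = cast(sum(exps))
    return [cast(e / s) for e in exps]


def compare(reference, candidate, rtol=1e-5, atol=1e-6):
    """Return (within_tolerance, max_abs_error) for two output vectors."""
    max_abs = max(abs(r - c) for r, c in zip(reference, candidate))
    ok = all(
        math.isclose(r, c, rel_tol=rtol, abs_tol=atol)
        for r, c in zip(reference, candidate)
    )
    return ok, max_abs


logits = [0.1, 2.5, -1.0, 3.3]
ref = softmax(logits)                 # float64 reference
low = softmax(logits, cast=to_f32)    # simulated float32 implementation
ok, max_abs_error = compare(ref, low)
```

In practice the tolerances depend on the data type and the operator, and a single operator passing such a check does not guarantee that end-to-end training curves will match.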

Lin Yonghua argues that building a common, open AI chip software ecosystem is critical. Based on large-model research and development needs, the infrastructure layer should build a next-generation open, neutral AI compiler intermediate layer that adapts to the PyTorch framework and supports open programming languages and compiler extensions. Next steps include exploring core common technologies to maximize hardware utilization, performing extreme co-optimization of typical and complex operators across hardware and software, and open-sourcing results to efficiently support large-model training.

Constraint 3: Diverse chip architectures and software complicate evaluation and deployment

There are many AI chip vendors with different architectures and toolchains, numerous AI frameworks, and a growing variety of scenarios and complex models. This creates a heavy adaptation workload and high development complexity, and makes it difficult to standardize evaluation metrics, which in turn hinders product deployment and scaling.

Lin Yonghua believes that evaluation of heterogeneous AI chips has important value for the industry ecosystem. The field currently lacks a widely recognized, neutral, open, and open-source evaluation system tailored to heterogeneous chips. An open AI chip evaluation project should be established, including baseline environments, heterogeneous chip base software, and test suites, and should comprehensively evaluate model support, chip training time and compute throughput, interactions with other server components, and support for different frameworks and software ecosystems.
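To make the throughput dimension of such an evaluation concrete, the sketch below records training runs and normalizes each chip's throughput against a baseline chip for the same model and framework. All names here (`TrainingRun`, `relative_throughput`, the chip and model labels) are hypothetical and chosen for illustration; they do not describe an existing evaluation suite, and a real one would also cover model support, precision, and interaction with other server components.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingRun:
    chip: str
    framework: str
    model: str
    tokens: int      # tokens processed during the measured window
    seconds: float   # wall-clock duration of the measured window

    @property
    def tokens_per_second(self):
        return self.tokens / self.seconds


def relative_throughput(runs, baseline_chip):
    """Map (chip, model, framework) to throughput relative to the baseline chip."""
    baseline = {
        (r.model, r.framework): r.tokens_per_second
        for r in runs
        if r.chip == baseline_chip
    }
    report = {}
    for r in runs:
        key = (r.model, r.framework)
        if key in baseline:  # skip runs with no comparable baseline
            report[(r.chip, r.model, r.framework)] = (
                r.tokens_per_second / baseline[key]
            )
    return report


# Placeholder figures, not measured results.
runs = [
    TrainingRun("chip_a", "pytorch", "model_x", 1_000_000, 100.0),
    TrainingRun("chip_b", "pytorch", "model_x", 1_000_000, 200.0),
]
report = relative_throughput(runs, baseline_chip="chip_a")
```

Comparing only runs that share a model and framework keeps the ratio meaningful, which is one reason a common baseline environment matters.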

4. Conclusion

Large-model development has increased demand for intelligent compute. IDC data indicate that from 2022 to 2027, China's intelligent compute capacity is expected to grow at a CAGR of 33.9%, exceeding the 16.6% CAGR of general compute capacity over the same period.

Chinese AI chip vendors face both opportunities and challenges. Addressing single-chip compute bottlenecks and enabling pooled training across heterogeneous chips will require a holistic approach to building compute infrastructure platforms. In particular, software ecosystems that match the hardware (operating systems, middleware, and toolchains) will become increasingly important as large models move from foundational research to application deployment. Completing this software and infrastructure work is a necessary step for AI chips to support large-scale application deployment, and it will have a lasting impact on China's AI chip industry.

ALLPCB
