Introduction

Artificial intelligence (AI) has significant potential. A U.S. artificial intelligence safety commission noted that, compared with humans, machines can observe, decide, and act more quickly and efficiently, which can provide a competitive advantage across many fields. Regarding applying AI to wargaming, U.S. think tank research argues that AI systems not only automate many processes in wargaming and improve efficiency, but also enable novel forms of human-machine collaboration. The rise of AI presents unprecedented opportunities for wargaming and may drive disruptive change in the field.

Case Studies of AI in Wargaming

AI technologies are already mature in some areas, but applying AI to the specialized domain of wargaming remains largely unexplored. Most related research remains theoretical, with a smaller number of practical experiments.

RAND Corporation: Strategic and operational-level wargaming using AI and simulation

RAND conducted exercises to explore how NATO, led by the United States, might respond to threats from major strategic competitors such as Russia in the European theater. The exercises focused on the Baltic states as NATO members, in a scenario where threats or conflict escalate over the near to mid term (roughly the next 10 years or more). Using AI and simulation, the exercises ran quantitative analyses to test NATO operational concepts. The conclusion was that AI methods based on probabilistic reasoning can give adjudicators an estimated probability of success for each proposed course of action, which helps them make better-informed decisions.
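The idea of attaching a success probability to a course of action can be illustrated with a minimal Monte Carlo sketch. This is not RAND's method, which the source does not describe. The course-of-action names, the step probabilities, and the assumption that each course of action is a chain of independent steps are all hypothetical, chosen only to show the shape of such an estimate.

```python
import math
import random

# Hypothetical courses of action (COAs). Each is modeled as a chain of
# independent steps that must ALL succeed; the probabilities are invented.
COAS = {
    "reinforce_early": [0.9, 0.8],
    "delay_and_counterattack": [0.95, 0.6, 0.9],
}

def make_simulator(step_probs):
    """Return a function that plays one random trial of a COA."""
    def simulate_once(rng):
        # The COA succeeds only if every step succeeds.
        return all(rng.random() < p for p in step_probs)
    return simulate_once

def estimate_success(simulate_once, trials=10_000, seed=0):
    """Estimate P(success) with an approximate 95% interval (normal approximation)."""
    rng = random.Random(seed)
    successes = sum(simulate_once(rng) for _ in range(trials))
    p = successes / trials
    half_width = 1.96 * math.sqrt(p * (1 - p) / trials)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)

if __name__ == "__main__":
    for name, steps in COAS.items():
        p, lo, hi = estimate_success(make_simulator(steps))
        print(f"{name}: p = {p:.3f} (approx. 95% interval {lo:.3f} to {hi:.3f})")
```

Under these toy assumptions the exact success probabilities are 0.9 × 0.8 = 0.72 and 0.95 × 0.6 × 0.9 = 0.513, so the sampled estimates should land close to those values. An adjudicator would read the interval, not just the point estimate, when comparing options.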

U.S. Joint Professional Military Education: AI-enhanced strategic-level wargaming experiments

This exercise tested the feasibility of applying AI models to strategic-level wargaming, compared AI-enabled exercises with traditional manual adjudication, and explored the impact of AI on professional military education. The scenario involved a town power grid disrupted by a cyberattack, and the exercise simulated the limited time window for handling such incidents by restricting decision time. Findings included: first, AI models can closely collaborate with humans and assist decision-making in wargames; second, AI models offer rapid decision recommendations, comprehensive scenario analysis, and good adaptability to dynamic problems; third, AI models can help decision-makers better adapt to complex cyber environments and prepare for crises.

RAND Corporation: Tactical-level wargaming experiments on AI-enabled vehicles

In September 2020, RAND researchers simulated company-level engagements between a Blue force (U.S.) and a Red force (Russia), using remotely operated non-autonomous combat vehicles and AI/ML-enabled combat vehicles in tactical actions. The experiment applied representative AI and machine learning technologies in a tabletop tactical wargame and conducted a series of plays, analyses, and tests to assess the potential and limits of AI/ML applied to combat vehicles. Two scenarios were set: a baseline scenario and an AI/ML scenario, differing in vehicle command and control methods. Conclusions were: first, remotely operated robotic vehicles had clear exploitable disadvantages compared with autonomous or manned vehicles; second, AI and machine learning capabilities can be integrated into tactical ground combat wargaming; third, wargames involving both operators and engineers help sponsors and procurers clarify requirements and engineering metrics for AI/ML systems.

Advantages of Applying AI to Wargaming

Why apply AI to wargaming, and what advantages does it bring? Research from academic institutions, research agencies, and think tanks abroad has investigated these questions extensively.

AI can create more realistic scenarios

When designing wargame scenarios, designers typically add elements to enhance realism. Adding these elements by hand or with conventional algorithms is time-consuming and often reproduces complex scenarios poorly. AI can improve scenario realism in two ways. First, AI can quickly generate content tailored to a scenario. Models such as ChatGPT (a natural language AI model) and Stable Diffusion (an AI image generation model) show that AI content generation has matured: AI-generated content can closely resemble human-created work and can be used to deepen and supplement wargame scenarios, which increases participant immersion and leads to more realistic decisions. Second, AI can automate scenario execution. Entities that do not require human control can be managed autonomously by AI, which keeps exercises efficient and coherent while introducing richer variation that better reflects the complexity of real combat environments.

AI can augment computational capability and decision-making

AI offers new ways to handle fuzzy and uncertain problems and allows powerful computational resources to be integrated into wargaming. This increases efficiency and saves adjudicators time and effort, so they can run more scenarios or focus on critical issues. First, AI can complete analyses within milliseconds to assist adjudicators. Second, AI can process natural language directly, so adjudicators can interact with systems without intermediary technical personnel, which improves overall exercise efficiency.

AI can provide higher-quality decision recommendations

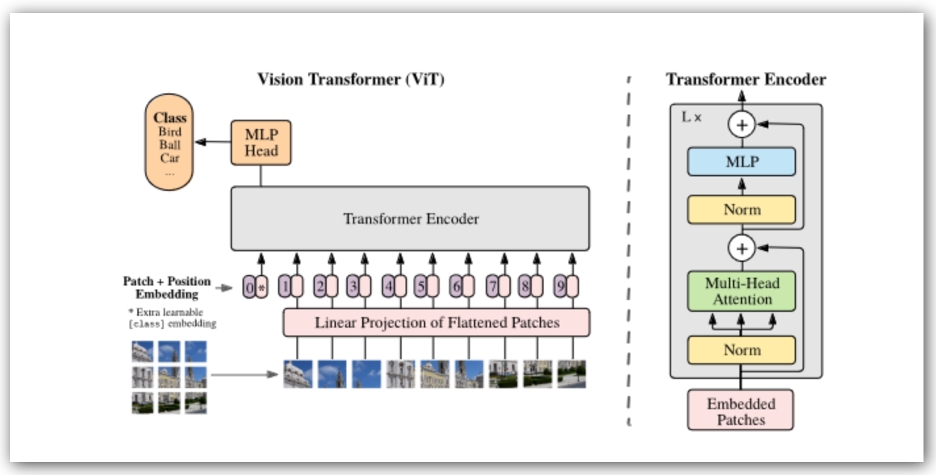

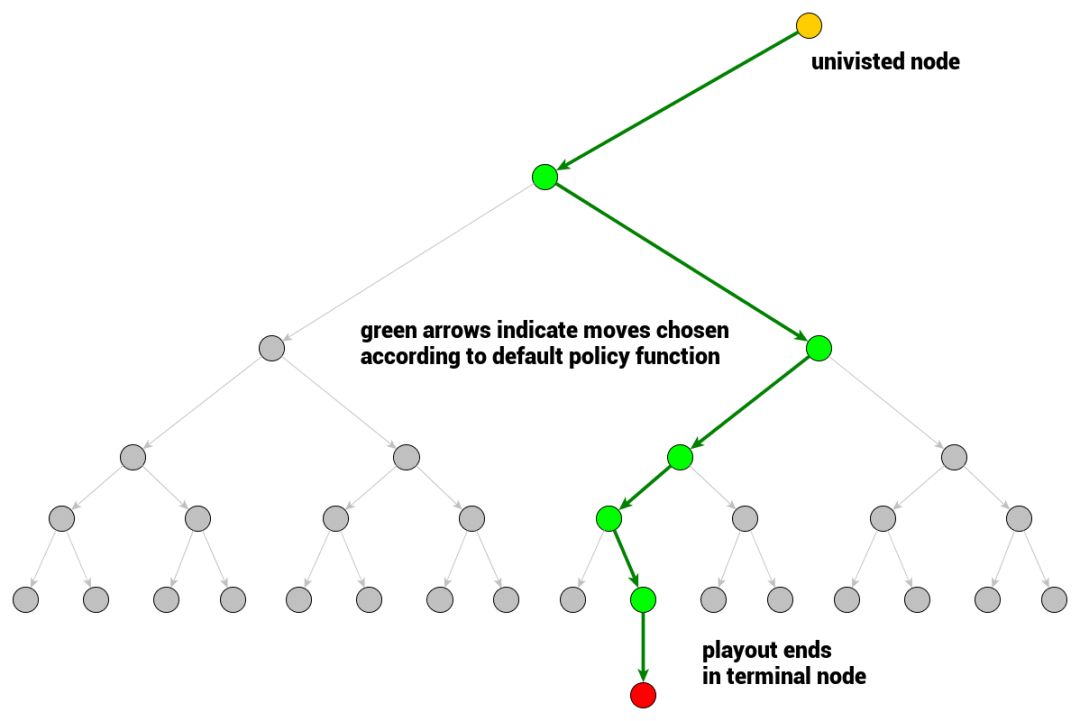

The algorithms behind AI help generate higher-quality strategies. In some respects, AI can match an experienced advisor by offering efficient, high-quality recommendations. First, training on large datasets gives AI a sample-size advantage over a typical human adjudicator, which improves the generalizability of AI-generated strategies and often makes them a better reflection of realistic operational conditions. Second, the algorithms themselves provide inherent advantages. AI methods used in wargaming commonly employ Monte Carlo Tree Search (MCTS), which cycles through four steps: selection, expansion, simulation, and backpropagation. Selection and expansion allow multiple options to be compared against one another, simulation plays an individual option out repeatedly to refine its estimated value, and backpropagation feeds each simulated result back up the tree so that later selections use updated statistics. These complementary mechanisms improve decision quality and help detect potential risks in a timely manner.
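The four MCTS steps can be sketched on a toy problem. The example below is an illustration only, not a wargaming system from the source: it assumes a hypothetical take-away game (players alternately remove 1 to 3 stones, and whoever takes the last stone wins) and uses UCB1 as the selection rule, which is one common choice the source does not specify. The names `Node`, `ucb1`, `rollout`, and `mcts` are invented for this sketch.

```python
import math
import random

class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones      # stones left for the player about to move
        self.parent = parent
        self.move = move          # move that led from the parent to this node
        self.children = []
        self.untried = [m for m in (1, 2, 3) if m <= stones]
        self.visits = 0
        self.wins = 0.0           # wins for the player who moved INTO this node

def ucb1(child, parent_visits, c=math.sqrt(2)):
    """Average reward plus an exploration bonus for rarely visited children."""
    return child.wins / child.visits + c * math.sqrt(math.log(parent_visits) / child.visits)

def rollout(stones, rng):
    """Play uniformly random moves; True if the player to move at the start wins."""
    turn = 0                      # 0 = the player to move at the start of the rollout
    while stones > 0:
        stones -= rng.randint(1, min(3, stones))
        if stones == 0:
            return turn == 0      # whoever took the last stone wins
        turn ^= 1
    return False                  # no stones at the start: the previous player already won

def mcts(root_stones, iterations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(root_stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes by UCB1.
        while not node.untried and node.children:
            node = max(node.children, key=lambda ch: ucb1(ch, node.visits))
        # 2. Expansion: add one untried move as a new child.
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout from the new node.
        mover_wins = rollout(node.stones, rng)
        # 4. Backpropagation: update statistics up to the root,
        #    flipping the reward at each level because players alternate.
        reward = 0.0 if mover_wins else 1.0
        while node is not None:
            node.visits += 1
            node.wins += reward
            reward = 1.0 - reward
            node = node.parent
    best = max(root.children, key=lambda ch: ch.visits)
    return best.move, root

if __name__ == "__main__":
    move, root = mcts(3, iterations=500)
    print("recommended move with 3 stones:", move)
```

With 3 stones on the table, taking all 3 wins immediately, so that child accumulates the most visits and is the move returned. Choosing the most-visited child rather than the highest average is a common convention because visit counts are less noisy than win rates on small samples.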

AI can consider more comprehensive factors and details

With parallel, high-speed processing, AI can take in more information than humans can during a wargame and can offer broader perspectives or surprising conclusions. Human attention is limited; psychologist Daniel Kahneman described attention as a finite cognitive resource. AI can process large volumes of information rapidly and provide a global, systemic view within a short timeframe. This helps adjudicators weigh risks, consequences, and costs when making decisions, leading to higher-quality outcomes. In addition, because algorithms such as MCTS keep simulating branches that have been explored only lightly, AI can surface strategies that humans might not anticipate and help adjudicators break out of cognitive fixations.
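One way to see why MCTS keeps returning to lightly explored branches is the UCB1 selection score, a common choice in MCTS variants (the source does not say which selection rule the surveyed systems use). Here w_i is the accumulated reward of branch i, n_i its visit count, N the visit count of its parent, and c an exploration constant; the numbers in the worked example are hypothetical.

```latex
\mathrm{UCB1}(i) = \frac{w_i}{n_i} + c\,\sqrt{\frac{\ln N}{n_i}}

% Worked example with N = 100 and c = \sqrt{2}:
% Branch A: mean reward 0.6, n_A = 90
\mathrm{UCB1}(A) = 0.6 + \sqrt{2}\,\sqrt{\tfrac{\ln 100}{90}} \approx 0.6 + 0.320 = 0.920
% Branch B: mean reward 0.5, n_B = 10
\mathrm{UCB1}(B) = 0.5 + \sqrt{2}\,\sqrt{\tfrac{\ln 100}{10}} \approx 0.5 + 0.960 = 1.460
```

Branch B has the lower average reward but the higher score, so it is simulated next. The bonus shrinks as a branch accumulates visits, which is how an option that looked unpromising at first can still receive enough simulation to reveal an unexpected strategy.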

AI can enable deeper human-machine collaboration

Wargaming requires human participation, and AI builds a bridge between humans and machines. Research on AI-enabled human-machine collaboration in wargaming generally follows two directions. One is the "human-in-the-loop" approach. Former U.S. Deputy Secretary of Defense Bob Work proposed a "Centaur" human-machine collaboration model that aims to establish tight human-machine coupling while keeping critical decisions in human hands; many U.S. studies on human-machine collaboration in wargaming are influenced by this model. The other is the "human-out-of-the-loop" approach. Work in this direction either trains human adjudicators against AI opponents to sharpen their decision skills or lets AI make simple, low-risk decisions automatically to increase overall efficiency. For example, in May 2020 DARPA launched the Constructive Machine-learning Battles with Adversary Tactics (COMBAT) program to build AI teams that act as Red forces in wargames against Blue forces.

Conflicts and Limitations of AI in Wargaming

Despite many benefits, AI is not a panacea for wargaming. Practical experience shows several limitations.

Black-box effect versus verifiability

Understanding how AI reaches a decision requires a high level of expertise, producing a serious black-box problem. When AI is involved in wargaming, non-experts cannot easily understand the AI's decision logic or verify the comprehensiveness of training data, which undermines the credibility of conclusions.

High cost versus the need for generality

AI remains a high-tech capability with substantial application costs. Wargame systems developed at high cost typically need a certain level of generality to justify expenses. Current AI models have limited generality because their performance depends on training data, which cannot cover every possible situation. If exercise rules change beyond the training dataset, models require retraining. High-cost, low-generalizability systems consume large budgets and cannot easily spread costs across projects, which hinders wider adoption.

Pursuit of high realism and large-scale systems versus controlling complexity

AI enables designers to create richer scenario elements and broader wargaming systems. However, excessive automatic generation of elements can overwhelm adjudicators with information, increasing difficulty of use and diverting attention from key issues. AI capabilities can also tempt designers to build larger, more comprehensive systems, which increases development difficulty and raises long-term operation and iteration costs.

Excessive workload reduction versus preserving the human factor

AI assistance can save adjudicators time and attention, allowing them to handle more tasks or concentrate on crucial issues. However, poor design may lead to excessive reliance on AI. Peter Perla, a leading wargame practitioner, argued in The Art of Wargaming that wargaming is most effective when adjudicators make decisions and must deal with the consequences. Without proper constraints, adjudicators may transfer decision-making authority to machines, undermining the goal of training decision-makers and failing to simulate real-world decision pressure, which harms exercise validity.

ALLPCB