Overview

Artificial intelligence (AI) and machine learning (ML) are increasingly being applied to low-power microcontrollers (MCUs) to enable edge AI/ML solutions. MCUs are already integral to many embedded systems, and because they are cost-effective, energy-efficient, and reliable, they are now being used to run AI/ML workloads as well. Integrated AI on MCUs is particularly useful in wearables, smart home devices, and industrial automation. The rise of TinyML, which focuses on running ML models on small, low-power devices, highlights progress in this area. TinyML enables on-device intelligence and real-time processing, and because data does not have to be sent to the cloud for inference, it reduces latency, which is critical in environments with limited or no connectivity.

What Is TinyML

TinyML refers to running machine learning models on small, low-power devices, especially MCU platforms optimized to run ML within constrained resource budgets. It enables edge devices to make intelligent decisions, process data in real time, and minimize latency. Techniques such as quantization and pruning reduce model size and speed up inference. Quantization lowers the numerical precision of the weights (and often the activations), for example from 32-bit floating point to 8-bit integers, which cuts weight storage by about a factor of four with minimal accuracy loss. Pruning removes less important weights or neurons to shrink the model further; it also improves latency when the runtime or hardware can take advantage of the removed structure. These techniques are essential for deploying ML on resource-constrained devices.
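
The arithmetic behind both techniques is small enough to sketch in plain Python. The example below assumes per-tensor affine int8 quantization, using the same `real = scale * (q - zero_point)` form that TensorFlow Lite uses for int8 models, and unstructured magnitude pruning. The function names are illustrative only and are not part of any library.

```python
"""Illustrative int8 affine quantization and magnitude pruning (pure Python, no libraries)."""


def quantize_int8(weights):
    """Quantize floats to int8 with one scale and zero point for the whole tensor."""
    # Extend the range to include 0.0 so that zero maps exactly to an integer code.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = round(-128 - w_min / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map int8 codes back to approximate floats: real = scale * (q - zero_point)."""
    return [scale * (v - zero_point) for v in q]


def magnitude_prune(weights, sparsity):
    """Set the smallest-magnitude fraction `sparsity` of the weights to zero."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    w = [-0.8, -0.1, 0.0, 0.05, 0.3, 1.2]
    q, scale, zp = quantize_int8(w)
    print(q, scale, zp)
    print(dequantize(q, scale, zp))
    print(magnitude_prune(w, 0.5))  # [-0.8, 0.0, 0.0, 0.0, 0.3, 1.2]
```

Each dequantized weight lands within half a quantization step (`scale / 2`) of the original, which is why accuracy usually drops only slightly. Unstructured pruning like this produces scattered zeros; it saves space only when the zeros are stored compactly, and it saves time only when the kernels skip them.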

Frameworks and Toolchains

Popular frameworks for ML model development include PyTorch and TensorFlow. PyTorch is an open-source ML library widely used to develop AI applications, including models that can be deployed on MCUs. On the TensorFlow side, TensorFlow Lite for Microcontrollers (TFLM) runs optimized TensorFlow Lite models on highly constrained MCU-class devices. Models are first converted into TensorFlow Lite's compact FlatBuffer format, which reduces model footprint and allows efficient inference on MCUs.

Arm's CMSIS-NN library provides optimized neural network kernels for Cortex-M processors. TFLM can use these kernels in place of its portable reference kernels, improving performance and lowering memory usage on Arm-based MCUs.
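
Optimized int8 kernels of this kind avoid floating point in the inner loop: they multiply int8 values, accumulate in 32-bit integers, and then rescale the result to the int8 output range with an integer multiplier and a shift. The Python sketch below imitates that arithmetic for a single dot product. It is not the CMSIS-NN API; the function names are hypothetical, and it assumes symmetric int8 weights (zero point 0), as in TensorFlow Lite's int8 scheme.

```python
"""Illustrative int8 dot product with int32 accumulation and fixed-point requantization."""
import math


def quantize_multiplier(real_multiplier):
    """Split a positive real multiplier into a Q31 integer and a power-of-two exponent."""
    mantissa, exponent = math.frexp(real_multiplier)  # real = mantissa * 2**exponent, 0.5 <= mantissa < 1
    q31 = round(mantissa * (1 << 31))
    if q31 == 1 << 31:  # mantissa rounded up to 1.0; renormalize
        q31 //= 2
        exponent += 1
    return q31, exponent


def requantize(acc, q31, exponent):
    """Approximate acc * real_multiplier using only integer multiply, add, and shift."""
    shift = 31 - exponent  # acc * q31 * 2**(exponent - 31)
    product = acc * q31
    if shift <= 0:
        return product << -shift
    return (product + (1 << (shift - 1))) >> shift  # round half up


def int8_dot(x_q, w_q, x_zero_point, x_scale, w_scale, out_scale, out_zero_point):
    """int8 activations and symmetric int8 weights (zero point 0) -> one int8 output."""
    acc = 0  # a 32-bit accumulator on the MCU; Python ints do not overflow
    for x, w in zip(x_q, w_q):
        acc += (x - x_zero_point) * w
    q31, exponent = quantize_multiplier(x_scale * w_scale / out_scale)
    out = requantize(acc, q31, exponent) + out_zero_point
    return max(-128, min(127, out))  # saturate to the int8 range
```

For example, `int8_dot([10, -20, 30], [5, 7, -3], 0, 0.02, 0.01, 0.05, 0)` accumulates -180 and rescales it by 0.004, giving -1, the same value that floating-point arithmetic rounds to.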

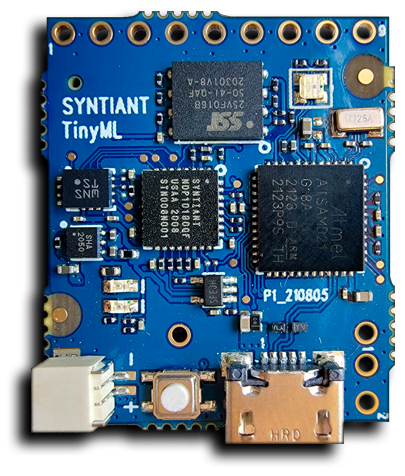

Hardware Accelerators

Some MCUs include dedicated AI/ML hardware accelerators that significantly improve model performance, enabling more complex applications to run faster and more efficiently on constrained devices. Operations such as matrix multiplication and convolution consist mostly of multiply-accumulate (MAC) operations, and accelerators speed them up by performing many MACs in parallel. This increases throughput while keeping power consumption low. Accelerators also optimize memory access patterns to reduce data transfer overhead. Because they take over the heavy arithmetic, the main CPU, typically a Cortex-M core, can enter a low-power mode or handle other tasks during inference. The result is higher performance, lower power consumption, and reduced latency.
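
Counting MACs shows why this parallelism matters. The helpers below give first-order MAC counts for common layer types and a naive cycle estimate. The function names are illustrative, and the "MACs per cycle" rates in the usage lines are hypothetical placeholders, not figures for any particular MCU or accelerator.

```python
"""First-order MAC counts for common layers (illustrative helper functions)."""


def conv2d_macs(out_h, out_w, in_ch, out_ch, k_h, k_w):
    """Standard convolution: every output element needs k_h * k_w * in_ch MACs."""
    return out_h * out_w * out_ch * k_h * k_w * in_ch


def depthwise_conv2d_macs(out_h, out_w, channels, k_h, k_w):
    """Depthwise convolution: each channel is filtered independently."""
    return out_h * out_w * channels * k_h * k_w


def dense_macs(in_features, out_features):
    """Fully connected layer: one MAC per weight."""
    return in_features * out_features


def estimated_cycles(macs, macs_per_cycle):
    """Cycles if the hardware sustained `macs_per_cycle` (ignores memory stalls and overhead)."""
    return -(-macs // macs_per_cycle)  # ceiling division


if __name__ == "__main__":
    macs = conv2d_macs(48, 48, 8, 16, 3, 3)
    print(macs)  # 2654208
    for rate in (1, 4, 16):  # hypothetical sustained MACs per cycle
        print(rate, estimated_cycles(macs, rate))
```

A single 3x3 convolution on a 48x48 feature map already needs about 2.65 million MACs, so the number of MACs the hardware can complete per cycle largely determines inference time.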

Practical Applications

TinyML has diverse and impactful applications. Examples include:

- Wake-word detection and visual wake-up: Devices trigger actions when a keyword is spoken or when a person is detected in a camera image. Smart speakers use this to activate on a wake word, and security cameras use it to start recording when a person appears.

- Predictive maintenance: Sensors on factory equipment monitor vibration and temperature; TinyML models detect anomalies and predict maintenance needs before failures, reducing downtime and maintenance costs.

- Gesture and activity recognition: Wearables with accelerometers and gyroscopes monitor activities such as walking, running, or specific gestures. TinyML models analyze sensor data in real time for fitness tracking or medical diagnostics.

- Smart agriculture: TinyML is used for environmental monitoring to analyze soil moisture and weather data, optimizing irrigation to improve crop yield and resource efficiency.

- Health monitoring: Devices requiring long battery life and real-time processing, such as continuous glucose monitors (CGMs), benefit from TinyML. Non-contact bed sensors can assess breathing patterns to support remote monitoring, aiding elder care and chronic disease management.
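
For the wake-word case in the first item, the model typically emits a keyword score for every short audio frame. Those per-frame scores are noisy, so a common post-processing step averages them over a few frames, compares the average against a threshold, and then ignores further frames for a short time so that one utterance produces a single detection. The sketch below shows that logic. The class name, window length, threshold, and refractory period are all hypothetical.

```python
"""Illustrative post-processing for wake-word detection: smoothing, threshold, refractory period."""
from collections import deque


class WakeWordTrigger:
    """Fires when the average keyword score over the last `window` frames reaches `threshold`."""

    def __init__(self, window=5, threshold=0.8, refractory_frames=10):
        self.scores = deque(maxlen=window)
        self.threshold = threshold
        self.refractory_frames = refractory_frames  # frames ignored after a detection
        self.cooldown = 0

    def update(self, score):
        """Feed one frame's keyword probability from the model; return True on a new detection."""
        self.scores.append(score)
        if self.cooldown > 0:
            self.cooldown -= 1
            return False
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough history yet
        if sum(self.scores) / len(self.scores) >= self.threshold:
            self.cooldown = self.refractory_frames
            return True
        return False
```

With a burst of ten high-scoring frames, the trigger fires once, as soon as the five-frame average crosses the threshold, rather than on every frame of the burst.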

Getting Started

To build a TinyML application, start with the fundamentals and select hardware that suits the use case. Depending on the application, sensors such as accelerometers, microphones, or cameras are needed to collect data. Set up a development environment by installing the vendor IDE, SDKs, and TinyML libraries.

Next, collect and prepare application-specific data. For example, a gesture recognition system requires accelerometer data for each gesture. Preprocess the collected data by filtering noise, normalizing values, and segmenting it into windows so that it is suitable for training. Train models using frameworks like TensorFlow or PyTorch on high-performance machines. After training, apply optimizations such as quantization and pruning.
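
The three preprocessing steps can be sketched for a single accelerometer axis as follows. The moving-average filter, z-score normalization, and the window size and step are illustrative choices, not requirements. Suitable values depend on the sample rate and on how long a gesture lasts.

```python
"""Illustrative preprocessing for a 1-D sensor stream: filter, normalize, window."""
import statistics


def moving_average(signal, width=3):
    """Low-pass noise filter: mean of the current and previous width-1 samples."""
    out = []
    for i in range(len(signal)):
        recent = signal[max(0, i - width + 1): i + 1]
        out.append(sum(recent) / len(recent))
    return out


def fit_normalizer(training_signal):
    """Compute normalization statistics on training data only."""
    return statistics.mean(training_signal), statistics.pstdev(training_signal) or 1.0


def normalize(signal, mean, std):
    """Z-score normalization with statistics reused from training (and later on the MCU)."""
    return [(x - mean) / std for x in signal]


def sliding_windows(signal, size, step):
    """Segment into fixed-length windows; step < size gives overlapping windows."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]
```

The normalization statistics are computed once on the training data and then stored with the model. The firmware must apply the same mean, standard deviation, filter, and window length to live sensor data, or the model will see inputs that differ from what it was trained on.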

Convert the optimized model into an MCU-friendly format, such as the TensorFlow Lite FlatBuffer format. Deploy the model to the MCU and integrate it with the application firmware. Test thoroughly to confirm that the deployed model meets its performance and accuracy requirements on the target hardware. Iterative refinement based on real-world performance is critical to improving TinyML applications.
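
One concrete test is to run the original float model and the converted model on the same held-out data and compare their results. The sketch below assumes the class predictions from both models have already been collected. The function names and the 1% maximum accuracy drop are hypothetical; the acceptable drop is an application decision.

```python
"""Illustrative acceptance check for a converted model against its float original."""


def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def compare_models(float_preds, quant_preds, labels, max_drop=0.01):
    """Report accuracy of both models, their agreement, and whether the drop is acceptable."""
    float_acc = accuracy(float_preds, labels)
    quant_acc = accuracy(quant_preds, labels)
    return {
        "float_accuracy": float_acc,
        "quantized_accuracy": quant_acc,
        "agreement": accuracy(quant_preds, float_preds),  # how often both models give the same class
        "acceptable": float_acc - quant_acc <= max_drop,
    }
```

Agreement between the two models is worth tracking alongside accuracy, because a low agreement rate can reveal a conversion problem even when both accuracies look similar.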

Using Silicon Labs Solutions for AI/ML on Microcontrollers

Silicon Labs provides solutions for implementing AI/ML on MCUs. EFR32/EFM32 (xG24, xG26, xG28) and SiWx917 series microcontrollers combine low power and strong performance, making them suitable for TinyML. The following sections summarize technical guidance for AI/ML on Silicon Labs MCUs.

Data Collection and Preprocessing

Data collection: Use sensors connected to the MCU to collect raw data, such as accelerometers, gyroscopes, and temperature sensors.

Preprocessing: Clean and preprocess the data for training, including noise filtering, normalization, and windowing. Silicon Labs provides data collection and preprocessing tools, and additional data collection tools are available from partner SensiML.

Model Training

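Model selection: Choose an ML model that suits the application. Common choices include small neural networks, such as convolutional networks, which TFLM can run, as well as classical models such as decision trees and support vector machines.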
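
Classical models are attractive on MCUs because their inference is cheap. A trained decision tree, for instance, can be exported as a few parallel arrays and evaluated with one comparison per tree level, with no inference runtime at all. The tree below is a hypothetical hand-written example. In practice the arrays would be generated from a trained model, and the loop translates directly into C.

```python
"""Illustrative decision-tree inference from flat arrays (a hypothetical 3-class tree)."""

# Node i tests feature FEATURE[i] against THRESHOLD[i]; FEATURE[i] == -1 marks a leaf.
FEATURE = [0, 1, -1, -1, -1]
THRESHOLD = [0.5, 2.0, 0.0, 0.0, 0.0]
LEFT = [1, 2, -1, -1, -1]    # next node when x[feature] <= threshold
RIGHT = [4, 3, -1, -1, -1]   # next node when x[feature] > threshold
LEAF_CLASS = [-1, -1, 0, 1, 2]


def predict(x):
    """Walk from the root to a leaf; cost is one comparison per tree level."""
    node = 0
    while FEATURE[node] != -1:
        if x[FEATURE[node]] <= THRESHOLD[node]:
            node = LEFT[node]
        else:
            node = RIGHT[node]
    return LEAF_CLASS[node]
```

For example, `predict([0.2, 1.0])` follows the left branch twice and returns class 0.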

Training: Train models on high-performance cloud servers or on local machines with GPUs using TensorFlow. Training feeds the preprocessed data into the model and iteratively adjusts the model's parameters to minimize its prediction error (the loss).
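
The loop that "adjusts parameters to minimize error" can be shown at its smallest scale. The sketch below trains a logistic-regression classifier with batch gradient descent on the cross-entropy loss, using a hand-derived gradient. It is a stand-in for what TensorFlow does at scale with automatic differentiation and optimizers, not TensorFlow's API. The learning rate, epoch count, and toy data in the usage note are illustrative.

```python
"""Illustrative training loop: logistic regression fitted by batch gradient descent."""
import math


def train_logistic(xs, ys, lr=1.0, epochs=1000):
    """Return weights and bias that reduce mean cross-entropy loss on (xs, ys)."""
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(xs, ys):
            z = sum(wj * xj for wj, xj in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
            err = p - y                      # d(loss)/dz for cross-entropy
            grad_w = [g + err * xj for g, xj in zip(grad_w, x)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]  # step against the gradient
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    """Class 1 if the linear score is positive, else class 0."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else 0
```

Usage: with `xs = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.3], [0.9, 1.0], [1.0, 0.8], [0.8, 0.9]]` and `ys = [0, 0, 0, 1, 1, 1]`, `train_logistic(xs, ys)` returns weights that classify all six training points correctly.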

Model conversion: Use the TensorFlow Lite converter to transform the trained model into a format compatible with TFLM. The converter produces a FlatBuffer, a compact binary that can be stored efficiently and accessed on MCUs. FlatBuffers can be read in place without a separate parsing or unpacking step, which is important for MCUs with limited memory and compute resources. Once in FlatBuffer format, the model can be executed on the MCU to perform inference. Conversion reduces model size and makes the model suitable for devices that typically have less than 3 MB of code space, around 256 KB of RAM, and clock rates below 1 GHz.
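
A quick budget check makes those figures concrete. In TFLM, the model itself usually sits in flash, while tensors are allocated at run time from a fixed RAM buffer called the tensor arena, whose required size is normally found by experiment or profiling. The sketch below treats 3 MB and 256 KB as binary megabytes and kilobytes, counts weight storage only (the FlatBuffer adds some metadata), and uses hypothetical parameter counts and arena sizes.

```python
"""Illustrative fit check against the memory budgets quoted above (3 MB code space, 256 KB RAM)."""

FLASH_BUDGET = 3 * 1024 * 1024  # bytes of code space, from the typical figure in the text
RAM_BUDGET = 256 * 1024         # bytes of RAM


def weight_bytes(num_params, bits_per_weight):
    """Storage for the weights alone; the FlatBuffer adds some metadata on top."""
    return num_params * bits_per_weight // 8


def fits(model_bytes, arena_bytes, firmware_flash=0, firmware_ram=0):
    """True if model plus firmware fit in flash and tensor arena plus firmware fit in RAM."""
    return (model_bytes + firmware_flash <= FLASH_BUDGET
            and arena_bytes + firmware_ram <= RAM_BUDGET)
```

A hypothetical one-million-parameter model needs 4,000,000 bytes as float32, which exceeds a 3 MB budget. Quantized to int8, it needs 1,000,000 bytes and fits. The firmware arguments are a reminder that the application and wireless stack share the same flash and RAM.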

Model Deployment

SDK integration: Integrate TFLM with the MCU using the vendor SDK.

Flashing the model: Program the converted model into MCU flash memory using the vendor IDE, which provides a user interface for programming Silicon Labs MCUs.
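
Before it can be flashed, the model usually has to be compiled into the firmware as a constant byte array. The shell command `xxd -i model.tflite` is a common way to generate such an array, and vendor SDKs may automate this step. The Python sketch below does the same job. It first checks for the `TFL3` file identifier that TensorFlow Lite writes at bytes 4 to 7 of its FlatBuffers. The function name, the `g_model` symbol, and the 16-byte alignment are assumptions. Because a namespace-scope `const` array has internal linkage in C++, other source files that reference it need a matching `extern` declaration in a shared header.

```python
"""Illustrative conversion of a .tflite FlatBuffer into C++ source for compiling into flash."""
import sys


def tflite_to_cpp_array(model: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Return C++ source defining the model bytes as an aligned const array plus its length."""
    # TFLite FlatBuffers carry the file identifier "TFL3" in bytes 4..7.
    if len(model) < 8 or model[4:8] != b"TFL3":
        raise ValueError("not a TensorFlow Lite FlatBuffer (missing 'TFL3' identifier)")
    lines = [f"alignas(16) const unsigned char {name}[] = {{"]
    for i in range(0, len(model), per_line):
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in model[i:i + per_line]) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {name}_len = {len(model)};")
    return "\n".join(lines)


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as f:  # path to a converted .tflite file
        print(tflite_to_cpp_array(f.read()))
```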

Optimization: Quantization and pruning, applied before or during conversion, reduce model size and improve inference performance on the MCU.

Running inference: Once deployed, the model accepts new input data and provides predictions on the MCU.
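
With an int8 model, each inference call is wrapped in a small amount of pre- and post-processing. Float features are quantized with the input tensor's scale and zero point, the model runs, and the int8 outputs are dequantized with the output tensor's parameters before the top class is selected. The sketch below assumes those parameters are already known. The function names and the stand-in model are hypothetical. The output parameters in the usage lines (scale 1/256, zero point -128) are the values TensorFlow Lite uses for int8 softmax outputs.

```python
"""Illustrative pre- and post-processing around one quantized inference call."""


def quantize_input(values, scale, zero_point):
    """Float features -> int8 codes using the input tensor's scale and zero point."""
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize_output(codes, scale, zero_point):
    """int8 outputs -> floats (for a softmax output, approximate class probabilities)."""
    return [scale * (q - zero_point) for q in codes]


def classify(features, run_model, input_qparams, output_qparams, labels):
    """Quantize, run the model, dequantize, and return the top label with its score."""
    codes = run_model(quantize_input(features, *input_qparams))
    scores = dequantize_output(codes, *output_qparams)
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best], scores[best]


if __name__ == "__main__":
    def fake_model(codes):
        """Stand-in for the on-device interpreter call; returns fixed int8 outputs."""
        return [-128, 100, -50]

    print(classify([0.2, -1.3], fake_model, (0.05, 0), (1 / 256, -128), ["idle", "walk", "run"]))
    # ('walk', 0.890625)
```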

Software Toolchain and Resources

Silicon Labs provides software tools that help developers build and deploy TinyML algorithms with popular frameworks such as TensorFlow. In addition to native TensorFlow support and optimized kernels for efficient inference, Silicon Labs collaborates with AI/ML tool providers such as SensiML and Edge Impulse to offer an end-to-end toolchain for embedded, wireless-optimized ML development.

Available resources include:

- Machine learning applications repository: a collection of embedded applications that use ML for industrial and commercial scenarios, supported by TFLM. See the SiliconLabs machine_learning_applications repository for examples.

- Machine Learning Toolkit (MLTK): a Python package with command-line utilities and scripts to support ML model development on Silicon Labs embedded platforms. It includes performance evaluation tools for ML models on embedded targets and supports TensorFlow training workflows.

- Reference datasets: MLTK includes datasets used by reference models.

- Audio feature generator: an audio front end that, once initialized from its configuration, streams data from the microphone and generates features for TensorFlow Lite models.

- MLPerf Tiny benchmark: an MLCommons benchmark suite that measures inference performance and energy efficiency on tiny, low-power devices, targeting deep embedded use cases such as IoT and smart sensing. Silicon Labs has participated in MLPerf Tiny submissions that demonstrate MLTK capabilities, and MLTK includes reference models for the benchmark's anomaly detection, image classification, keyword spotting, and visual wake words tasks.

Embedded Development Workflow

Developing ML-enabled embedded applications typically follows two workflows:

- Use the vendor IDE to create the embedded application and wireless firmware.

- Create the ML workflow that produces models and artifacts to be added to the embedded application.

Example Target Applications

Motion detection: Motion sensors in commercial buildings often control lighting based on occupancy. When occupants move only their hands, for example while typing, a motion sensor might not detect their presence, and the lights turn off. Adding an audio sensor lets an ML model recognize cues such as typing sounds, so the lighting system can keep the lights on while the room is still occupied.
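
The decision logic that combines the two sensors can be very simple once the audio model produces a score. The sketch below treats either motion or a typing score above a threshold as evidence of occupancy, and keeps the lights on for a hold time after the latest evidence. The `typing_score` input is assumed to come from a hypothetical audio classifier running on the MCU. The class name, hold time, and threshold are also hypothetical, and the rule is hand-written rather than learned.

```python
"""Illustrative occupancy fusion: motion OR recent typing sounds keep the lights on."""


class OccupancyFusion:
    def __init__(self, hold_s=600.0, typing_threshold=0.7):
        self.hold_s = hold_s                      # how long evidence keeps the room "occupied"
        self.typing_threshold = typing_threshold  # minimum audio-model score counted as typing
        self.last_evidence_s = None

    def update(self, t_s, motion_detected, typing_score):
        """Return True if the lights should stay on at time t_s (seconds)."""
        if motion_detected or typing_score >= self.typing_threshold:
            self.last_evidence_s = t_s
        return self.last_evidence_s is not None and t_s - self.last_evidence_s <= self.hold_s
```

With a 60-second hold, a motion event at t = 0 followed only by typing at t = 100 keeps the lights on until t = 160, whereas motion alone would have let them switch off after t = 60.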

Predictive maintenance: Use EFR32 MCUs to build predictive maintenance systems that collect vibration and temperature data with connected sensors, train models to predict faults, and deploy models on MCUs for real-time monitoring.
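
A useful first step, and a baseline for any trained model, is to compare a vibration feature against statistics recorded while the machine is known to be healthy. The sketch below computes the root-mean-square (RMS) amplitude of each window of accelerometer samples and flags windows that fall more than `k` standard deviations from the healthy baseline. This is a deliberately simple statistical stand-in for a trained anomaly-detection model, such as an autoencoder. The class name and the default `k` are hypothetical.

```python
"""Illustrative vibration anomaly check: RMS per window compared against a healthy baseline."""
import math
import statistics


def rms(window):
    """Root-mean-square amplitude of one window of accelerometer samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


class VibrationMonitor:
    def __init__(self, healthy_windows, k=4.0):
        baseline = [rms(w) for w in healthy_windows]
        self.mean = statistics.mean(baseline)
        self.std = statistics.pstdev(baseline) or 1e-9  # avoid dividing by zero
        self.k = k  # how many standard deviations count as anomalous

    def score(self, window):
        """Distance of this window's RMS from the baseline, in standard deviations."""
        return abs(rms(window) - self.mean) / self.std

    def is_anomalous(self, window):
        return self.score(window) > self.k
```

The per-window RMS is cheap enough to compute continuously on an MCU. A trained model becomes worthwhile when faults show up as changes in the shape of the vibration spectrum rather than in overall amplitude.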

Health monitoring: Build wearable health monitors using EFM32 MCUs to collect vital signs like heart rate and body temperature. Train models to detect anomalies and deploy them on MCUs for real-time analysis.

Smart agriculture: Develop intelligent irrigation systems using MCUs to collect soil moisture and weather data, train models to optimize water usage, and deploy models on MCUs to control irrigation.

Conclusion

MCUs have evolved from simple controllers into capable platforms for AI. AI-optimized MCUs make new applications for battery-powered smart devices feasible. From smart home devices to industrial sensors, AI-driven MCUs are reshaping the future of embedded systems.

ALLPCB
