Overview

This article reviews progress in on-path storage systems. It first describes the hardware architecture and performance characteristics of programmable network devices, then summarizes two major challenges for building high-performance on-path storage systems: the division of responsibilities between software and hardware, and system fault tolerance. The review classifies existing on-path storage systems by the tasks executed on programmable network devices (caching, coordination, scheduling, aggregation), and analyzes design challenges and software techniques through representative system examples.

The survey concludes by identifying key research directions for on-path storage systems, including switch-NIC coordination, security, multi-tenancy, and automatic offload.

Key Points

- Introduces hardware architectures and performance characteristics of programmable network devices, and summarizes two major challenges for high-performance on-path storage systems.

- Classifies existing on-path storage systems based on tasks executed by programmable network devices.

- Identifies research directions that require further exploration for on-path storage systems.

Storage System Fundamentals

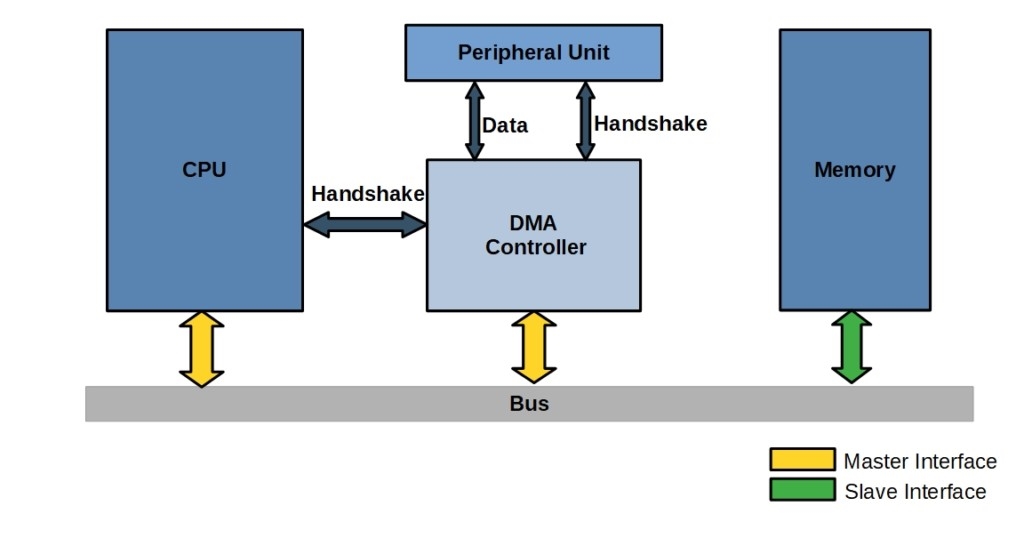

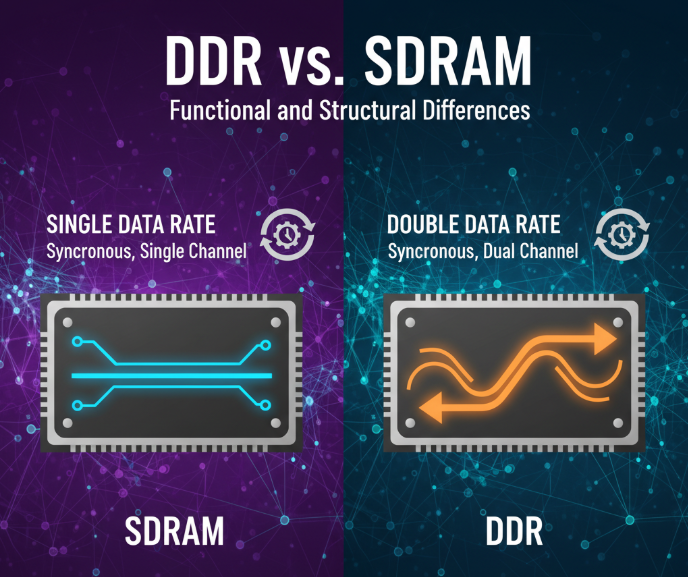

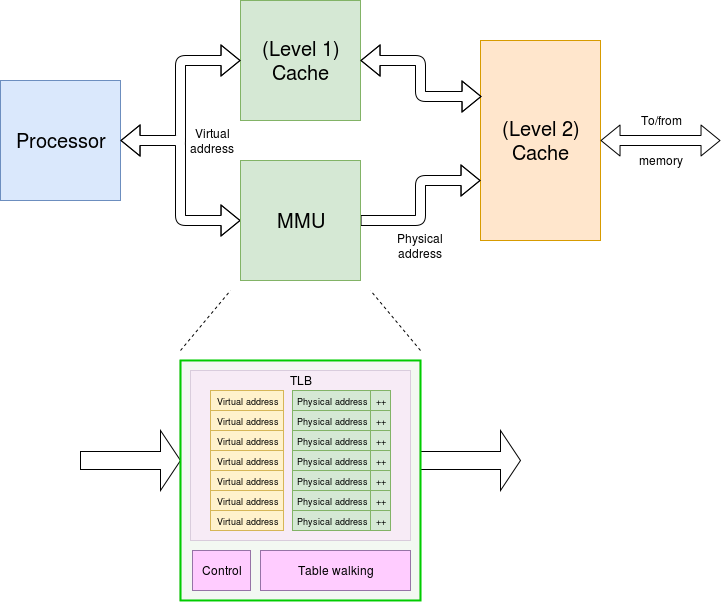

Common intelligent NICs use one of four programmable chip types: ARM CPUs, network processors (NP), application-specific integrated circuits (ASICs), and field-programmable gate arrays (FPGAs). Each option has trade-offs in performance, usability, and expressiveness, as summarized in the original paper.

Research on extending network hardware expressiveness can be grouped into three categories, as described in the paper.

Fault Tolerance for Programmable Switches

One protocol records client update requests in the switch's persistent log area and returns a completion message to the client early, while servers asynchronously process the update; this reduces client request latency. RedPlane achieves fault tolerance by replicating modification requests to multiple servers, which store the modified data in local DRAM to tolerate switch crashes.
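The early-acknowledgment idea can be sketched as a small Python model. This is only a sketch under stated assumptions. The source does not name the protocol or describe its log format, so `LoggingSwitch`, `Server`, the fixed log capacity, and the `RETRY` response are all hypothetical. The model shows one consequence of acknowledging an update before any server applies it: reads must check the log so that the acknowledged value stays visible.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Server:
    """Backend server that eventually applies every logged update."""
    store: dict = field(default_factory=dict)

    def apply(self, key, value):
        self.store[key] = value


@dataclass
class LoggingSwitch:
    """Hypothetical switch with a bounded log area (the capacity is an assumption)."""
    capacity: int
    log: deque = field(default_factory=deque)

    def handle_update(self, key, value):
        # Record the update in the switch log and acknowledge the client at once;
        # no server has applied it yet.
        if len(self.log) >= self.capacity:
            return "RETRY"  # log full: the client must retry later
        self.log.append((key, value))
        return "ACK"

    def read(self, key, server):
        # Acknowledged-but-unapplied updates must stay visible, so search the
        # log newest-first before falling back to the server's copy.
        for logged_key, value in reversed(self.log):
            if logged_key == key:
                return value
        return server.store.get(key)

    def drain(self, server, max_entries=None):
        # Asynchronous step: hand logged updates to the server in arrival order.
        count = len(self.log) if max_entries is None else min(max_entries, len(self.log))
        for _ in range(count):
            key, value = self.log.popleft()
            server.apply(key, value)
        return count


switch, server = LoggingSwitch(capacity=2), Server()
print(switch.handle_update("a", 1))   # ACK
print(switch.handle_update("a", 2))   # ACK
print(switch.handle_update("b", 3))   # RETRY (log full)
print(switch.read("a", server))       # 2, served from the log
switch.drain(server)
print(server.store)                   # {'a': 2}
```

A bounded log is the main design constraint here: switch memory is small, so the client-visible latency win only holds while the servers drain the log fast enough.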

On-path Storage System Categories

On-path storage systems execute storage tasks in the data transfer path using programmable network devices, changing the traditional CPU-centric architecture. Based on the tasks performed by programmable network devices, on-path storage systems fall into four categories: on-path data caching, on-path data coordination, on-path data scheduling, and on-path data aggregation. The following sections introduce these four categories and analyze representative systems.

On-path Data Caching

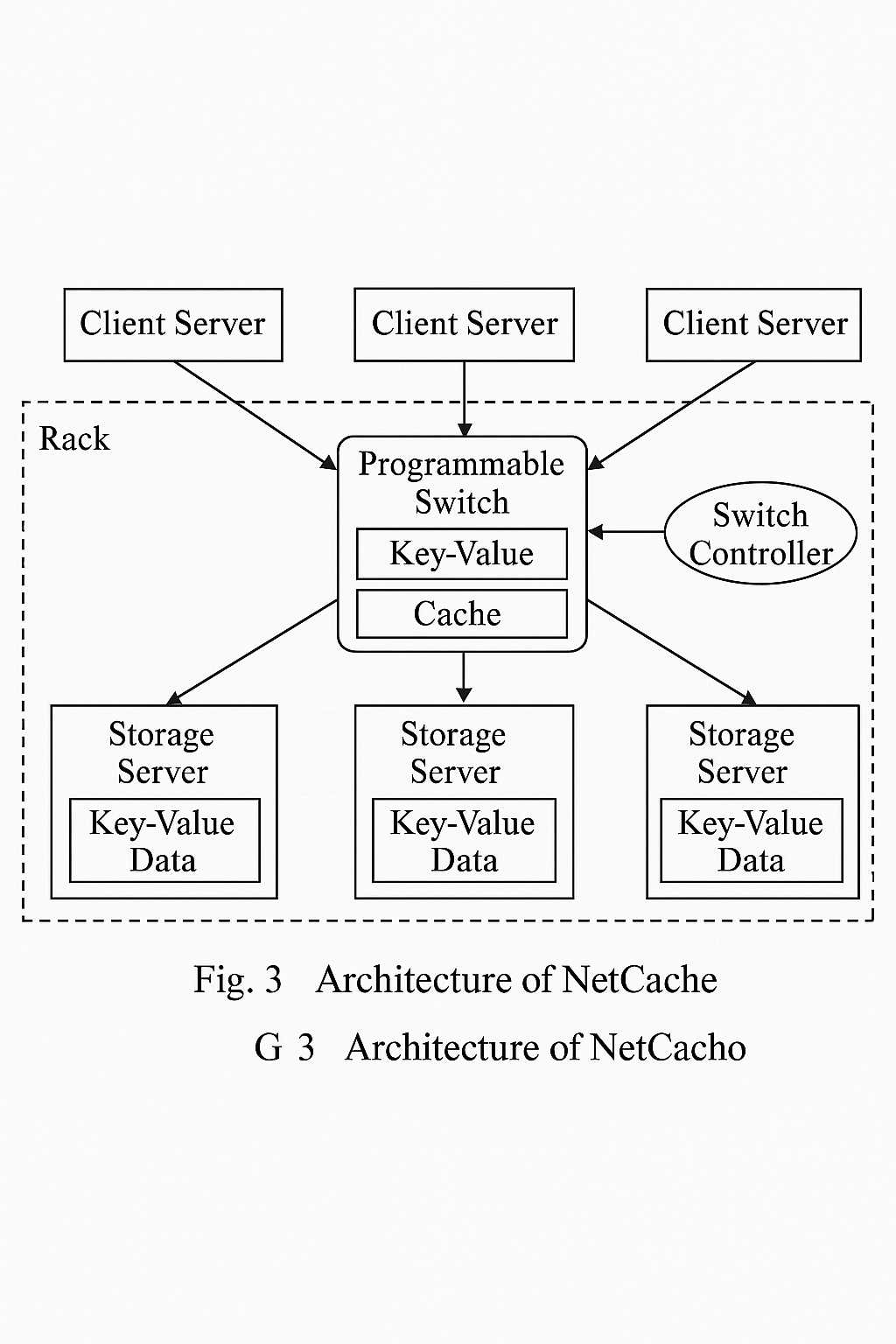

The NetCache architecture comprises four components: 1) storage servers that store key-value data in DRAM; 2) client servers that issue key-value requests (Get, Put, Del); 3) a programmable switch that caches hot key-value pairs to serve Get operations and collects hot-key statistics; 4) a switch controller that adds or removes key-value pairs from the switch. Backend storage servers, the programmable switch, and the switch controller are located in the same rack, so all client requests traverse that switch.
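A rough model of the read and write paths just described follows. It rests on several assumptions. A dictionary stands in for the storage servers, and a small count-min sketch stands in for the switch's hot-key statistics. `SwitchCache`, `Controller`, the threshold, and the invalidate-on-write policy are simplifications chosen for illustration, not NetCache's data-plane implementation.

```python
import zlib


class CountMinSketch:
    """Approximate per-key counters; estimates never undercount."""

    def __init__(self, width=64, depth=3):
        self.width, self.depth = width, depth
        self.rows = [[0] * width for _ in range(depth)]

    def _index(self, key, row):
        # One hash per row, derived by salting CRC32 with the row number.
        return zlib.crc32(f"{row}:{key}".encode()) % self.width

    def add(self, key):
        for row in range(self.depth):
            self.rows[row][self._index(key, row)] += 1

    def estimate(self, key):
        return min(self.rows[row][self._index(key, row)] for row in range(self.depth))


class SwitchCache:
    """Hypothetical model of the switch: cache lookups plus hot-key statistics."""

    def __init__(self, capacity, hot_threshold):
        self.capacity, self.hot_threshold = capacity, hot_threshold
        self.cache = {}               # key -> value held in switch memory
        self.sketch = CountMinSketch()
        self.hot_candidates = set()   # uncached keys reported to the controller

    def get(self, key, backend):
        if key in self.cache:
            return self.cache[key], "switch"   # hit: no server involved
        self.sketch.add(key)                   # miss: count the key
        if self.sketch.estimate(key) >= self.hot_threshold:
            self.hot_candidates.add(key)
        return backend.get(key), "server"

    def put(self, key, value, backend):
        # Simplified write path: drop any cached copy so later Gets cannot
        # return stale data, then forward the write to the storage server.
        self.cache.pop(key, None)
        backend[key] = value


class Controller:
    """Hypothetical controller that installs reported hot keys into the switch."""

    def refresh(self, switch, backend):
        for key in sorted(switch.hot_candidates):
            if len(switch.cache) >= switch.capacity:
                break
            if key in backend:
                switch.cache[key] = backend[key]
        switch.hot_candidates.clear()


backend = {"k1": "v1", "k2": "v2"}
switch = SwitchCache(capacity=1, hot_threshold=3)
for _ in range(3):
    switch.get("k1", backend)          # three misses make k1 a hot candidate
Controller().refresh(switch, backend)
print(switch.get("k1", backend))       # ('v1', 'switch')
switch.put("k1", "v1-new", backend)
print(switch.get("k1", backend))       # ('v1-new', 'server')
```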

Because the switch offers high aggregate bandwidth, NetCache improves throughput by an order of magnitude under skewed, Get-intensive workloads. NetCache is limited to a single rack; DistCache extends it to large clusters using independent cache allocation and two-stage randomized selection. NetChain implements reliable key-value storage with chain replication, storing each key-value pair in the SRAM of multiple switches.
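Chain replication, which NetChain applies across switches, can be sketched directly. The Python below is a generic, synchronous model with hypothetical class names. It does not reproduce NetChain's packet formats or its failure-recovery protocol. Writes enter at the head and propagate to the tail. Reads are served by the tail, which only holds values that every replica has already stored.

```python
class ChainNode:
    """One replica in the chain (a switch, in NetChain's setting)."""

    def __init__(self, name):
        self.name = name
        self.store = {}
        self.successor = None

    def write(self, key, value):
        # Apply locally, then pass the write down the chain; the tail's name is the ack.
        self.store[key] = value
        if self.successor is None:
            return self.name
        return self.successor.write(key, value)


class Chain:
    def __init__(self, names):
        self.nodes = [ChainNode(n) for n in names]
        self._link()

    def _link(self):
        for node, nxt in zip(self.nodes, self.nodes[1:] + [None]):
            node.successor = nxt

    def write(self, key, value):
        return self.nodes[0].write(key, value)   # writes enter at the head

    def read(self, key):
        return self.nodes[-1].store.get(key)     # reads are served by the tail

    def remove(self, name):
        # Fail-stop repair: splice the failed node out and relink its neighbours.
        self.nodes = [n for n in self.nodes if n.name != name]
        self._link()


chain = Chain(["sw1", "sw2", "sw3"])
print(chain.write("x", 1))   # sw3 (the tail acknowledges)
print(chain.read("x"))       # 1
chain.remove("sw3")
print(chain.read("x"))       # 1, sw2 already stored it
```

In a real chain, writes are pipelined rather than synchronous. Removing a middle node then also requires resending in-flight updates, a step this sketch skips.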

On-path Data Coordination

Concordia, proposed by Tsinghua University, uses programmable switches to accelerate cache coherence protocols for distributed shared memory. Distributed shared memory systems built on high-speed networks (for example, RDMA) support large-scale in-memory computation such as graph processing. Even with high network bandwidth, remote memory access is still slower than local memory access, so servers maintain local caches and must keep them coherent. Traditional coherence protocols require expensive distributed coordination: directory-based protocols introduce multiple network round trips and create bottlenecks for hot data, while broadcast-based protocols waste network and CPU resources. Concordia leverages the switch's position at the center of the network to design an efficient on-path coherence protocol. The original paper includes an architecture diagram.
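The directory-based approach can be made concrete with a minimal directory for a simplified MSI-style protocol, written as if it lived in the switch. This is a generic textbook directory, not Concordia's protocol. `SwitchDirectory` and its message tuples are hypothetical, and data transfer is not modeled. The sketch suggests why a switch is a natural home for the directory. Every request already passes through it, so it can update the sharer set and emit invalidations without an extra round trip to a separate home node.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DirEntry:
    owner: Optional[str] = None                # node holding the block in Modified state
    sharers: set = field(default_factory=set)  # nodes holding the block in Shared state


class SwitchDirectory:
    """Hypothetical in-switch directory; returns the coherence messages to send."""

    def __init__(self):
        self.entries = {}

    def read(self, node, block):
        entry = self.entries.setdefault(block, DirEntry())
        msgs = []
        if entry.owner is not None and entry.owner != node:
            # A remote writer holds the block: it must write back and downgrade to Shared.
            msgs.append(("downgrade", entry.owner))
            entry.sharers.add(entry.owner)
            entry.owner = None
        if entry.owner != node:
            entry.sharers.add(node)
        return msgs

    def write(self, node, block):
        entry = self.entries.setdefault(block, DirEntry())
        # Every other copy must be invalidated before the writer becomes owner.
        msgs = [("invalidate", s) for s in sorted(entry.sharers) if s != node]
        if entry.owner is not None and entry.owner != node:
            msgs.append(("invalidate", entry.owner))
        entry.sharers.clear()
        entry.owner = node
        return msgs


d = SwitchDirectory()
d.read("A", "blk")
d.read("B", "blk")
print(d.write("C", "blk"))   # [('invalidate', 'A'), ('invalidate', 'B')]
print(d.read("A", "blk"))    # [('downgrade', 'C')]
```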

Other works also use programmable switches for coherence and coordination. Pegasus from the University of Washington addresses load imbalance by replicating hot objects across multiple servers instead of caching them; the switch maintains a list of the servers holding each hot object and updates it on writes so that subsequent reads see fresh data. Mind from Yale targets disaggregated memory, where compute nodes access remote memory pools and cache data locally; it uses programmable switches to keep these caches consistent across nodes. NetLock from Johns Hopkins implements a high-performance lock manager in switch memory; it improves lock throughput and maintains per-lock queues that serve conflicting lock requests fairly and reduce tail latency.
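Per-lock queueing, as described for NetLock, can be illustrated with one FIFO queue per lock. The sketch below is hypothetical, and only exclusive locks are modeled. Granting the lock only to the request at the head of its queue is what serves conflicting requests in arrival order.

```python
from collections import deque


class LockTable:
    """Hypothetical lock manager state: one FIFO queue per exclusive lock."""

    def __init__(self):
        self.queues = {}

    def acquire(self, lock_id, client):
        queue = self.queues.setdefault(lock_id, deque())
        queue.append(client)
        return queue[0] == client          # granted only if at the head of the queue

    def release(self, lock_id, client):
        queue = self.queues.get(lock_id)
        if not queue or queue[0] != client:
            raise ValueError(f"{client} does not hold {lock_id}")
        queue.popleft()
        if not queue:
            del self.queues[lock_id]       # free the slot for other locks
            return None
        return queue[0]                    # next holder, to be notified of the grant


locks = LockTable()
print(locks.acquire("L", "c1"))   # True
print(locks.acquire("L", "c2"))   # False (queued)
print(locks.release("L", "c1"))   # c2
```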

On-path Data Scheduling

SwitchTx, from Tsinghua University, scales on-path distributed transactions by abstracting the transaction coordination process as multiple collect-and-distribute operations and offloading them to multiple programmable switches in the cluster, avoiding single-point bottlenecks present in systems like Eris. The system components and transaction flow are illustrated in the original paper.

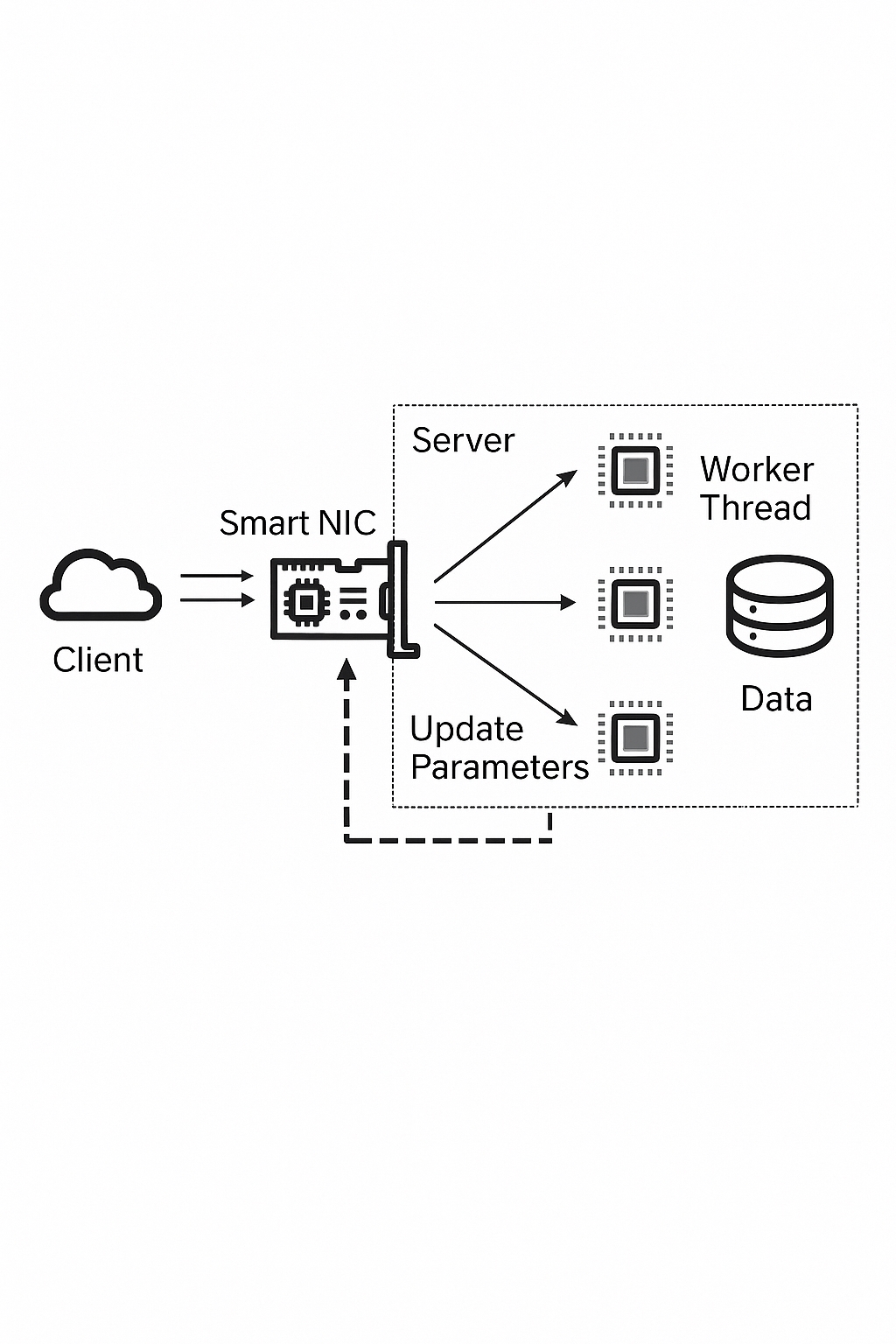
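SwitchTx's collect-and-distribute abstraction can be read as a two-phase-commit vote gathered by several switches instead of one coordinator. The sketch below assumes that reading. Each hypothetical `AggregatingSwitch` combines the votes of the participants behind it. The partial results are then combined into a commit or abort decision, and that decision is distributed to every participant. Names and message shapes are illustrative, not SwitchTx's design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    txn_id: int
    participant: str
    ok: bool


class AggregatingSwitch:
    """Hypothetical switch that collects prepare votes from the participants behind it."""

    def __init__(self, name, participants):
        self.name = name
        self.expected = frozenset(participants)
        self.pending = {}  # txn_id -> (voters seen so far, all votes ok so far)

    def collect(self, vote):
        voters, all_ok = self.pending.get(vote.txn_id, (frozenset(), True))
        voters, all_ok = voters | {vote.participant}, all_ok and vote.ok
        if voters == self.expected:
            self.pending.pop(vote.txn_id, None)
            return all_ok          # partial result forwarded upstream
        self.pending[vote.txn_id] = (voters, all_ok)
        return None                # still waiting for local participants


def run_commit(txn_id, groups, votes):
    """Collect votes through several switches, then distribute one decision."""
    switches = [AggregatingSwitch(name, parts) for name, parts in groups.items()]
    owner = {p: sw for sw in switches for p in sw.expected}
    partials = []
    # Collect phase: each vote is handled by the switch in front of its participant.
    for participant, ok in votes.items():
        result = owner[participant].collect(Vote(txn_id, participant, ok))
        if result is not None:
            partials.append(result)
    if len(partials) != len(switches):
        raise RuntimeError("some participants have not voted")
    decision = "COMMIT" if all(partials) else "ABORT"
    # Distribute phase: every participant learns the same outcome.
    return {p: decision for p in owner}


groups = {"sw1": ["p1", "p2"], "sw2": ["p3"]}
print(run_commit(1, groups, {"p1": True, "p2": True, "p3": True}))
```

Because each switch only aggregates the votes of its own participants, no single device sees every vote. This is the property the single-point-bottleneck argument relies on.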

Xenic from the University of Washington offloads transaction coordination, concurrency control, and data indexing to on-path intelligent NICs based on ARM CPUs. Xenic keeps transient transaction state on the client NIC and coordinates the transaction there, reducing communication latency. Server NICs keep hot data and lock metadata in NIC memory, eliminating PCIe overhead for remote data access, and use the NIC's ARM cores for complex data access to reduce server CPU load. Xenic uses a Robin Hood hash index that spans NIC memory and host memory to reduce the number of DMA operations per remote access. Replica operations during transactions are performed entirely on the NIC, further reducing host CPU overhead. Several distributed file systems also offload replica operations to intelligent NICs.
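Robin Hood hashing is a standard open-addressing scheme. On a collision, the entry that is farther from its home slot keeps the slot, which keeps probe lengths short and even. That property matters when each extra probe may cost a DMA over PCIe. The Python table below is a generic single-memory sketch with a hypothetical `RobinHoodTable` class. It does not model the split between NIC memory and host memory that Xenic adds.

```python
class RobinHoodTable:
    """Open-addressing hash table using Robin Hood displacement (generic sketch)."""

    def __init__(self, capacity=8):
        self.capacity = capacity
        self.size = 0
        self.slots = [None] * capacity  # each slot: (key, value, probe_distance) or None

    def _home(self, key):
        return hash(key) % self.capacity

    def _find(self, key):
        idx = self._home(key)
        for dist in range(self.capacity):
            slot = self.slots[idx]
            # Robin Hood invariant: once we reach an empty slot or an entry closer
            # to its home than we are to ours, the key cannot be further along.
            if slot is None or slot[2] < dist:
                return -1
            if slot[0] == key:
                return idx
            idx = (idx + 1) % self.capacity
        return -1

    def get(self, key, default=None):
        idx = self._find(key)
        return default if idx < 0 else self.slots[idx][1]

    def insert(self, key, value):
        idx = self._find(key)
        if idx >= 0:                       # existing key: update in place
            k, _, dist = self.slots[idx]
            self.slots[idx] = (k, value, dist)
            return
        if self.size == self.capacity:
            raise RuntimeError("table full")
        idx, dist = self._home(key), 0
        while True:
            slot = self.slots[idx]
            if slot is None:
                self.slots[idx] = (key, value, dist)
                self.size += 1
                return
            if slot[2] < dist:
                # The incoming entry is "poorer" (farther from home): it takes
                # the slot, and the displaced entry continues probing.
                self.slots[idx] = (key, value, dist)
                key, value, dist = slot
            idx = (idx + 1) % self.capacity
            dist += 1


table = RobinHoodTable(capacity=8)
for k, v in [(1, "one"), (0, "zero"), (8, "eight")]:
    table.insert(k, v)
print(table.slots[:3])   # [(0, 'zero', 0), (8, 'eight', 1), (1, 'one', 1)]
```

Inserting key 8 (home slot 0) displaces key 1 from slot 1 because key 1 sits at its home (distance 0) while key 8 has already probed one slot.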

R2P2 and RackSched support load-balanced scheduling of requests among servers but do not consider data consistency: the latest version of some data may reside only on certain servers, so the switch cannot route RPCs for that data to arbitrary servers. Harmonia and FLAIR use programmable switches to perform consistency-aware request scheduling for replication protocols, in which data is stored redundantly across servers (one primary and multiple replicas) and kept consistent through a consensus protocol. The challenge is integrating the switch with the replication protocol so that it can tell which servers hold the latest version of the data a read requests. Harmonia maintains a fine-grained hash table in the switch to track items undergoing concurrent writes; reads for these items are routed to the primary, while other reads may be scheduled to any replica. FLAIR partitions the key space and records the stability state of each partition in the switch: partitions with ongoing writes are marked unstable and their reads are routed to the primary, while reads for stable partitions are load-balanced across replicas.

On-path Data Aggregation

Microsoft Research proposed SwitchML, which offloads model parameter aggregation during machine learning training to programmable switches. Because programmable switches lack floating-point support, SwitchML uses a co-design in which servers quantize floating-point parameters to fixed-point values before aggregation, so the switch only has to add integers. Tsinghua University proposed ATP, which uses multiple switches to accelerate training and supports multi-tenant training. King Abdullah University of Science and Technology proposed OmniReduce, which targets sparse training workloads and offloads part of the aggregation algorithm to programmable switches. Other works, such as iSwitch and Flare, design custom switch hardware to accelerate aggregation: iSwitch uses an FPGA and Flare uses PsPIN hardware. Flare also supports user-defined aggregation data types.
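The fixed-point co-design described for SwitchML can be sketched as follows. Workers scale their values by a shared power-of-two factor and round them to integers. The switch adds integers only, and workers divide the aggregate by the same factor. The rule in `choose_scale` is an assumption for illustration: it leaves enough headroom that the sum over all workers cannot overflow a signed 32-bit register. SwitchML's actual scaling protocol may differ.

```python
import math


def choose_scale(worker_vectors, bits=32):
    # Assumed rule: the largest power of two such that the rounded values of
    # all n workers cannot overflow a signed `bits`-wide integer when summed.
    n = len(worker_vectors)
    max_abs = max(abs(v) for vec in worker_vectors for v in vec) or 1.0
    limit = ((1 << (bits - 1)) - n) / (n * max_abs)
    return 2.0 ** math.floor(math.log2(limit))


def to_fixed(values, scale):
    # Worker side: float -> fixed-point integer.
    return [int(round(v * scale)) for v in values]


def switch_aggregate(int_vectors, bits=32):
    # Switch side: element-wise integer addition, nothing else.
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    total = [0] * len(int_vectors[0])
    for vec in int_vectors:
        for i, x in enumerate(vec):
            total[i] += x
    if any(not lo <= t <= hi for t in total):
        raise OverflowError("aggregate exceeds the switch's integer width")
    return total


def from_fixed(ints, scale):
    # Worker side: fixed-point aggregate -> float.
    return [x / scale for x in ints]


workers = [[0.5, -1.25, 3.0], [0.25, 2.0, -1.0]]
scale = choose_scale(workers)
summed = switch_aggregate([to_fixed(w, scale) for w in workers])
print(from_fixed(summed, scale))   # [0.75, 0.75, 2.0]
```

The rounding step is where precision is lost. A larger scale keeps more fractional bits, but it must stay small enough that the integer sum fits the switch's register width.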

Summary and Outlook

The survey analyzes challenges in building on-path storage systems starting from the characteristics of programmable network hardware (programmable switches and intelligent NICs), and categorizes existing research in detail. Research to date has used programmable network hardware to accelerate storage system modules including caching, coordination, scheduling, and aggregation, achieving significant performance improvements. However, research must continue in four areas before on-path storage systems can become widely adopted in data centers and supercomputing centers.

1. Switch and NIC Coordination

Most current on-path storage systems use programmable switches or intelligent NICs in isolation and cannot provide comprehensive storage function offload.

2. Multi-tenancy

When on-path storage systems are deployed in cloud environments, they must efficiently support multi-tenancy, providing resource sharing and isolation among tenants.

3. Security

With increasing use of encryption for network data, programmable switches and intelligent NICs must efficiently process encrypted data.

4. Automatic Offload

Building a production-quality, highly reliable on-path storage system from scratch is engineering intensive. Automatically offloading modules from mature storage systems such as Memcached or Ceph to programmable switches and NICs would allow reuse of existing code while capturing the performance benefits of programmable network devices.

ALLPCB
