Overview

RISC-V processors have drawn wide attention for their flexibility and extensibility, but without an efficient verification strategy, design defects can hinder broader adoption. Before RISC-V, processor verification expertise was concentrated in a few commercial vendors that developed proprietary flows and tools. The rise of the open RISC-V ISA and many open implementations has increased interest and created demand for appropriate tools and expertise.

RISC-V International has announced several new extensions, and the ISA encourages users to develop their own extensions and modifications. Developing an extension can be relatively fast and straightforward; verifying it is not.

There are few standard or open tools to help with processor verification. RISC-V is an open ISA that anyone can implement, but organizations entering the RISC-V market understand that avoiding license fees does not make it a low-cost option. If a team intends to succeed with RISC-V, there are no shortcuts in verification.

Many people mistakenly equate processor verification with testing whether instructions execute correctly. They build test generators or employ verification suites, but the real challenges lie in the microarchitecture and the pipeline. There is no standard method, and public discussion about microarchitecture verification is limited.

The effort needed to verify a RISC-V CPU is often underestimated. A RISC-V core can carry significant control and data-path complexity. Common techniques for higher performance, such as speculative execution and out-of-order execution, increase that complexity and can expose security vulnerabilities of the kind demonstrated by Spectre and Meltdown. Many RISC-V developers come from traditional CPU backgrounds and reuse familiar techniques, but there are important ecosystem differences.

RISC-V microarchitecture verification must balance openness and flexibility with diversity and complexity. As the ecosystem matures, addressing these challenges is key to establishing RISC-V as a reliable and secure alternative to other architectures such as Arm and x86. Community-driven approaches, ongoing innovation, and attention to standardization will continue to shape the RISC-V verification environment.

Beyond Random Testing

Processor verification differs from typical ASIC verification because the processor must correctly implement every ISA operation across a vast space of possible instruction combinations. SystemVerilog and UVM are mainstays of ASIC verification. UVM is a good framework for constrained-random instruction generation, but it has limitations, notably in what its coverage numbers actually capture. Claiming 100% coverage for an "add" instruction rarely means all relevant operand and microarchitectural combinations are covered.
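To make the coverage point concrete, here is a minimal Python sketch of operand cross-coverage for an `add` instruction. The bin names, the 32-bit width, and the helper functions are illustrative assumptions, not part of any standard coverage model; the point is only that "the instruction was executed" and "the interesting operand combinations were executed" are different measurements.

```python
import itertools
import random

XLEN = 32                      # assumed register width
MASK = (1 << XLEN) - 1
SIGN_BIT = 1 << (XLEN - 1)

# Hypothetical corner-case bins for a single operand.
CLASSES = ["zero", "all_ones", "min_signed", "max_signed", "negative", "positive"]
ALL_BINS = set(itertools.product(CLASSES, CLASSES))


def operand_class(value):
    """Classify an operand into one coarse corner-case bin."""
    value &= MASK
    if value == 0:
        return "zero"
    if value == MASK:
        return "all_ones"          # -1 in two's complement
    if value == SIGN_BIT:
        return "min_signed"
    if value == SIGN_BIT - 1:
        return "max_signed"
    return "negative" if value & SIGN_BIT else "positive"


def add_cross_coverage(operand_pairs):
    """Return (fraction of (rs1, rs2) class bins hit, set of missed bins)."""
    hit = {(operand_class(a), operand_class(b)) for a, b in operand_pairs}
    return len(hit) / len(ALL_BINS), ALL_BINS - hit


# Purely random operands exercise "add" many times but rarely reach corner bins.
rng = random.Random(0)
random_pairs = [(rng.getrandbits(XLEN), rng.getrandbits(XLEN)) for _ in range(10_000)]
fraction, missed = add_cross_coverage(random_pairs)
print(f"random stimulus: {fraction:.0%} of operand bins hit, {len(missed)} missed")
```

Even this model only looks at operand values. Microarchitectural state such as forwarding paths or pipeline stalls would need further bins, which is where the coverage space grows quickly.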

Constrained-random generators can produce hundreds of thousands of instructions targeted to specific areas, but is that sufficient? Experience from traditional CPU vendors and observations in RISC-V cores indicate that simulation-based verification alone is inadequate. This motivates the use of additional techniques such as formal verification.
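As a rough illustration of what "constrained-random" means here, the sketch below generates a few RV32I register-register instructions with one constraint: source registers are biased toward recently written destinations so that read-after-write dependencies occur often. The `hazard_bias` parameter, the three-instruction window, and the function names are assumptions made for this example; production generators are far more elaborate.

```python
import random

OPCODE_OP = 0x33  # RV32I opcode for register-register ALU instructions

# mnemonic -> (funct3, funct7) for a small subset of RV32I R-type instructions
R_TYPE = {
    "add": (0x0, 0x00),
    "sub": (0x0, 0x20),
    "xor": (0x4, 0x00),
    "or": (0x6, 0x00),
    "and": (0x7, 0x00),
}


def encode_r(mnemonic, rd, rs1, rs2):
    """Encode an R-type instruction into its 32-bit word."""
    funct3, funct7 = R_TYPE[mnemonic]
    return (
        (funct7 << 25) | (rs2 << 20) | (rs1 << 15)
        | (funct3 << 12) | (rd << 7) | OPCODE_OP
    )


def generate(count, hazard_bias=0.7, seed=0):
    """Generate `count` instructions, biased toward back-to-back dependencies."""
    rng = random.Random(seed)
    recent = []    # destinations of the last few instructions (assumed window: 3)
    program = []
    for _ in range(count):
        mnemonic = rng.choice(sorted(R_TYPE))
        rd = rng.randrange(1, 32)          # never write x0
        if recent and rng.random() < hazard_bias:
            rs1 = rng.choice(recent)       # constraint: reuse a fresh result
        else:
            rs1 = rng.randrange(32)
        rs2 = rng.randrange(32)
        program.append((mnemonic, rd, rs1, rs2, encode_r(mnemonic, rd, rs1, rs2)))
        recent = (recent + [rd])[-3:]
    return program


for mnemonic, rd, rs1, rs2, word in generate(5):
    print(f"{word:08x}  {mnemonic} x{rd}, x{rs1}, x{rs2}")
```

A generator like this can emit any number of instructions, but the stimulus is only as good as its constraints: scenarios nobody thought to bias toward remain unlikely to appear.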

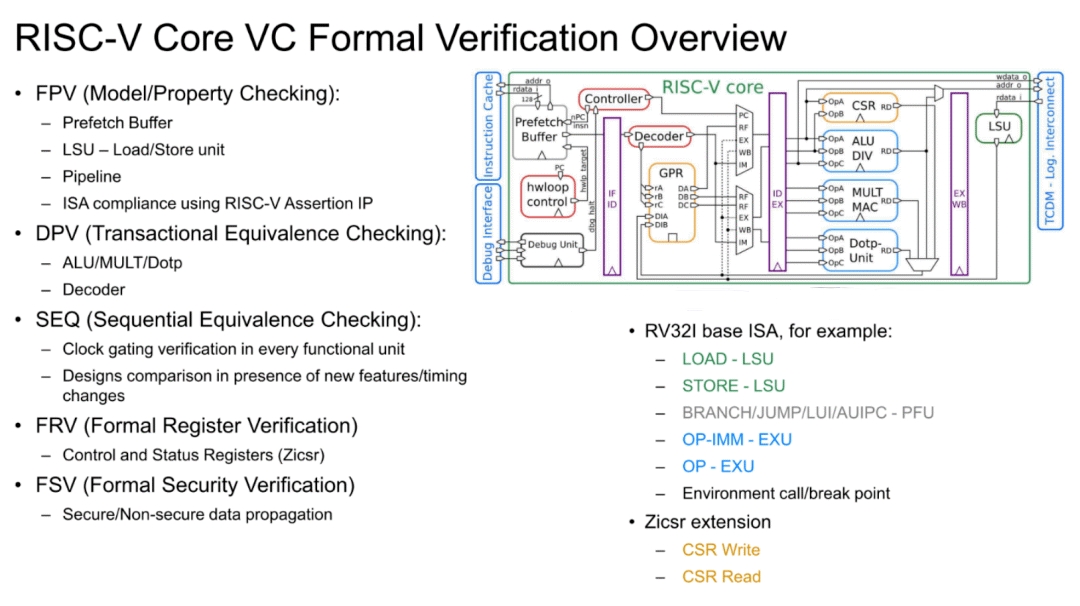

Processors are verified bottom-up, similar to system verification. Common subunits include branch predictors, parts of the pipeline, and memory systems like caches. These subunits are often a good fit for formal methods. Some teams validate components like prefetch buffers, ALUs, register models, and load-store units under constrained random tests and achieve decent coverage, but without formal verification extreme corner cases can be missed, leaving risk in the final stages of verification.

Formal Verification for Processor Submodules

After subunits are validated, they are integrated. Discovering an ALU bug only when booting Linux is painful and costly to debug, so a hybrid verification strategy is needed. Formal verification is valuable because it exhaustively explores input combinations against ISA-specified behavior, usually expressed as SystemVerilog assertions. Major processor vendors also maintain extensive verification suites, including UVM test platforms and test software. Simulation remains necessary to validate all modules of a large processor, to ensure correct SoC integration, and to run software on the device under test.
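The value of exhaustiveness can be shown with a toy example. The Python sketch below checks a 4-bit adder model against its specification for every input pair. This is brute-force enumeration, not formal verification: real formal tools prove SystemVerilog assertions symbolically and scale far beyond what enumeration can reach. The adder models and the injected bug are hypothetical, chosen only to show how a single-input corner case is caught when nothing is left unexplored.

```python
from itertools import product

WIDTH = 4                      # kept tiny so enumeration stays cheap
MASK = (1 << WIDTH) - 1


def ripple_carry_add(a, b, width=WIDTH):
    """Bit-level adder model standing in for the implementation under test."""
    carry, result = 0, 0
    for i in range(width):
        x, y = (a >> i) & 1, (b >> i) & 1
        result |= (x ^ y ^ carry) << i
        carry = (x & y) | (carry & (x ^ y))
    return result


def buggy_add(a, b, width=WIDTH):
    """Same adder with a hypothetical bug on exactly one operand pair."""
    if a == MASK and b == 1:
        return 1               # should wrap to 0
    return ripple_carry_add(a, b, width)


def check_exhaustively(impl):
    """Return every (a, b) where impl disagrees with the spec (a + b) mod 2^WIDTH."""
    return [
        (a, b)
        for a, b in product(range(1 << WIDTH), repeat=2)
        if impl(a, b) != (a + b) & MASK
    ]


print("correct adder counterexamples:", check_exhaustively(ripple_carry_add))
print("buggy adder counterexamples:  ", check_exhaustively(buggy_add))
```

A random test would need to draw that one pair out of 256 to see the failure; at 32 or 64 bits the odds become negligible, which is why subunits like ALUs are natural candidates for formal proof.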

Surprisingly, a processor core can still boot Linux with many latent bugs. Even so, booting a real Linux system exposes issues that other EDA checks or formal proofs do not find, such as many asynchronous timing anomalies.

Most teams validate by comparing implemented behavior against a reference model. Specifications are not precise in every scenario; for example, they may not specify what happens when six interrupts with equal priority occur simultaneously. Microarchitectural choices, such as which interrupt to handle and at which pipeline stage, vary between cores. In such cases, the reference model helps: when the reference and RTL differ, engineers must analyze whether the RTL behavior is acceptable.
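A reference-model comparison can be sketched as a step-by-step check of retired instructions. In the Python sketch below, the `Retire` record and both traces are invented for illustration; in practice the records would come from the RTL simulation and from an instruction-set simulator, in whatever trace format the team uses.

```python
from typing import NamedTuple


class Retire(NamedTuple):
    """One retired instruction as logged by a hypothetical tracer."""
    pc: int
    rd: int       # destination register index
    value: int    # value written to rd


def first_divergence(rtl_trace, ref_trace):
    """Return (index, rtl_record, ref_record) at the first mismatch, else None.

    Traces of different lengths are compared only over their common prefix.
    """
    for index, (rtl, ref) in enumerate(zip(rtl_trace, ref_trace)):
        if rtl != ref:
            return index, rtl, ref
    return None


# Made-up example traces: the third retirement writes a different value.
ref_trace = [Retire(0x80000000, 5, 1), Retire(0x80000004, 6, 2), Retire(0x80000008, 7, 3)]
rtl_trace = [Retire(0x80000000, 5, 1), Retire(0x80000004, 6, 2), Retire(0x80000008, 7, 4)]

mismatch = first_divergence(rtl_trace, ref_trace)
if mismatch is not None:
    index, rtl, ref = mismatch
    print(f"divergence at retirement {index}: pc={rtl.pc:#x} rtl={rtl.value} ref={ref.value}")
```

A reported divergence is a starting point for analysis rather than proof of an RTL bug: where the specification leaves behavior open, the difference may be a legitimate microarchitectural choice.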

One appeal of RISC-V is the ability to modify the processor for specific applications. The challenge is that every added feature multiplies verification effort and complexity. Custom instructions increase verification scope; teams must re-verify impacted functionality and ensure additions do not negatively affect the rest of the design, especially when changes touch pipeline control, ALU conflicts, cache behavior, or load-store paths.

When Is Verification Complete?

Verification is never truly complete. A common practical view is that verification is sufficient when the residual risk is manageable. Verification provides visibility into what has been tested and gives confidence, but it cannot guarantee the absence of defects. Simulation-based verification can produce extensive coverage reports that indicate a large portion of the design has been exercised and specific classes of bugs have been found.

However, because processors are so complex, coverage alone is insufficient. Processor coverage is not just a matter of instruction-level or decoder-level coverage; you must also consider instruction sequences and dynamic events inside the pipeline. With custom instructions in RISC-V, understanding the microarchitecture and how changes affect the SoC and workloads is essential. Hardware-assisted validation techniques (virtual prototypes, simulation acceleration, and hardware prototyping) are critical parts of the overall verification flow. These techniques help ensure microarchitectural decisions do not carry unintended power or performance costs.
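The gap between instruction-level and sequence-level coverage is easy to demonstrate. The Python sketch below measures coverage of ordered adjacent instruction pairs in a stream; the three-mnemonic set and the sample stream are assumptions for illustration, and real sequence coverage would also account for operands, distances between dependent instructions, and pipeline events.

```python
from itertools import product


def pair_coverage(stream, mnemonics):
    """Return (fraction of ordered adjacent pairs seen, set of missing pairs)."""
    wanted = set(product(mnemonics, repeat=2))
    seen = set(zip(stream, stream[1:])) & wanted
    return len(seen) / len(wanted), wanted - seen


mnemonics = ["add", "lw", "beq"]
stream = ["add", "lw", "add", "beq", "lw"]   # every mnemonic appears at least once

instruction_coverage = len(set(stream) & set(mnemonics)) / len(mnemonics)
sequence_coverage, missing = pair_coverage(stream, mnemonics)

print(f"instruction coverage: {instruction_coverage:.0%}")
print(f"pair coverage:        {sequence_coverage:.0%}")
print("pairs never exercised:", sorted(missing))
```

Here every instruction has been executed, yet a load followed directly by a branch never occurs, and that is exactly the kind of sequence that can stress hazard and forwarding logic.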

Safety-critical applications demand stricter verification. For products requiring specific certification levels, fault injection and diagnostic coverage analysis, as defined by standards such as ISO 26262 for functional safety, may be necessary. If faults are injected into critical functions, the design must include mechanisms to detect and handle them.
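As a simplified illustration of fault injection, the Python sketch below protects an 8-bit value with a single even-parity bit, flips bits in the stored word, and reports the fraction of injected faults the parity checker detects. The register width, the parity scheme, and the treatment of every injected fault as dangerous are assumptions for this example; an actual ISO 26262 analysis classifies faults and computes its metrics in much more detail.

```python
from itertools import combinations

WIDTH = 8   # assumed data width; the parity bit is stored at bit position WIDTH


def parity(word):
    """Return 1 if the word has an odd number of set bits, else 0."""
    return bin(word).count("1") & 1


def store(data):
    """Return the stored word: data bits plus one even-parity bit."""
    return data | (parity(data) << WIDTH)


def fault_detected(stored):
    """Parity checker: a valid stored word has an even number of set bits."""
    return parity(stored) == 1


def detected_fraction(data, flips_per_fault):
    """Inject every fault that flips `flips_per_fault` bits; return fraction detected."""
    good = store(data)
    faults = list(combinations(range(WIDTH + 1), flips_per_fault))
    detected = 0
    for bits in faults:
        corrupted = good
        for bit in bits:
            corrupted ^= 1 << bit
        detected += fault_detected(corrupted)
    return detected / len(faults)


print("single-bit faults detected:", detected_fraction(0xA5, 1))
print("double-bit faults detected:", detected_fraction(0xA5, 2))
```

Parity catches every single-bit flip and no double-bit flip, so whether this mechanism is adequate depends on which fault models the target certification level requires the design to cover.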

When exhaustive verification is impossible, heuristic methods become important. A practical recommendation is to use the chip from the user's perspective: run real software workloads for extended periods. Operational software testing is valuable even if it is difficult to quantify.

Security and Tooling Needs

The open nature of RISC-V brings potential security considerations. Transparency enables community review but also gives adversaries access to the same information. This heightens the need for strong security verification to ensure microarchitectures can withstand various attacks. Unlike some proprietary architectures that can keep security mechanisms confidential, RISC-V requires robust verification strategies to address security challenges.

RISC-V also needs more specialized verification tools. When processors were developed inside a handful of companies, test generators and formal tools were built in-house. With RISC-V, there is a growing market for architecture analysis, verification, and formal tools around the ISA. Over time, tools for RISC-V performance analysis and formal verification will emerge and mature.

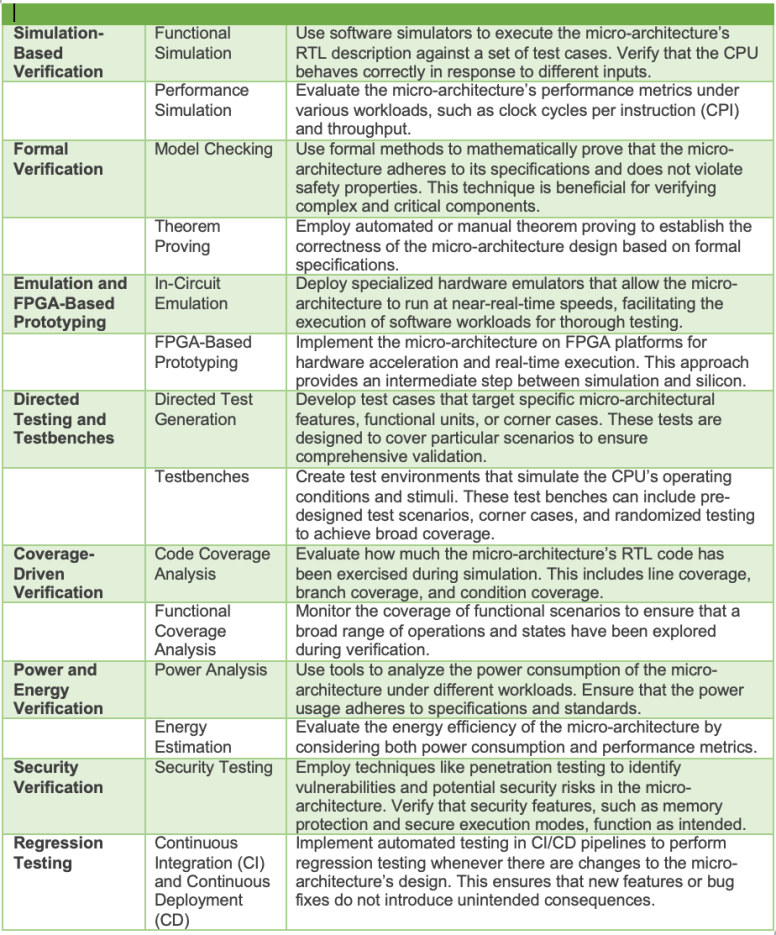

The figure above summarizes approaches to microarchitecture verification.

ALLPCB