Demand for tools that simplify generative AI development is growing rapidly. Retrieval-augmented generation (RAG) improves a model's accuracy by retrieving relevant data from external sources. Together with custom models, it lets developers tailor AI solutions to specific needs. Building such applications used to be complex, but new tools like NVIDIA AI Workbench make these tasks more accessible.

What Is NVIDIA AI Workbench?

NVIDIA AI Workbench is a free tool that enables developers to create, test, and prototype AI applications across various GPU systems, including laptops, workstations, data centers, and cloud environments. Part of the RTX AI Toolkit introduced at COMPUTEX, it simplifies complex technical tasks for both experts and beginners. Users can set up AI Workbench in minutes on local or remote machines, start new projects, or clone examples from GitHub. Integration with GitHub or GitLab facilitates collaboration and project sharing.

Addressing AI Development Challenges

AI development often involves complex manual processes, such as configuring GPUs, updating drivers, and resolving version incompatibilities. Reproducing a project on another system can mean repeating the same setup work. It can also fragment data or cause version-control problems that hinder collaboration. Portability suffers further when credentials and secrets must be moved by hand, or when environments, data, models, and file locations must be adjusted for each machine.

AI Workbench addresses these issues by offering:

- Simplified setup for GPU-accelerated environments, accessible to users with limited technical expertise.

- Seamless integration with GitHub and GitLab for improved collaboration.

- Consistent performance across local workstations and PCs, data centers, and cloud environments, making it easy to scale.

Streamlining RAG-Based Applications

NVIDIA provides example projects to help users get started with AI Workbench. For instance, the Hybrid RAG Workbench project lets users run a custom, text-based RAG web application over their own documents on local or remote systems. Each project runs in a container that bundles all the components it needs. The Hybrid RAG example pairs a Gradio chatbot frontend on the host with a containerized RAG server backend. The backend handles user requests and manages the vector database and the large language models (LLMs).
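The following minimal Python sketch makes the retrieval step concrete. It shows what a RAG backend does with a question: it scores stored document chunks against the query, keeps the top k, and prepends them to the prompt. It is not the Hybrid RAG project's code. The bag-of-words `embed` function, the in-memory `INDEX` list standing in for a vector database, and the sample `DOCUMENTS` are hypothetical simplifications. A real server would use an embedding model and a vector store.

```python
import math
import re
from collections import Counter

# Hypothetical document chunks standing in for a user's uploaded files.
DOCUMENTS = [
    "AI Workbench runs each project inside a container.",
    "The Gradio frontend sends user questions to the RAG server.",
    "A vector database stores embeddings of document chunks.",
]


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real RAG server would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Stand-in for a vector database: precomputed (embedding, text) pairs, searched by brute force.
INDEX = [(embed(doc), doc) for doc in DOCUMENTS]


def retrieve(query: str, k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (ties keep corpus order)."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda pair: cosine(q, pair[0]), reverse=True)
    return [text for _, text in ranked[:k]]


def build_prompt(query: str, k: int = 2) -> str:
    """Prepend the retrieved snippets as context before the user's question."""
    context = "\n".join(f"- {snippet}" for snippet in retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("What stores document embeddings?"))
```

A UI can display the snippets returned by `retrieve` to give the retrieval transparency described below.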

The project supports multiple LLMs and offers flexible inference options. Models can run locally on a Hugging Face Text Generation Inference (TGI) server, through NVIDIA inference endpoints such as the NVIDIA API Catalog, or on self-hosted microservices such as NVIDIA NIM. It also provides:

- Performance metrics, including retrieval time, time to first token, and token velocity for RAG and non-RAG queries.

- Retrieval transparency, showing the semantically relevant text snippets that are fed into the LLM to improve the relevance of its responses.

- Customizable responses through parameters like maximum token count, temperature, and frequency penalty.
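A toy decoding loop can illustrate the response parameters and metrics listed above. This is a hypothetical sketch, not the project's implementation. The six-token `VOCAB` and fixed `BASE_LOGITS` stand in for a real LLM. The frequency penalty follows the common convention of subtracting `penalty × count` from a token's logit. "Token velocity" is assumed here to mean generated tokens per second. Time to first token is measured from the start of generation, so it excludes retrieval time.

```python
import math
import random
import time
from collections import Counter

# Hypothetical tiny vocabulary and fixed scores standing in for an LLM's logits.
VOCAB = ["the", "model", "retrieves", "context", "answers", "<eos>"]
BASE_LOGITS = [2.0, 1.5, 1.0, 1.0, 0.8, 0.1]


def sample_next(logits, counts, temperature, frequency_penalty, rng):
    """Pick one token id after applying a frequency penalty and temperature."""
    # Frequency penalty: lower each logit by penalty * (times that token was already generated).
    adjusted = [logit - frequency_penalty * counts[i] for i, logit in enumerate(logits)]
    if temperature == 0:
        # Greedy decoding: take the highest-scoring token (first one on ties).
        return max(range(len(adjusted)), key=adjusted.__getitem__)
    scaled = [logit / temperature for logit in adjusted]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # numerically stable softmax weights
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


def generate(max_tokens=8, temperature=0.7, frequency_penalty=0.5, seed=0):
    """Generate up to max_tokens tokens and report time to first token and tokens per second."""
    rng = random.Random(seed)
    counts = Counter()
    tokens = []
    start = time.perf_counter()
    first_token_at = None
    for _ in range(max_tokens):  # max_tokens caps the response length
        idx = sample_next(BASE_LOGITS, counts, temperature, frequency_penalty, rng)
        if first_token_at is None:
            first_token_at = time.perf_counter()
        if VOCAB[idx] == "<eos>":
            break
        counts[idx] += 1
        tokens.append(VOCAB[idx])
    end = time.perf_counter()
    elapsed = end - start
    metrics = {
        "time_to_first_token_s": (first_token_at or end) - start,
        "tokens_per_s": len(tokens) / elapsed if elapsed > 0 else float("inf"),
    }
    return tokens, metrics


tokens, metrics = generate(max_tokens=8, temperature=0.7, frequency_penalty=0.5)
print(" ".join(tokens), metrics)
```

Setting `temperature=0` makes decoding greedy, which gives reproducible output. Raising `frequency_penalty` makes already-generated tokens less likely to repeat.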

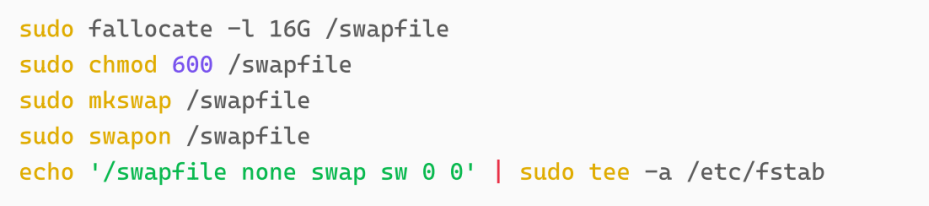

Users can install AI Workbench locally and clone the Hybrid RAG Workbench project from GitHub to their systems.

Model Customization and Deployment

AI Workbench also supports fine-tuning, which adapts a model to a specific use case such as style transfer or changing the model's behavior. The Llama-factory AI Workbench project provides QLoRA, a fine-tuning method that reduces memory requirements, for a variety of models. It also offers model quantization through a simple graphical user interface. Developers can use public or proprietary datasets to meet their application's requirements.
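Back-of-the-envelope arithmetic shows why QLoRA saves memory. In QLoRA, the frozen base weights are stored in 4-bit precision and only small low-rank adapter matrices are trained. The sketch below assumes a hypothetical 7-billion-parameter model and a 4096 × 4096 projection with LoRA rank 16. It counts weight storage only and ignores activations, optimizer state, and quantization constants.

```python
GIB = 1024 ** 3  # bytes per gibibyte


def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Memory to hold the weights alone (no activations, optimizer state, or quantization constants)."""
    return num_params * bits_per_param / 8 / GIB


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight: B (d_out x r) plus A (r x d_in)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    n = 7e9  # hypothetical 7B-parameter model
    print(f"16-bit base weights: {weight_memory_gib(n, 16):.2f} GiB")  # 13.04 GiB
    print(f" 4-bit base weights: {weight_memory_gib(n, 4):.2f} GiB")   # 3.26 GiB

    d, r = 4096, 16  # hypothetical projection size and LoRA rank
    full = d * d
    adapter = lora_params(d, d, r)
    print(f"LoRA trains {adapter:,} of {full:,} parameters ({adapter / full:.2%})")  # 0.78%
```

Quantizing the frozen base cuts its footprint by a factor of four relative to 16-bit weights. The trainable adapter is under 1% of each adapted matrix.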

After fine-tuning, models can be quantized to enhance performance and reduce memory usage, then deployed to native Windows applications for local inference or to NVIDIA NIM for cloud inference.
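The simplest quantization scheme illustrates the idea: symmetric per-tensor absmax quantization to int8. Each weight is divided by one shared scale and rounded to an integer in [-127, 127]. This is a generic illustration with hypothetical function names, not necessarily the method the Llama-factory project or NIM uses. Practical LLM quantization typically uses finer-grained (per-channel or per-group) scales and often 4-bit formats.

```python
def quantize_absmax_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map weights in [-max|w|, max|w|] onto integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp as a guard against floating-point rounding just past the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.1]
q, scale = quantize_absmax_int8(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 10]
print(max(abs(a - b) for a, b in zip(weights, restored)))  # rounding error, at most scale / 2
```

Storing int8 codes instead of 16-bit floats halves weight memory. The cost is a rounding error of at most half a scale step per weight.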

Hybrid Design for Flexible AI Tasks

The Hybrid RAG Workbench project supports a hybrid design, allowing local execution on NVIDIA RTX workstations or GeForce RTX PCs, as well as scaling to remote cloud servers or data centers. Users can run all Workbench projects on their preferred systems without significant setup overhead, making AI development more accessible and flexible.
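A small placement policy can illustrate the hybrid idea of running the same project wherever enough GPU capacity is available. This example is purely hypothetical. AI Workbench lets users choose where a project runs. The `Target` class, its memory figures, and the prefer-local, best-fit rule below are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    """A hypothetical machine a project could run on."""
    name: str
    kind: str  # "local" (RTX PC or workstation) or "remote" (cloud or data center)
    free_vram_gib: float


def pick_target(required_gib: float, targets: list[Target]) -> Target:
    """Prefer local machines that fit, then remote ones; within a group, pick the smallest that fits."""
    fitting = [t for t in targets if t.free_vram_gib >= required_gib]
    if not fitting:
        raise ValueError(f"no target has {required_gib:.1f} GiB of free GPU memory")
    # False sorts before True, so local targets win; then the least spare memory wins (best fit).
    return min(fitting, key=lambda t: (t.kind != "local", t.free_vram_gib))


targets = [
    Target("geforce-rtx-pc", "local", 12.0),
    Target("rtx-workstation", "local", 48.0),
    Target("cloud-server", "remote", 80.0),
]
for need in (4.5, 20.0, 60.0):
    print(need, "->", pick_target(need, targets).name)
```

Preferring local machines keeps small workloads on the desk. Larger ones fall through to remote capacity without any change to the project itself.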

ALLPCB