Introduction

Driven by advances in deep learning, machine vision continues to expand the capabilities of automation and defect inspection. Integrating deep learning into embedded systems can be transformative for applications such as robotics, autonomous vehicles, and industrial processes. From autonomous actions in smart doorbells to complex drone-based inspections, advanced vision methods can enable functions that were previously impractical. However, this integration raises challenges in latency, energy efficiency, security, and privacy. Addressing them calls for careful selection of algorithms, adaptation of models for embedded systems, and well-planned deployment strategies.

Machine Vision and Embedded Constraints

Improvements in machine vision and deep learning have delivered significant benefits for industrial and home automation, especially for systems that must observe, interpret, and respond to their environment. Because operating environments for intelligent devices and embedded systems are often complex and variable, deep learning methods are increasingly necessary. Enhanced on-device intelligence supports autonomous tasks such as delivery, grounds maintenance, smart door and access control, vacuuming, manufacturing, and warehouse packing. Many of these applications also involve monitoring and defect detection.

For tasks such as inspecting pipelines, power lines, signal towers, and other assets using drones or small autonomous vehicles, adding deep-learning-based vision can improve robustness and efficiency. With sufficient data, these models typically generalize better across varying conditions than traditional vision methods.

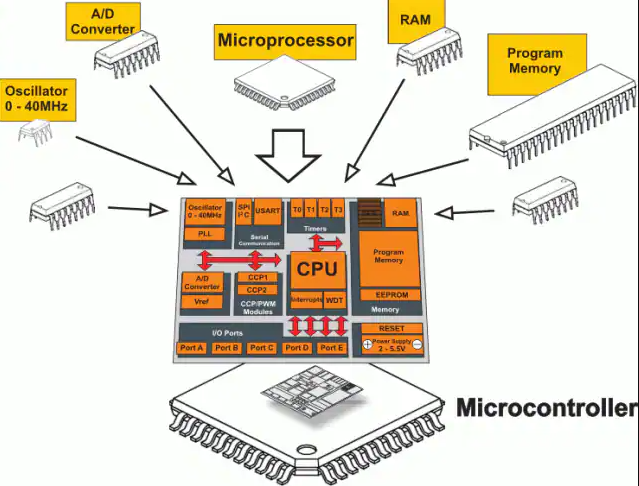

Bringing deep learning into machine vision introduces significant computational and hardware requirements that affect cost and feasibility. Because many applications require real-time detection, classification, and response, sending data over a network for remote inference is often impractical within tight time budgets. Consequently, deployed hardware must support low-latency inference. Power efficiency is also critical for battery-powered autonomous platforms such as drones or mobile inspectors: longer runtime on a charge improves operational efficiency. Since deep learning workloads are computationally intensive, optimizing models to run efficiently on the chosen hardware is essential.
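To make the time budget concrete, the sketch below checks whether measured inference latency fits within the per-frame interval implied by a target frame rate. It is a minimal illustration, not a benchmark harness: `infer` stands in for whatever inference call the chosen runtime provides, and the function names, the 95th-percentile criterion, and the run counts are assumptions made for this example.

```python
import math
import time


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def measure_latencies_ms(infer, frame, runs: int = 50, warmup: int = 5) -> list[float]:
    """Time repeated calls to `infer(frame)`; warm-up calls are not recorded."""
    for _ in range(warmup):
        infer(frame)
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def meets_budget(latencies_ms: list[float], fps: float, pct: float = 95.0) -> bool:
    """True if the chosen latency percentile fits inside the per-frame budget."""
    return percentile(latencies_ms, pct) <= frame_budget_ms(fps)
```

At 25 frames per second the budget is 40 ms per frame, so a pipeline whose 95th-percentile latency is 41 ms would miss it even if its average latency looks comfortable.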

Embedded platforms such as evaluation kits based on processors with integrated AI acceleration are designed to address these constraints, enabling on-device inference without excessive power consumption. Other important requirements include security and privacy: many deployments store data locally rather than sending it to the cloud, or require end-to-end encryption for any network traffic.

When real-time inference is required on robots or vehicles, integrating high-quality, low-latency sensor data for vision pipelines is also essential, and this need should influence hardware selection.

Algorithm Selection for Embedded Systems

The choice of deep learning algorithms for vision on embedded systems is driven by the constraints described above. Designers must pay close attention to model size on disk, memory usage, and inference latency. For many object-detection scenarios (for example, defects, people, vehicles, animals), there are pretrained "foundation" models that have been exposed to large, diverse datasets and can be adapted to downstream tasks.

However, if the target task differs substantially from the dataset the foundation model was trained on, rigorous performance testing on representative data is necessary. For example, detecting wire flaws or internal pipeline defects involves different object classes and imaging conditions than typical people or vehicle detection.
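One common way to run such a test on a detector is to compare predicted boxes with hand-labeled boxes from the target domain using intersection over union (IoU). The following is a minimal single-class sketch; the box layout `(x1, y1, x2, y2)`, the 0.5 IoU threshold, and the function names are assumptions for illustration, and a full evaluation would normally also sweep score thresholds and report per-class metrics.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching of predicted boxes to ground-truth boxes.

    predictions: list of (box, score); ground_truth: list of boxes.
    Returns (precision, recall) for a single class.
    """
    unmatched = list(ground_truth)
    true_pos = 0
    # Highest-confidence predictions get first pick of ground-truth boxes.
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best = max(unmatched, key=lambda gt: iou(box, gt), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            unmatched.remove(best)  # each labeled box can be matched only once
            true_pos += 1
    precision = true_pos / len(predictions) if predictions else 0.0
    recall = true_pos / len(ground_truth) if ground_truth else 0.0
    return precision, recall
```

Low recall on field images, even when precision is high, suggests the pretrained model is missing the defects that matter and needs adaptation before deployment.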

Designers should ensure that pretrained architectures are suitable for embedded deployment. Specifically, choose compact model architectures that can run on-device, ideally models sized in megabytes rather than gigabytes, and that load and run without excessive overhead. Techniques such as pruning and quantization can reduce model size and computational complexity while largely preserving accuracy, although the accuracy impact should be verified on the target data.
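As a rough illustration of what these two techniques do to a model's weights, the sketch below applies magnitude pruning and 8-bit affine quantization to a plain list of floats. It is a teaching example under simplifying assumptions (one scale for the whole tensor, no calibration data, hypothetical function names); production toolchains perform these steps with their own, more sophisticated implementations.

```python
def quantize_int8(weights):
    """Affine quantization of floats to int8: q = round(w / scale) + zero_point."""
    # Include 0.0 in the range so that zero is represented exactly.
    w_min, w_max = min(min(weights), 0.0), max(max(weights), 0.0)
    span = w_max - w_min
    scale = span / 255.0 if span > 0 else 1.0  # guard against all-zero weights
    zero_point = round(-128 - w_min / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(v - zero_point) * scale for v in q]


def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]
```

Storing each weight as an 8-bit integer instead of a 32-bit float cuts weight storage roughly fourfold, at the cost of a rounding error of about half a quantization step per weight.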

It is also important to use relatively standard model formats (for example, TensorFlow Lite) to simplify conversion to device-specific formats. The wide availability of open models reduces the need to design and train models from scratch.

Adapting Models to the Target Application

Off-the-shelf models may underperform for a specific application because of differences between the original training data distribution (sensor type, imaging conditions) and the target deployment data. A standard object-detection model may not include classes for certain defects or rare objects of interest. In such cases, designers should fine-tune or retrain models to improve accuracy for the target classes. This requires labeled data that reflects the expected field conditions, collected from domain assets or curated to match operational scenarios.
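Before collecting more data, it can help to check which target classes the pretrained model lacks and which have too few labeled examples. The sketch below assumes annotations are dictionaries with a `label` key; the function names, class names, and minimum count are hypothetical, and real labeling tools export richer formats.

```python
from collections import Counter


def missing_classes(model_labels, target_classes):
    """Target classes the pretrained model cannot predict at all."""
    known = set(model_labels)
    return [c for c in target_classes if c not in known]


def underrepresented(annotations, target_classes, min_examples):
    """Target classes with fewer labeled boxes than `min_examples`."""
    counts = Counter(a["label"] for a in annotations)
    return {c: counts[c] for c in target_classes if counts[c] < min_examples}


# Hypothetical example: a general-purpose detector and a pipeline-inspection task.
model_labels = ["person", "car", "dog"]
target_classes = ["person", "crack", "corrosion"]
annotations = [{"label": "crack"}, {"label": "crack"}, {"label": "person"}]

print(missing_classes(model_labels, target_classes))
print(underrepresented(annotations, target_classes, min_examples=2))
```

Classes reported by either check are candidates for additional data collection and labeling before fine-tuning.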

Using a suitable labeling platform helps streamline annotation and keep outputs in deep-learning-compatible formats. Once the updated model achieves the required performance, it can proceed to deployment.

Deploying Models on Embedded Devices

Because embedded ecosystems support multiple model formats, choosing hardware with integrated software tooling simplifies model porting. The first step is to implement the data-preprocessing pipeline required by the model, or to use an embedded platform that includes standard vision preprocessing primitives. Typical preprocessing includes converting raw pixel streams to RGB, resizing or cropping frames, and other image normalization steps. Postprocessing after inference may include drawing bounding boxes on a display or aggregating detections for downstream logic.
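The preprocessing and postprocessing steps named above can be sketched in plain Python on nested lists. This is for illustration only: an embedded deployment would normally use the platform's optimized primitives, and the function names, the normalization constants, and the detection dictionary layout (`label`, `score`) are assumptions rather than any particular library's API.

```python
def center_crop(image, size):
    """Crop a square of side `size` from the center of an H x W x C image."""
    h, w = len(image), len(image[0])
    top, left = (h - size) // 2, (w - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbor resize of an H x W x C image."""
    h, w = len(image), len(image[0])
    return [[image[y * h // out_h][x * w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def normalize(image, mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)):
    """Scale 8-bit channels to [0, 1], then standardize each channel."""
    return [[[(v / 255.0 - m) / s for v, m, s in zip(px, mean, std)]
             for px in row]
            for row in image]


def count_detections(detections, min_score=0.5):
    """Postprocessing: keep confident detections and count them per class."""
    counts = {}
    for det in detections:
        if det["score"] >= min_score:
            counts[det["label"]] = counts.get(det["label"], 0) + 1
    return counts
```

The mean and standard deviation must match whatever the model was trained with; the values here are placeholders.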

Next, convert the model into a format suitable for the target device to enable real-time inference. This often involves exporting to a standard exchange format such as ONNX, which facilitates hardware-specific optimizations. Model and application code are then compiled and packaged into a deployable binary for the target device; open-source compilers and toolchains can assist with this step. Because model porting involves multiple detailed substeps, selecting an embedded platform that bundles relevant software components can accelerate integration.

For example, some evaluation kits are delivered with machine learning software stacks that provide preprocessing pipelines and deployment tools to streamline model conversion and packaging. Once the device runs the inference engine, its outputs can be consumed by the target application, such as defect detection or automation control.

Conclusion

Integrating deep learning into embedded computer vision enables more capable and innovative applications than traditional methods. Potential benefits span home and industrial automation and defect inspection across domains. Achieving those benefits requires careful attention to latency, energy efficiency, security, and privacy, particularly when selecting hardware. Algorithm choice, model adaptation, and deployment are equally critical to delivering the desired application performance. When these factors are addressed, embedded vision systems can deliver a new level of efficiency and capability for on-device intelligence.

ALLPCB
