Introduction

This long article examines the ecosystem around AI chips. The topic is broad: it touches technical, commercial, and strategic dimensions, and many of its questions are still debated. The perspective here is direct and sometimes provocative; readers should weigh the arguments accordingly.

What I Mean by "Ecosystem"

"Ecosystem" is a broad term and easy to make vague. I will focus on widely recognized components that together form the de facto industry-standard system, for example:

- Deep learning frameworks such as PyTorch

- Operator programming models such as CUDA

- System-level languages like C and C++

- Algorithm and application-level languages like Python

- Build systems for C/C++ projects such as CMake

- Hardware commonly paired with AI workflows, e.g., NVIDIA GPUs

- Servers based on x86 processors from Intel

- GPU-to-GPU interconnects such as NVLink

- CPU-GPU connectivity like PCIe

These software, hardware, and protocol components combine to form the most standardized stack in the industry today. The stack has evolved since the birth of modern computing, and many components carry historical weight. No single organization owns the entire system; it is the result of long competition across the industry. However, winners in that competition gain influence over particular ecosystem niches.

Ecology of Niches and Influence

Different niches depend on each other: components in one niche are often applications of components in another. A niche's influence is determined by demand rather than platform ownership. For example, NVIDIA GPUs nominally play the role of a subordinate PCIe device in an Intel-defined "central" processor model, but during the AI demand surge NVIDIA has had far greater influence over solution form factors than Intel in that domain.

Although PyTorch is built on C++ and Python, changes to those languages themselves have little influence over deep learning. Influence also does not mean unlimited freedom: demand constrains how influential players can act. Intel retains broad autonomy over CPU-related features, virtualization, and many interconnect standards, but it lacks strong appeal in the AI domain.

Solution-First Thinking Is a Common Pitfall

Many companies treat end-to-end solution bundles as the primary method to gain competitiveness. In practice, a "solution" approach is typically how players that already have ecosystem dominance extend into other industries. It is the fruit of having ecosystem power, not the cause.

Startups often attempt to build full-stack solutions from chip to application, hoping software and hardware integration will create a new ecosystem. This approach mistakes cause and effect. Compatibility and affinity with existing standards are crucial. For example, CUDA extends C/C++ in a way that is highly compatible with existing C/C++ tooling, object file formats, calling conventions, and build processes. That minimal invasiveness made it easy to integrate CUDA into complex C/C++ projects.

New entrants must consider affinity not only with languages like C/C++, but also with CUDA itself. Which affinities matter depends on the intended entry point into the system. When NVIDIA designed CUDA, C/C++ and high-performance computing were natural choices. Today, Python has grown in importance at the application level, so affinity with Python and its ecosystem is another viable approach, but it requires careful design.

Why Building an Entire Stack Often Fails

When operator programming languages lack affinity, companies often try to compensate by building graph compilers, frameworks, inference engines, and even business-specific stacks. That only creates additional affinity problems and moves the original issue elsewhere instead of resolving it. The same happens at other layers: chip vendors bundle full systems, system vendors bundle cloud stacks, and boundaries expand until compatibility issues explode. The result is an independent, private stack that looks like an integrated solution but cannot compete against the de facto standardized system.

There are exceptions: extremely focused, high-volume markets can support a private stack. Cryptocurrency mining is a prime example: demand was single-purpose and massive, enabling custom ecosystems that captured significant share. But such private solutions rarely produce long-term ecosystem dominance.

Competing for Influence, Not Just Market Share

Real competition at the solution level is about winning influence inside the standardized ecosystem. Players who control niches use their base to gradually reshape the overall system in ways that favor them and hinder rivals. For example, Intel historically controlled the upgrade pace of PCIe bandwidth, creating bottlenecks for GPUs. Intel's introduction of CXL is also an attempt to rally allies against NVIDIA.

But only players who are already "on the bus" of the standard system can compete at that level. Building a private solution gives the illusion of being in the competition but does not grant the right to define the system's rules.

Learn from NVIDIA's Long Strategic Play

NVIDIA is an instructive case, not because copying its present success is straightforward, but because its three-decade arc shows how a nobody became a player in the system and later became a rule-maker for a sizable niche. The crucial lesson is strategic persistence across decades, not simply mimicking current tactics.

When NVIDIA began, the independent GPU niche was not an obvious or guaranteed success. Intel, as the incumbent, defined the rules of the game: the CPU as the "central" processor, with extensibility via PCIe. When demand for a device stabilized, Intel could integrate its functions into the CPU. Many PCIe devices have vanished this way. Competition is therefore twofold: among the players themselves, and between the players and the rule-maker.

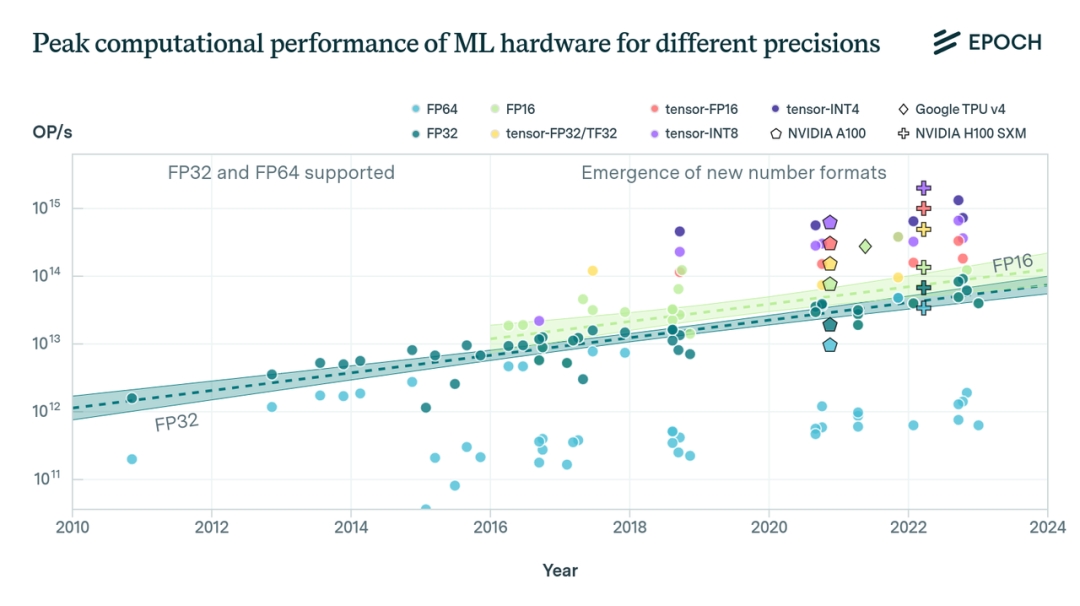

To survive, NVIDIA adopted an aggressive performance growth strategy: rather than matching Intel, it aimed to improve GPU performance faster than integrated graphics could follow. Jensen Huang set a cadence of improvement fast enough that integrated GPUs would always lag. NVIDIA pushed GPU performance and power aggressively, which drove rapid improvements in game graphics and made integrated GPUs appear inadequate. Over time, discrete GPUs became a de facto standard niche, and NVIDIA secured a strong position in it.

Why Replicating That Path Is Hard

Each instance of an underdog becoming a rule-maker is specific to the historical moment, the demand, and the strategic choices made. There is no generic, repeatable recipe. Every successful case redesigned the rules in a way that closed off opportunities for later entrants.

For those attempting to build AI chips now, the core idea to learn is not copying tactics but understanding the broader strategic thinking: create a trajectory of performance and ecosystem influence that outpaces incumbents, align with demand growth, and build developer affinity. Without that, GPGPU may remain the dominant approach and swallow the AI chip opportunity.

Three Levels to Clear for Ecosystem Leadership

Winning an ecosystem position generally requires clearing three escalating levels:

- Product usability and high development efficiency. Many AI chip efforts still fall short of the development efficiency that NVIDIA's combination of CUDA and GPGPU offers.

- Long-term product continuity. A chip must be the start of a multi-generation platform with decades of performance improvements and stable compatibility across many product iterations.

- Market demand and ecosystem affinity. Even great technical designs struggle if they diverge too far from established system shapes. Demand must catalyze the niche.

NVIDIA took several years to convert GPUs into general-purpose accelerators and to build the CUDA ecosystem. That allowed it to clear the first two levels and then align with the third when deep learning demand surged in 2012.

Where Opportunities in the Stack Still Exist

Competing head-on with CUDA in its own niche, as alternatives like ROCm do, is a fight inside an established niche, and it becomes difficult once the dominant ecosystem has consolidated. Higher in the stack, frameworks and tools offer other potential niches. Deep learning frameworks took shape around 2012 and consolidated over the rest of the decade; PyTorch now holds a dominant position. Room to compete in core frameworks is limited at this point, but layers above frameworks still contain opportunities.

Large model training and inference frameworks built on top of PyTorch are an example of early-stage niches. Many players are building components in this space right now. If the importance of a given layer rises while other layers diminish, that creates potential to shape the ecosystem from a new position.

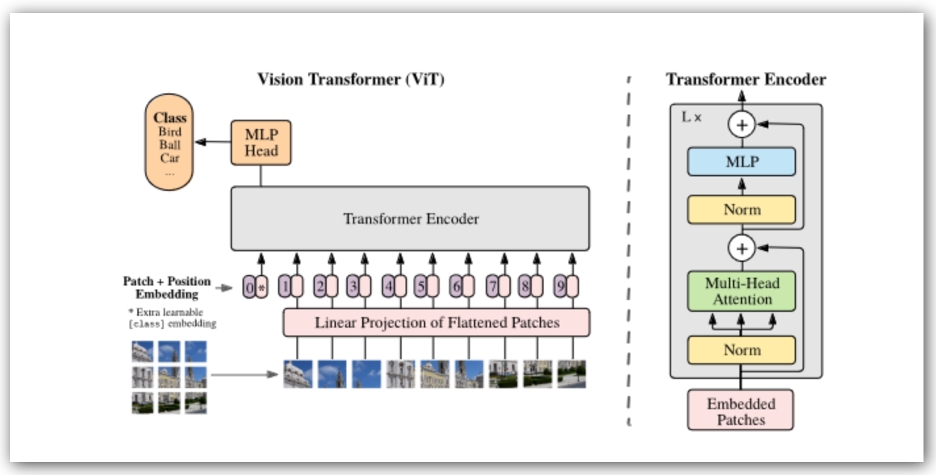

However, details matter. Frameworks primarily address graph representation and cross-device execution for algorithm researchers. They often satisfy high-frequency operator needs by shipping thousands of built-in operators, which does not fully isolate operator implementation from underlying systems. Attempts to fully separate hardware differences at the framework layer, like early TensorFlow efforts to detach GPU and TPU semantics, were painful and limited in effect.
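To make the point about built-in operators concrete, here is a minimal sketch in plain Python. It is a toy model, not how PyTorch or TensorFlow is actually implemented: the registry, the `register` decorator, `dispatch`, `missing_kernels`, and the device names are all hypothetical. It assumes only that a framework keeps one kernel per (operator, device) pair, which is enough to show why the framework layer does not isolate operator implementations from hardware.

```python
# Toy operator registry: every name here is hypothetical, not a real framework API.
from typing import Callable, Dict, List, Tuple

Kernel = Callable[[List[float], List[float]], List[float]]

# Kernels are registered per (operator, device) pair.
_REGISTRY: Dict[Tuple[str, str], Kernel] = {}


def register(op: str, device: str) -> Callable[[Kernel], Kernel]:
    """Decorator that records a kernel for one operator on one device."""
    def wrap(fn: Kernel) -> Kernel:
        _REGISTRY[(op, device)] = fn
        return fn
    return wrap


@register("add", "cpu")
def add_cpu(a, b):
    return [x + y for x, y in zip(a, b)]


@register("add", "gpu")
def add_gpu(a, b):
    # Stand-in for a device kernel; a real backend would launch device code here.
    return [x + y for x, y in zip(a, b)]


@register("mul", "cpu")
def mul_cpu(a, b):
    return [x * y for x, y in zip(a, b)]


def dispatch(op: str, device: str, a, b):
    """Look up the kernel for (op, device); fail if the backend lacks it."""
    try:
        kernel = _REGISTRY[(op, device)]
    except KeyError:
        raise NotImplementedError(f"no '{op}' kernel for device '{device}'") from None
    return kernel(a, b)


def missing_kernels(device: str) -> List[str]:
    """Operators that exist on some device but not on the given one."""
    all_ops = {op for op, _ in _REGISTRY}
    have = {op for op, dev in _REGISTRY if dev == device}
    return sorted(all_ops - have)
```

In this toy, `missing_kernels("new_chip")` lists every registered operator, because a new device starts with nothing. With thousands of built-in operators instead of two, that list is the porting work a new hardware backend inherits, even though the graph-level API above it stays the same.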

Developer-Centric Ecosystem Strategy

Ecosystem capture relies on developers, not business customers. The goal is to make developers and their downstream components "have to use" your platform. That rarely happens because a single component is merely "good." Rather, a multi-layered stack of "good" developer-facing tools creates stronger lock-in: if many valuable tools depend on a lower-level component, users are forced to use that component.
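The lock-in argument can be illustrated with a small dependency graph. The sketch below is only an illustration under stated assumptions: the component names (`operator_platform`, `framework_x`, and so on) are invented placeholders, and the graph is made up. It computes which components cannot run without a given lower-level component once indirect dependencies are counted.

```python
# Toy dependency graph; all component names are hypothetical placeholders.
from typing import Dict, List, Set

DEPENDS_ON: Dict[str, List[str]] = {
    "app_a": ["framework_x"],
    "app_b": ["framework_y"],
    "app_c": ["inference_tool"],
    "framework_x": ["operator_platform"],
    "framework_y": ["operator_platform"],
    "inference_tool": ["framework_x"],
    "operator_platform": [],
    "rival_platform": [],
}


def transitive_deps(node: str, graph: Dict[str, List[str]]) -> Set[str]:
    """Everything `node` needs, directly or indirectly (iterative DFS)."""
    seen: Set[str] = set()
    stack = list(graph.get(node, []))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(graph.get(dep, []))
    return seen


def dependents(target: str, graph: Dict[str, List[str]]) -> List[str]:
    """Components that cannot run without `target`."""
    return sorted(n for n in graph if target in transitive_deps(n, graph))


print(dependents("operator_platform", DEPENDS_ON))
print(dependents("rival_platform", DEPENDS_ON))
```

In this made-up graph no application names `operator_platform` directly, yet all three end up depending on it through the frameworks and tools above it, while `rival_platform` has no dependents at all. That indirect dependence is the "have to use" effect described above.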

NVIDIA focused on serving framework and tool developers: thorough documentation, detailed debugging APIs, developer outreach, and even hardware distribution for experimenters. Crucially, product performance made the developer case compelling: GPUs delivered massive speedups for deep learning workloads, so framework authors naturally prioritized GPU support. NVIDIA then supported those developers intensively, accelerating adoption of the CUDA ecosystem and creating a large base of dependent frameworks and tools.

That approach is a "subset" strategy: aim to be an indispensable subset that every broader solution uses. Many companies fall into the "superset" trap: they try to build a product that does everything their competitors do, plus unique features. NVIDIA, in contrast, focused on being the unavoidable subset and enabled many different frameworks and solutions to adopt CUDA.

When to Build Software and When to Enable Others

NVIDIA did build some software, but it often chose to open-source that software or to create tools that others could integrate, furthering the "unavoidable subset" effect. When NVIDIA did intervene directly, it tended to do so where the industry was not using GPUs effectively and NVIDIA had to drive behavior change. Ultimately, the "solution" approach is usually the late-stage monetization method after ecosystem dominance is in place.

Reconstructing the System from a Position of Power

Over decades NVIDIA has acquired the right to reshape parts of the system. Even so, changes remain incremental because ecosystem inertia persists. NVIDIA uses AI as an entry point to propose a new compute model for modern systems, seeking to make the underlying architecture more suitable for AI workloads. That is the privilege of the dominant player: to nudge the base platform toward a new equilibrium.

The lesson for others is not to imitate recent visible successes, but to study the long-term strategic patience, the alignment with demand, and the multi-decade execution that created the present power structure. Opportunities that look natural today were often forged under adverse conditions by those who reshaped the rules.

Closing Thoughts

Ecosystem competition is brutal and path-dependent. What appears natural now was built by players who seized historical conditions, made strategic bets, and executed relentlessly. For anyone attempting to enter the AI chip space, the challenge is to identify a realistic entry point that can build developer affinity, sustain multi-generation advancement, and align with rising demand. Shortcuts exist for niche, single-purpose markets, but they rarely lead to long-term ecosystem dominance.

ALLPCB
