Overview

SLAM stands for simultaneous localization and mapping. It is a technique that enables an autonomous mobile robot to estimate its own pose while building a map of an unknown environment.

The basic idea of SLAM is to gather environmental information with sensors mounted on the robot, then fuse those measurements to build a model of the environment and estimate the robot's pose within it. The result is a map and a pose estimate that are consistent with each other.

SLAM is used in areas such as autonomous driving, drones, robotics, and virtual reality. Development has been shifting from basic localization and map construction toward richer scene understanding.

Four elements of SLAM algorithms

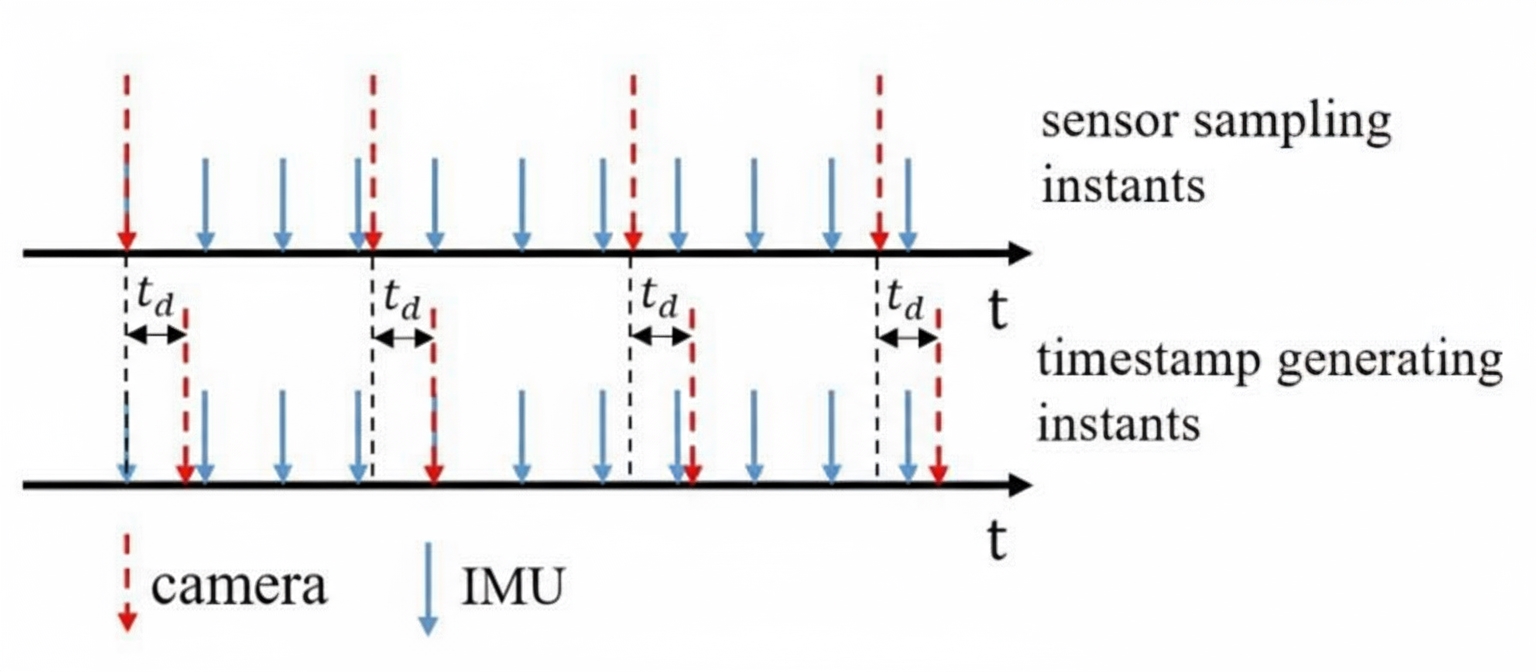

- Sensors: SLAM systems typically combine several sensors to acquire environmental data, for example LiDAR, cameras, and inertial measurement units (IMUs). These sensors provide information such as distances, angles, and images.

- Motion model: The motion model describes how the robot moves in the environment. By modeling the robot motion, the system can predict pose changes based on known control inputs.

- Feature extraction and matching: SLAM exploits visual or geometric features (corners, edges, etc.) from sensor data for mapping and localization. Feature extraction pulls key information from images or point clouds, while feature matching associates features in the current observation with features in the existing map.

- Data association and filtering: Data association links sensor observations to map elements to determine the robot pose and map updates. Filtering refers to estimation methods for the robot state; common filters include Kalman filters and particle filters.
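The interplay between the motion model and the filter can be sketched as a predict/update cycle. The example below is a minimal illustration, not a full SLAM filter: it assumes a unicycle-style motion model for a planar robot and a scalar (1D) Kalman filter with Gaussian noise. The function names (`predict_pose`, `kalman_predict`, `kalman_update`) and the noise variances are hypothetical choices made for this sketch.

```python
import math


def predict_pose(x, y, theta, v, omega, dt):
    """Assumed unicycle motion model: advance the pose (x, y, theta)
    given linear velocity v and angular velocity omega over dt seconds."""
    x_new = x + v * math.cos(theta) * dt
    y_new = y + v * math.sin(theta) * dt
    # Wrap the heading into [-pi, pi) so angles stay comparable.
    theta_new = (theta + omega * dt + math.pi) % (2 * math.pi) - math.pi
    return x_new, y_new, theta_new


def kalman_predict(mean, var, u, motion_var):
    """Prediction step of a scalar Kalman filter: shift the estimate by
    the commanded displacement u and grow the uncertainty."""
    return mean + u, var + motion_var


def kalman_update(mean, var, z, meas_var):
    """Update step of a scalar Kalman filter: blend the prediction with
    the measurement z, weighted by their variances."""
    gain = var / (var + meas_var)
    return mean + gain * (z - mean), (1.0 - gain) * var


if __name__ == "__main__":
    # One cycle: the robot is commanded to move 1.0 m, then a range
    # sensor reports a position of 1.2 m (illustrative numbers only).
    mean, var = kalman_predict(0.0, 1.0, u=1.0, motion_var=0.5)
    mean, var = kalman_update(mean, var, z=1.2, meas_var=0.5)
    print(mean, var)
```

Prediction always increases the variance and the update always decreases it, which is why the two steps are alternated. A practical SLAM system would track the full pose and the map jointly, for example with an extended Kalman filter or a particle filter.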

Common sensors in SLAM

LiDAR: LiDAR is an active sensor that emits laser beams and records the return time and intensity of the reflected light; the return time gives the distance to the reflecting surface. Because it supplies its own illumination, LiDAR is less affected by ambient lighting than a camera and is widely used both indoors and outdoors. LiDAR can provide high-accuracy, high-density 3D point clouds, which makes it common in SLAM systems for robotic navigation and autonomous vehicles.
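As a rough illustration of how a return time becomes a distance, the sketch below assumes a pulsed time-of-flight LiDAR, where the range is half the round-trip time multiplied by the speed of light. It also converts a planar scan of ranges into Cartesian points in the sensor frame. The function names and the scan parameters (`angle_min`, `angle_increment`) are hypothetical, chosen for this example only.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_range(round_trip_s):
    """Convert a round-trip time in seconds to a one-way range in metres.
    The pulse travels to the target and back, hence the division by 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def scan_to_points(ranges, angle_min, angle_increment):
    """Convert a planar scan (one range per beam, evenly spaced angles in
    radians) into (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


if __name__ == "__main__":
    # A 100 ns round trip corresponds to a target roughly 15 m away.
    print(tof_to_range(100e-9))
    # Two beams: one straight ahead, one 90 degrees to the left.
    print(scan_to_points([1.0, 2.0], 0.0, math.pi / 2))
```

Real sensors may use other ranging principles and report ranges directly, so the time-of-flight conversion is usually handled inside the device.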

Camera: Cameras are passive sensors that capture optical images. They are widely used in computer vision and image processing, providing rich texture and shape information. In SLAM, cameras are typically used to extract feature points and establish correspondences for mapping and localization. Cameras are lower cost than LiDAR but are sensitive to lighting conditions and typically require calibration and noise-reduction processing.
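Establishing correspondences between feature points can be sketched as nearest-neighbour matching of feature descriptors. The example below is a minimal sketch under several assumptions: descriptors are plain lists of floats, distance is Euclidean, and ambiguous matches are rejected with a ratio test (a commonly used heuristic; the 0.75 threshold is an assumed default). The function name `match_features` is hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_features(desc_a, desc_b, ratio=0.75):
    """Return (i, j) index pairs where desc_b[j] is the nearest neighbour
    of desc_a[i] and is clearly closer than the second-nearest one."""
    matches = []
    for i, d in enumerate(desc_a):
        # Sort candidate distances so the two closest come first.
        dists = sorted((euclidean(d, e), j) for j, e in enumerate(desc_b))
        if len(dists) < 2:
            continue  # the ratio test needs at least two candidates
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches


if __name__ == "__main__":
    current = [[0.0, 0.0], [10.0, 10.0]]
    in_map = [[10.1, 9.9], [0.1, 0.0], [5.0, 5.0]]
    print(match_features(current, in_map))
```

The brute-force search is quadratic in the number of features, which is acceptable for a sketch; larger systems generally rely on approximate nearest-neighbour indexes and geometric verification to discard false matches.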

ALLPCB
