Overview

OpenAI has released Sora, a video model that generates videos of up to one minute, at resolutions up to 1920x1080, from text prompts. It outperforms earlier state-of-the-art video diffusion models, which were limited to short, low-resolution outputs. OpenAI also published a short technical report that outlines Sora's architecture and applications without revealing detailed training or algorithmic specifics. This article summarizes the report and highlights technical points of interest.

LDM and DiT: The Foundation

Sora combines a latent diffusion model (LDM) with a Diffusion Transformer (DiT). A brief recap:

- Latent diffusion models (LDM) mitigate the high compute needed for high-resolution image diffusion by training an autoencoder that compresses images into a low-resolution latent representation. The diffusion model is then trained on these latents rather than on full-resolution pixels.

- The denoising network in a traditional LDM is a U-Net. In many deep learning tasks, however, Transformers scale better with parameter count: increasing model size tends to keep improving performance. DiT therefore replaces the U-Net denoiser with a Transformer-based denoiser.

- To apply Transformer architectures to images, images are typically split into patches and each patch is treated as a sequence token. Positional encodings are added to preserve spatial ordering.

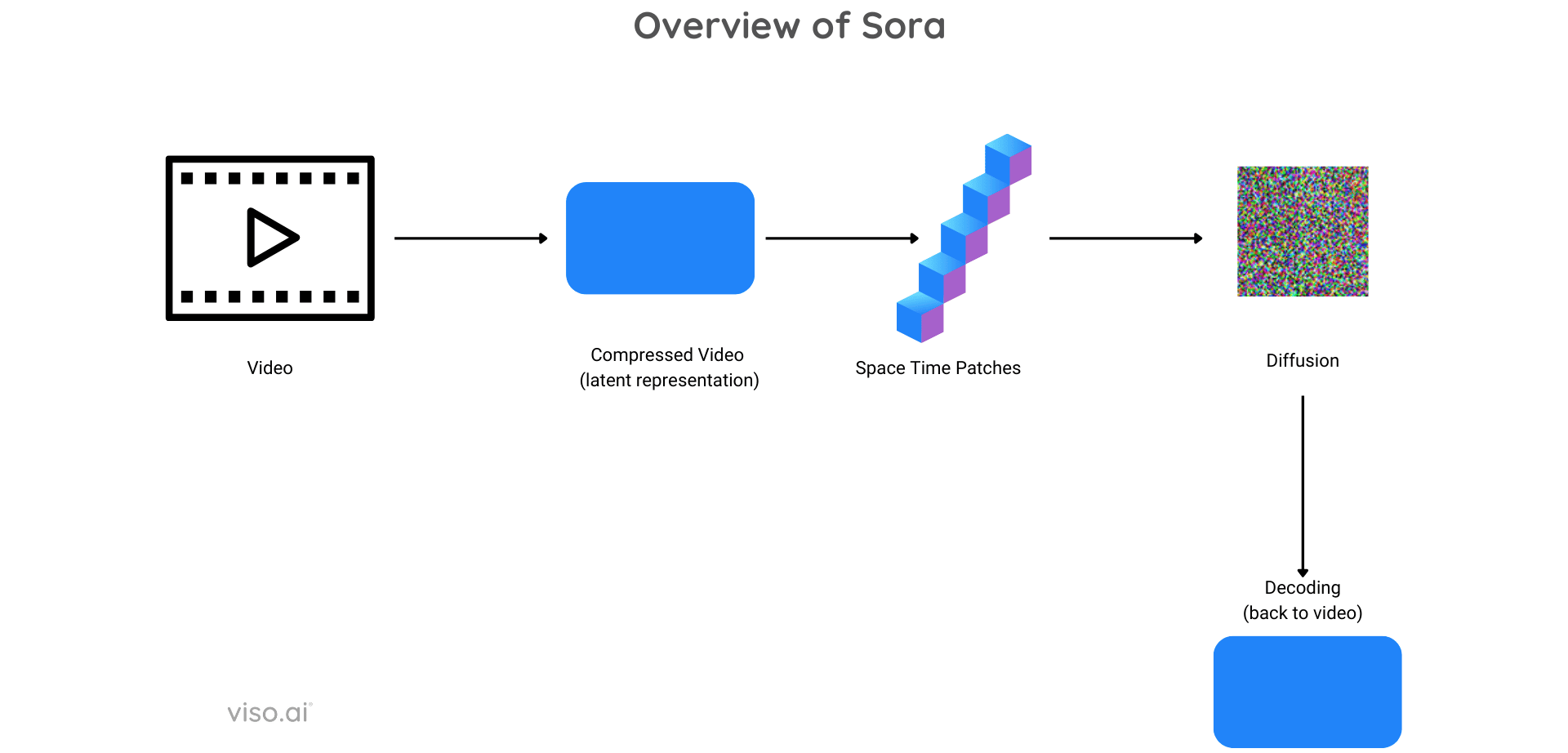
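The patch-to-token step can be made concrete with a minimal sketch. It assumes NumPy, a hypothetical `patchify_image` helper, and illustrative sizes (a 32x32 latent with 4 channels and a patch size of 2). None of these values come from the report.

```python
import numpy as np

def patchify_image(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) array into a (num_patches, patch*patch*C) token sequence."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("height and width must be divisible by the patch size")
    gh, gw = h // patch, w // patch
    # (gh, patch, gw, patch, C) -> (gh, gw, patch, patch, C) -> (gh*gw, patch*patch*C)
    tokens = image.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return tokens.reshape(gh * gw, patch * patch * c)

# Stand-in for an autoencoder latent; the sizes are illustrative assumptions.
latent = np.arange(32 * 32 * 4, dtype=np.float32).reshape(32, 32, 4)
tokens = patchify_image(latent, patch=2)
print(tokens.shape)  # (256, 16)
```

Each row of `tokens` is one patch in row-major grid order. A real DiT would project each row to the model width and add a positional encoding before the Transformer blocks.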

Sora as a Video DiT

Sora is a video version of DiT. The report highlights two main modifications that enable high-resolution, long-duration video generation.

Spatiotemporal Autoencoder

Prior attempts to extend image autoencoders to video encoded each frame independently, producing a sequence of compressed frames. Because frames are decoded without reference to their neighbors, that approach can cause temporal flicker in the output. Some prior work fine-tuned the decoder for temporal coherence by adding modules that operate across time.

Sora instead trains an autoencoder from scratch that compresses videos directly in both space and time. This spatiotemporal compression reduces spatial resolution and also shortens the temporal dimension of the video latent, which likely contributes to Sora's ability to generate minute-long videos. The report states Sora can also handle single-frame inputs (images), but it does not detail how the temporal compression handles length-one sequences.
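To see why temporal compression matters for long videos, the arithmetic can be sketched as follows. The downsampling factors (4x in time, 8x in space), the 30 fps frame rate, and the `latent_shape` helper are all hypothetical, since the report publishes none of these. Rounding up so that a single frame maps to one latent frame is only one possible convention, not a documented detail of Sora.

```python
import math

def latent_shape(frames, height, width, t_factor, s_factor):
    """Return (latent_frames, latent_height, latent_width) after compression.

    t_factor and s_factor are assumed downsampling factors. Ceil division
    is an assumed convention that keeps a length-one input as one latent frame.
    """
    return (math.ceil(frames / t_factor),
            math.ceil(height / s_factor),
            math.ceil(width / s_factor))

# One minute at an assumed 30 fps, 1920x1080 (width x height).
video = latent_shape(60 * 30, 1080, 1920, t_factor=4, s_factor=8)
image = latent_shape(1, 1080, 1920, t_factor=4, s_factor=8)
print(video)  # (450, 135, 240)
print(image)  # (1, 135, 240)
```

Under these assumed factors the denoiser sees 450 latent frames instead of 1800 raw frames, a 4x shorter temporal axis on top of the 64x spatial reduction.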

Spatiotemporal Patches for DiT

After encoding, a video becomes a compressed latent with reduced spatial and temporal dimensions. Sora's DiT models these compressed spatiotemporal latents. U-Net based denoisers often need additional temporal convolutions or temporal self-attention modules to handle video. DiT instead treats the video latent as a 3D volume, divides it into patches, flattens those patches into a 1D sequence, and feeds that sequence into a Transformer. Each patch becomes a token, and the Transformer operates on the token sequence.
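The flattening described above can be sketched in a few lines. This is an illustration under assumptions, not Sora's implementation: `patchify_video`, the latent shape, and the 2x2x2 patch size are all hypothetical.

```python
import numpy as np

def patchify_video(latent: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Flatten a (T, H, W, C) latent into (num_tokens, pt*ph*pw*C) spacetime tokens."""
    t, h, w, c = latent.shape
    if t % pt or h % ph or w % pw:
        raise ValueError("each dimension must be divisible by its patch size")
    gt, gh, gw = t // pt, h // ph, w // pw
    x = latent.reshape(gt, pt, gh, ph, gw, pw, c)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)  # patch-grid axes first, then within-patch axes
    return x.reshape(gt * gh * gw, pt * ph * pw * c)

# Illustrative latent: 8 latent frames of 16x24 with 4 channels (assumed sizes).
video_latent = np.zeros((8, 16, 24, 4), dtype=np.float32)
video_tokens = patchify_video(video_latent, pt=2, ph=2, pw=2)
print(video_tokens.shape)  # (384, 32)
```

The token count (here 4 x 8 x 12 = 384) changes with the input shape, while the per-token width stays fixed. That is what lets one Transformer consume videos of different sizes and lengths.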

Handling Arbitrary Resolution and Duration

The report emphasizes that Sora can be trained and run on videos of arbitrary resolution (up to 1920x1080), any aspect ratio, and any duration. Training data do not need to be resized or cropped to a fixed shape. This flexibility stems from the Transformer architecture and its positional encoding mechanism. By assigning positional encodings that represent each patch's spatiotemporal position, the model can preserve relative patch relationships regardless of input shape or length.

Prior approaches resized or cropped inputs to a fixed resolution, and the resulting models could inherit artifacts from the cropped examples. The report notes, for instance, that a model trained on square crops sometimes generates videos in which the subject is only partially in view. Models that enforced a fixed input grid also implicitly learned absolute positional relationships tied to that grid shape, which did not generalize to other resolutions. Sora appears to use a positional encoding that preserves 3D spatiotemporal coordinates for each patch, removing that restriction.
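The report does not specify Sora's positional encoding, so the following is only a sketch of one plausible scheme. It applies a standard sinusoidal encoding to each patch's (t, h, w) grid coordinate and concatenates the three results. The function names and the feature width of 48 are assumptions.

```python
import numpy as np

def sincos_1d(positions: np.ndarray, dim: int) -> np.ndarray:
    """Sinusoidal encoding of positions into `dim` features (dim must be even)."""
    freqs = 1.0 / (10000.0 ** (np.arange(dim // 2) / (dim // 2)))
    angles = positions[:, None] * freqs[None, :]
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=1)

def spacetime_pos_encoding(gt: int, gh: int, gw: int, dim: int) -> np.ndarray:
    """Encode every patch's (t, h, w) grid coordinate; returns (gt*gh*gw, dim)."""
    if dim % 6:
        raise ValueError("dim must be divisible by 6 (three axes, sin and cos each)")
    t, h, w = np.meshgrid(np.arange(gt), np.arange(gh), np.arange(gw), indexing="ij")
    per_axis = dim // 3
    parts = [sincos_1d(axis.reshape(-1).astype(np.float64), per_axis) for axis in (t, h, w)]
    return np.concatenate(parts, axis=1)

# Two differently shaped patch grids share one function; no grid size is baked in.
wide = spacetime_pos_encoding(gt=4, gh=8, gw=12, dim=48)
tall = spacetime_pos_encoding(gt=2, gh=12, gw=6, dim=48)
print(wide.shape, tall.shape)  # (384, 48) (144, 48)
```

Because the encoding depends only on a patch's coordinate, the patch at (t=1, h=2, w=3) receives the same vector in both grids. A learned table indexed by flattened 1D position would not have this property.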

Transformer Scalability

Transformers scale well with model size and compute. The report shows consistent improvements in generation quality as training time and compute increase, illustrating that the Transformer-based denoiser benefits from this scalability.

Language Understanding and Re-annotation

Many text-conditioned diffusion models were trained on paired image-text datasets, but human-written captions are often short or low-quality. Sora reuses OpenAI's DALL·E 3 re-annotation approach: a trained captioning model generates detailed captions for the training videos. This automated re-annotation addresses the scarcity and low quality of human-labeled video captions, and likely improves the model's ability to follow complex textual instructions. The report shows examples suggesting strong abstract and relational understanding, though details of the captioning model are not public.

Other Generation Capabilities

- Conditioning on existing images or videos: Sora can extend content before or after a provided clip, and can produce video from a single image. The report does not detail the implementation; one plausible mechanism is inversion of the input into latent space followed by replacement or interpolation of latent variables.

- Video editing: The report applies SDEdit, a simple image-to-image editing technique, to change the style or setting of an input video.

- Video content blending: The report suggests blending of two videos may be implemented via interpolation of their initial latents.

- Image generation: Sora can also produce images, reportedly up to 2048x2048 resolution.
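The latent interpolation idea mentioned for video blending can be sketched as follows. This is speculative: the report does not confirm the mechanism. Spherical interpolation (slerp) is swapped in here as a common choice for Gaussian latents because it roughly preserves their norm, and the latent shapes are placeholders.

```python
import numpy as np

def slerp(a: np.ndarray, b: np.ndarray, alpha: float) -> np.ndarray:
    """Spherical interpolation between two latents of the same shape."""
    a_flat, b_flat = a.reshape(-1), b.reshape(-1)
    cos_omega = np.dot(a_flat, b_flat) / (np.linalg.norm(a_flat) * np.linalg.norm(b_flat))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    if omega < 1e-6:  # nearly parallel latents: fall back to linear interpolation
        return (1.0 - alpha) * a + alpha * b
    return (np.sin((1.0 - alpha) * omega) * a + np.sin(alpha * omega) * b) / np.sin(omega)

rng = np.random.default_rng(0)
latent_a = rng.standard_normal((4, 8, 8, 4))  # stand-in for video A's initial latent
latent_b = rng.standard_normal((4, 8, 8, 4))  # stand-in for video B's initial latent

# Five blend weights from pure A (alpha=0) to pure B (alpha=1).
blend = [slerp(latent_a, latent_b, alpha) for alpha in np.linspace(0.0, 1.0, 5)]
```

In a real pipeline each interpolated latent would be passed through the denoiser and decoder. Here the list only demonstrates the interpolation step itself.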

Emergent Abilities

Trained on extensive data, Sora exhibits several emergent behaviors:

- 3D consistency: videos can exhibit natural camera viewpoint changes consistent across frames.

- Long-range coherence: Sora sometimes maintains object persistence across many frames, reducing instances where objects abruptly disappear.

- Interaction with the scene: in examples such as drawing, the content on a canvas changes in step with a simulated pen stroke.

- Stylized simulation: when prompted with the name of a game such as "Minecraft," the model can generate convincing footage in that specific visual style, indicating strong style-fitting capacity.

Limitations

The report includes failure cases, for example physics-related errors such as a glass not shattering when expected. These examples indicate that the model has not fully learned certain physical dynamics. Targeted training data might mitigate some of these limitations, but the report does not claim that these failure modes have been solved.

Conclusion

Sora presents notable advances in video diffusion: it supports high-resolution, long-duration generation and uses a Transformer-based denoiser that does not constrain input resolution or duration. The main technical contributions are:

- A spatiotemporal autoencoder that compresses videos in both space and time.

- A DiT design that accepts arbitrary input shapes by using spatiotemporal positional encodings.

The second point may prove particularly influential. If positional encodings are indeed generated from 3D spatiotemporal coordinates, as the report's results suggest, DiT avoids the limitation of one-dimensional positional encodings, which force a fixed input grid. This design choice may encourage a shift from U-Net to Transformer-based denoisers in future diffusion model research.

Three factors likely contributed to Sora's performance: the temporal compression in the autoencoder enabling long outputs, the Transformer-based DiT that removes spatial and temporal input shape constraints and benefits from model scaling, and a strong automated video captioning pipeline that supplies high-quality supervision. Beyond these technical points, further improvements generally require more compute and data.

Sora is likely to influence the generative content community and industry. Wider access to models with unrestricted input shapes and robust captioning pipelines would enable more research and applications. At present, many implementation and training details remain unpublished.

ALLPCB
