Overview

Annotating panoptic and instance segmentation datasets is labor intensive. Many generative models and NeRF-based methods try to synthesize panoptic segmentation training data directly, but they often suffer from class ambiguity in object overlap regions. This article summarizes PanopticNeRF-360, an extension of PanopticNeRF that uses coarse 3D annotations to quickly generate large numbers of high-quality RGB images and panoptic segmentation labels from novel viewpoints. The authors report reducing annotation time from 1.5 h to 0.75 min (a 120x improvement).
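
The 120x figure follows from converting both times to the same unit: 1.5 h is 90 min, and 90 / 0.75 = 120. A trivial check, using a hypothetical helper name:

```python
def annotation_speedup(manual_hours: float, automated_minutes: float) -> float:
    """Ratio of manual to automated annotation time, both expressed in minutes."""
    manual_minutes = manual_hours * 60.0
    return manual_minutes / automated_minutes


print(f"{annotation_speedup(1.5, 0.75):.0f}x")  # prints "120x"
```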

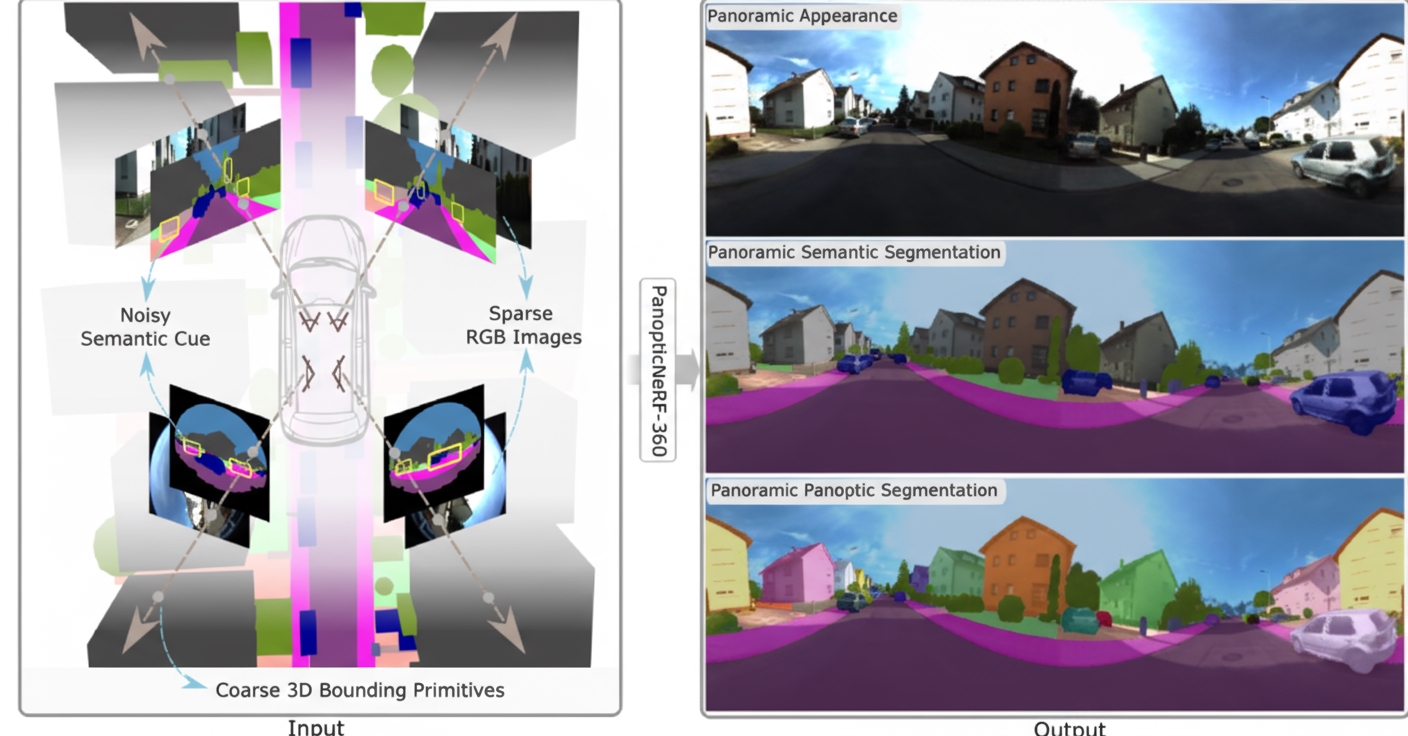

1. Results demonstration

PanopticNeRF-360 targets panoptic labeling of the full 360-degree surroundings, so in addition to a front-facing stereo pair it uses two side-facing fisheye cameras, whose wide field of view covers the sides of the vehicle. The remaining input is a set of coarse 3D annotations (cubes, ellipsoids, or polyhedra). From these inputs it generates continuous RGB renderings, panoptic segmentation, and instance segmentation across novel views.
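
A panoptic label gives every pixel a semantic class and, for countable objects, an instance id. As an illustration only, the sketch below packs both into one integer with a `semantic_id * 1000 + instance_id` scheme, loosely modeled on Cityscapes-style instance maps; the offset, the class ids, and the helper names are assumptions, not details of PanopticNeRF-360's output format.

```python
from typing import List, Tuple

# Hypothetical offset; any value larger than the maximum instance id works.
INSTANCE_OFFSET = 1000


def encode_panoptic(semantic: List[List[int]], instance: List[List[int]]) -> List[List[int]]:
    """Pack per-pixel semantic ids and instance ids into one panoptic id map.

    Instance id 0 marks "stuff" pixels (road, sky, ...) that have no instance.
    """
    panoptic = []
    for sem_row, inst_row in zip(semantic, instance):
        row = []
        for sem_id, inst_id in zip(sem_row, inst_row):
            if not 0 <= inst_id < INSTANCE_OFFSET:
                raise ValueError(f"instance id {inst_id} does not fit below the offset")
            row.append(sem_id * INSTANCE_OFFSET + inst_id)
        panoptic.append(row)
    return panoptic


def decode_panoptic(panoptic_id: int) -> Tuple[int, int]:
    """Recover (semantic_id, instance_id) from a packed panoptic id."""
    return divmod(panoptic_id, INSTANCE_OFFSET)


# A 2x2 toy image with arbitrary class ids: 7 is a "stuff" class,
# 26 is a "thing" class with two separate instances.
semantic = [[7, 26], [26, 26]]
instance = [[0, 1], [1, 2]]
print(encode_panoptic(semantic, instance))  # [[7000, 26001], [26001, 26002]]
print(decode_panoptic(26002))               # (26, 2)
```

Packing every pixel the same way, including stuff pixels, keeps decoding a single `divmod` with no special cases.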

The code has been released. The following summarizes the paper.

2. Abstract

Training perception systems for autonomous driving requires extensive annotation. Manual labeling in 2D images is highly labor intensive. Existing datasets provide dense annotations for prerecorded sequences but lack coverage for rarely seen viewpoints, which can limit the generalization of perception models. This work introduces PanopticNeRF-360, a method that combines coarse 3D annotations with noisy 2D semantic cues to produce consistent panoptic labels and high-quality images from arbitrary viewpoints. The key idea is to leverage the complementary strengths of 3D and 2D priors to mutually improve geometry and semantics.

3. Method

Goal

Both PanopticNeRF and PanopticNeRF-360 aim to reduce the cost of panoptic and instance segmentation labeling by enabling automatic or semi-automatic annotation.

Main idea

The core framework is built on NeRF because of its strong novel-view synthesis capability. The method constructs 3D semantic and instance fields and renders them to obtain large numbers of panoptic and instance segmentation annotations. Rather than modifying the NeRF architecture itself, the paper uses NeRF renderings to drive a joint optimization of geometry and semantics.
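
Rendering a 3D semantic field into a 2D label follows the usual NeRF volume-rendering recipe. The paper's exact formulation is not reproduced here; the sketch below applies the standard quadrature weights w_i = T_i (1 - exp(-sigma_i delta_i)) to per-sample class probabilities, in the style of semantic NeRF variants. The densities, step sizes, and class probabilities are toy values chosen for illustration.

```python
import math
from typing import List, Sequence


def render_weights(sigmas: Sequence[float], deltas: Sequence[float]) -> List[float]:
    """NeRF quadrature weights w_i = T_i * (1 - exp(-sigma_i * delta_i)),
    where T_i is the transmittance accumulated before sample i."""
    weights = []
    transmittance = 1.0
    for sigma, delta in zip(sigmas, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights


def render_semantics(sigmas: Sequence[float], deltas: Sequence[float],
                     class_probs: Sequence[Sequence[float]]) -> List[float]:
    """Blend per-sample class distributions along one ray into a pixel distribution."""
    weights = render_weights(sigmas, deltas)
    pixel = [0.0] * len(class_probs[0])
    for w, probs in zip(weights, class_probs):
        for c, p in enumerate(probs):
            pixel[c] += w * p
    return pixel


def render_label(sigmas: Sequence[float], deltas: Sequence[float],
                 class_probs: Sequence[Sequence[float]]) -> int:
    """2D semantic label = argmax of the rendered class distribution."""
    pixel = render_semantics(sigmas, deltas, class_probs)
    return max(range(len(pixel)), key=pixel.__getitem__)


# Toy ray with 3 samples and 2 classes (0 = road, 1 = car):
# free space, then a dense car surface, then road hidden behind it.
sigmas = [0.0, 50.0, 50.0]
deltas = [0.1, 0.1, 0.1]
class_probs = [[0.5, 0.5], [0.1, 0.9], [0.9, 0.1]]
print(render_label(sigmas, deltas, class_probs))  # 1: the occluding car dominates
```

Because the weights depend on density, the rendered label is only as good as the geometry, which is why refining geometry and semantics together matters.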

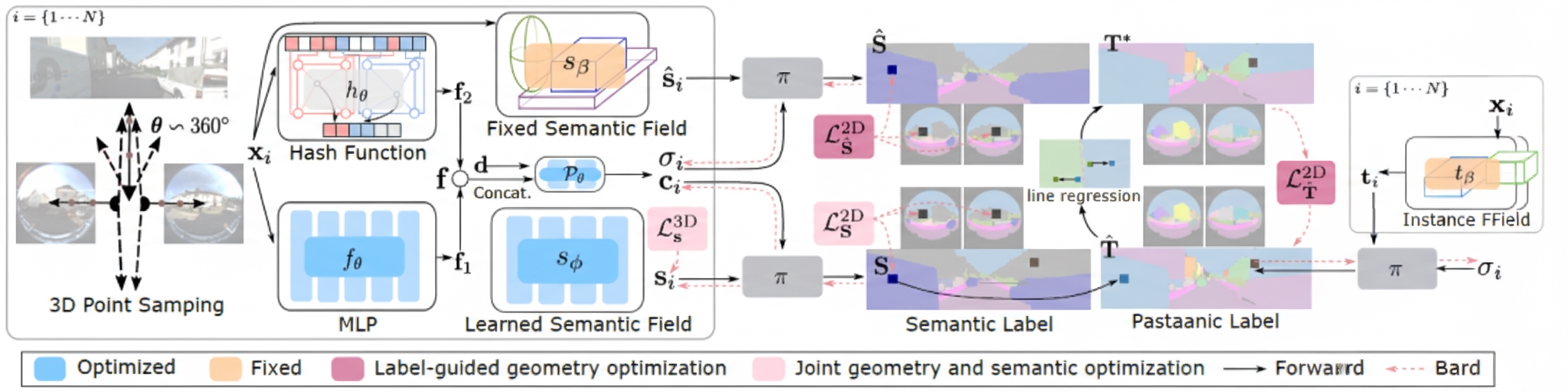

PanopticNeRF-360 architecture

The inputs are a front-facing stereo pair, two side-facing fisheye cameras, and coarse 3D annotations (cubes, ellipsoids, or polyhedra). For each spatial point x, two MLPs and a hash grid model geometry, semantics, and appearance, producing two feature vectors, f1 and f2, which are then combined.

The system maintains two semantic fields: a fixed semantic field derived from the coarse 3D annotations and a learnable semantic field. Each semantic field is rendered into 2D semantic labels. A fixed 3D instance field is also rendered to produce 2D panoptic segmentation. The instance and panoptic labels rendered from the fixed fields serve as pseudo-ground truth to guide geometry optimization, where the optimized quantity is the volume density in the semantic field. This joint geometry-semantic optimization resolves class ambiguity in regions where objects overlap in the 3D scene.
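
To make the idea of a fixed semantic field concrete, the hypothetical sketch below (not the authors' code) queries coarse primitives for a 3D point. It assumes axis-aligned boxes and ellipsoids only (polyhedra are omitted) and returns every label whose primitive contains the point, so overlap regions show up as multiple candidate labels. The `cross_entropy` helper illustrates the kind of loss typically used to supervise a learnable field with pseudo-labels; how PanopticNeRF-360 actually defines and weights its losses is not shown.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Set, Tuple

Point = Tuple[float, float, float]


@dataclass
class Box:
    """Hypothetical axis-aligned cuboid annotation (real annotations may be rotated)."""
    label: int
    lo: Point
    hi: Point

    def contains(self, x: Point) -> bool:
        return all(low <= v <= high for low, v, high in zip(self.lo, x, self.hi))


@dataclass
class Ellipsoid:
    """Hypothetical axis-aligned ellipsoid annotation."""
    label: int
    center: Point
    radii: Point

    def contains(self, x: Point) -> bool:
        return sum(((v - c) / r) ** 2 for v, c, r in zip(x, self.center, self.radii)) <= 1.0


def fixed_semantic_candidates(x: Point, primitives: Sequence) -> Set[int]:
    """Labels of every coarse primitive that contains x.

    More than one label means x lies where primitives overlap, i.e. the
    class ambiguity that the joint optimization is meant to resolve.
    """
    return {p.label for p in primitives if p.contains(x)}


def cross_entropy(probs: Sequence[float], target: int, eps: float = 1e-12) -> float:
    """Penalty for a predicted class distribution against a pseudo-ground-truth label."""
    return -math.log(max(probs[target], eps))


CAR, VEGETATION = 1, 2
scene = [
    Box(CAR, lo=(0.0, 0.0, 0.0), hi=(4.0, 2.0, 1.5)),
    Ellipsoid(VEGETATION, center=(4.0, 1.0, 1.0), radii=(1.0, 1.0, 1.0)),
]
print(fixed_semantic_candidates((1.0, 1.0, 1.0), scene))  # {1}: inside the car box only
print(fixed_semantic_candidates((3.5, 1.0, 1.0), scene))  # {1, 2}: ambiguous overlap
print(round(cross_entropy([0.25, 0.75], CAR), 4))         # 0.2877
```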

The paper makes two main contributions: it presents what the authors describe as the first method to generate high-quality panoptic segmentation from coarse 3D labels, and it proposes two optimization strategies that jointly refine geometry and semantic predictions.

4. Experiments

Experiments were conducted on the KITTI-360 dataset with comparisons to other 3D-to-2D and 2D-to-2D label transfer methods. Training used a single NVIDIA RTX 3090 GPU.

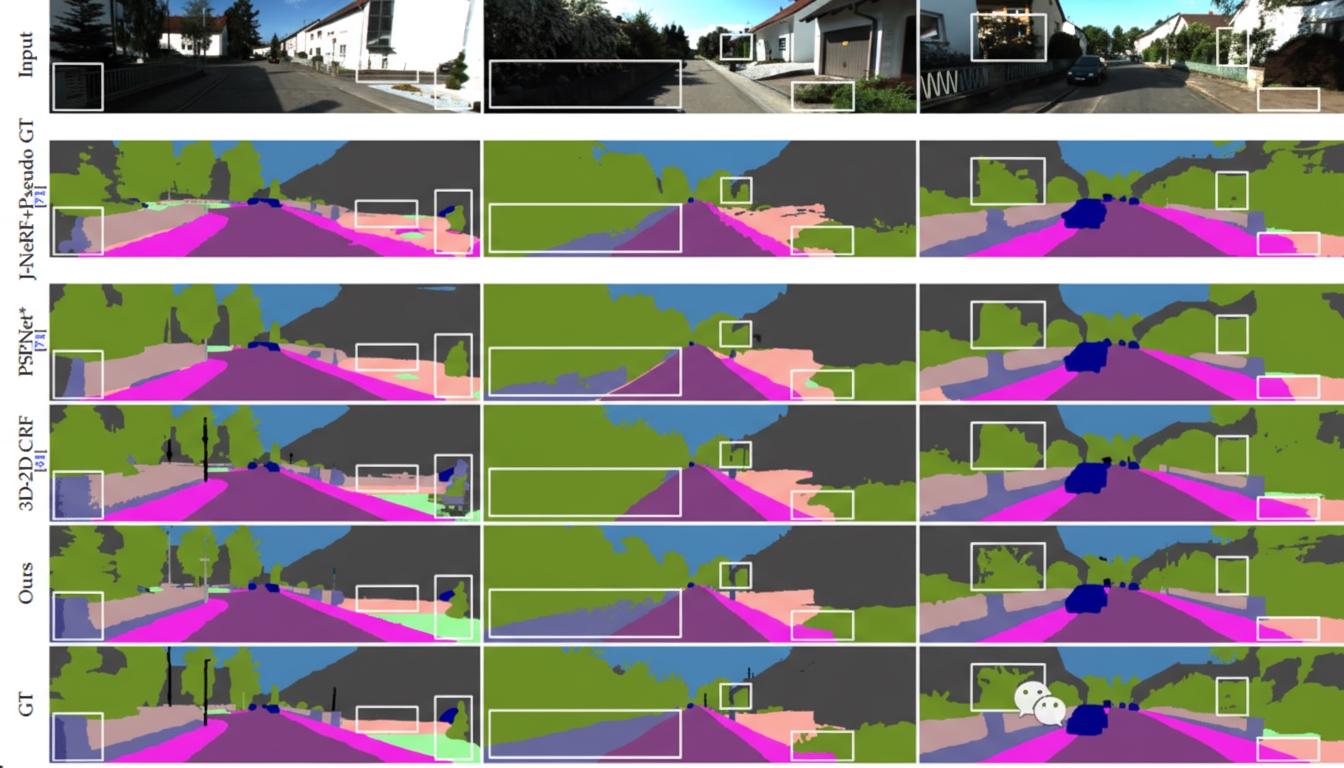

In quantitative 3D-to-2D semantic label transfer, PanopticNeRF-360 achieved the highest mIoU and accuracy. Compared to a CRF-based baseline, it improved mIoU by 2.4% and accuracy by 11.9%.
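
For readers unfamiliar with the two metrics, the sketch below computes mean IoU and pixel accuracy from flattened ground-truth and predicted label maps via a confusion matrix. The paper's exact evaluation protocol (for example, how void pixels are ignored or whether "accuracy" is per pixel or per class) is not given here, so treat this as a generic definition under the assumption of pixel accuracy and a mean over classes that appear.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(gt: Sequence[int], pred: Sequence[int], num_classes: int) -> List[List[int]]:
    """cm[g][p] counts pixels with ground-truth class g predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        cm[g][p] += 1
    return cm


def miou_and_accuracy(gt: Sequence[int], pred: Sequence[int], num_classes: int) -> Tuple[float, float]:
    cm = confusion_matrix(gt, pred, num_classes)
    ious = []
    for c in range(num_classes):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(num_classes)) - tp  # predicted c, actually other
        fn = sum(cm[c]) - tp                                  # actually c, predicted other
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both ground truth and prediction
            ious.append(tp / denom)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[c][c] for c in range(num_classes))
    miou = sum(ious) / len(ious) if ious else 0.0
    accuracy = correct / total if total else 0.0
    return miou, accuracy


miou, acc = miou_and_accuracy(gt=[0, 0, 1, 1], pred=[0, 1, 1, 1], num_classes=2)
print(round(miou, 4), acc)  # 0.5833 0.75
```

Mean IoU averages over classes, so errors on small or rare classes weigh as much as errors on large ones, whereas pixel accuracy is dominated by large classes such as road; the two can therefore move by quite different amounts.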

Qualitative comparisons show improved predictions in low-texture, exposure-challenged, and overlapping regions.

5. Conclusion

PanopticNeRF-360 extends PanopticNeRF to rapidly generate many novel-view panoptic labels and RGB images from coarse 3D annotations. Its joint geometry-semantic optimization helps resolve class ambiguity in overlapping regions, which makes it useful for dataset annotation. The approach still depends on coarse 3D annotations, which take effort to produce; an open question is whether comparable 2D segmentation labels can be generated without any coarse 3D annotations.

ALLPCB