Overview

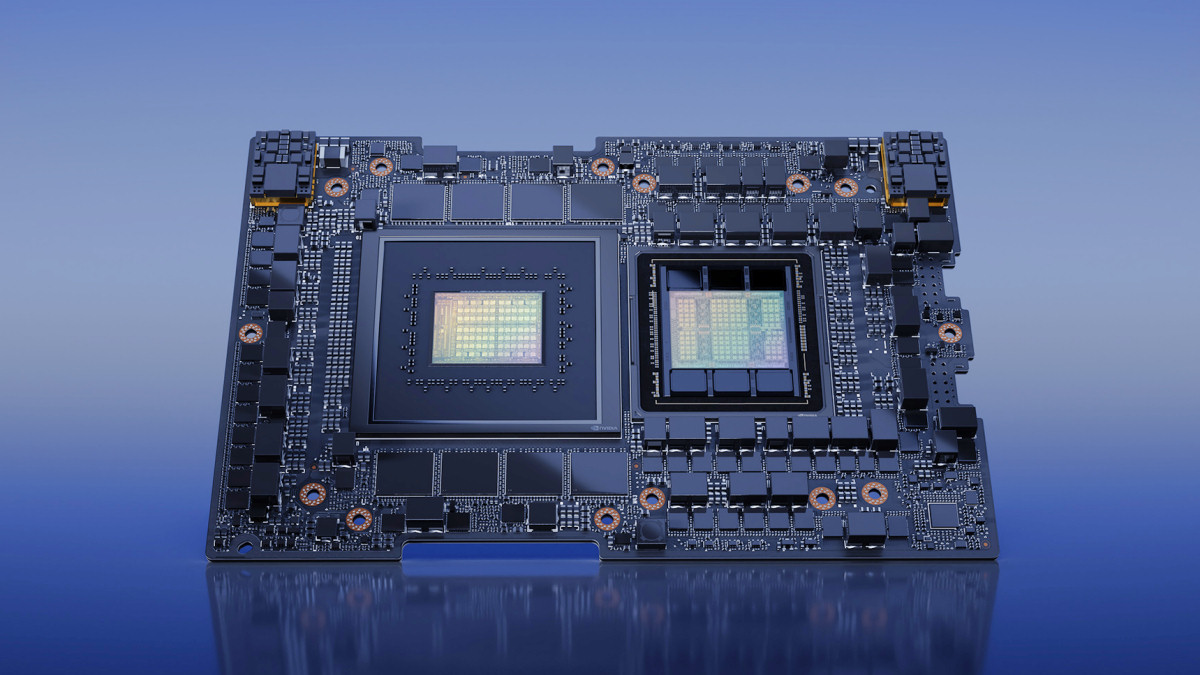

In recent months, many people across industries have become aware of ChatGPT's capabilities. ChatGPT's performance is reportedly due in part to tens of thousands of NVIDIA A100 GPUs used for AI training and inference.

This article briefly summarizes relevant GPU concepts and characteristics.

What is a GPU?

GPU stands for graphics processing unit.

In simple terms, a GPU is a chip originally specialized for graphics processing. Beyond rendering, it is also used for numerical analysis, financial computing, cryptographic work, and other mathematical and geometric computations. GPUs are found in PCs, workstations, game consoles, smartphones, tablets, and other smart devices.

The relationship between a GPU and a graphics card is analogous to the relationship between a CPU and a motherboard: the GPU is the core component of a graphics card. A graphics card typically contains the GPU plus video memory (VRAM) chips, voltage regulator modules (VRMs), buses, cooling fans, and peripheral interfaces.

GPU vs CPU

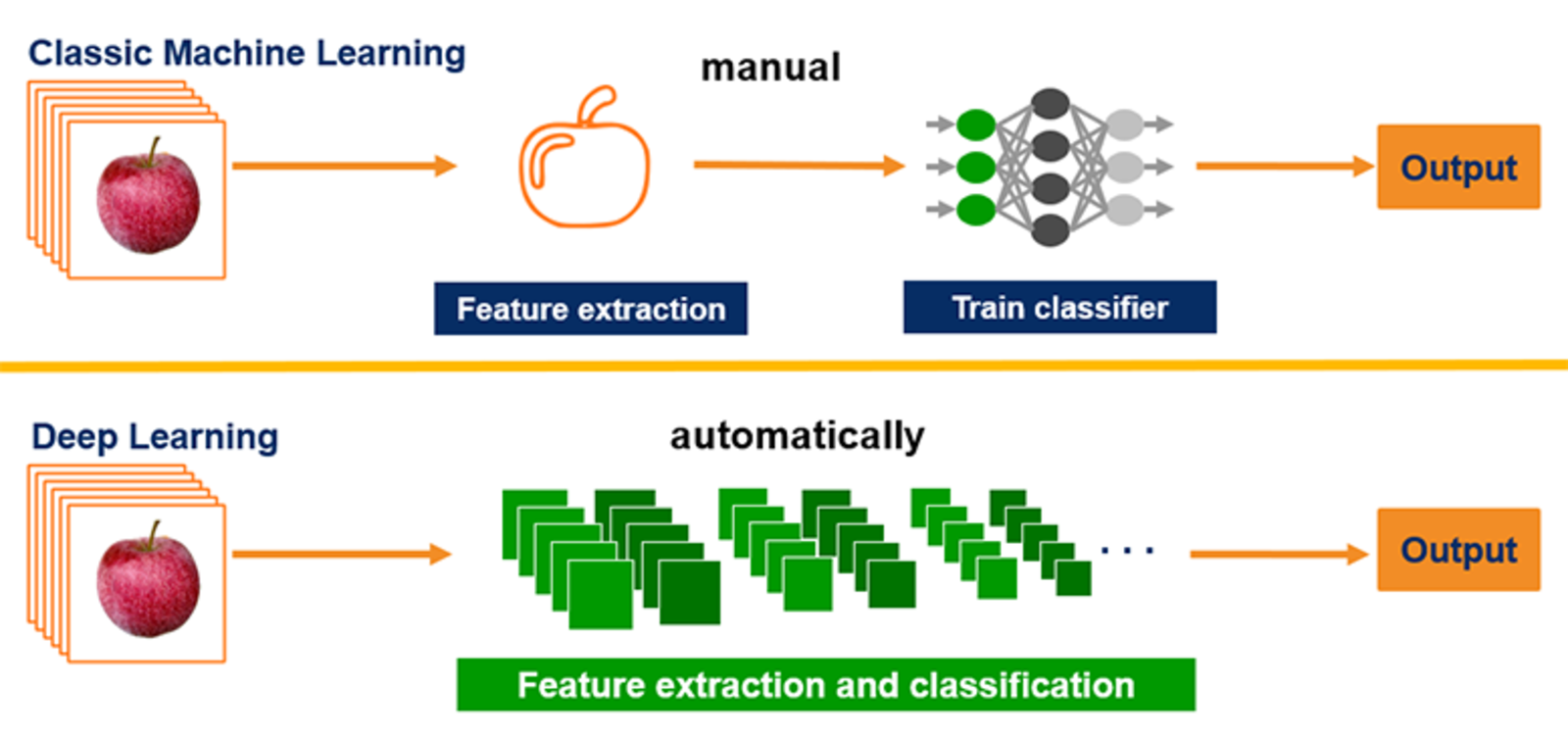

Which is stronger depends on the task. High-end GPUs can contain more transistors than many CPUs. CPUs excel at control and complex logic; GPUs excel at massively parallel mathematical computation and graphics rendering. This is why large-scale AI inference often uses many high-performance GPUs.

Different architectural composition

Both CPUs and GPUs are processors built from three functional parts: arithmetic logic units (ALUs), control units, and cache units. However, the relative proportions of these parts differ significantly.

- In a typical CPU, cache accounts for roughly 50%, control about 25%, and ALUs about 25%.

- In a typical GPU, cache is roughly 5%, control about 5%, and ALUs about 90%.

These architectural differences imply that CPUs provide more balanced capabilities and handle complex, varied tasks efficiently, while GPUs are optimized for performing a very large number of relatively simple arithmetic operations in parallel.

Conceptually, a GPU is like a large team of assembly-line workers that perform many simple operations quickly, while a CPU is like a specialist capable of handling more complex computations such as logic operations, user request handling, and network communication.

In CPUs, cache structures tend to be multi-level and sizable; GPUs typically have smaller L1 and L2 caches. CPUs emphasize per-thread performance and devote substantial control logic to keeping the control flow from stalling, while keeping the power consumption of floating-point work efficient. GPUs have a simpler control structure and focus on single-precision or double-precision floating-point work, trading per-thread speed for higher overall throughput.

CPUs are designed for low-latency response and use multi-level caches to stay responsive across multiple tasks. GPUs often use a batch-processing model, in which tasks are queued and then executed in sequence or in parallel batches.

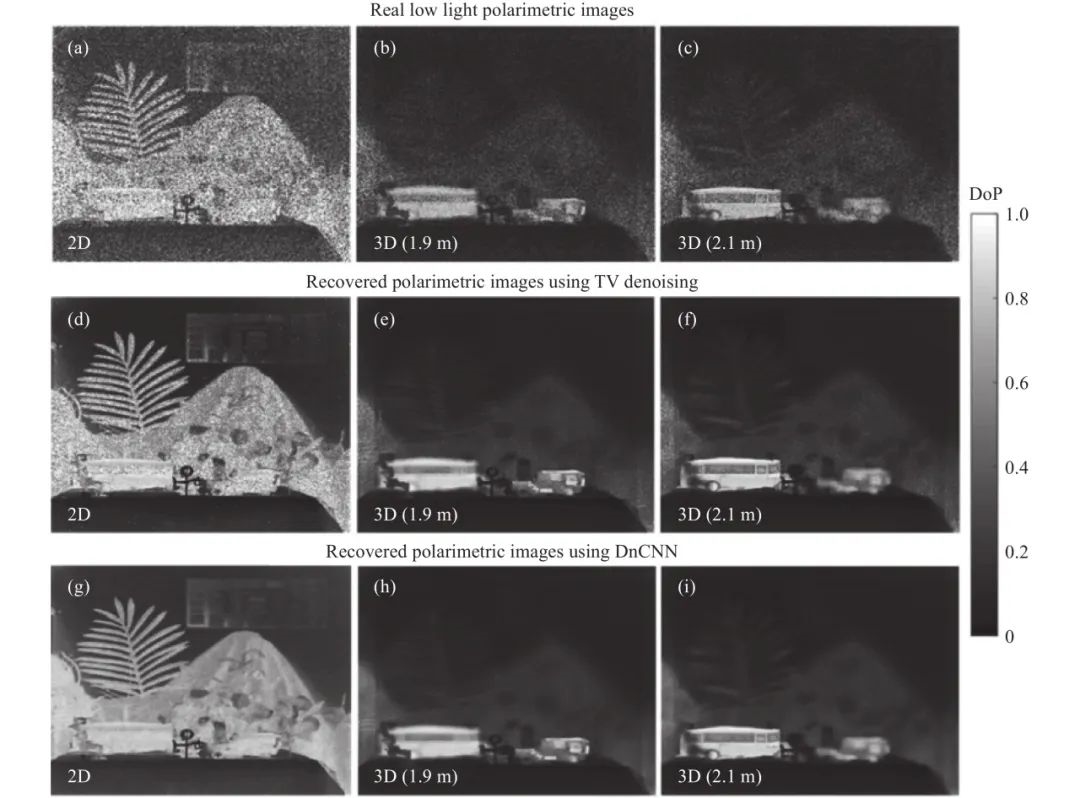

GPU in graphics processing

Consider real-time rendering of a 1080x720 image. That frame has 1080 × 720 = 777,600 pixels. At a basic frame rate of 24 frames per second, the system must process 777,600 × 24 = 18,662,400 pixels per second.

For higher resolutions such as 1920x1080, 2K, 4K, or 8K video, the computation increases dramatically. In real-time scenarios like gaming, relying solely on the CPU for rendering would be impractical due to the required compute throughput.
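The pixel arithmetic above can be reproduced with a few lines of Python. This is only a sketch: the function name `pixels_per_second` is made up for illustration, the 4K and 8K dimensions are assumed to be the common UHD sizes (3840x2160 and 7680x4320), and "2K" is left out because the term covers several different resolutions.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels that must be produced each second for a given frame size and rate."""
    return width * height * fps


# (label, width, height); the 4K and 8K sizes are assumed UHD dimensions
RESOLUTIONS = [
    ("1080x720", 1080, 720),
    ("1920x1080", 1920, 1080),
    ("4K UHD", 3840, 2160),
    ("8K UHD", 7680, 4320),
]

FPS = 24  # the basic frame rate used in the text
baseline = pixels_per_second(1080, 720, FPS)

for label, width, height in RESOLUTIONS:
    rate = pixels_per_second(width, height, FPS)
    # Show each workload and how it compares with the 1080x720 example
    print(f"{label:>10}: {rate:>13,} pixels/s ({rate / baseline:.1f}x baseline)")
```

Each pixel usually needs many arithmetic operations rather than one, so these figures understate the real compute demand; they only show how quickly the pixel count itself grows.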

Displayed 3D objects undergo multiple coordinate transformations, and surfaces are affected by environmental lighting, producing colors and shadows. These effects include diffuse reflection, refraction, transmission, and scattering.
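To make these two steps concrete, the following sketch rotates a surface normal (one example of a coordinate transformation) and then computes a diffuse lighting term with the standard Lambertian cosine rule. The article does not specify which transformations or lighting model a particular renderer uses, so the helper names `rotate_y`, `normalize`, and `lambert_diffuse` and the choice of model are assumptions made purely for illustration.

```python
import math


def rotate_y(point, angle_rad):
    """Rotate a 3D point or direction around the Y axis."""
    x, y, z = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x + s * z, y, -s * x + c * z)


def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(a * a for a in v))
    return tuple(a / length for a in v)


def lambert_diffuse(normal, to_light):
    """Diffuse intensity in [0, 1]: cosine of the angle between the
    surface normal and the direction to the light, clamped at zero."""
    n = normalize(normal)
    d = normalize(to_light)
    return max(0.0, sum(a * b for a, b in zip(n, d)))


# A surface initially facing the light, then turned 60 degrees away from it
normal = rotate_y((0.0, 0.0, 1.0), math.radians(60))
intensity = lambert_diffuse(normal, (0.0, 0.0, 1.0))
print(f"diffuse intensity: {intensity:.2f}")
```

A renderer repeats calculations of this kind for every vertex and every pixel, and each one is independent of the others, which is what makes the workload suitable for parallel hardware.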

Example: NVIDIA RTX 3090

The NVIDIA RTX 3090 contains 10,496 CUDA cores. Each core can execute integer and floating-point operations, supported by logic that queues operands and collects results.

CUDA cores are grouped into streaming multiprocessors (SMs), each of which can be viewed as an independent task-processing unit. From a high-level perspective, one GPU can be seen as a cluster in which each CUDA core performs part of the image-processing workload in parallel.

Using the earlier example of 18,662,400 pixels per second, distributing the workload across 10,496 cores results in roughly 1,778 pixels per second per core.
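The division above can be checked directly. The sketch below assumes the workload splits perfectly evenly across cores, which is a simplification: real GPUs schedule work in groups of threads, and the function name `pixels_per_core` is hypothetical.

```python
PIXELS_PER_SECOND = 1080 * 720 * 24  # 18,662,400, from the earlier example
CUDA_CORES = 10_496                  # RTX 3090 core count quoted in the text


def pixels_per_core(total_pixels: int, cores: int) -> float:
    """Average share of the pixel workload if it divides evenly across cores."""
    return total_pixels / cores


share = pixels_per_core(PIXELS_PER_SECOND, CUDA_CORES)
print(f"{share:.0f} pixels per second per core")
```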

Besides CUDA core count, other factors that affect GPU performance include:

- Cache architecture and size

- Floating-point operation format and accuracy

- Response and scheduling mechanisms

- Core clock frequency: higher frequency increases performance and power consumption

- Memory bus width: measured in bits; a wider bus transfers more data per clock cycle

- Video memory capacity: larger capacity allows more data to be held on the card

- Video memory frequency: higher frequency increases graphics data transfer speed
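Several of these factors combine into simple theoretical peak figures. The sketch below uses two common rules of thumb: memory bandwidth is the bus width in bytes multiplied by the effective data rate per pin, and peak single-precision throughput is the core count multiplied by two operations per cycle (one fused multiply-add) and the clock frequency. The RTX 3090 numbers plugged in (384-bit bus, 19.5 Gbps effective memory data rate, 1.695 GHz boost clock) are not from this article; they are assumed from NVIDIA's published specifications, and the function names are hypothetical.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical memory bandwidth in GB/s: bytes per transfer times data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def peak_fp32_tflops(cores: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput, counting a fused multiply-add as 2 operations."""
    return cores * 2 * clock_ghz / 1000  # GFLOPS -> TFLOPS


# Assumed RTX 3090 figures (not stated in the article)
BUS_WIDTH_BITS = 384
MEMORY_DATA_RATE_GBPS = 19.5
BOOST_CLOCK_GHZ = 1.695
CUDA_CORES = 10_496  # core count quoted in the text

bandwidth = memory_bandwidth_gb_s(BUS_WIDTH_BITS, MEMORY_DATA_RATE_GBPS)
tflops = peak_fp32_tflops(CUDA_CORES, BOOST_CLOCK_GHZ)

print(f"memory bandwidth: {bandwidth:.0f} GB/s")
print(f"peak FP32:        {tflops:.2f} TFLOPS")
```

These are upper bounds. Sustained performance depends on the other factors in the list, such as cache behavior and scheduling.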

Summary

Whether for graphics rendering, numerical analysis, or AI inference, the underlying approach of GPUs is to decompose heavy mathematical workloads into many smaller, simpler tasks. GPUs use many parallel processing units to execute these tasks concurrently. One way to conceptualize a GPU is as a cluster in which each streaming processor acts like a small CPU core.
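The decomposition idea can be illustrated on a CPU with Python's standard library. This is only an analogy under stated assumptions: the brightness adjustment, the function names, and the choice of four workers are all made up for illustration, and Python threads do not deliver GPU-style parallel speedups for this kind of work. The point is the structure: one large job is split into chunks, each chunk is processed independently with the same simple operation, and the results are stitched back together.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """The small, simple task: raise one 8-bit pixel value, clamped to 255."""
    return min(255, pixel + amount)


def process_chunk(chunk):
    """Apply the same simple operation to every pixel in one chunk."""
    return [brighten(p) for p in chunk]


def split(data, parts):
    """Split data into at most `parts` contiguous chunks of near-equal size."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def brighten_image(pixels, workers=4):
    """Decompose the image, process chunks concurrently, then reassemble."""
    chunks = split(pixels, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks
        results = list(pool.map(process_chunk, chunks))
    return [p for chunk in results for p in chunk]


image = [0, 100, 200, 250] * 1000  # a stand-in for 4,000 grayscale pixels
out = brighten_image(image)
print(out[:4])
```

Because no pixel depends on any other, the chunks can be processed in any order or all at once, which is the property that GPU hardware exploits at a much larger scale.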

The above provides a concise overview of GPU concepts and working principles. There are many deeper topics in graphics processing, such as pixel coordinate transformations and triangle rasterization, which can be explored further by interested readers.

ALLPCB
