In recent years, edge AI has grown in popularity. The global market for edge AI is projected to expand at a 27.8% compound annual growth rate through 2035, reaching a value of about $356.84 billion.

Several factors drive this demand. Organizations often have security concerns about sending sensitive or proprietary data to the cloud, which edge processing can mitigate. Edge processing also reduces latency, which is critical for real-time applications that require immediate decisions. Industrial IoT (IIoT) devices generate data that enables data-driven operations, further increasing use cases for edge AI. Applications from portable medical devices to wearables and IIoT are driving rapid expansion of edge AI deployments.

As edge AI becomes more widespread, demand is increasing for components that can meet the data-processing requirements of embedded systems.

Choosing compute: MCU or microprocessor

The majority of IoT devices deployed in industrial and other embedded applications today are low-power devices with very limited memory. Processing for these devices often comes from small embedded microcontrollers (MCUs). MCUs use low-power architectures and, compared with systems based on microprocessors, offer cost and energy-efficiency advantages for embedded systems.

Before edge AI became common, MCUs generally met IoT processing needs. Traditional MCUs, however, often lack the compute required for the more complex machine learning algorithms that characterize edge AI applications. Those algorithms typically run on more powerful microprocessors or on GPUs, which carry trade-offs of their own, most notably higher power consumption. Because microprocessor- or GPU-driven edge compute is not always the most energy-efficient option, many vendors continue to rely on MCUs.

Standalone MCUs are less expensive than GPUs and microprocessors. To scale edge AI applications, there is an increasing need to improve MCUs' computational capabilities while retaining their low-cost, low-power advantages.

Over time, multiple factors have converged to drive continued improvements in edge MCUs.

Factors enabling MCU use at the edge

Although traditional MCUs are often seen as too lightweight for AI-related data processing, optimizations in MCU design combined with broader changes in the supporting technology ecosystem are enabling MCU use in edge AI scenarios.

These factors include:

- Integration of AI accelerators in MCUs: When an MCU alone cannot meet edge compute demands, integrating AI/ML accelerators such as neural processing units (NPUs) or digital signal processors (DSPs) can improve performance.

- Edge-optimized AI models: Complex, heavyweight AI and machine learning algorithms cannot simply be ported to MCUs. They must be optimized for limited compute resources. Compact model architectures such as MobileNet, together with the optimization practices associated with TinyML (for example, quantization and pruning), enable MCUs at the edge to run AI algorithms. STMicroelectronics, for example, offers STM32Cube.AI, a software solution that converts neural networks into C code optimized for STM32 MCUs. Even with processing and memory constraints, combining such tools with STM32N6 devices helps achieve the performance required by edge AI applications.

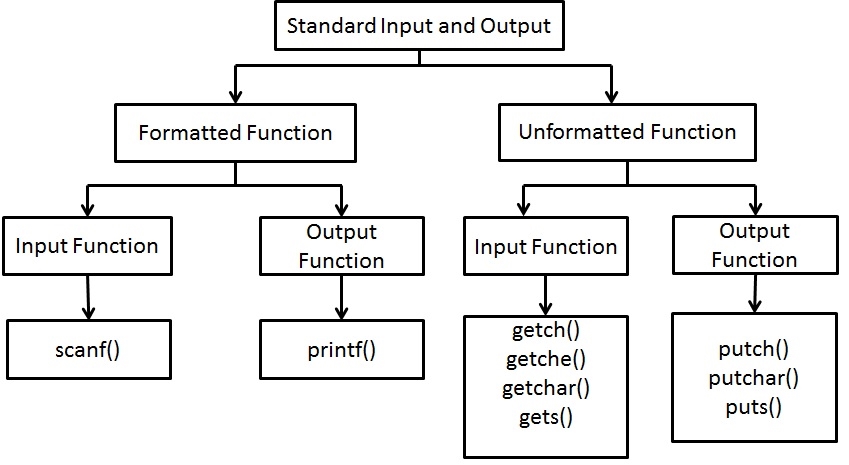

- AI ecosystem growth: Hardware capable of edge AI processing is not sufficient on its own. Deploying AI at the edge requires developer-friendly ecosystems to simplify deployment. Tools like TensorFlow Lite for Microcontrollers and resources from open communities such as Hugging Face provide pretrained models and code libraries that developers can test and customize for specific use cases. These ecosystems lower the barrier to AI adoption, allowing organizations with limited resources to access and use AI technologies without developing proprietary models from scratch.

- Vendor hardware and software ecosystems: Some vendors provide integrated hardware and software ecosystems, such as ST Edge AI Suite, which consolidate AI libraries and tools to help developers find models, data sources, tools, and compilers that generate code for MCUs.

- Pretrained models in model libraries: Pretrained models available in formats like ONNX (Open Neural Network Exchange) offer starting points for developers across domains such as computer vision, natural language processing, generative AI, and graph machine learning.

- Standardization and interoperability: Open and standardized model formats help achieve seamless integration across hardware platforms. Compatibility across software tools and MCUs reduces implementation barriers for edge AI.

- Focus on edge security: Processing data on the MCU reduces or eliminates the need to send it to the cloud, and the hardware itself can provide additional security layers. MCUs commonly include features such as hardware encryption and secure boot to protect data and AI models from malicious tampering.
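To make the "edge-optimized AI models" point above more concrete, here is a minimal sketch of symmetric 8-bit weight quantization, one common way to shrink a model for a memory-constrained MCU. It is a generic illustration written for this article, not the method used by STM32Cube.AI or any other specific tool; the function names and the sample weights are hypothetical.

```python
def quantize_symmetric_int8(weights):
    """Map float weights to int8 values using one shared scale (per-tensor)."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale would work.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in quantized]


# Hypothetical weights standing in for one small layer of a trained model.
weights = [-0.82, -0.31, 0.0, 0.07, 0.45, 1.27]

q, scale = quantize_symmetric_int8(weights)
restored = dequantize(q, scale)

# Worst-case rounding error is at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

# Storage cost: 4 bytes per float32 weight versus 1 byte per int8 weight.
float32_bytes = len(weights) * 4
int8_bytes = len(weights) * 1

print("int8 weights:", q)
print("max reconstruction error:", max_error)
print("bytes (float32 -> int8):", float32_bytes, "->", int8_bytes)
```

The fourfold reduction in weight storage is the main reason quantized models fit MCU-class memory. Real toolchains typically add per-channel scales, activation quantization, and calibration data, none of which are shown here.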

STM32N6 hardware features

The STM32N6 series includes high-performance MCUs with NPUs, camera module bundles, and exploration kits. These devices are based on the Arm Cortex-M55 core and include features that make them suitable for edge AI:

- Neural ART accelerator capable of running neural network models. The accelerator is optimized for compute-intensive AI algorithms and operates with a 1 GHz clock to deliver 600 GOPS at an average efficiency of 3 TOPS/W.

- Support for Arm M-profile Vector Extension (MVE) "Helium" instructions, a set of vector instructions that add neural network and DSP capabilities to the core. These instructions are designed to handle 16-bit and 32-bit floating-point operations efficiently, which is important for ML model processing.

- Support from ST Edge AI Suite, a repository of free software tools, use cases, and documentation that helps developers of varying experience create AI for the smart edge. The suite includes tools such as the ST Edge AI Developer Cloud, dedicated neural networks in the STM32 model library, and a board farm for real-world benchmarking.

- Nearly 300 configurable multiply-accumulate units and two 64-bit AXI memory buses, with throughput up to 600 GOPS.

- Built-in dedicated image signal processor (ISP) that can connect directly to multiple 5-megapixel cameras. To build systems with cameras, developers usually fine-tune the ISP for specific CMOS sensors and lenses, which often requires expertise or third-party assistance. To support this, ST provides a desktop tool called iQTune that runs on Linux workstations, communicates with embedded code on STM32 devices, analyzes color accuracy, image quality, and statistics, and configures ISP registers accordingly.

- Support for MIPI CSI-2, a common mobile camera interface, so that no external ISP compatible with that camera serial interface is required.

- Integration of multiple on-chip peripherals, enabling developers to run neural networks and GUIs concurrently without deploying multiple MCUs.

- Robust security features, with the devices targeting SESIP Level 3 and PSA Level 3 certifications.
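The throughput and efficiency figures quoted in the list above can be related with some back-of-the-envelope arithmetic. The sketch below assumes the common convention that one multiply-accumulate counts as two operations, rounds "nearly 300" MAC units to 300, and treats the average efficiency figure as if it applied at peak throughput. The 300-million-MAC model is hypothetical, and the resulting inference rate is an idealized upper bound, not a benchmark.

```python
# Figures taken from the feature list; MAC_UNITS is rounded for illustration.
MAC_UNITS = 300                # "nearly 300" multiply-accumulate units
CLOCK_HZ = 1e9                 # 1 GHz accelerator clock
OPS_PER_MAC = 2                # assumption: 1 multiply + 1 add per MAC
EFFICIENCY_TOPS_PER_W = 3.0    # average efficiency quoted in the text

# Peak throughput if every MAC unit is busy on every cycle.
peak_gops = MAC_UNITS * OPS_PER_MAC * CLOCK_HZ / 1e9

# GOPS divided by TOPS/W yields milliwatts (1e9 / 1e12 = 1e-3 W).
power_at_peak_mw = peak_gops / EFFICIENCY_TOPS_PER_W


def ideal_inferences_per_second(model_macs, peak_gops):
    """Upper bound on inference rate, ignoring memory stalls and overheads."""
    ops_per_inference = model_macs * OPS_PER_MAC
    return peak_gops * 1e9 / ops_per_inference


# Hypothetical vision model needing 300 million MACs per inference.
HYPOTHETICAL_MODEL_MACS = 300e6
ideal_rate = ideal_inferences_per_second(HYPOTHETICAL_MODEL_MACS, peak_gops)

print("peak throughput (GOPS):", peak_gops)
print("estimated power at peak (mW):", power_at_peak_mw)
print("ideal inferences per second:", ideal_rate)
```

Under these assumptions the MAC count and clock rate are consistent with the 600 GOPS figure. Sustained throughput on a real model depends on memory bandwidth, layer shapes, and how well the network maps to the accelerator, so measured rates will be lower than this bound.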

Conclusion

Historically, running machine learning on the edge required high-performance microprocessors to handle complex algorithms. With feature-rich MCUs such as the STM32N6 series, it is now more feasible to broaden AI deployment at the edge while retaining MCU advantages in cost and power consumption. Vendors are increasingly offering end-to-end ecosystems that include both software and hardware components for edge inference.

ALLPCB
