Overview

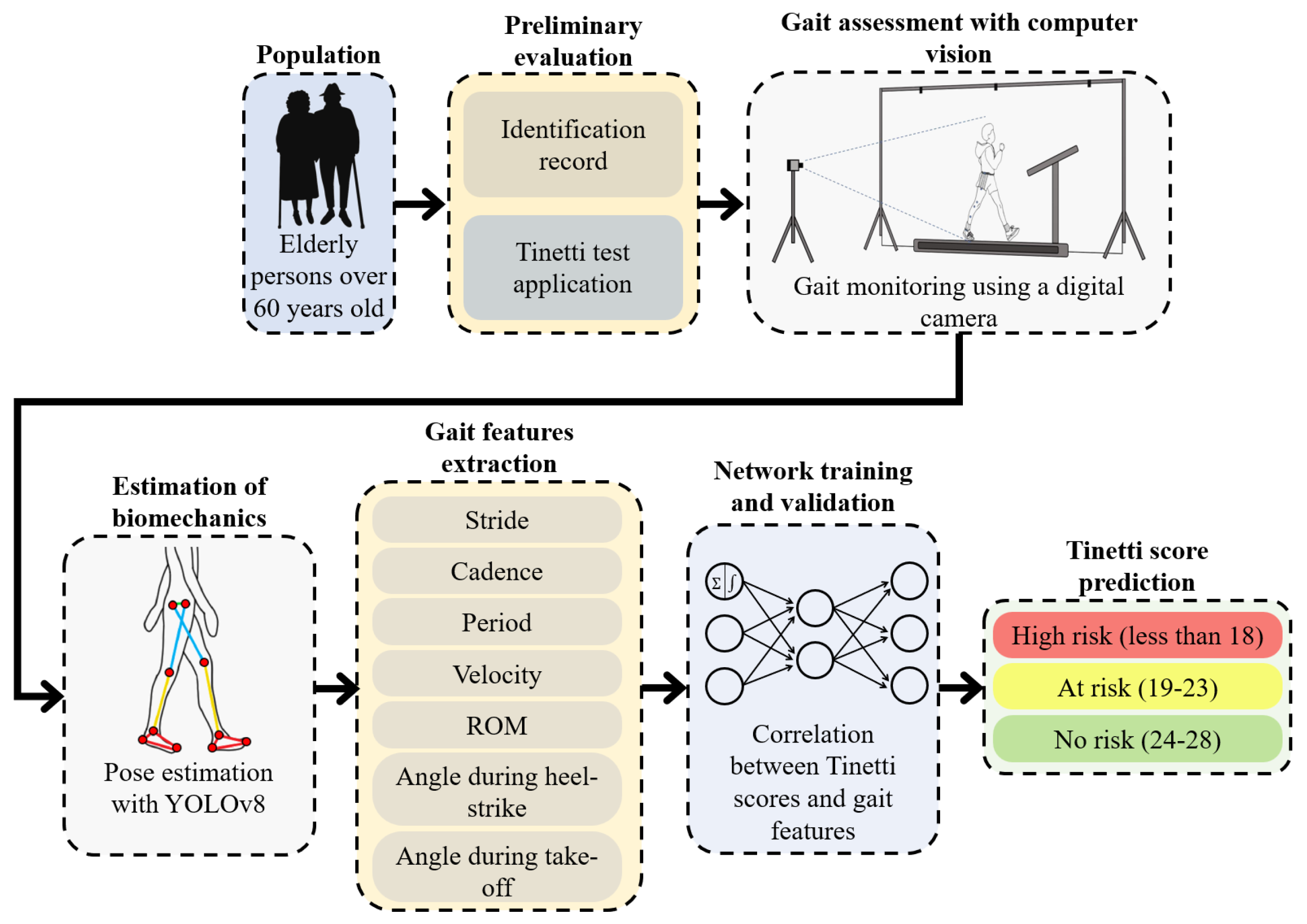

This article summarizes a paper on using computer vision and machine learning to recognize gait patterns and predict falls. The study aims to develop a computer vision system for gait recognition that classifies gait patterns from measured lower-limb variables, and to identify the machine learning algorithms best suited to distinguishing gait patterns associated with ground-level falls.

Background and research question

Gait recognition has applications in predicting fall probability in older adults, assessing function during rehabilitation, and training patients with lower-limb movement disorders. Clinicians find it difficult to distinguish visually similar movement patterns associated with different pathologies, and automated identification and classification of abnormal gait remains a major challenge in clinical practice. The long-term goal of this work is to use AI and machine learning to develop a computer vision system for gait recognition. This study investigates whether computer vision and ML methods can differentiate gait patterns related to ground-level falls. Spatiotemporal gait data were captured from seven healthy participants with the Kinect motion system under three walking conditions: normal gait, pelvic obliquity gait, and knee hyperextension gait.

Participants

Seven healthy participants aged 23 to 29 years were recruited, including three males and four females. No participant had a history of neurological or musculoskeletal disorders. Each participant provided informed consent before the experiment.

Equipment and data capture

The equipment consisted of an Azure Kinect DK integrated camera, an h/p/cosmos treadmill, and a laptop computer. The Azure Kinect DK camera recorded skeletal joint keypoints and angles. It was positioned at the participant's side, aimed along the center axis of the frame, so that the whole body was captured. Participants were video-recorded while performing gait tasks designed to capture three gait patterns: normal gait (NG), pelvic obliquity gait (PO, a pelvic hiking gait), and knee hyperextension gait (KH, forward-leaning trunk gait).

Experimental procedure

Before the experiment, participants were shown how to perform the three gait patterns and were allowed three minutes of practice on the treadmill. A practice trial was conducted to verify device connections and system setup. During the formal trials, participants were asked to look forward and maintain a stable gait on the treadmill.

The three gait types were: normal gait during walking (NG); an abnormal pelvic obliquity gait (PO), characterized by trunk lift to the left followed by pelvic hike while the right leg advances; and a right-limb knee hyperextension gait (KH), characterized by a slight forward trunk lean and the right knee advancing. Only the right lower limb was used to produce the abnormal gait patterns. These gait patterns occur at moments that are critical for falls, so they are relevant for fall detection.

The treadmill was set to a constant speed of 0.1 m/s, and participants walked at this speed for one minute per trial. Each participant performed each gait pattern five times, yielding five valid datasets per gait pattern and a total of 15 valid experimental datasets per participant for offline analysis.

Data analysis and feature extraction

The study used the Azure Kinect SDK and the Azure Kinect Body Tracking SDK to track motion within the camera's depth field of view. During tracking, 32 joint angle readings were recorded and saved to the computer. Spatial coordinates and skeletal joint nodes were obtained for the upper limbs, lower limbs, spine, shoulders, and hips. Only the lower-limb joint data were extracted for gait pattern recognition: joint flexion and extension angles were computed from these data and used as the recognition features.
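As a rough illustration of how a flexion or extension angle can be derived from skeletal keypoints, the sketch below computes the included angle at a joint from three 3D positions. It is not the study's implementation: the function name `joint_angle_deg` and the hip, knee, and ankle coordinates are hypothetical, and the vector-angle formula is simply one common way to obtain such an angle.

```python
import math

def joint_angle_deg(proximal, joint, distal):
    """Included angle in degrees at `joint`, between the segments
    joint->proximal and joint->distal (e.g. knee->hip and knee->ankle)."""
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("degenerate segment: two keypoints coincide")
    # Clamp to guard against rounding pushing the cosine outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))

# Hypothetical keypoints in metres (illustrative only, not study data).
hip = (0.0, 1.0, 0.0)
knee = (0.0, 0.5, 0.0)
ankle_straight = (0.0, 0.0, 0.0)  # shank in line with the thigh
ankle_bent = (0.5, 0.5, 0.0)      # shank perpendicular to the thigh

straight = joint_angle_deg(hip, knee, ankle_straight)
bent = joint_angle_deg(hip, knee, ankle_bent)

# Flexion is often reported as the deviation from a straight limb.
knee_flexion = 180.0 - bent
```

With this convention a fully extended knee gives an included angle of 180 degrees, and a larger flexion value means a more bent knee.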

Because participants completed at least one gait cycle within 3 seconds at the 0.1 m/s treadmill speed, a 3-second time window was used to segment the recorded data into datasets. The study produced a total of 2100 feature sets labeled as normal gait, pelvic obliquity gait, or knee hyperextension gait. MATLAB was used for data segmentation.
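The segmentation step can be sketched as follows. The study performed it in MATLAB; this Python version is only an illustration, and it assumes non-overlapping windows and a 30 frames-per-second recording rate, neither of which is stated in the article. The function name `segment_windows` and the placeholder feature rows are hypothetical.

```python
def segment_windows(frames, fps, window_s=3.0):
    """Split per-frame feature rows into non-overlapping fixed-length windows."""
    size = int(round(fps * window_s))
    if size <= 0:
        raise ValueError("window must contain at least one frame")
    # Drop a trailing partial window so every segment has the same length.
    return [frames[i:i + size] for i in range(0, len(frames) - size + 1, size)]

FPS = 30  # assumed frame rate, not given in the article

# One-minute trial of placeholder feature rows (three hypothetical angles per frame).
trial = [[0.0, 0.0, 0.0] for _ in range(60 * FPS)]

windows = segment_windows(trial, FPS)
windows_per_trial = len(windows)  # 60 s / 3 s = 20 windows

# 5 repetitions x 3 gait patterns x 7 participants, as described in the procedure.
total_feature_sets = windows_per_trial * 5 * 3 * 7
```

Under these assumptions each one-minute trial yields 20 windows, and 20 × 5 × 3 × 7 = 2100, which matches the number of feature sets reported.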

Machine learning classifiers

Four classifiers were used to classify the gait data: convolutional neural network (CNN), long short-term memory (LSTM) network, support vector machine (SVM), and K-nearest neighbors (KNN).

- CNN: The CNN used two two-dimensional convolutional layers.

- SVM: An SVM model was applied, with Bayesian optimization used to tune it. An SVM maps samples into a high-dimensional feature space and assigns each label according to the side of the decision boundary on which the sample falls.

- KNN: A KNN model with the Euclidean distance metric was used, tuned automatically by Bayesian optimization.

- LSTM: A bidirectional LSTM (Bi-LSTM) network was used for classification. The model consisted of two Bi-LSTM layers and one fully connected layer. Bi-LSTM layers process both forward and backward input information.
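To make the KNN description above concrete, here is a minimal nearest-neighbor classifier using Euclidean distance. It is a sketch only: the study used an automatically optimized model tuned by Bayesian optimization, which is not reproduced here, and the value of `k`, the function name `knn_predict`, and the two-feature toy data are all hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training samples."""
    # math.dist computes the Euclidean distance between two points.
    ranked = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

# Hypothetical two-feature samples (illustrative values, not study data).
train_X = [(5, 60), (6, 62), (15, 58), (16, 61), (5, 75), (6, 78)]
train_y = ["NG", "NG", "PO", "PO", "KH", "KH"]

predicted = knn_predict(train_X, train_y, query=(15.5, 60), k=3)
```

In practice the features would be the windowed lower-limb joint angles, and `k` would be chosen by the tuning procedure rather than fixed by hand.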

Results and conclusion

The study applied CNN, SVM, KNN, and LSTM classifiers to automatically classify three gait patterns. A total of 750 data samples were collected, and the dataset was split with 80% used for training and 20% for evaluation. Results showed that SVM and KNN achieved higher accuracy than CNN and LSTM. SVM achieved the highest accuracy at 94.9 ± 3.36%, followed by KNN at 94.0 ± 4.22%. CNN accuracy was 87.6 ± 7.50%, and LSTM accuracy was 83.6 ± 5.35%.
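The 80/20 split and the "mean ± standard deviation" form of the reported accuracies can be illustrated as below. This is a sketch under assumptions: the article does not say how the split was drawn or over what repetitions the spread was computed, so the random shuffle, the seed, the use of the sample standard deviation, and the per-run accuracies are all hypothetical.

```python
import random
import statistics

def split_80_20(samples, seed=0):
    """Shuffle a copy of `samples` and split it 80% / 20%."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 4 // 5  # integer arithmetic avoids float rounding
    return shuffled[:cut], shuffled[cut:]

def mean_std(values):
    """Mean and sample standard deviation of a list of accuracies."""
    return statistics.mean(values), statistics.stdev(values)

# 750 placeholder sample indices, matching the sample count in the text.
train, test = split_80_20(range(750))

# Hypothetical accuracies from repeated evaluation runs (not the study's results).
run_accuracies = [0.90, 0.95, 0.93, 0.97, 0.95]
acc_mean, acc_std = mean_std(run_accuracies)
summary = f"{100 * acc_mean:.1f} ± {100 * acc_std:.2f}%"
```

With 750 samples this split gives 600 training samples and 150 evaluation samples.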

The study demonstrates that machine learning techniques can be applied to design gait biometric systems and machine vision systems for gait pattern recognition. This approach has potential for remotely assessing older patients and for supporting clinical decisions on management, follow-up, and treatment.

ALLPCB