Overview

Since OpenAI released ChatGPT, large language models (LLMs) based on the Transformer architecture have attracted worldwide attention and delivered strong results. Their language understanding and generation capabilities are reshaping how artificial intelligence is perceived and applied. However, the high cost of LLM inference remains a major obstacle to deployment, which has made inference performance optimization an active research area.

LLM inference requires substantial computing resources and is difficult to run efficiently. Optimizing inference reduces hardware costs and improves response times. It speeds up natural language understanding, translation, and text generation tasks, which improves user experience, accelerates research, and supports industry applications.

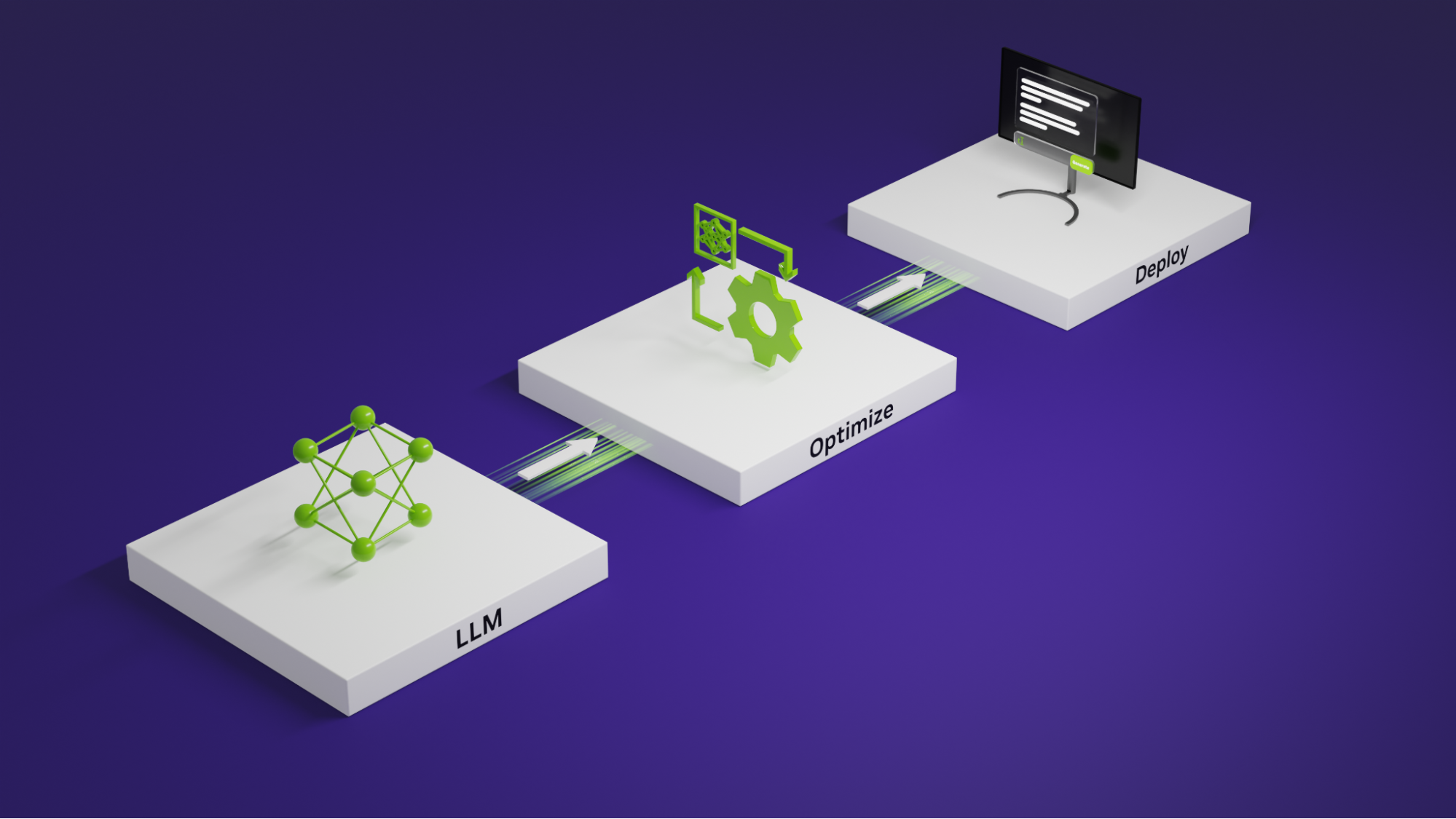

This article presents common performance optimization techniques and their characteristics from a system-level inference service perspective, and analyzes future trends and directions in LLM inference optimization.

Optimization techniques

Throughput and latency

LLM inference services focus on two metrics: throughput and latency.

- Throughput: From the system's perspective, throughput is the number of tokens the system can process per unit time. It is computed as the number of tokens processed divided by the elapsed time, where the token count is typically the sum of the input and output sequence lengths. Higher throughput indicates more efficient resource utilization and lower system cost.

- Latency: From the user's perspective, latency is the average time the user waits per token. It is computed as the time from issuing a request to receiving the complete response, divided by the number of generated tokens. In general, the experience feels relatively smooth when latency does not exceed 50 ms/token.
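The two definitions above can be written as a pair of small functions. This is a minimal sketch: the function and parameter names are illustrative assumptions, not part of any inference framework, and the numbers in the usage lines describe a hypothetical request.

```python
def throughput_tokens_per_s(input_tokens: int, output_tokens: int, elapsed_s: float) -> float:
    """System view: tokens processed (input + output) per second."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return (input_tokens + output_tokens) / elapsed_s


def latency_ms_per_token(request_time_s: float, output_tokens: int) -> float:
    """User view: end-to-end request time divided by generated tokens, in ms."""
    if output_tokens <= 0:
        raise ValueError("output_tokens must be positive")
    return request_time_s * 1000.0 / output_tokens


if __name__ == "__main__":
    # Hypothetical request: 100 prompt tokens, 50 generated tokens, 2 s end to end.
    print(throughput_tokens_per_s(100, 50, 2.0))  # tokens per second
    print(latency_ms_per_token(2.0, 50))          # ms per token
```

Note that throughput counts both input and output tokens, while latency divides only by the generated tokens, so the two numbers are not simple reciprocals of each other.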

Tradeoffs between throughput and latency

Throughput relates to system cost, while latency relates to user experience. These two metrics often conflict and require tradeoffs. For example, increasing the batch size typically raises throughput, because requests that would otherwise be served one after another are processed in parallel. However, a larger batch can increase per-user latency: multiple requests are combined, so each user may wait longer for a result.
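The tradeoff can be illustrated with a toy cost model. The sketch below assumes that one decoding step for a batch costs a fixed overhead plus a small amount per sequence; the constants `fixed_ms` and `per_seq_ms` are made-up values for illustration, not measurements, and only generated tokens are counted to keep the model simple.

```python
def decode_step_ms(batch_size: int, fixed_ms: float = 20.0, per_seq_ms: float = 1.0) -> float:
    """Assumed cost of one decoding step for the whole batch, in ms (toy model)."""
    return fixed_ms + per_seq_ms * batch_size


def batch_metrics(batch_size: int) -> tuple[float, float]:
    """Return (throughput in tokens/s, per-user latency in ms/token).

    Each step emits one token per sequence, so every user waits one full
    step per token while the system produces batch_size tokens per step.
    """
    step_ms = decode_step_ms(batch_size)
    throughput = batch_size * 1000.0 / step_ms
    return throughput, step_ms


if __name__ == "__main__":
    for b in (1, 8, 32, 64):
        tput, lat = batch_metrics(b)
        flag = "ok" if lat <= 50.0 else "above 50 ms/token"
        print(f"batch={b:3d}  throughput={tput:7.1f} tok/s  latency={lat:5.1f} ms/token  {flag}")
```

Under these assumed constants, both throughput and per-token latency rise as the batch grows, and at some batch size latency crosses the 50 ms/token threshold mentioned earlier. Real systems have more complex cost curves, but the direction of the tradeoff is the same.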

LLM inference optimization aims to increase throughput and reduce latency. The techniques can be grouped into six areas, which are described in detail below.
