Overview

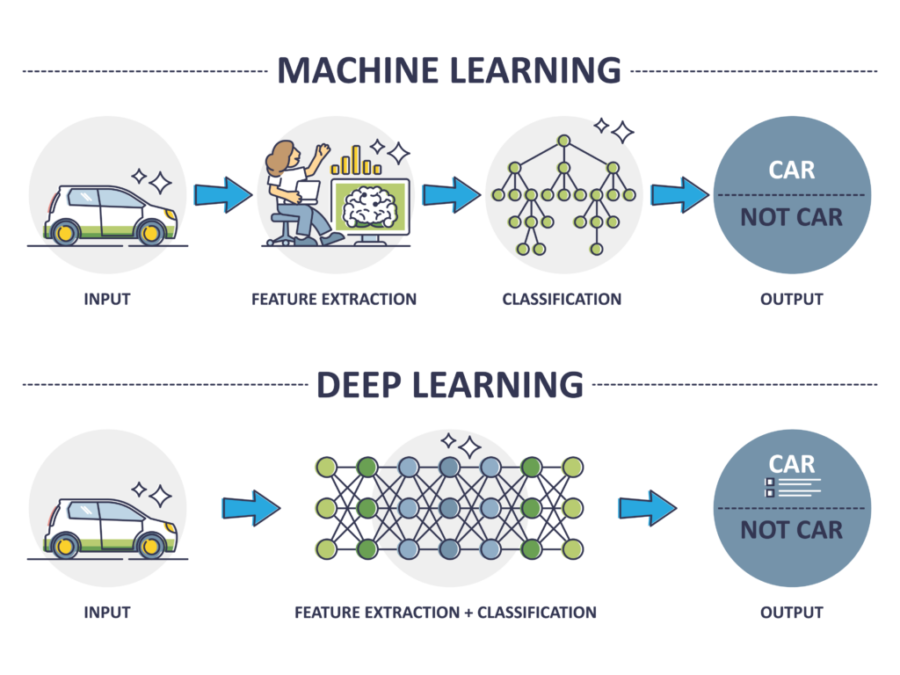

Scaling up model size has been a major driver of progress across artificial intelligence, including language modeling, image generation, and video. For visual understanding, larger models trained on sufficient data have typically shown improvements, which has made developing multi-billion-parameter models a common strategy for stronger visual representations and improved downstream performance.

Research Question

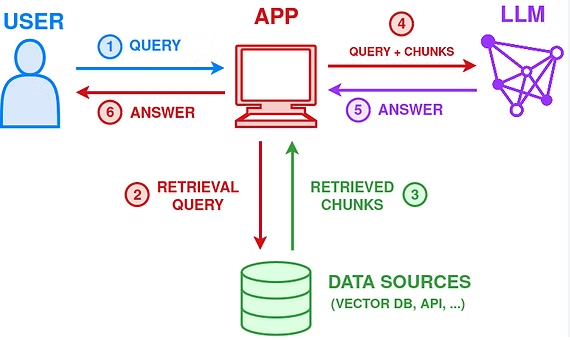

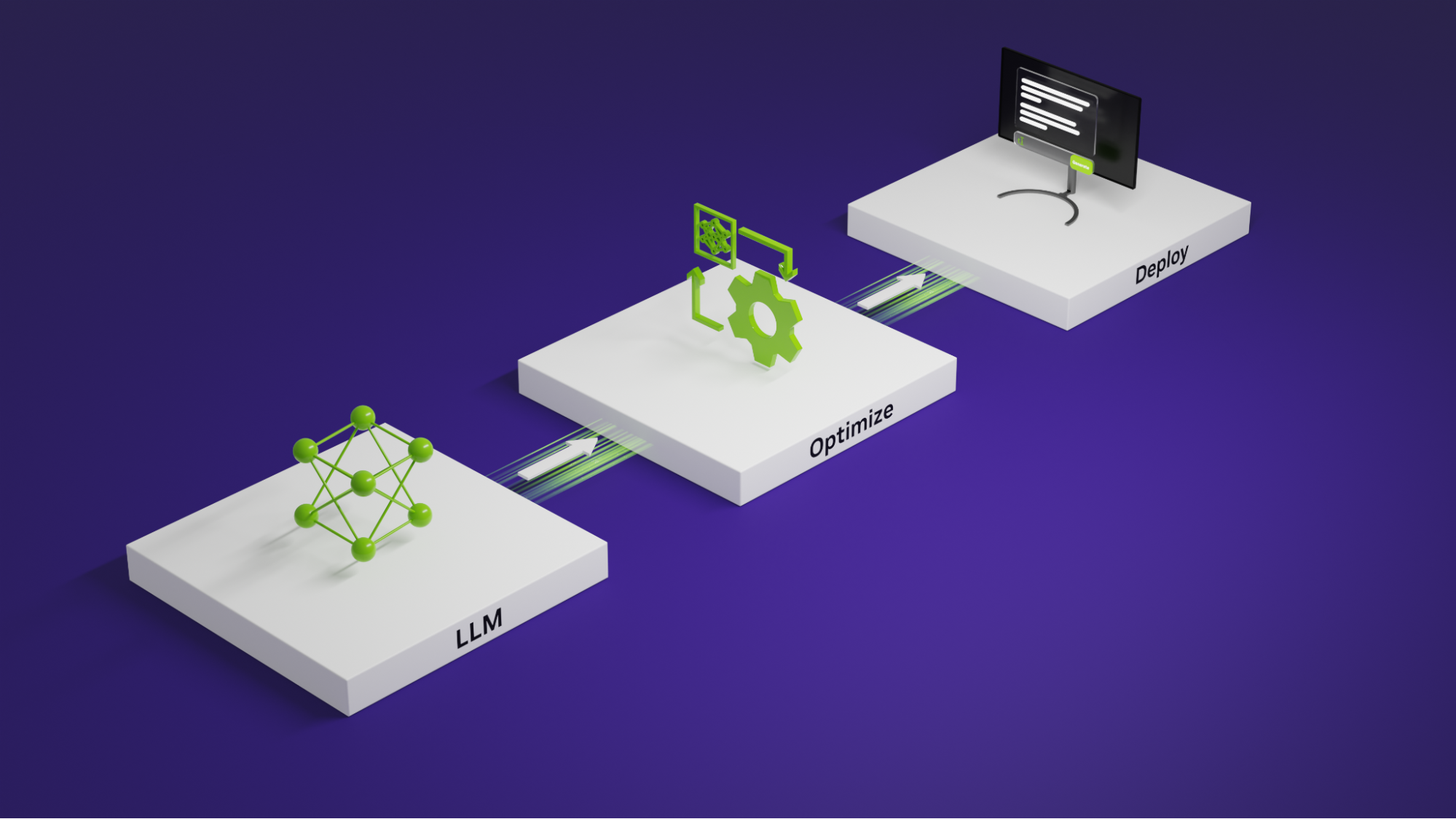

This work reexamines whether better visual understanding necessarily requires larger models. Instead of increasing model size, the authors propose scaling along the image-scale dimension, an approach they call Scaling on Scales (S2). S2 runs a pretrained, frozen smaller vision model (for example, ViT-B or ViT-L) at multiple image scales to produce multi-scale representations.

Method: Scale Scaling (S2)

Starting from a model pretrained at a single image resolution, each input image is resampled to multiple scales. At the larger scales, the image is partitioned into sub-images of the original training resolution (224^2), and each sub-image is processed independently by the same frozen model. The features from each scale are then aggregated and concatenated with the original single-scale representation to form a multi-scale descriptor.
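The procedure above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: `toy_frozen_model` is a hypothetical stand-in for a frozen backbone, the 16-pixel patch size and nearest-neighbor upsampling are chosen for simplicity, and average pooling back to the base feature-map size is one plausible way to do the aggregation step the text describes.

```python
import numpy as np

BASE = 224   # training resolution named in the text
PATCH = 16   # assumed patch size, so each 224x224 tile yields a 14x14 grid

def toy_frozen_model(tile, weight):
    """Hypothetical stand-in for a frozen backbone: (224, 224, 3) -> (14, 14, C)."""
    g = BASE // PATCH
    # Average each 16x16 pixel patch, then apply a fixed linear projection.
    patches = tile.reshape(g, PATCH, g, PATCH, 3).mean(axis=(1, 3))
    return patches @ weight

def upsample_nearest(image, factor):
    """Integer-factor nearest-neighbor upsampling (assumed for simplicity)."""
    return image.repeat(factor, axis=0).repeat(factor, axis=1)

def s2_features(image, weight, scales=(1, 2)):
    """Build a multi-scale descriptor from one frozen single-scale model."""
    g = BASE // PATCH
    per_scale = []
    for s in scales:
        big = upsample_nearest(image, s)            # (224*s, 224*s, 3)
        rows = []
        for i in range(s):
            row = []
            for j in range(s):
                # Each sub-image has the original training resolution.
                tile = big[i * BASE:(i + 1) * BASE, j * BASE:(j + 1) * BASE]
                row.append(toy_frozen_model(tile, weight))
            rows.append(np.concatenate(row, axis=1))
        fmap = np.concatenate(rows, axis=0)         # stitched (14*s, 14*s, C)
        c = fmap.shape[-1]
        # Aggregate: average-pool back to the base 14x14 grid.
        pooled = fmap.reshape(g, s, g, s, c).mean(axis=(1, 3))
        per_scale.append(pooled)
    # Concatenate all scales along the channel dimension.
    return np.concatenate(per_scale, axis=-1)

rng = np.random.default_rng(0)
image = rng.random((BASE, BASE, 3))
weight = rng.standard_normal((3, 8))   # fixed ("frozen") projection, C = 8
feats = s2_features(image, weight)
print(feats.shape)
```

With two scales and an 8-channel toy backbone, the descriptor keeps the 14x14 spatial grid and doubles the channel count. A real backbone would replace `toy_frozen_model`; the tiling, stitching, pooling, and concatenation steps would stay the same in this sketch.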

Key Findings

Surprisingly, across several pretrained visual representations (ViT, DINOv2, OpenCLIP, MVP), smaller models augmented with S2 consistently outperform larger models on tasks such as classification, semantic segmentation, depth estimation, MLLM benchmarks, and robotic manipulation. These smaller S2 models use far fewer parameters (0.28x to 0.07x those of the larger models) at comparable GFLOPs. Notably, by scaling image resolution up to 1008^2, S2 achieves state-of-the-art performance on detailed visual understanding for MLLMs on the V* benchmark, surpassing both open-source and commercial MLLMs such as Gemini Pro and GPT-4V.

Further Analysis

The authors also investigate when S2 is preferable to scaling model size. Although smaller S2 models outperform larger models in many downstream settings, larger models still generalize better on hard examples. This raises the question of whether smaller models can match the generalization of larger ones. The authors find that features from larger models can be closely approximated by a single linear transformation applied to the multi-scale features of smaller models, which implies that smaller models have similar representational capacity. They hypothesize that the weaker generalization of smaller models stems from pretraining at a single image scale. Experiments with ImageNet-21k pretraining on ViT show that pretraining with S2 improves the generalization of smaller models, allowing them to match or exceed the performance of larger models.
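The linear-approximation claim can be made concrete with a small least-squares sketch. Everything here is assumed for illustration: the features are synthetic, the dimensions are arbitrary, and the held-out relative error is just one reasonable way to score the fit, not necessarily the metric the authors use.

```python
import numpy as np

def fit_linear_map(small_feats, large_feats):
    """Least-squares W such that small_feats @ W approximates large_feats."""
    w, *_ = np.linalg.lstsq(small_feats, large_feats, rcond=None)
    return w

def relative_error(small_feats, large_feats, w):
    """Frobenius norm of the residual, relative to the target's norm."""
    residual = small_feats @ w - large_feats
    return np.linalg.norm(residual) / np.linalg.norm(large_feats)

# Synthetic stand-ins: "multi-scale small-model" and "large-model" features.
rng = np.random.default_rng(0)
n, d_small, d_large = 500, 32, 48
small = rng.standard_normal((n, d_small))
true_w = rng.standard_normal((d_small, d_large))
large = small @ true_w + 0.01 * rng.standard_normal((n, d_large))

# Fit on one split, then score on held-out samples.
w = fit_linear_map(small[:400], large[:400])
err = relative_error(small[400:], large[400:], w)
print(f"held-out relative error: {err:.4f}")
```

A low held-out error means the target features are (nearly) a linear function of the source features. With real features, `small` and `large` would hold the two models' outputs on the same images.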

Summary

Scaling image resolution and aggregating multi-scale representations from pretrained, frozen smaller vision models can provide strong visual representations that rival or exceed those of much larger models on a range of tasks. Pretraining with S2 further narrows or eliminates the generalization gap in many settings.

Scaling the size of vision models has become the de facto standard for obtaining stronger visual representations. In this work, we discuss when larger vision models are no longer necessary. First, we demonstrate the power of Scaling on Scales (S2), where a pretrained and frozen smaller vision model (for example, ViT-B or ViT-L) run at multiple image scales can outperform larger models (for example, ViT-H or ViT-G) on classification, segmentation, depth estimation, multimodal LLM benchmarks, and robotic manipulation. Notably, S2 achieves state-of-the-art performance for detailed visual understanding on the V* benchmark for MLLMs, surpassing models such as GPT-4V. We study the conditions under which S2 is the preferred scaling method relative to model size. Although larger models generalize better on difficult examples, we show that features from large vision models can be well approximated by the multi-scale features of smaller models. This suggests that much, if not all, of the representation learned by current large pretrained models can be obtained from multi-scale smaller models. Our results indicate that multi-scale smaller models have comparable learning capacity to larger models, and pretraining small models with S2 can match or even exceed the advantages of larger models.

ALLPCB
