This article demonstrates how to deploy the ultra-lightweight inference framework TinyMaix on the MYIR MYD-LR3576 development platform.

TinyMaix is an ultra-lightweight neural network inference library (a TinyML library) designed for microcontrollers. It lets lightweight deep learning models run even on resource-constrained MCUs.

Key Features

- Core code is fewer than 400 lines (tm_layers.c, tm_model.c, arch_cpu.h)

- .text segment is under 3KB

- Low memory consumption

- Supports INT8, FP32, and FP16 models, with experimental support for FP8. It also supports model conversion from Keras H5 or TFLite.

- Provides architecture-specific instruction optimizations, including for ARM SIMD/NEON/MVEI, RV32P, and RV64V.

- Simple API: using a model only requires loading it and running it.

- Supports fully static memory allocation (no malloc required).

- Can also be deployed on MPU platforms.
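"Fully static memory allocation" means that all working memory is reserved at compile time instead of being requested from the heap. The C sketch below illustrates the general technique with a bump allocator over one global buffer. It is not TinyMaix's own code: `arena_alloc`, `arena_reset`, and the 96 KB `ARENA_SIZE` (chosen to match the buffer size the mbnet example reports later in this article) are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical arena size; a real deployment needs at least the buffer
 * size reported for its model (e.g. 96.0 KB for the mbnet example). */
#define ARENA_SIZE (96u * 1024u)

/* One statically allocated block in .bss, 16-byte aligned; no malloc. */
static _Alignas(16) uint8_t arena[ARENA_SIZE];
static size_t arena_used = 0;

/* Bump allocator: returns an aligned slice of the static arena, or NULL
 * when it is exhausted. `align` must be a power of two no larger than 16. */
void *arena_alloc(size_t size, size_t align)
{
    if (align == 0 || align > 16 || (align & (align - 1)) != 0) {
        return NULL;
    }
    size_t start = (arena_used + (align - 1)) & ~(align - 1);
    if (start > ARENA_SIZE || size > ARENA_SIZE - start) {
        return NULL;
    }
    arena_used = start + size;
    return &arena[start];
}

/* Releases everything at once, e.g. before loading another model. */
void arena_reset(void)
{
    arena_used = 0;
}
```

Because the arena's size is fixed at build time, running out of memory shows up as a NULL return during development rather than as heap fragmentation on the device.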

Compared with the RK3588, the RK3576 is a more cost-effective platform. It replaces the RK3588's four Cortex-A76 big cores with Cortex-A72 cores and scales the GPU down from a Mali-G610 MC4 to a Mali-G52 MC3. Some video encoding/decoding capabilities and interfaces have also been cut back, but the NPU keeps the same 6 TOPS of performance.

To run TinyMaix, only a few simple steps are required.

First, make sure cmake, gcc, and make are installed on your system. Then clone the repository and build the MNIST example:

git clone https://github.com/sipeed/TinyMaix.git

cd TinyMaix/examples/mnist

mkdir build

cd build

cmake ..

make

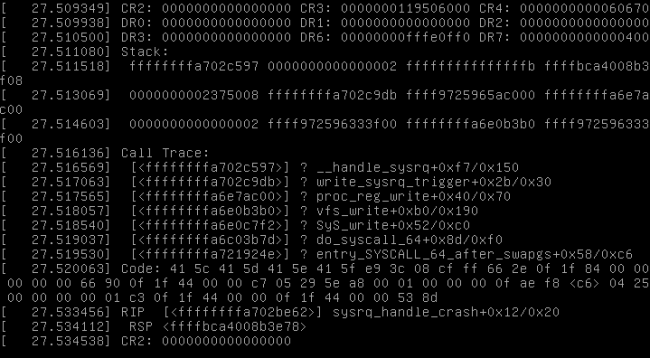

./mnist

The run completes in just 0.14 ms, showing that the RK3576 handles a model of this size with ample headroom.
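The article does not say how the 0.14 ms figure was measured. To reproduce such a number yourself, one option is to time many back-to-back inferences with a monotonic clock and average them. The sketch below assumes a POSIX system such as the Linux image on the MYD-LR3576; `elapsed_ms` and `mean_latency_ms` are hypothetical helpers, and `fn` stands in for whatever call runs one inference (it is not part of TinyMaix's API).

```c
#define _POSIX_C_SOURCE 199309L
#include <time.h>

/* Milliseconds between two CLOCK_MONOTONIC timestamps. */
double elapsed_ms(struct timespec start, struct timespec end)
{
    return (double)(end.tv_sec - start.tv_sec) * 1e3 +
           (double)(end.tv_nsec - start.tv_nsec) / 1e6;
}

/* Calls fn(ctx) `iters` times and returns the mean latency in ms.
 * `fn` is a placeholder for one inference call (hypothetical). */
double mean_latency_ms(void (*fn)(void *), void *ctx, int iters)
{
    if (iters <= 0) {
        return 0.0;
    }
    struct timespec t0, t1;
    clock_gettime(CLOCK_MONOTONIC, &t0);
    for (int i = 0; i < iters; i++) {
        fn(ctx);
    }
    clock_gettime(CLOCK_MONOTONIC, &t1);
    return elapsed_ms(t0, t1) / iters;
}
```

Averaging over many iterations smooths out scheduler and timer noise, which matters when a single run lasts only a fraction of a millisecond. CLOCK_MONOTONIC is used because, unlike the wall clock, it cannot jump if the system time is adjusted mid-measurement.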

The mbnet example runs a simple image classification model intended for mobile devices: MobileNetV1 with a 0.25 width multiplier, which takes a 128x128x3 RGB image as input and outputs predictions for 1000 classes.
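Two small calculations follow from this description: how many bytes one input image occupies, and how to turn 1000 class scores into a single prediction. The C sketch below shows both. It assumes the scores are available as a float array (an INT8 model's output would first need dequantizing), and the names `mbnet_input_bytes` and `top1_class` are hypothetical, not TinyMaix functions.

```c
#include <stddef.h>

/* Input shape and class count as described for the mbnet example. */
enum { MBNET_H = 128, MBNET_W = 128, MBNET_C = 3, MBNET_CLASSES = 1000 };

/* Bytes for one 8-bit RGB input image: 128 * 128 * 3 = 49152. */
size_t mbnet_input_bytes(void)
{
    return (size_t)MBNET_H * MBNET_W * MBNET_C;
}

/* Index of the highest score, i.e. the top-1 predicted class.
 * Ties resolve to the lowest index; returns -1 for an empty array. */
int top1_class(const float *scores, int n)
{
    if (n <= 0) {
        return -1;
    }
    int best = 0;
    for (int i = 1; i < n; i++) {
        if (scores[i] > scores[best]) {
            best = i;
        }
    }
    return best;
}
```

For the real model you would call `top1_class(scores, MBNET_CLASSES)` and map the index to a label using whatever class list the model was trained with.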

To build and run it, start from the TinyMaix repository root:

cd examples/mbnet

mkdir build

cd build

cmake ..

make

./mbnet

For the 1000-class classification, the example reports the following resource usage:

param 481.9 KB, ops 13.58 MOPS, buffer 96.0 KB
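These figures support some quick back-of-the-envelope arithmetic. Assuming the parameters and the runtime buffer are separate allocations, the model needs roughly 481.9 + 96.0 = 577.9 KB in total; and interpreting "ops" as operations per inference, a measured latency converts to an effective throughput. The article does not report mbnet's latency, so the 1 ms used in the check below is purely hypothetical, as are the helper names.

```c
/* Approximate memory footprint in KB: weights plus runtime buffer.
 * Assumes the two are separate allocations. */
double total_footprint_kb(double param_kb, double buffer_kb)
{
    return param_kb + buffer_kb;
}

/* Effective throughput in GOPS for a model needing `mops` million
 * operations per inference at `latency_ms` per inference:
 * (mops * 1e6 ops) / (latency_ms * 1e-3 s) / 1e9 = mops / latency_ms. */
double effective_gops(double mops, double latency_ms)
{
    return mops / latency_ms;
}
```

For example, if one mbnet inference took 1 ms, 13.58 MOPS per inference would correspond to about 13.58 GOPS of sustained CPU throughput.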

The VWW (Visual Wake Words) example works similarly; its main extra step is converting the image into an array that serves as the network's input. The handwritten digit recognition (MNIST) example is just as simple to run:

./mnist
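Converting an image into the network's input array usually amounts to copying the raw pixel bytes and mapping them to the numeric range the model was trained on. The C sketch below shows two common conventions; which one a given model needs depends on how it was trained, so both are assumptions here. It also does not reproduce TinyMaix's own preprocessing helpers, and `pixels_to_int8` and `pixels_to_float` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* INT8 input: shift 8-bit pixels (e.g. interleaved RGB in HWC order)
 * by 128 so 0..255 maps to -128..127. Assumes the quantized model
 * expects a zero point of 128. */
void pixels_to_int8(const uint8_t *pixels, int8_t *out, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        out[i] = (int8_t)((int)pixels[i] - 128);
    }
}

/* FP32 input: scale 8-bit pixels into [0, 1]. */
void pixels_to_float(const uint8_t *pixels, float *out, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        out[i] = pixels[i] / 255.0f;
    }
}
```

For a 128x128 RGB image, `count` would be 128 * 128 * 3 = 49152 elements, with the pixel data laid out row by row and channel-interleaved.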

Because TinyMaix is compact and self-contained, it is easy to port to Linux and other platforms, and on a high-performance SoC such as the RK3576 it runs with very low latency.

ALLPCB
