While many articles discuss the advantages of software decoupling, few detail specific implementation methods. The general approach to decoupling in embedded software development is to first layer the architecture and then decouple sub-modules. This article presents some of these methods.

1. System Layering

For embedded software development, the first step toward achieving decoupling is a well-designed layered architecture. Complex projects with ample resources must adopt layered design and modular architecture to meet business logic requirements, ensure system scalability, and maintain long-term serviceability.

Approach

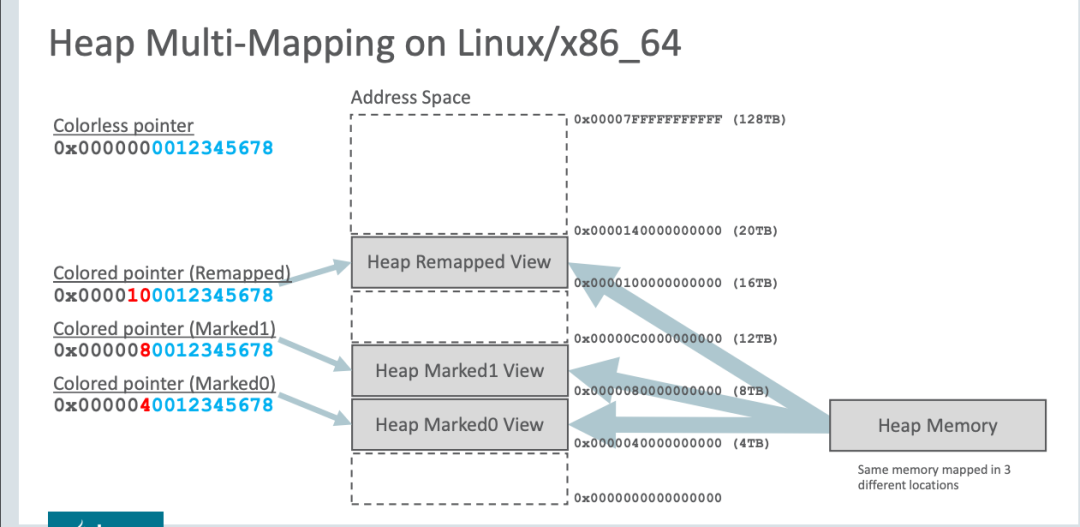

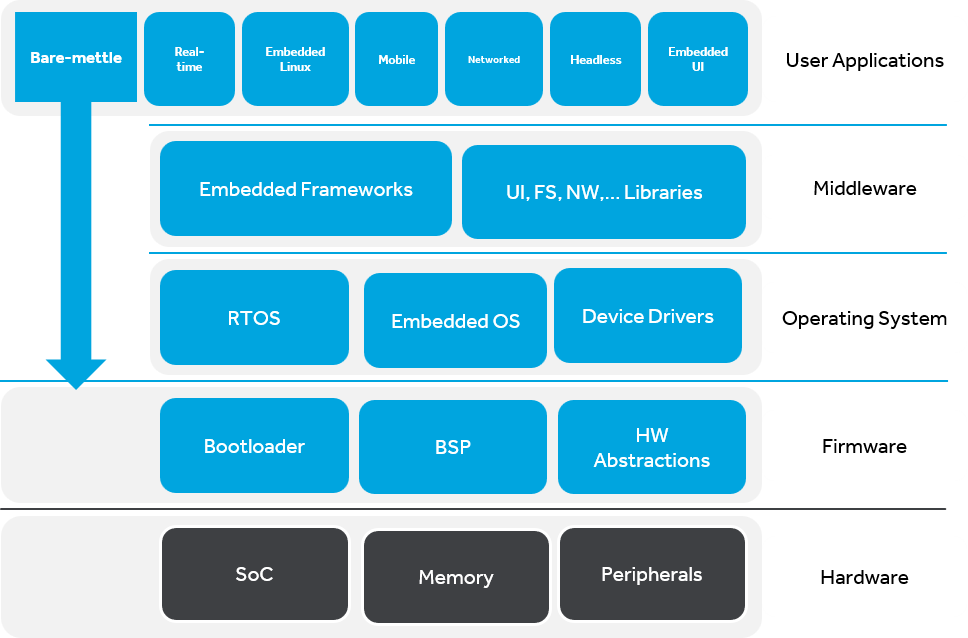

The most common layered architecture in embedded software, from top to bottom, is:

- Application Layer: Handles business logic, user interface, etc.

- Middleware Layer (Common Components): Contains general-purpose modules.

- Operating System Layer: Includes the RTOS kernel.

- Hardware Abstraction Layer (HAL): Encapsulates low-level hardware, providing a unified hardware operation interface for upper layers.

- Hardware Layer: Consists of physical components and peripheral resources.

To achieve separation of concerns, layering follows specific rules:

1. Each layer has a specific role and responsibility. For example, the application layer implements business logic without concerning itself with specific hardware interfaces. Clear responsibilities keep each layer focused and make it easier to develop and maintain.

2. Requests in a layered architecture must pass through each layer sequentially; skipping layers is not allowed.

3. Changes within one layer should ideally not affect other layers. When a significant change is unavoidable, it must be communicated to the owners of the affected layers, or enforced by technical means (for example, a deliberate compile-time or startup failure) so that the impact is discovered and resolved during development.
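One technical measure of the kind rule 3 describes is a compile-time interface check. The sketch below assumes the HAL publishes a version macro and the application records the version it was written against. All names here (HAL_API_VERSION_MAJOR, APP_REQUIRED_HAL_MAJOR, hal_api_compatible) are hypothetical, and _Static_assert requires C11 or later.

```c
#include <stdbool.h>

/* Would normally live in the HAL's public header (hypothetical name). */
#define HAL_API_VERSION_MAJOR 2

/* Would normally live in the application's configuration (hypothetical name). */
#define APP_REQUIRED_HAL_MAJOR 2

/* The build stops here if the HAL interface changed incompatibly. */
_Static_assert(HAL_API_VERSION_MAJOR == APP_REQUIRED_HAL_MAJOR,
               "HAL major version mismatch: update the application layer");

/* Optional runtime counterpart, e.g. when the HAL ships as a prebuilt library. */
bool hal_api_compatible(int hal_major_at_runtime) {
    return hal_major_at_runtime == APP_REQUIRED_HAL_MAJOR;
}
```

With this in place, an incompatible HAL update breaks the build immediately instead of surfacing as a runtime fault later.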

Software architecture design requires a clear and organized separation of concerns so that each layer can be developed and maintained independently. Each layer is a set of modules providing high-cohesion services.

Even for resource-constrained bare-metal systems on microcontrollers that use a super-loop or an interrupt-foreground/background architecture (where interrupts handle urgent tasks and the main loop handles regular tasks), it is advisable to have at least two layers: a Hardware Abstraction Layer and a Business Layer.
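A minimal sketch of such a two-layer split follows. The hal_* functions and the threshold are assumptions made for illustration, and the hardware registers are simulated with plain variables so that the example also runs on a host PC.

```c
#include <stdbool.h>
#include <stdint.h>

/* ---- Hardware Abstraction Layer (hypothetical interface) ---- */
static bool     sim_led_state;   /* stands in for a GPIO output register */
static uint16_t sim_adc_value;   /* stands in for an ADC data register   */

void     hal_led_set(bool on)        { sim_led_state = on; }
bool     hal_led_get(void)           { return sim_led_state; }
uint16_t hal_adc_read(void)          { return sim_adc_value; }
void     hal_sim_set_adc(uint16_t v) { sim_adc_value = v; }  /* host-only test hook */

/* ---- Business layer: calls only hal_* functions, never registers ---- */
#define OVER_TEMP_THRESHOLD 3000u   /* assumed raw ADC threshold */

/* One iteration of the super-loop body. */
void app_step(void) {
    hal_led_set(hal_adc_read() > OVER_TEMP_THRESHOLD);
}
```

On the target, main would call app_step() inside its while (1) loop. Porting to another microcontroller then only requires new bodies for the hal_* functions.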

Application

Layering can cause a slight performance decrease because a business request must traverse multiple layers, and it adds some structural complexity. However, for most consumer electronics without strict timing requirements, these issues are not significant, which is why a layered architecture is the most common approach in embedded software. The main caveat is to keep memory consumption in check and to avoid extra overhead from overly complex inter-layer calls.

2. Sub-module Design

Layering provides macroscopic isolation, but practical development requires various design patterns to achieve loose coupling between modules. The main challenges in decoupling embedded software are the flexible and changing nature of upper-level business logic and ensuring driver compatibility when underlying hardware components are replaced.

2.1 Event-Driven Architecture

The event-driven pattern decomposes a system into "event producers" and "event consumers," decoupling components through an event queue. An event scheduler pulls events from the queue based on a scheduling policy and dispatches them to appropriate event handlers. Instead of periodically polling tasks, the system responds to external or internal events.

Approach

The event-driven pattern is often used in systems with high requirements for asynchronous responses and user interaction. It binds events to their handlers, either through a predefined relationship table (table-driven method) or by dynamically subscribing and unsubscribing. Key considerations include:

1. Design an event filtering mechanism to avoid unnecessary or duplicate events. Reducing event sources fundamentally lessens the system load.

2. For high-frequency events, consider merging events or deferring processing so that only the final state is handled, similar to debouncing an input.

3. The efficiency of event handling is critical. High-priority events may require special handling, such as a separate queue for prioritized processing.

4. For long-running handlers, consider breaking them down into multiple steps to avoid timeouts.

5. In resource-constrained systems, use a static event pool instead of dynamic allocation. For systems with sufficient resources, an RTOS kernel queue or a custom circular queue can be used.
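Point 5 can be sketched as a statically allocated circular queue. This is an illustrative sketch, not a reference implementation: the capacity, the function names eventQueuePush and eventQueuePop, and the single-context usage are all assumptions.

```c
#include <stdbool.h>
#include <stddef.h>

#define EVENT_QUEUE_SIZE 8u   /* assumed capacity; one slot always stays unused */

typedef struct {
    int   eventType;
    void *eventData;
} Event;

static Event  queue[EVENT_QUEUE_SIZE];  /* static storage, no malloc */
static size_t head;                     /* next slot to write */
static size_t tail;                     /* next slot to read  */

/* Returns false when the queue is full so the caller can drop or retry. */
bool eventQueuePush(int eventType, void *eventData) {
    size_t next = (head + 1u) % EVENT_QUEUE_SIZE;
    if (next == tail) {
        return false;
    }
    queue[head].eventType = eventType;
    queue[head].eventData = eventData;
    head = next;
    return true;
}

/* Returns false when the queue is empty or out is NULL. */
bool eventQueuePop(Event *out) {
    if (out == NULL || head == tail) {
        return false;
    }
    *out = queue[tail];
    tail = (tail + 1u) % EVENT_QUEUE_SIZE;
    return true;
}
```

If events are pushed from an interrupt handler, access to the indices needs protection (for example, briefly disabling interrupts around the update); that is omitted here for brevity.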

Below is a conceptual implementation:

// Define event type

typedef struct {

int eventType;

void* eventData;

} Event;

// Define event handler function

typedef void (*EventHandler)(Event*);

// Register event handler

void registerEventHandler(int eventType, EventHandler handler);

// Unregister event handler

void unregisterEventHandler(int eventType, EventHandler handler);

// Publish event

void publishEvent(int eventType, void* eventData);

// Example function to handle an event

void eventHandle_1(Event* event) {

// Process the event

}

void eventHandle_2(Event* event) {

// Process the event

}

int test(void) {

//...

// Register event handler functions

registerEventHandler(EVENT_1, eventHandle_1);

registerEventHandler(EVENT_2, eventHandle_2);

// Publish events

publishEvent(EVENT_1, eventData_1);

publishEvent(EVENT_2, eventData_2);

//...

return 0;

}

In this example, registerEventHandler registers handler functions, and publishEvent publishes events. This approach decouples event handling logic from event publishing logic, allowing them to be developed and tested independently.
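The declarations above leave the implementation open. One possible sketch uses a fixed-size static handler table and dispatches synchronously inside publishEvent. The limits MAX_EVENT_TYPES and MAX_HANDLERS_PER_EVENT, the assumption that event types are small non-negative integers, and the countingHandler demo function are all introduced here for illustration only.

```c
#include <stddef.h>

#define MAX_EVENT_TYPES        4   /* assumed: event types are 0..3 */
#define MAX_HANDLERS_PER_EVENT 2   /* assumed upper bound per event */

typedef struct {
    int   eventType;
    void *eventData;
} Event;

typedef void (*EventHandler)(Event *);

static EventHandler handlers[MAX_EVENT_TYPES][MAX_HANDLERS_PER_EVENT];

static int validType(int eventType) {
    return eventType >= 0 && eventType < MAX_EVENT_TYPES;
}

void registerEventHandler(int eventType, EventHandler handler) {
    if (!validType(eventType) || handler == NULL) {
        return;
    }
    for (int i = 0; i < MAX_HANDLERS_PER_EVENT; i++) {
        if (handlers[eventType][i] == handler) {
            return;                             /* already registered */
        }
    }
    for (int i = 0; i < MAX_HANDLERS_PER_EVENT; i++) {
        if (handlers[eventType][i] == NULL) {
            handlers[eventType][i] = handler;   /* take the first free slot */
            return;
        }
    }
    /* Table full: this sketch silently drops the registration. */
}

void unregisterEventHandler(int eventType, EventHandler handler) {
    if (!validType(eventType)) {
        return;
    }
    for (int i = 0; i < MAX_HANDLERS_PER_EVENT; i++) {
        if (handlers[eventType][i] == handler) {
            handlers[eventType][i] = NULL;
        }
    }
}

/* Synchronous dispatch: every registered handler runs before this returns. */
void publishEvent(int eventType, void *eventData) {
    if (!validType(eventType)) {
        return;
    }
    Event event = { eventType, eventData };
    for (int i = 0; i < MAX_HANDLERS_PER_EVENT; i++) {
        if (handlers[eventType][i] != NULL) {
            handlers[eventType][i](&event);
        }
    }
}

/* Demonstration handler: counts how often it was invoked. */
static int callCount;
static void countingHandler(Event *event) {
    (void)event;
    callCount++;
}
```

A queued variant would store the event in publishEvent and let a scheduler invoke the handlers later; the registration side would stay the same.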

If the event-handler combinations are fixed, a table-driven approach can be used:

typedef struct {

int event; // Event

EventHandler event_handle; // Corresponding handler (EventHandler type defined above)

} event_table_struct;

static const event_table_struct event_table[] = {

{EVENT_1, eventHandle_1},

{EVENT_2, eventHandle_2},

};
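With such a table, dispatching reduces to a linear lookup. The following self-contained sketch repeats the table using the EventHandler type from the earlier example. The dispatchEvent function, the enum values, and the lastHandled variable are assumptions added for illustration.

```c
#include <stddef.h>

enum { EVENT_1 = 1, EVENT_2 = 2 };   /* assumed event identifiers */

typedef struct {
    int   eventType;
    void *eventData;
} Event;

typedef void (*EventHandler)(Event *);

static int lastHandled;   /* demonstration only: records the last handled event */

static void eventHandle_1(Event *event) { lastHandled = event->eventType; }
static void eventHandle_2(Event *event) { lastHandled = event->eventType; }

typedef struct {
    int          event;          /* Event */
    EventHandler event_handle;   /* Corresponding handler */
} event_table_struct;

static const event_table_struct event_table[] = {
    { EVENT_1, eventHandle_1 },
    { EVENT_2, eventHandle_2 },
};

/* Returns 1 if a handler was found and called, 0 otherwise. */
int dispatchEvent(Event *event) {
    if (event == NULL) {
        return 0;
    }
    for (size_t i = 0; i < sizeof(event_table) / sizeof(event_table[0]); i++) {
        if (event_table[i].event == event->eventType) {
            event_table[i].event_handle(event);
            return 1;
        }
    }
    return 0;
}
```

Because the table is const, it can be placed in flash, which suits devices with little RAM.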

Components communicate via events rather than direct calls, which decouples them and allows for independent development and testing. New events can be added later without affecting the overall structure.

2.2 Dataflow Architecture

For dataflow scenarios like data acquisition, preprocessing, and distribution, the Pipes and Filters pattern is suitable. This pattern decomposes the system into independent components (filters), each performing a specific data transformation or processing task. These components are connected by "pipes" to form a complete workflow. This makes the components loosely coupled, reusable, and independently maintainable.

Approach

The pipes in this architecture serve as communication channels between filters. A pipe takes input from one source and directs it to another, allowing data to be progressively transformed as it passes through a series of filters.

1. Define a standard interface for filters to ensure they all accept and produce data in the same format.

2. Create specific filters, each implementing a particular function. Consider their order and validate inputs and outputs.

3. Use a linked list or similar structure to connect the filters in sequence, forming a processing chain.

Application

Consider an IoT device providing location-based services. It receives NMEA satellite data, which is then decoded, filtered, and distributed to other modules for tasks like reporting to a server or triggering alarms for speeding or sudden acceleration.

typedef struct {

char* rawData; // Raw NMEA data

void* parsedData; // Parsed data

int flag; // Data flow flag, indicates if backend filters should process

} NmeaDataPacket;

// Receiver filter

void receiveNmeaData(NmeaDataPacket* packet) {

// Read NMEA data from the serial port

packet->rawData = readFromSerialPort();

}

// Parser filter

void decodeNmeaData(NmeaDataPacket* packet) {

if (packet->rawData == NULL) {

return;

}

// Parse NMEA string data into a struct

packet->parsedData = nmeaDecode(packet->rawData); // nmeaDecode: parser supplied by the application

}

// Filtering filter

// NMEA data filtering logic often needs expansion and adjustment

void filterNmeaData(NmeaDataPacket* packet) {

if (packet->parsedData == NULL) {

return;

}

// Filter data based on application requirements

// Note: This is a placeholder; actual logic depends on combined conditions

if (dataError) {

packet->parsedData = NULL; // Discard the data

packet->flag = xx; // Mark the reason for discarding

}

}

// Dispatcher filter

void dispatchNmeaData(NmeaDataPacket* packet) {

if (packet->parsedData == NULL) {

return;

}

// Distribute the cleaned, valid data to other modules

// The event-driven pattern can be used here

}

The filters above can be combined into a pipeline. Each filter must perform boundary checks on its input data.

// Use a linked list to connect filters in order, forming a processing pipeline

typedef struct FilterNode {

void (*filter)(NmeaDataPacket*);

struct FilterNode* next;

} FilterNode;

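// Function to add a filter to the pipeline (malloc requires <stdlib.h>)

void addFilter(FilterNode** head, void (*newFilter)(NmeaDataPacket*)) {

FilterNode* newNode = malloc(sizeof(FilterNode));

if (newNode == NULL) {

return; // Allocation failed; leave the pipeline unchanged

}

newNode->filter = newFilter;

newNode->next = NULL;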

if (*head == NULL) {

*head = newNode;

} else {

FilterNode* current = *head;

while (current->next != NULL) {

current = current->next;

}

current->next = newNode;

}

}

Usage example:

// Main program initializes the data packet and processes it through the pipeline

int track_gnss_task(void) {

FilterNode* pipeline = NULL;

// Add the four filters to the linked list to form the pipeline

addFilter(&pipeline, receiveNmeaData);

addFilter(&pipeline, decodeNmeaData);

addFilter(&pipeline, filterNmeaData);

// Extension point: New filters can be added here without modifying other code

// For example, to identify GPS drift points based on NMEA processing algorithms

addFilter(&pipeline, dispatchNmeaData);

NmeaDataPacket packet;

FilterNode* current;

while (1) {

// Continuously process the data stream

// Assumes the UART read blocks until data arrives; otherwise this becomes a busy loop

memset(&packet, 0, sizeof(NmeaDataPacket));

current = pipeline;

while (current != NULL) {

current->filter(&packet);

current = current->next;

}

}

return 0;

}

While other organizational methods exist, this approach makes data stream processing intuitive. Each component can be maintained independently, and filters can be added or removed as needed. For non-dataflow scenarios with nested processing, this pattern can become complex; event-driven or state machine patterns are generally preferred.
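When the set of filters is known at compile time, the linked list and malloc can be replaced by a constant array of function pointers, which is often preferable on small microcontrollers. The sketch below illustrates that variant with a simplified Packet type and three toy filters. None of these names come from the NMEA example; they are placeholders.

```c
#include <stddef.h>

typedef struct {
    int value;   /* stands in for the parsed data */
    int valid;   /* cleared by a filter to stop later stages */
} Packet;

typedef void (*Filter)(Packet *);

/* Each filter checks the valid flag before touching the data. */
static void scaleFilter(Packet *p)  { if (p->valid) { p->value *= 2; } }
static void rangeFilter(Packet *p)  { if (p->valid && p->value > 100) { p->valid = 0; } }
static void offsetFilter(Packet *p) { if (p->valid) { p->value += 1; } }

/* The pipeline order is fixed at compile time; no heap is involved. */
static const Filter pipeline[] = { scaleFilter, rangeFilter, offsetFilter };

void runPipeline(Packet *p) {
    if (p == NULL) {
        return;
    }
    for (size_t i = 0; i < sizeof(pipeline) / sizeof(pipeline[0]); i++) {
        pipeline[i](p);
    }
}
```

Adding a stage means adding one entry to the array. The trade-off is that the pipeline can no longer be reconfigured at runtime.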

2.3 Dependency Injection

While the previous patterns address business scenarios, dependency injection is a fundamental decoupling technique that targets logical relationships. The core idea is to move the responsibility of creating dependencies from within a component to an external entity. A module does not create its own dependencies; they are provided from the outside, which reduces coupling and allows components to be developed and tested independently.

Approach

Dependencies are passed to objects that need them, typically through constructor functions or setter methods, rather than having the objects create them internally. The caller provides a function pointer that defines the action the module will execute. This makes it easier to replace dependencies, facilitates unit testing, and improves code reusability.

// Dependency Injection demonstration

typedef struct {

void (*userFunction)(void);

} Dependency;

// Define a component

typedef struct {

Dependency* dependency;

} Component;

// Function that uses the dependency interface

void useDependency(Component* component) {

//...

if (component != NULL && component->dependency != NULL && component->dependency->userFunction != NULL) {

component->dependency->userFunction();

}

}

// Implementation of the dependency interface

void dependencyCustomerHandle(void) {

// Custom implementation logic

}

int test(void) {

// Create a dependency instance

Dependency dependency;

dependency.userFunction = dependencyCustomerHandle;

// Create the component

Component component;

component.dependency = &dependency;

// Use the component. This will execute the injected dependencyCustomerHandle.

useDependency(&component);

return 0;

}

As shown, the dependency is passed as a parameter, allowing useDependency to execute the dependencyCustomerHandle function provided by the upper layer. This keeps the framework unchanged while allowing the upper layer to inject different implementations as needed, decoupling it from the lower layer.

Here is another example showing how to control an LED on different platforms:

// LED driver module

typedef struct {

void (*on)(void);

void (*off)(void);

} led_driver_interface_t;

typedef struct {

led_driver_interface_t *driver;

} led_controller_t;

void led_controller_init(led_controller_t *ctrl, led_driver_interface_t *driver) {

if (ctrl) {

ctrl->driver = driver; // Store even a NULL driver so later calls never see an uninitialized pointer

}

}

void led_controller_turn_on(led_controller_t *ctrl) {

if (ctrl && ctrl->driver && ctrl->driver->on) {

ctrl->driver->on();

}

}

void led_controller_turn_off(led_controller_t *ctrl) {

if (ctrl && ctrl->driver && ctrl->driver->off) {

ctrl->driver->off();

}

}

The LED on/off functions are encapsulated, but their implementation is not defined; it must be provided by the caller.

static void gpio_led_on(void) {

// Control via GPIO, PWM, etc.

}

static void gpio_led_off(void) {

// Control via GPIO, PWM, etc.

}

led_driver_interface_t gpio_led_driver = {

.on = gpio_led_on,

.off = gpio_led_off,

};

int test(void) {

led_controller_t led_ctrl;

// Dependency Injection

led_controller_init(&led_ctrl, &gpio_led_driver);

// Execute actions

led_controller_turn_on(&led_ctrl);

led_controller_turn_off(&led_ctrl);

return 0;

}

If the hardware changes how the LED is controlled, only the driver supplied by the caller (here gpio_led_driver) needs to be updated or replaced. The framework remains completely unchanged. Linux follows a comparable idea by describing board-specific hardware in a device tree rather than in driver code.
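The same mechanism makes the controller testable without hardware: a mock driver can be injected in place of the GPIO one. The sketch below repeats the controller code so that it compiles on its own. The mock_* names are hypothetical, and led_controller_init here stores the driver pointer even when it is NULL so that a missing driver is detected by the later checks.

```c
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    void (*on)(void);
    void (*off)(void);
} led_driver_interface_t;

typedef struct {
    led_driver_interface_t *driver;
} led_controller_t;

void led_controller_init(led_controller_t *ctrl, led_driver_interface_t *driver) {
    if (ctrl) {
        ctrl->driver = driver;
    }
}

void led_controller_turn_on(led_controller_t *ctrl) {
    if (ctrl && ctrl->driver && ctrl->driver->on) {
        ctrl->driver->on();
    }
}

void led_controller_turn_off(led_controller_t *ctrl) {
    if (ctrl && ctrl->driver && ctrl->driver->off) {
        ctrl->driver->off();
    }
}

/* Mock driver: records calls instead of touching hardware. */
static bool mock_led_state;
static int  mock_on_calls;

static void mock_led_on(void)  { mock_led_state = true;  mock_on_calls++; }
static void mock_led_off(void) { mock_led_state = false; }

led_driver_interface_t mock_led_driver = {
    .on  = mock_led_on,
    .off = mock_led_off,
};
```

A host-side unit test can inject mock_led_driver, call the controller functions, and then inspect mock_led_state and mock_on_calls to verify the behavior.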

3. Non-Pattern Approaches

The goal of decoupling is to support flexible adjustments and minimize invasive changes when requirements change, without affecting stable versions. Besides formal design patterns, other simple and effective methods can be used depending on hardware resources and coding standards. Proper software version control is also crucial.

- Conditional Compilation and Macros: Isolate custom code using #if defined CUSTOM_FEATURE to ensure the standard version is unaffected. Macro definitions can be passed through the build system (e.g., Makefile) or defined in a centralized configuration header file.

- Injection Mechanisms: Insert hook functions into standard workflows to allow clients to inject custom logic. This is similar to a simplified version of dependency injection and is suitable for adding custom code when developing with an SDK.

// 1. Define a function pointer, initially NULL, to support interface assignment

typedef void (*pfun)(void);

pfun userFunction = NULL;

void userFunctionInit(pfun init) {

userFunction = init;

}

// 2. Upper layer calls userFunctionInit to configure it.

// 3. Insert code into the original project --start--

...

if(userFunction != NULL) {

userFunction();

}

...

// Insert code --end--

- Callback Functions: Callbacks allow one module to notify another when a specific event occurs without creating a direct dependency. They are a common means of implementing asynchronous operations and non-blocking I/O.

- Scripted or Automated Configuration: The behavior of a component and its resources can be determined by external configuration files, enabling dynamic replacement and extension. Alternatively, the software can automatically select the correct module at runtime based on specific rules (e.g., to support multiple peripherals), though this can increase code size.

- Object-Oriented Programming: Even in a procedural language like C, object-oriented concepts can be emulated to achieve higher levels of abstraction, enhancing code maintainability and extensibility. When code space is sufficient, adopting an object-oriented approach in embedded C can significantly improve code reuse.
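As an illustration of the first item in the list, the sketch below isolates a customer-specific value behind a macro. The function name computeReportIntervalSeconds and both interval values are made up for this example. CUSTOM_FEATURE is assumed to be supplied by the build system (for instance with -DCUSTOM_FEATURE) or by a central configuration header.

```c
/* CUSTOM_FEATURE is expected to come from the build system
   or a central configuration header; it is not defined here. */

int computeReportIntervalSeconds(void) {
#if defined(CUSTOM_FEATURE)
    return 10;   /* customer-specific build (assumed value) */
#else
    return 60;   /* standard product (assumed value) */
#endif
}
```

Keeping the #if inside one small function, rather than scattering it through the business logic, limits how far the customization spreads in the codebase.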

4. Conclusion

In embedded products like IoT devices, where requirements are highly fragmented, a single codebase often needs to support multiple application scenarios, making code decoupling essential.

A fundamental rule for decoupling is to clearly separate a complex module into three parts: external input, internal execution, and result output. It should interface with other modules cleanly, with simple and stable external APIs that hide internal implementation details. Combining this with mature design patterns can make coding easier.

ALLPCB
