Overview

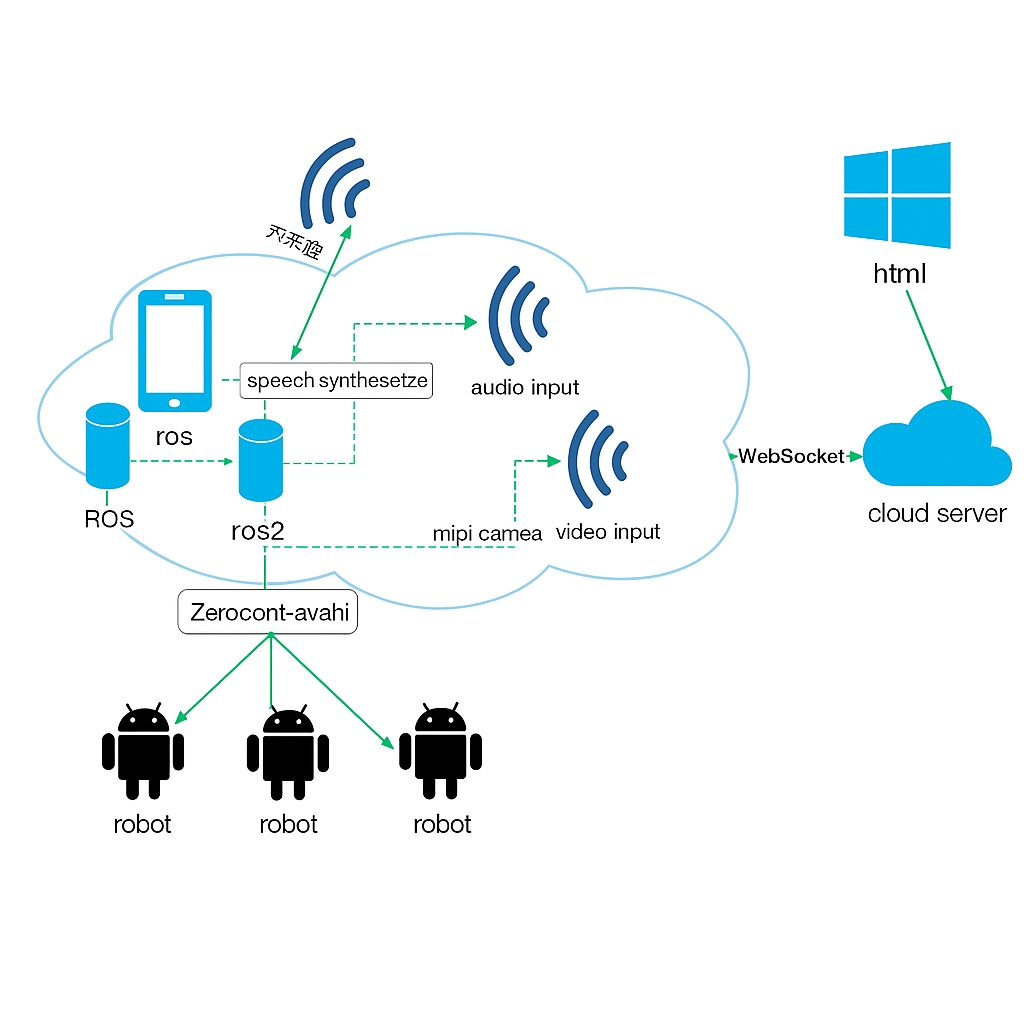

This project implements an intelligent care system for elderly and monitored individuals, built on the RDK X3 development platform. The system uses IoT technology to connect local devices and robots, Avahi for local device discovery and service sharing, and ros1_bridge to link ROS1 and ROS2 so that voice commands can control a robot. A cloud backend hosts the Vue 3 web frontend, which keeps local and cloud interaction synchronized and lays the foundation for expanded remote control capabilities.

Core features include emergency alerts, fall detection, intelligent call requests, automated medication and meal delivery, real-time monitoring, and manual robot control.

Features and Innovations

Compared with existing smart healthcare and home products, this care system offers several notable features:

- Targeted assistance for specific user groups: Designed for elderly users, the system uses IoT-based interactions that minimize operational complexity. Simple gestures such as a "V" sign can request medication or meal delivery, which is helpful for users with limited mobility and conditions like Parkinson's disease.

- Fast intelligent alerts: When a fall is detected, the system triggers an immediate alarm and synchronizes a warning to the cloud platform, notifying family members and nearby contacts to support timely assistance.

- Integrated multi-service platform: The system provides real-time monitoring, voice interaction, intelligent alerts, gesture control, and environment monitoring in one package. Video feeds and environmental data are uploaded to the cloud, allowing relatives and caregivers to monitor conditions remotely. In emergencies, the cloud can trigger alarms and enable remote robot control.

Functional Design

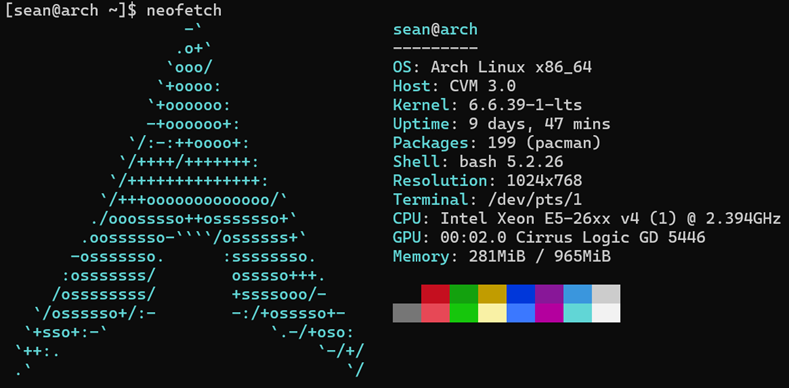

Design Environment

The solution is built on the RDK X3 processor and development board, together with a voice processing module. The RDK X3 development board is a high-performance AI compute platform that uses a heterogeneous computing architecture to run AI inference efficiently at low power. The board includes a variety of peripherals and communication interfaces for connecting external devices and cloud services, and it supports common AI frameworks such as TensorFlow and PyTorch for rapid model deployment.

Design Components

The system uses the RDK X3 platform and associated modules, with server software implemented in Node.js and a Vue 3 frontend. Key functions include:

- Emergency alarm: The camera feed is analyzed with human pose detection. When a fall is detected, the system automatically sends an alert to family members and medical staff.

- Gesture-based requests: The system recognizes specific gestures captured by the camera; a simple hand gesture can request medication, order a meal, or call for a doctor, with voice feedback confirming the request.

- Voice interaction: The system recognizes spoken questions such as "How is the weather today" and replies via synthesized voice, providing conversational assistance.

- Real-time monitoring: The web interface displays live camera feeds so caregivers can view the room remotely and receive alerts. Requests raised through gesture recognition or pose detection also appear in the interface, so they can be handled remotely.

The system integrates IoT, frontend design, and server technology. Continuous monitoring is intended to improve quality of life, and automatic transmission of patient data streamlines medical workflows and reduces caregiver workload.

System Implementation

Design Approach

The implementation combines the RDK X3 platform with IoT technologies to deliver functions such as automated medication and meal delivery, emergency calls, and fall detection.

1. MIPI camera module

The design uses an OV5647 camera sensor with 5 MP resolution and 1080p video support. The camera supports automatic exposure and white balance adjustment. Image data is transmitted over the MIPI CSI-2 interface, while the I2C bus carries sensor configuration commands.

- Gesture recognition: A deep learning hand gesture detection model is used. The processing pipeline includes image capture, preprocessing, CNN-based feature extraction, and model inference to recognize gestures such as "V" (medication), "open palm" (meal), and "OK" (call doctor). The system provides synchronized web and voice feedback.

- Fall detection: Using a hobot_falldown_detection model, the camera captures video, preprocesses frames, applies a CNN to locate the person, and analyzes pose to determine if a fall has occurred. Confirmed events trigger a web popup and voice alert.
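The gesture-to-request mapping described above can be sketched as a simple lookup. This is a minimal illustration rather than the project's code: the label strings, the `GESTURE_REQUESTS` table, the `request_for_gesture` helper, and the 0.8 confidence threshold are all hypothetical, and the labels actually emitted by the gesture model may differ.

```python
from typing import Optional

# Hypothetical label strings; the real model's output labels may differ.
GESTURE_REQUESTS = {
    "V": "medication",
    "open_palm": "meal",
    "OK": "call_doctor",
}


def request_for_gesture(label: str, score: float,
                        min_score: float = 0.8) -> Optional[str]:
    """Return the care request for a recognized gesture, or None.

    Detections scoring below min_score (an assumed threshold) are ignored,
    as are gestures that have no request assigned.
    """
    if score < min_score:
        return None
    return GESTURE_REQUESTS.get(label)
```

Keeping the mapping in a table means a new gesture can be added without touching the detection loop.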

2. Audio HAT board module

The system uses an Audio Driver HAT REV2 board supporting 4-microphone capture and stereo playback.

- Speech recognition: An HBAudioCapture class handles audio capture and forwards data to recognition algorithms, covering initialization, capture, and processing.

- Text-to-speech: A HobotTTSNode class receives text instructions, converts text to PCM audio, and drives playback. Publishing to the /tts_text topic triggers speech output.

3. Device communication module

- Host and controller: A Raspberry Pi host communicates over USB serial to an STM32 controller on the robot chassis to send motion commands.

- Robot and local endpoint: Together, the Raspberry Pi and the robot chassis form the robot. The robot and the RDK X3 find each other through Avahi services and share ROS nodes, which enables remote control.

- Local communication: The RDK X3 uses a ros1_bridge to connect ROS1 and ROS2, allowing voice commands to control the robot.

- Cloud interaction: The local endpoint communicates bidirectionally with a cloud server via WebSocket to upload temperature/humidity and gesture data in real time; the cloud renders the web frontend.
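As an illustration of the cloud upload step, the sketch below serializes one reading into a JSON text frame that could be sent over the WebSocket connection. The message schema (field names, the `sensor_update` type tag) and the `build_sensor_message` helper are assumptions made for this example; the source does not specify the actual wire format.

```python
import json
import time
from typing import Optional


def build_sensor_message(temperature_c: float, humidity_pct: float,
                         gesture: Optional[str] = None,
                         timestamp: Optional[float] = None) -> str:
    """Serialize one reading as a JSON string (assumed schema)."""
    payload = {
        "type": "sensor_update",  # hypothetical message type tag
        "timestamp": time.time() if timestamp is None else timestamp,
        "temperature_c": temperature_c,
        "humidity_pct": humidity_pct,
        "gesture": gesture,       # None when no gesture is active
    }
    return json.dumps(payload)
```

The returned string can be handed to whichever WebSocket client the local endpoint uses. A typed, timestamped message lets the server route updates and discard stale ones.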

4. Web display module

The web frontend is hosted on a cloud server and provides the following core functions:

- Central display of the live camera feed, with an image forwarding program (transfer.py) relaying video data.

- A sidebar logging voice interaction history.

- Panels showing temperature and humidity, gesture commands, and fall alerts, with data streamed from the RDK X3 in real time.

5. Temperature and humidity sensor module

A DHT11 sensor measures temperature and humidity and transmits each reading as a 40-bit frame over a single-wire protocol. A script using the Hobot.GPIO library reads the frame, then parses, verifies, and stores the measurements, which are sent to the cloud via WebSocket.
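The parse-and-verify step for a 40-bit DHT11 frame can be sketched as follows. The frame holds five bytes, most significant bit first: humidity integer, humidity decimal, temperature integer, temperature decimal, and a checksum equal to the low 8 bits of the sum of the first four. The function name `decode_dht11_frame` is hypothetical, the GPIO timing capture that produces the bit list is not shown, and treating the decimal bytes as tenths is an assumption that ignores negative temperatures.

```python
from typing import List, Tuple


def decode_dht11_frame(bits: List[int]) -> Tuple[float, float]:
    """Decode a 40-bit DHT11 frame into (humidity_pct, temperature_c).

    bits holds 40 values of 0 or 1, most significant bit first.
    Raises ValueError on a wrong length or a checksum mismatch.
    """
    if len(bits) != 40:
        raise ValueError(f"expected 40 bits, got {len(bits)}")

    # Pack each group of 8 bits into one byte.
    data = []
    for i in range(0, 40, 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | (bit & 1)
        data.append(byte)

    hum_int, hum_dec, temp_int, temp_dec, checksum = data

    # The fifth byte is the low 8 bits of the sum of the first four.
    if (hum_int + hum_dec + temp_int + temp_dec) & 0xFF != checksum:
        raise ValueError("DHT11 checksum mismatch")

    humidity = hum_int + hum_dec / 10.0
    temperature = temp_int + temp_dec / 10.0
    return humidity, temperature
```

A frame that fails the checksum should be discarded and the read retried, since single-wire timing errors are common on a non-real-time OS.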

6. Voice interaction module

An AudioSmartToTTS node subscribes to /audio_smart to receive captured audio, extracts command words, queries the cloud server via WebSocket for a reply, and publishes to /tts_text for speech output. The audio HAT improves recognition and playback quality.

7. Robot control module

- Voice control: Microphone capture is processed by speech algorithms, which publish /audio_smart messages. A hub script forwards commands and controller.py issues movement commands to the robot chassis.

- App control: A mobile app provides pan, rotate, and zoom gestures to control the view. Devices connect automatically via ROS and Avahi, allowing voice or touch control of the robot.
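The translation from a recognized command word to a motion command might look like the sketch below. The command words, the speeds, and the `Velocity` and `velocity_for_command` names are hypothetical and chosen only for illustration; the source does not describe what controller.py actually sends to the chassis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Velocity:
    linear: float   # forward speed in m/s
    angular: float  # turn rate in rad/s, positive is counterclockwise


STOP = Velocity(0.0, 0.0)

# Hypothetical command words and speeds, chosen only for illustration.
COMMAND_VELOCITIES = {
    "forward": Velocity(0.2, 0.0),
    "backward": Velocity(-0.2, 0.0),
    "left": Velocity(0.0, 0.5),
    "right": Velocity(0.0, -0.5),
    "stop": STOP,
}


def velocity_for_command(word: str) -> Velocity:
    """Map a command word to a velocity; unknown words stop the robot."""
    return COMMAND_VELOCITIES.get(word.strip().lower(), STOP)
```

Falling back to a stop for unrecognized words is a conservative choice for a robot operating near people with limited mobility.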

8. Gesture and fall detection robustness

- Gesture recognition: When a target gesture is detected, the system provides audio confirmation, displays details on the web UI, and commands the robot to execute the task. The code separates initialization, data processing, and state reset stages.

- Fall detection: Upon detecting a fall, the system issues a voice alarm and a web popup. A 5-second timer helps avoid false positives, and the state resets automatically after the condition clears.
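One way to read the 5-second timer is as a hold time: a fall must persist for 5 seconds before the alarm fires, and the state resets once the fall is no longer detected. The sketch below implements that interpretation. It is an assumption about the behavior, not the project's code, and the `FallAlarm` class and its method names are hypothetical.

```python
class FallAlarm:
    """Raise an alarm only after a fall has persisted for hold_seconds."""

    def __init__(self, hold_seconds: float = 5.0):
        self.hold_seconds = hold_seconds
        self._fall_started = None  # time the current fall was first seen
        self.active = False        # True while the alarm is raised

    def update(self, fall_detected: bool, now: float) -> bool:
        """Feed one detection result; return True only when the alarm first fires."""
        if not fall_detected:
            # Condition cleared: reset so the next fall starts a new timer.
            self._fall_started = None
            self.active = False
            return False

        if self._fall_started is None:
            self._fall_started = now

        if not self.active and now - self._fall_started >= self.hold_seconds:
            self.active = True
            return True
        return False
```

In a detection loop, `update` would be called once per frame with `time.monotonic()` as `now`, and a `True` return would trigger the voice alarm and web popup exactly once per fall.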

Future Directions

Planned system upgrades include:

- Health monitoring: Add heart rate and blood pressure sensors for real-time health risk alerts.

- Intelligent consultation: Use conversational voice to obtain basic medical guidance and improve patient-provider communication.

- Remote medical services: Enable video calls to support remote consultations.

- Environmental automation: Automatically adjust lighting and temperature.

- Person tracking: Add a tracking robot to eliminate monitoring blind spots.

Open Source

ALLPCB
