1 Introduction

In recent years, breakthroughs in artificial intelligence (AI) have brought new momentum to embedded systems. Integrating AI lets embedded systems handle complex tasks such as image recognition, speech recognition, and natural language processing. This makes embedded devices more capable and widens their application scope, enabling deeper use in smart homes, intelligent transportation, and smart healthcare. Embedding AI has become an important trend for future embedded systems. A classic machine learning example, handwritten digit recognition, is a suitable starting point for edge deployment. It demonstrates how to run AI algorithms on resource-constrained devices and highlights the benefit of moving intelligent processing closer to the data source, which reduces dependence on cloud computing.

2 Fundamentals

2.1 Neural Network Model Overview

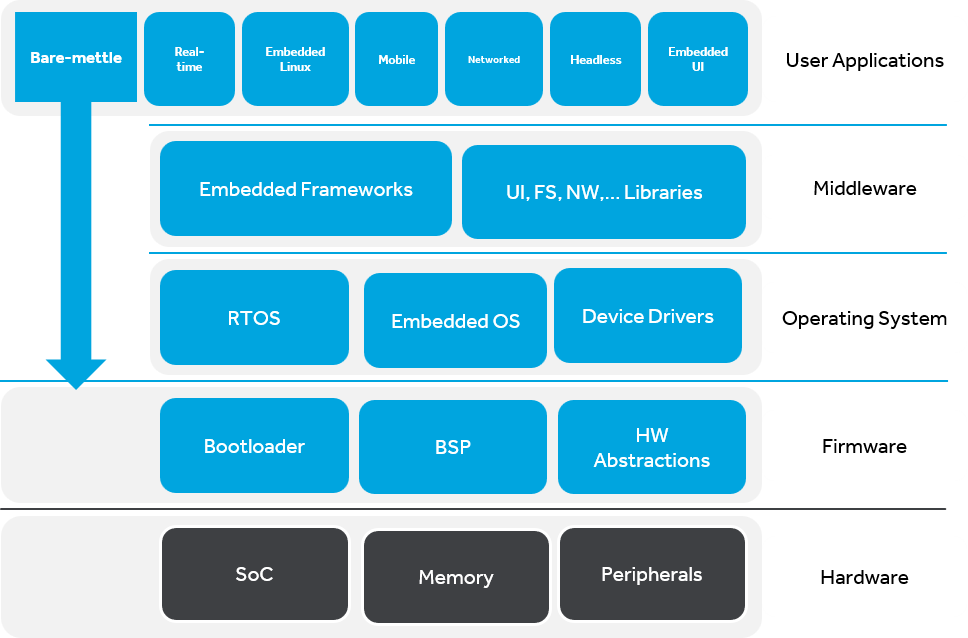

Neural network model design is inspired by the structure of the human brain. The brain consists of many interconnected neurons that transmit information via electrical signals to help us perceive and process the world. Artificial neural networks (ANNs) mimic this process by using software-implemented neurons or nodes to solve complex problems. These nodes are organized into layers and perform mathematical operations to process inputs. A typical neural network has three main parts:

- Input layer: Receives raw data from the outside world, such as images, audio, or text. Input nodes perform initial processing and pass data to subsequent layers.

- Hidden layer: Located between the input and output layers. A network can have one or more hidden layers. Each hidden layer analyzes and transforms incoming data, progressively extracting features until an abstract representation is formed. The number of hidden layers determines the network's depth, and the number of nodes in each layer determines its width; together they govern the model's complexity.

- Output layer: Produces the network's final prediction. Depending on the task, the output layer may contain a single node, for example in binary classification, or multiple nodes for multi-class classification.
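The three parts above can be sketched as a single forward pass. This is a minimal illustration in plain Python, not a trained model: the 784-value input (a flattened 28x28 digit image), the 16-node hidden layer, the ReLU and softmax activations, and the random weights are all assumptions chosen for the example.

```python
import math
import random

def dense(inputs, weights, biases):
    """Fully connected layer: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    return [
        sum(x * w_row[j] for x, w_row in zip(inputs, weights)) + biases[j]
        for j in range(len(biases))
    ]

def relu(values):
    """Hidden-layer activation: negative values are clipped to zero."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in, n_out, rng):
    """Random placeholder weights; a real model would load trained values."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_out)] for _ in range(n_in)]
    biases = [0.0] * n_out
    return weights, biases

def forward(pixels, hidden_layer, output_layer):
    """Input layer -> hidden layer -> output layer."""
    hidden = relu(dense(pixels, *hidden_layer))
    return softmax(dense(hidden, *output_layer))

rng = random.Random(0)
hidden_layer = init_layer(784, 16, rng)      # assumed sizes: 784 inputs, 16 hidden nodes
output_layer = init_layer(16, 10, rng)       # 10 output nodes, one per digit 0-9
pixels = [rng.random() for _ in range(784)]  # stand-in for a normalized image

probabilities = forward(pixels, hidden_layer, output_layer)
predicted_digit = probabilities.index(max(probabilities))
print(predicted_digit, round(sum(probabilities), 6))
```

Because the weights are random, the predicted digit is meaningless here; the point is only the data flow from input nodes through the hidden layer to one output node per class.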

To visualize how neural networks operate, one can use online tools such as A Neural Network Playground.

2.2 Deep Neural Networks

When a neural network contains multiple hidden layers, it is called a deep neural network (DNN), which is the basis of deep learning. These networks often contain millions of parameters or more, enabling them to capture highly complex nonlinear relationships within data. In a deep neural network, each connection between nodes has a weight that measures the strength of the relationship; positive weights indicate excitation and negative weights indicate inhibition. The larger a weight's magnitude, the greater its influence on the connected node. In theory, sufficiently deep and wide networks can approximate a very broad class of input-output mappings, but realizing this capability usually requires large amounts of training data. Deep networks generally need far more data than shallow ones to generalize well, and large models are often trained on millions of samples.
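To make the parameter counts concrete, the following sketch counts the weights and biases of a fully connected network from its layer sizes. The two architectures are hypothetical examples for 28x28 digit images, not models taken from this article.

```python
def count_parameters(layer_sizes):
    """Count weights and biases of a fully connected network.

    Each pair of adjacent layers contributes n_in * n_out weights
    plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# Hypothetical architectures: 784 inputs (28x28 pixels), 10 output classes.
small = count_parameters([784, 128, 10])
deeper = count_parameters([784, 512, 512, 10])

print(small)   # 101770
print(deeper)  # 669706
```

Even these modest networks hold hundreds of thousands of parameters, which is why memory becomes the first constraint on a small embedded target.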

2.3 Common Neural Network Types

Various network architectures have been developed for different application scenarios and technical needs:

- Feedforward Neural Network (FNN): The most basic form, where data flows in a single direction from the input layer through hidden layers to the output layer, without feedback paths.

- Convolutional Neural Network (CNN): Particularly effective for image recognition because its convolutional filters slide across the image and capture local spatial structure such as edges and textures.

- Recurrent Neural Network (RNN): Suitable for sequence data, such as sentences in natural language processing or time series analysis. RNNs keep an internal state that acts as memory, so the current state depends on previous states.

- Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU): Improved RNN variants designed to mitigate the vanishing gradient problem, enabling better modeling of long-range dependencies.

- Generative Adversarial Network (GAN): Composed of two sub-networks, a generator that creates new data samples and a discriminator that assesses their realism. The two networks compete and evolve together to generate high-quality synthetic data.
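As an illustration of how a CNN filter captures spatial structure, the sketch below applies one 3x3 filter to a tiny image in plain Python. The image and the vertical-edge kernel are made-up examples; in a real CNN the filter values are learned during training.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, the operation CNN layers use."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_h)
                for j in range(k_w)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)  # each row is [3, 3, 0]
```

The response is large where the window straddles the edge and zero over the uniform bright region, and the same nine filter values are reused at every position.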

2.4 Model Optimization Techniques

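When deploying deep learning models to resource-constrained environments, model optimization techniques are crucial. These methods aim to reduce model size, lower computational complexity, and improve inference speed while minimizing the impact on accuracy. Common optimization techniques include pruning (removing weights or channels that contribute little to the output), quantization (storing weights and activations at lower numeric precision, such as int8), and distillation (training a small model to mimic a larger one).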
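Of these techniques, quantization is the simplest to show in a few lines. The sketch below uses symmetric per-tensor int8 quantization, which is one common scheme among several; the function names and sample weights are illustrative and do not come from any particular toolchain.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # made-up float32 weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)
print(scale, max_error)
```

Each weight now occupies one byte instead of four, and the rounding error per weight is at most half the scale. Whether that error is acceptable has to be checked by measuring accuracy on the quantized model.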

3 Deployment

3.1 Preparation

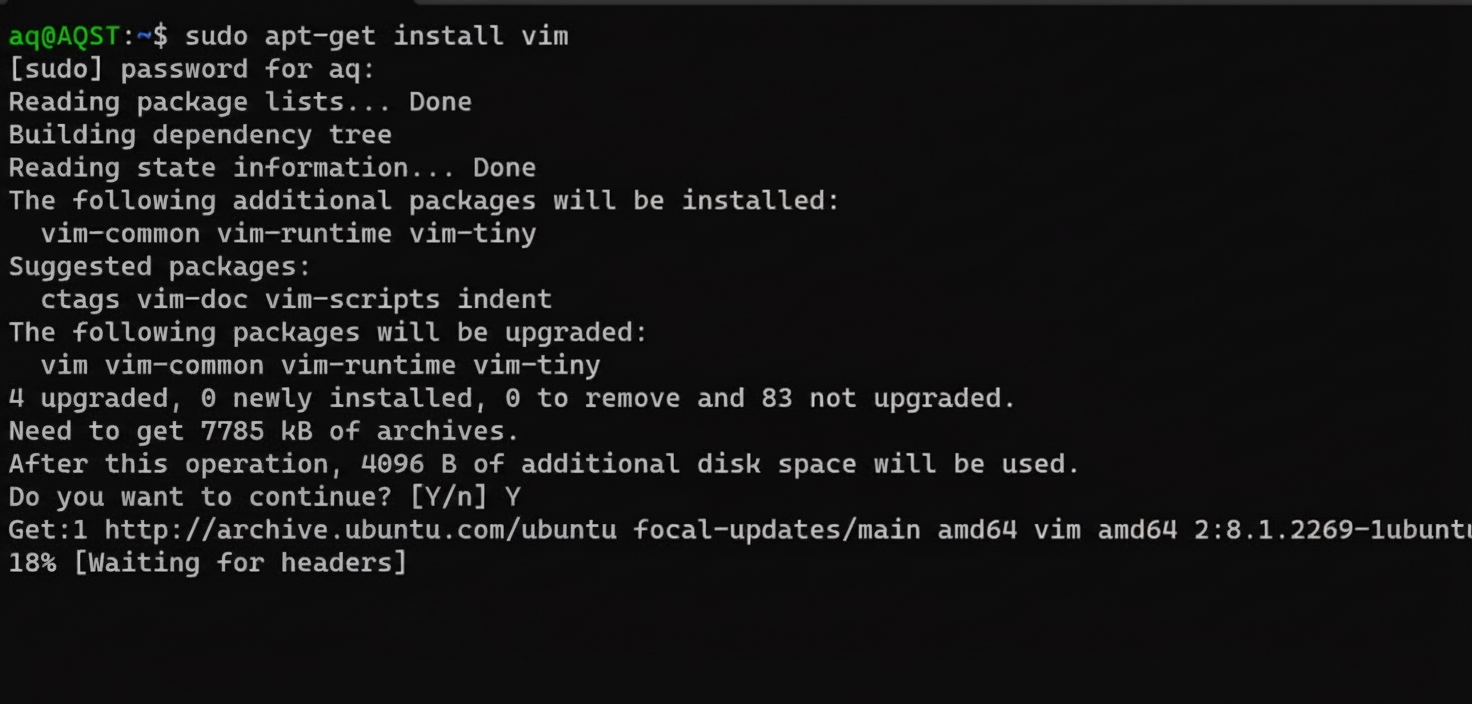

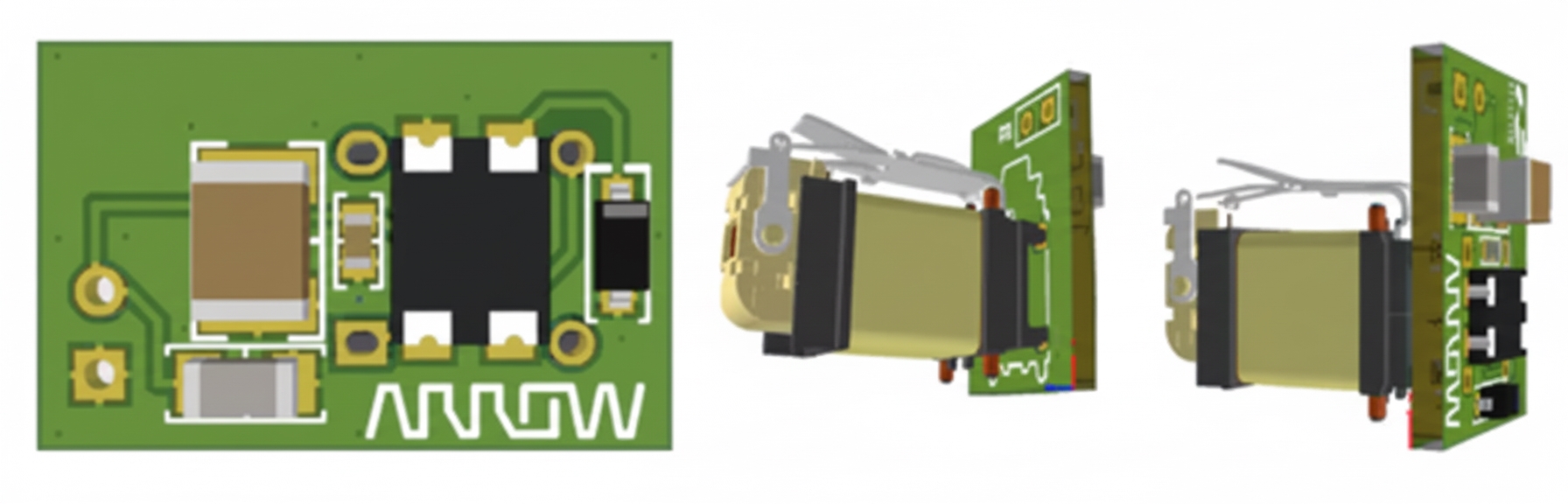

Before deployment, confirm the target module's supported operators, quantization formats (int4, int8, fp16, mixed precision), available memory, and other hardware parameters, so that the model can be designed to fit the board. A common workflow exports the trained model to an ONNX intermediate file and then converts it, typically with the vendor's toolchain, into the hardware-specific format required by the device.
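A quick way to act on the memory check is to estimate weight storage for each candidate format before converting anything. The sketch below does this arithmetic; the parameter count (a hypothetical 784-128-10 fully connected network) and the 256 KiB weight budget are assumptions, fp32 is included only as a baseline, and real values should come from the model and the board's datasheet.

```python
# Bits used to store one weight in each format (fp32 added as a baseline).
BITS_PER_WEIGHT = {"int4": 4, "int8": 8, "fp16": 16, "fp32": 32}

def weight_bytes(num_parameters, fmt):
    """Bytes needed to store the weights, rounded up to a whole byte."""
    bits = num_parameters * BITS_PER_WEIGHT[fmt]
    return (bits + 7) // 8

def formats_that_fit(num_parameters, budget_bytes):
    """Formats whose weight storage fits within the given budget."""
    return [
        fmt for fmt in BITS_PER_WEIGHT
        if weight_bytes(num_parameters, fmt) <= budget_bytes
    ]

NUM_PARAMETERS = 101_770    # hypothetical: 784*128 + 128 + 128*10 + 10
WEIGHT_BUDGET = 256 * 1024  # hypothetical: 256 KiB reserved for weights

for fmt in BITS_PER_WEIGHT:
    print(fmt, weight_bytes(NUM_PARAMETERS, fmt), "bytes")

fitting = formats_that_fit(NUM_PARAMETERS, WEIGHT_BUDGET)
print(fitting)  # ['int4', 'int8', 'fp16']
```

This estimate covers weights only. Activations, input buffers, and the inference runtime also consume memory, so the real budget for weights is smaller than the board's total.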

ALLPCB