Introduction

Artificial intelligence is reshaping many traditional industries as they undergo digital transformation. In healthcare, it increasingly supports diagnosis and patient care.

Traditional medical devices have limited capabilities; clinicians are often unable to accurately assess conditions, and patient status may not be monitored effectively during care.

Edge AI Overview

Edge AI combines edge computing and AI: machine learning models are deployed and run directly on connected edge devices, close to where the data is generated, rather than in a remote data center.

Typical edge AI tasks include object detection, speech recognition, fingerprint detection, fraud detection, and autonomous driving.

Because data is processed on the device instead of being streamed to the cloud, edge AI offers low latency, reduced bandwidth use, and the ability to handle large volumes of data locally. By applying AI tools, clinicians can detect and interpret conditions more accurately, improve diagnostic quality through image recognition, and optimize workflows to increase team efficiency.

Remote Patient Monitoring

Elderly or critically ill patients require continuous monitoring and care. An edge computing device with a camera can use visual recognition algorithms to monitor patient behavior in real time and collect behavioral data. Neural network models can be trained on this data to make predictions; if unsafe behavior such as a fall is detected, the system can notify medical staff immediately.
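The notification step can be sketched as follows. This is a minimal illustration rather than a production design: it assumes a vision model already produces a per-frame fall probability, and the names `fall_alerts`, `threshold`, `window`, and `min_hits` are hypothetical. An alert fires only when most recent frames agree, so a single noisy frame does not page the staff.

```python
from collections import deque
from typing import Iterable, Iterator


def fall_alerts(
    frame_scores: Iterable[float],
    threshold: float = 0.8,   # assumed per-frame probability cut-off
    window: int = 5,          # assumed number of recent frames to consider
    min_hits: int = 4,        # assumed frames in the window that must agree
) -> Iterator[int]:
    """Yield the frame index at which each new alert should be raised."""
    recent = deque(maxlen=window)  # rolling record of above-threshold frames
    alerting = False
    for index, score in enumerate(frame_scores):
        recent.append(score >= threshold)
        triggered = sum(recent) >= min_hits
        # Raise an alert only on the transition into the triggered state,
        # so one incident produces one notification.
        if triggered and not alerting:
            yield index
        alerting = triggered


if __name__ == "__main__":
    scores = [0.1, 0.9, 0.2, 0.9, 0.9, 0.9, 0.9, 0.9, 0.1, 0.1, 0.1]
    for frame in fall_alerts(scores):
        print(f"notify staff: possible fall at frame {frame}")
```

The window size and threshold trade detection delay against false alarms, and would need tuning against real footage.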

Advantages of AI-driven medical monitoring include:

- Continuous real-time monitoring with faster and more accurate detection of falls or other incidents

- Reduced need for 24-hour human supervision, lowering labor requirements

- Ability to analyze causes of incidents, such as limited bed area or equipment positioned too far from the bed

AI Frameworks Suitable for Medical Device Prediction

alwaysAI

alwaysAI is a computer vision framework that can be deployed for inference on NVIDIA Jetson series edge development boards. The framework includes a catalog of pre-trained models, low-code model training tools, and a developer API to support building vision applications.

Roboflow

Roboflow offers end-to-end computer vision solutions for healthcare organizations by building visual models for various clinical problems. Typical workflow steps include:

- Creating datasets and applying computer vision methods to detect and classify objects in specific scenarios

- Annotating required data, such as images from ultrasound, X-ray, endoscopy, MRI, and other modalities

- Uploading datasets to Roboflow to support model training and improve prediction accuracy

Roboflow hosts public datasets, including BCCD, a blood cell object detection dataset. Models trained on such datasets can be deployed to edge devices such as NVIDIA Jetson.

Roboflow supports annotation, training of custom YOLOv5 models, and workflows for inference on edge devices.

Digital Pathology Diagnostics

Traditional pathology diagnosis relies on manual analysis of microscope images or specialized equipment to identify cell types and distribution, requiring expert visual inspection.

Limitations include:

- High subjectivity and lack of uniform standards

- Low diagnostic efficiency

- Limited accuracy when pathology images are read by eye

- Inconvenient storage and retrieval of pathology results

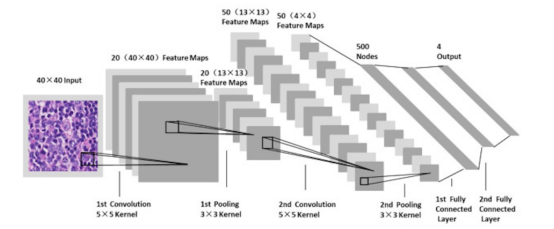

Applying deep learning to digital pathology images can improve the efficiency and accuracy of disease diagnosis, target detection, and region segmentation. It also reduces dependence on specialized equipment, which can lower clinical costs.

Edge AI uses machine learning algorithms to automate the analysis of whole-slide imaging (WSI) images.

Pathology diagnostic workflow with AI:

- Glass slides are scanned into whole-slide digital images by a scanner.

- WSI systems provide fast viewing of pathology images with digital annotation, rapid navigation and zoom, and computer-aided viewing and analysis.

- WSI images have very high pixel resolution, which can overwhelm processing and memory resources, making full-pixel WSI difficult to run and analyze on typical systems.

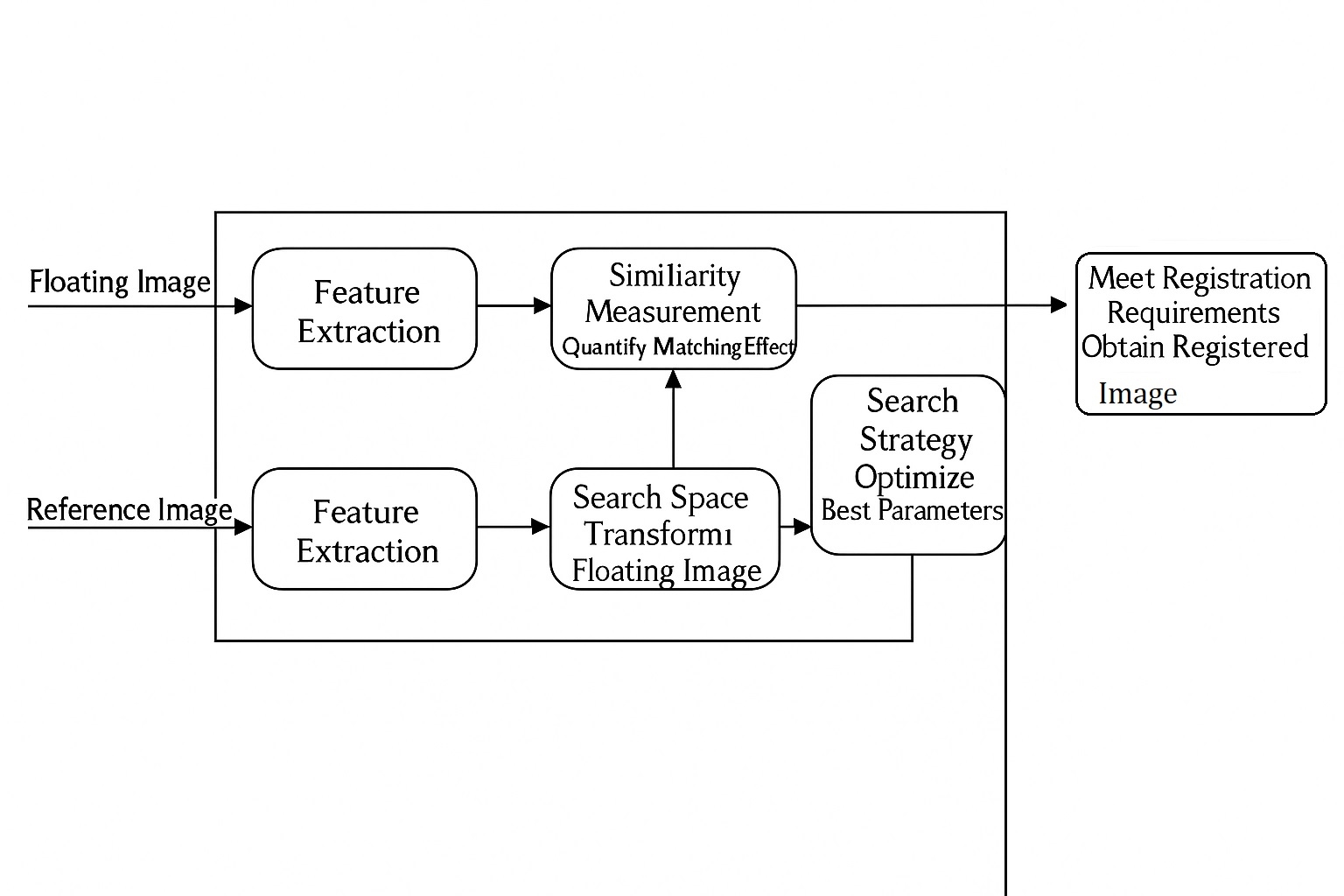

- To work around this, the high-resolution image is partitioned into local tiles on a grid or sliding window. A convolutional neural network detects or classifies features in each tile, and the per-tile results are then integrated, which reduces memory usage and improves prediction efficiency.
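The tiling step can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not how any particular WSI product works: the image is a small grayscale grid of rows, and `dark_fraction` is a hypothetical stand-in for a CNN tile classifier. All function names are invented for this example.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

Image = Sequence[Sequence[int]]  # grayscale rows, pixel values 0-255


def tile_origins(height: int, width: int, tile: int, stride: int) -> Iterator[Tuple[int, int]]:
    """Yield the (top, left) corner of every full tile on the grid."""
    for top in range(0, height - tile + 1, stride):
        for left in range(0, width - tile + 1, stride):
            yield top, left


def dark_fraction(image: Image, top: int, left: int, tile: int) -> float:
    """Placeholder for a CNN: fraction of pixels in the tile darker than 128."""
    dark = sum(
        1
        for row in image[top:top + tile]
        for pixel in row[left:left + tile]
        if pixel < 128
    )
    return dark / (tile * tile)


def tile_heatmap(
    image: Image,
    tile: int,
    stride: int,
    score: Callable[[Image, int, int, int], float] = dark_fraction,
) -> List[List[float]]:
    """Score each tile independently and integrate the results into a grid.

    Only one tile is examined at a time; partial tiles at the right and
    bottom edges are skipped in this sketch.
    """
    height, width = len(image), len(image[0])
    rows = (height - tile) // stride + 1
    cols = (width - tile) // stride + 1
    heat = [[0.0] * cols for _ in range(rows)]
    for top, left in tile_origins(height, width, tile, stride):
        heat[top // stride][left // stride] = score(image, top, left, tile)
    return heat


if __name__ == "__main__":
    image = [[255] * 6 for _ in range(4)]
    for r in range(2):
        for c in range(2):
            image[r][c] = 0  # one dark region in the top-left tile
    print(tile_heatmap(image, tile=2, stride=2))
```

To see why tiling matters, assume a hypothetical slide of 100,000 × 100,000 pixels stored as uncompressed 8-bit RGB. That is 100,000 × 100,000 × 3 = 3 × 10^10 bytes, or about 30 GB, whereas a single 256 × 256 RGB tile is 196,608 bytes. Setting `stride` smaller than `tile` gives overlapping sliding windows at the cost of more tiles to score.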

Case Studies

Grundium Ltd: Digital Pathology Whole-Slide Imaging and Analysis Platform

At GTC 2021, Grundium Ltd demonstrated how the NVIDIA Jetson computing platform can be used to redesign whole-slide scanners. Deep learning-based image analysis can be interleaved with the scanning process so that results are available immediately after scanning, improving throughput and diagnostic accuracy.

Portable Brain CT Scanner with AI Diagnosis

An NVIDIA blog described a lightweight brain scanner powered by NVIDIA Jetson AGX Xavier, a module that offers 32 TOPS of compute, with inference performed at the edge. The analysis system evaluates brain signals to support further diagnosis, enabling stroke diagnosis within minutes.

Developing AI-Driven Digital Health Applications on NVIDIA Jetson

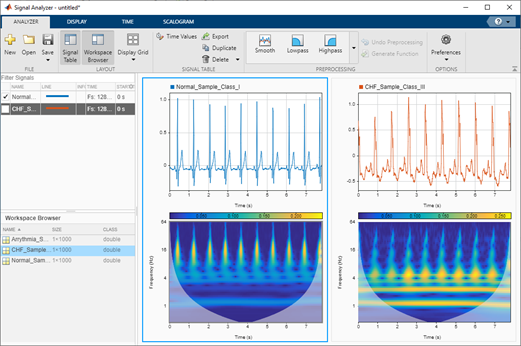

Data scientists and engineers use NVIDIA GPUs to prototype AI algorithms for biomedical applications and deploy them on embedded IoT and edge AI platforms such as NVIDIA Jetson. Tools such as MathWorks GPU Coder can be used to deploy prediction pipelines on Jetson devices.

One example solution trains a classifier to distinguish arrhythmia (ARR), congestive heart failure (CHF), and normal sinus rhythm (NSR).
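As a rough sketch of the final decision step of such a three-class classifier, the snippet below converts raw model scores into a label. It assumes the model emits one score (logit) per class in the order ARR, CHF, NSR; the function names, the class order, and the `min_confidence` cut-off are hypothetical and not taken from any specific toolchain.

```python
import math
from typing import List, Sequence, Tuple

LABELS = ("ARR", "CHF", "NSR")  # assumed output order of the classifier


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def predict_rhythm(logits: Sequence[float], min_confidence: float = 0.6) -> Tuple[str, float]:
    """Return (label, probability); label is "uncertain" below the cut-off."""
    if len(logits) != len(LABELS):
        raise ValueError(f"expected {len(LABELS)} scores, got {len(logits)}")
    probs = softmax(logits)
    best = max(range(len(LABELS)), key=probs.__getitem__)
    label = LABELS[best] if probs[best] >= min_confidence else "uncertain"
    return label, probs[best]


if __name__ == "__main__":
    print(predict_rhythm([0.0, 0.0, 3.0]))
    print(predict_rhythm([1.0, 1.0, 1.0]))
```

Returning "uncertain" for low-confidence predictions is one possible design choice that leaves ambiguous cases to a clinician.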

ALLPCB