Overview

NumPy is a widely used scientific computing package in the Python ecosystem. It provides efficient multidimensional array operations and a range of higher-level mathematical functions, enabling rapid construction of computational workflows for models. Although using NumPy to write models is no longer mainstream, it remains a useful way to understand underlying architectures and learning principles.

Recently, a postdoctoral researcher from Princeton published a large open-source repository of machine learning models implemented in NumPy. The codebase exceeds 30,000 lines, contains more than 30 models, and includes associated papers and test results.

Project address: https://github.com/ddbourgin/numpy-ml

Scope and Structure

The repository contains about 30 main machine learning models and roughly 15 utilities for preprocessing and computation. There are 62 .py files in total. Average code per model exceeds 500 lines; the neural network layer.py file approaches 4,000 lines. The author, David Bourgin, implemented most mainstream models by hand using NumPy, explicitly computing gradients to emphasize conceptual and mathematical clarity.

Bourgin notes that he learned a great deal from autograd-style repositories, but chose to make all gradient computations explicit to highlight the math. The trade-off is that every new differentiable function requires manually deriving its gradient.

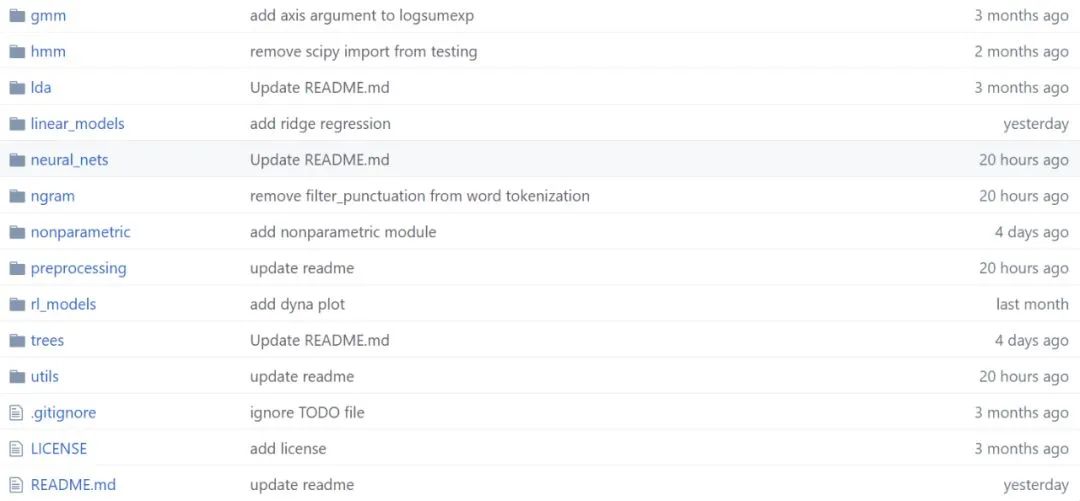

Repository Layout

The project groups implementations into folders by model type or utility. Within each code collection, the author provides references such as example outputs, papers, and links used during implementation. The following image shows the project file structure.

For example, when implementing neural network layers, the author includes reference papers and resources.

Because the codebase is large, some bugs are expected. Contributions and pull requests are welcome. Since implementations rely mainly on NumPy, the environment setup is straightforward.

Implemented Models and Modules

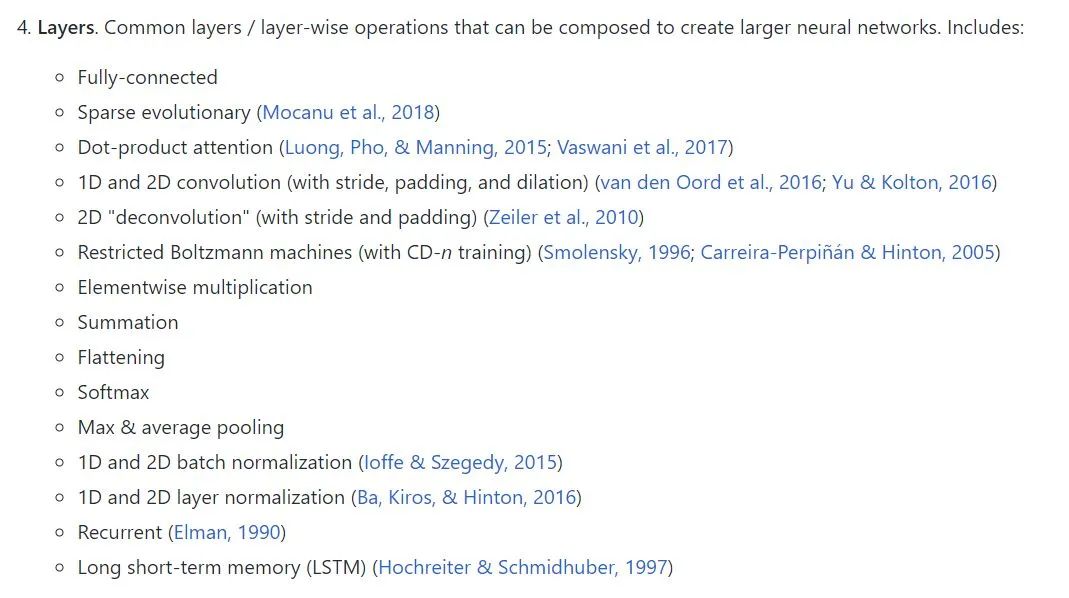

The repository is organized into two main categories: classical machine learning models and mainstream deep learning models. Shallow models include complex methods such as hidden Markov models and boosting, as well as classical methods like linear regression and nearest neighbors. Deep models are constructed from modules covering layers, loss functions, and optimizers, allowing rapid assembly of various neural networks. The project also includes preprocessing components and utility tools.

- Gaussian mixture models

- Hidden Markov models

- Latent Dirichlet allocation (topic model)

- Neural networks

- Tree-based models

- Linear models

- n-gram sequence models

- Reinforcement learning models

- Nonparametric models

- Preprocessing

- Tools

ALLPCB

ALLPCB