Overview

As end-to-end autonomous driving architectures emerge, traditional rule-based simulation testing faces two main challenges: insufficient realism and difficulty in generalizing scenarios. This article analyzes Kangmou's dual-modal simulation testing solution. On one hand, it relies on aiSim for deterministic, physics-level sensor modeling. On the other, it uses World Extractor to reconstruct worlds automatically with 3DGS and NeRF. The focus is on how hybrid rendering combines the two, retaining real-world visual fidelity while enabling dynamic traffic-flow generalization, to build a digital twin environment suitable for closed-loop validation.

01 End-to-end testing challenges

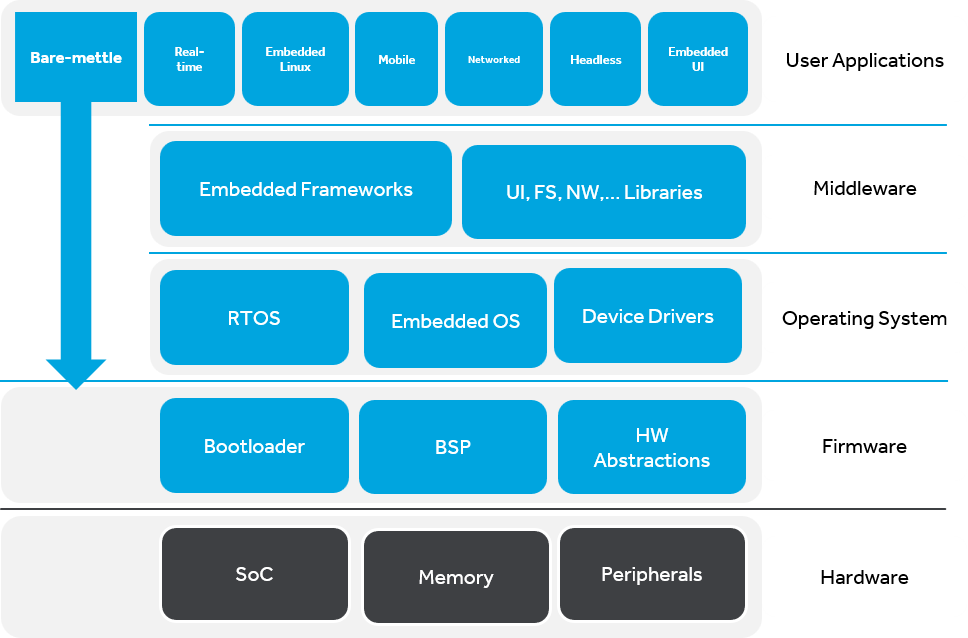

The core tension in autonomous driving simulation testing has long been between realism and controllability. Kangmou built two technical paths that are independent yet complementary, forming a complete toolchain ecosystem:

- Model-based (physics-driven): Centered on the aiSim simulation platform, using high-precision 3D meshes and a physical material system to provide deterministic simulation certified to ISO 26262 ASIL D. This route focuses on closed-loop validation, sensor model research, and constructing extreme edge-case scenarios.

- Data-driven: Centered on the World Extractor toolchain, using 3DGS and NeRF techniques to automatically reconstruct collected real-world data into high-fidelity digital worlds. This route focuses on reducing the Sim-to-Real gap for perception models.

These two routes converge at the rendering endpoint via a hybrid rendering architecture, delivering a testing capability that combines static environmental realism with controllable dynamic targets.

02 aiSim: deterministic high-fidelity engine

aiSim is an independent, physics-based high-performance full-stack simulation platform that integrates dynamics simulation, weather and environment systems, physical sensor models, scene editing, and other functions required for autonomous driving testing. It is the world's first simulation platform certified to ISO 26262 ASIL D. Its core value is providing deterministic, high-fidelity outputs for end-to-end autonomous systems and enabling effective closed-loop testing.

Proprietary rendering pipeline and determinism

Unlike solutions built on game engines such as Unreal Engine or Unity, aiSim uses a proprietary rendering pipeline based on the Vulkan API.

Determinism: The same scene frame renders identically on different hardware architectures (from workstations to large cloud clusters) at every sensor data layer, including pixels, point clouds, and dynamics. This is crucial for regression testing: when the simulator's output is repeatable, any difference between two test runs can be attributed to the system under test rather than to the simulator.
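A minimal sketch of how a regression harness might exploit this property, assuming each frame's sensor outputs can be exported as raw byte buffers keyed by sensor name. The sensor names and the `frame_digest` / `first_divergent_frame` helpers are hypothetical, not part of aiSim: the harness hashes every frame and reports the first frame where two runs diverge.

```python
import hashlib
from typing import Dict, List

Frame = Dict[str, bytes]  # hypothetical: sensor name -> raw output buffer for one frame

def frame_digest(frame: Frame) -> str:
    """SHA-256 over all sensor buffers of one frame, in sorted sensor-name order."""
    h = hashlib.sha256()
    for name in sorted(frame):
        for field in (name.encode("utf-8"), frame[name]):
            h.update(len(field).to_bytes(8, "little"))  # length prefix keeps field boundaries unambiguous
            h.update(field)
    return h.hexdigest()

def first_divergent_frame(run_a: List[Frame], run_b: List[Frame]) -> int:
    """Index of the first frame whose digests differ, or -1 if the runs are identical."""
    for i, (fa, fb) in enumerate(zip(run_a, run_b)):
        if frame_digest(fa) != frame_digest(fb):
            return i
    if len(run_a) != len(run_b):
        return min(len(run_a), len(run_b))  # one run stopped early
    return -1

if __name__ == "__main__":
    baseline = [{"camera_front": b"\x00\x01", "lidar_top": b"\x10"},
                {"camera_front": b"\x02\x03", "lidar_top": b"\x11"}]
    candidate = [{"camera_front": b"\x00\x01", "lidar_top": b"\x10"},
                 {"camera_front": b"\x02\x03", "lidar_top": b"\x12"}]
    print(first_divergent_frame(baseline, candidate))  # 1
```

Hashing whole frames keeps stored baselines small; only this kind of exact comparison is meaningful when the renderer is deterministic, since a non-deterministic renderer would need tolerance-based image and point-cloud comparisons instead.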

Ray tracing: Supports multipath reflection simulation and Gaussian beam models for LiDAR and radar, and computes reflectance based on physical material properties (PBR) rather than simple geometric projection.
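The Gaussian beam idea can be illustrated with the textbook formulas for an ideal (TEM00) beam: the beam radius grows with range as w(z) = w0·sqrt(1 + (z/zR)²), with Rayleigh range zR = π·w0²/λ, so a distant target sees a wider, weaker footprint and a small target may intercept only part of the pulse. This is a sketch under idealized assumptions (diffraction-limited beam, circular target centered on the beam axis), not aiSim's model; real LiDAR datasheets usually specify beam divergence directly.

```python
import math

def rayleigh_range_m(w0_m: float, wavelength_m: float) -> float:
    """Distance from the waist over which the beam radius grows by a factor of sqrt(2)."""
    return math.pi * w0_m ** 2 / wavelength_m

def beam_radius_m(z_m: float, w0_m: float, wavelength_m: float) -> float:
    """1/e^2 intensity radius of an ideal Gaussian beam at distance z from its waist."""
    return w0_m * math.sqrt(1.0 + (z_m / rayleigh_range_m(w0_m, wavelength_m)) ** 2)

def relative_intensity(r_m: float, z_m: float, w0_m: float, wavelength_m: float) -> float:
    """Intensity at radial offset r, relative to the on-axis intensity at the waist."""
    w = beam_radius_m(z_m, w0_m, wavelength_m)
    return (w0_m / w) ** 2 * math.exp(-2.0 * r_m ** 2 / w ** 2)

def power_fraction_on_disk(target_radius_m: float, z_m: float, w0_m: float, wavelength_m: float) -> float:
    """Fraction of beam power intercepted by a centered circular target of the given radius."""
    w = beam_radius_m(z_m, w0_m, wavelength_m)
    return 1.0 - math.exp(-2.0 * target_radius_m ** 2 / w ** 2)

if __name__ == "__main__":
    w0, lam = 5e-3, 905e-9  # hypothetical 5 mm waist at 905 nm
    for z in (10.0, 100.0, 200.0):
        print(z, beam_radius_m(z, w0, lam), power_fraction_on_disk(0.01, z, w0, lam))
```

Compared with a single infinitely thin ray, this footprint-based view is what allows partial returns at object edges and multiple echoes from one pulse.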

Physics-level sensor modeling

aiSim models sensors down to their physical characteristics:

- Camera: Full-chain simulation from aperture and distortion (F-theta / Mei / Ocam) to CFA and ISP post-processing.

- LiDAR: Radiometric rather than photometric modeling, accounting for material reflectance at 905 nm, atmospheric attenuation including Mie scattering in rain and fog, and rolling shutter effects.

- Radar: Ray-traced multipath simulation that supports outputs such as RCS, Doppler velocity, and point-cloud-level data.
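To make the three bullet points more concrete, here is a simplified sketch of one textbook relation per sensor: an ideal F-theta (equidistant) fisheye projection, a radiometric LiDAR return for a Lambertian target with two-way Beer-Lambert attenuation (rain or fog folded into a single, assumed extinction coefficient), and the radar Doppler shift. These are illustrative assumptions; aiSim's models also cover effects omitted here, such as Mei/Ocam distortion, CFA/ISP processing, rolling shutter, and multipath.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
SPEED_OF_LIGHT_MPS = 299_792_458.0

def ftheta_project(p_cam: Vec3, f_px: float, cx: float, cy: float) -> Tuple[float, float]:
    """Ideal F-theta fisheye: image radius grows linearly with the angle off the optical axis."""
    x, y, z = p_cam
    theta = math.atan2(math.hypot(x, y), z)  # angle from the optical axis (+z)
    phi = math.atan2(y, x)                   # azimuth around the optical axis
    r = f_px * theta
    return cx + r * math.cos(phi), cy + r * math.sin(phi)

def lidar_relative_power(range_m: float, reflectance: float, incidence_rad: float,
                         extinction_per_m: float = 0.0) -> float:
    """Relative return power from a Lambertian target; extinction applies on the way out and back."""
    geometric = reflectance * math.cos(incidence_rad) / range_m ** 2
    atmospheric = math.exp(-2.0 * extinction_per_m * range_m)  # two-way Beer-Lambert loss
    return geometric * atmospheric

def radar_doppler_hz(rel_pos: Vec3, rel_vel: Vec3, carrier_hz: float) -> float:
    """Doppler shift of a target relative to the radar; positive when the target approaches."""
    rng = math.sqrt(sum(c * c for c in rel_pos))
    range_rate = sum(p * v for p, v in zip(rel_pos, rel_vel)) / rng  # negative when closing
    wavelength = SPEED_OF_LIGHT_MPS / carrier_hz
    return -2.0 * range_rate / wavelength

if __name__ == "__main__":
    print(ftheta_project((1.0, 0.0, 1.0), 500.0, 960.0, 540.0))
    print(lidar_relative_power(50.0, 0.1, 0.0, extinction_per_m=0.01))
    print(radar_doppler_hz((100.0, 0.0, 0.0), (-10.0, 0.0, 0.0), 77e9))
```

For a 77 GHz radar, a target closing at 10 m/s produces a Doppler shift of roughly 5.1 kHz. The 1/R² term and the exponential attenuation also show why a low-reflectance object in fog can drop below a LiDAR's detection threshold well inside its nominal range.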

03 World Extractor: automated reconstruction

To address the long cycle times and high costs of manual modeling, World Extractor provides an end-to-end automated toolchain that converts the real world into digital assets.

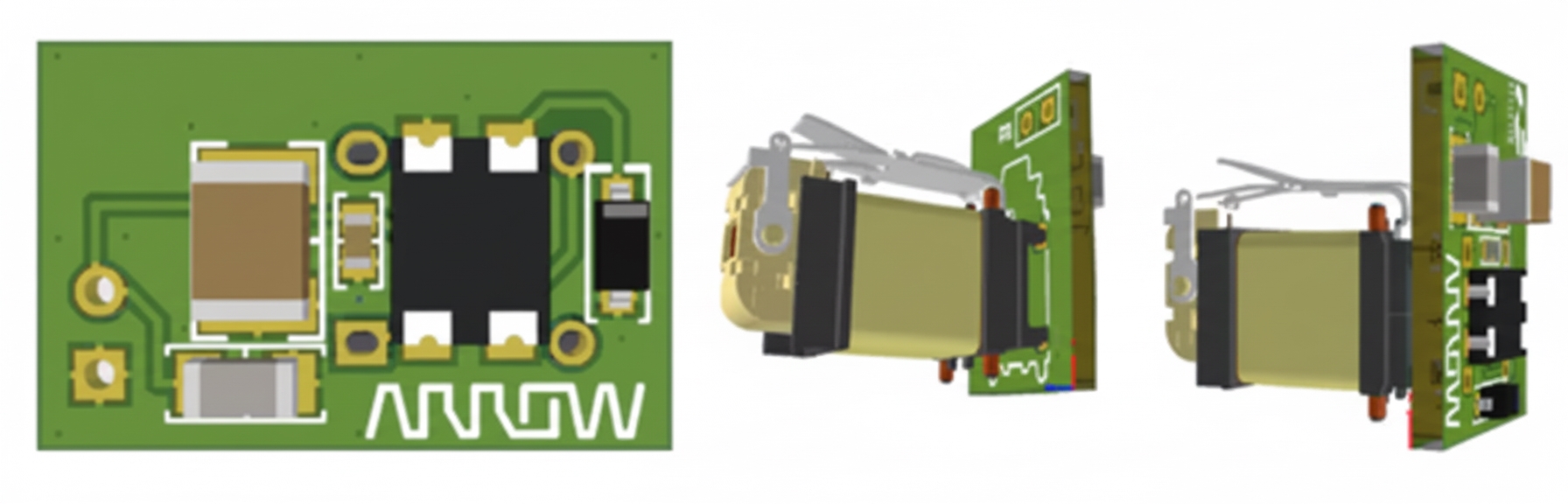

Strict hardware capture standards

High-quality reconstruction starts with high-quality data. Kangmou defines strict sensor deployment specifications to support neural rendering:

- Coverage: Cameras must provide 360° coverage with adjacent view overlap greater than 10% to ensure feature-point matching.

- Synchronization accuracy: Time-synchronization error between the sensors (cameras/LiDAR) and GNSS/INS must be under 1 ms, and position error is held to centimeter level using RTK/PPK.

- Recommended configuration: Sony IMX490/728 sensors and 128-line LiDAR to ensure high dynamic range and point-cloud density.
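The coverage and synchronization rules can be checked mechanically before a capture run. The sketch below assumes each camera is described by a yaw angle and a horizontal field of view, and interprets "overlap greater than 10%" as the overlap angle divided by the narrower of the two adjacent fields of view. Both the interpretation and the helper names are assumptions, not Kangmou's specification format.

```python
from typing import List, Sequence, Tuple

Camera = Tuple[float, float]  # (yaw_deg, horizontal_fov_deg), hypothetical rig description

def adjacent_overlaps(cams: Sequence[Camera]) -> List[float]:
    """Overlap fraction for each adjacent camera pair, going once around the vehicle."""
    ring = sorted(cams, key=lambda c: c[0] % 360.0)
    fractions = []
    for i, (yaw_a, fov_a) in enumerate(ring):
        yaw_b, fov_b = ring[(i + 1) % len(ring)]
        gap_deg = (yaw_b - yaw_a) % 360.0              # angular spacing between optical axes
        overlap_deg = (fov_a + fov_b) / 2.0 - gap_deg  # negative means a blind gap
        fractions.append(overlap_deg / min(fov_a, fov_b))
    return fractions

def meets_capture_spec(cams: Sequence[Camera], sensor_stamps_s: Sequence[float], gnss_stamp_s: float,
                       min_overlap: float = 0.10, max_sync_error_s: float = 1e-3) -> bool:
    """True if every adjacent overlap exceeds min_overlap and every sensor is within max_sync_error_s of GNSS time."""
    coverage_ok = len(cams) >= 2 and all(f > min_overlap for f in adjacent_overlaps(cams))
    sync_ok = all(abs(t - gnss_stamp_s) < max_sync_error_s for t in sensor_stamps_s)
    return coverage_ok and sync_ok

if __name__ == "__main__":
    rig = [(float(yaw), 70.0) for yaw in range(0, 360, 60)]  # six 70° cameras, 60° apart
    print(adjacent_overlaps(rig))  # each pair overlaps 10° of 70°, about 0.143
    print(meets_capture_spec(rig, [0.0003, -0.0004], 0.0))
```

In this example, narrowing the same six cameras to 65° leaves only 5° of overlap (under 8%), which would fail the rule even though the ring still has no blind spot.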

Automated processing and 3DGS training

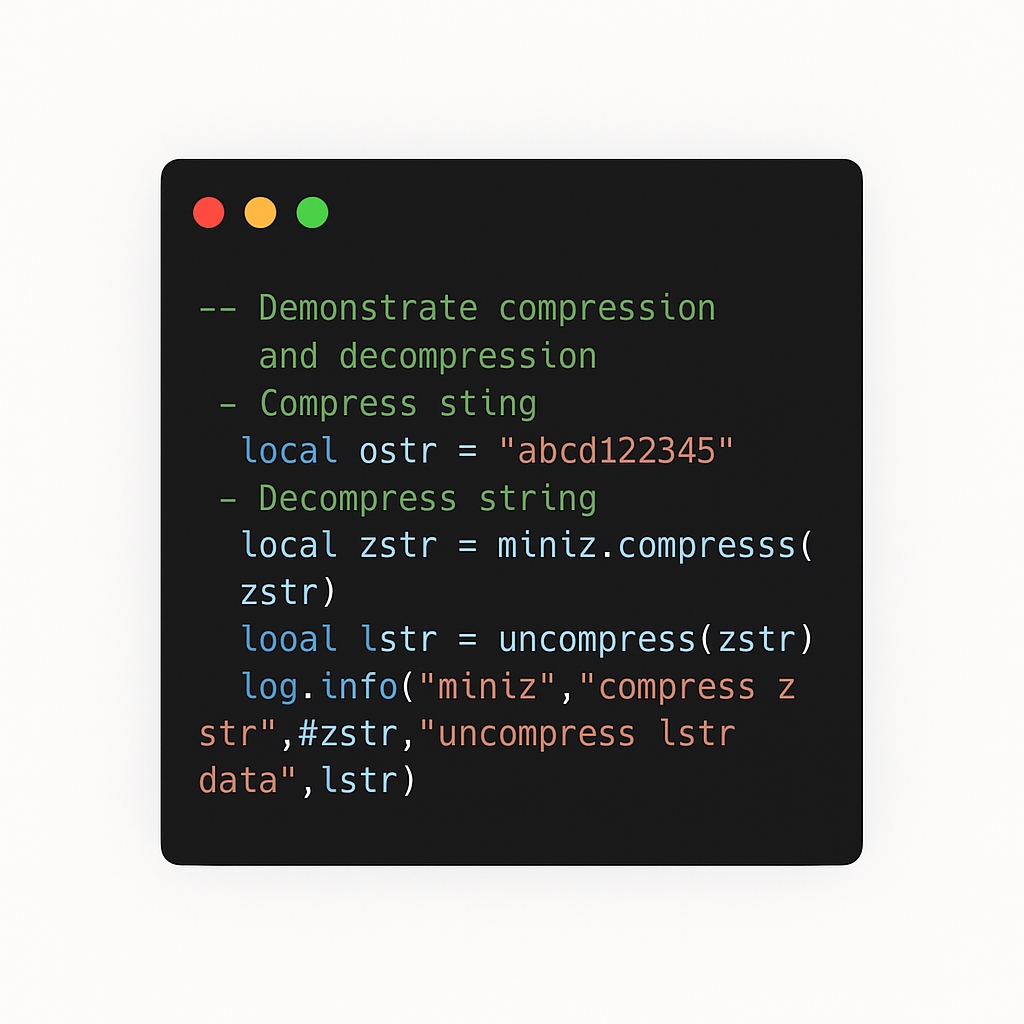

Collected data is anonymized (desensitized) and cleaned before entering the automated labeling and training pipelines.

Dynamic object removal

Removing dynamic objects is key to reconstructing a clean static world. The toolchain uses automated labeling algorithms combining 2D segmentation and 3D bounding boxes to identify and remove moving vehicles and pedestrians, retaining static elements such as parked vehicles, buildings, road surfaces, and vegetation.
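One simple way to separate moving traffic from parked vehicles is sketched below. It assumes the 3D bounding boxes have already been tracked into per-object center trajectories in a world frame, and marks an object as dynamic if its center ever drifts more than a threshold from where it was first seen. The 1 m threshold and the track layout are hypothetical; a production labeler would also propagate the decision to the 2D segmentation masks used to cut the objects out of the images.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical track format: list of (t_s, x_m, y_m, z_m) box centers in a world frame.
Track = List[Tuple[float, float, float, float]]

def max_drift_m(track: Track) -> float:
    """Largest distance of the box center from its first observed position."""
    _, *origin = track[0]
    return max(math.dist(origin, p) for _, *p in track)

def split_static_dynamic(tracks: Dict[str, Track],
                         drift_threshold_m: float = 1.0) -> Tuple[List[str], List[str]]:
    """Return (static_ids, dynamic_ids): dynamic objects are removed, static ones are kept."""
    static_ids, dynamic_ids = [], []
    for obj_id, track in sorted(tracks.items()):
        # Total drift, rather than frame-to-frame speed, tolerates box jitter on parked cars.
        target = dynamic_ids if max_drift_m(track) > drift_threshold_m else static_ids
        target.append(obj_id)
    return static_ids, dynamic_ids

if __name__ == "__main__":
    tracks = {
        "parked_car": [(0.0, 10.0, 2.0, 0.0), (0.1, 10.03, 2.01, 0.0), (0.2, 9.98, 2.0, 0.0)],
        "moving_car": [(0.0, 0.0, 0.0, 0.0), (1.0, 12.0, 0.0, 0.0)],
    }
    print(split_static_dynamic(tracks))  # (['parked_car'], ['moving_car'])
```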

New training paradigm: NeRF2GS

To address geometry collapse and poor extrapolation in traditional 3DGS under sparse views, Kangmou proposes a NeRF2GS workflow:

- Step 1 (Teacher): Train a NeRF model first, exploiting NeRF's advantage in geometric continuity and applying depth regularization from the noisy LiDAR point clouds.

- Step 2 (Student): Use NeRF-generated high-quality depth maps and normal maps as supervision signals to initialize and train the 3DGS model.

Advantage: This approach significantly corrects geometric errors in weak-texture regions such as road surfaces and sky, ensuring flat roads, clear lane markings, and artifact-free novel view synthesis.
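The teacher-student split can be written as two extra loss terms. The sketch below is one plausible formulation, not Kangmou's published losses: the teacher NeRF adds a masked L1 depth term against LiDAR (pixels without a LiDAR return are ignored), and the student 3DGS adds a depth term and a normal term (1 minus cosine similarity) against the teacher's rendered maps on top of its usual photometric loss. The weights are placeholder values, and plain Python lists stand in for rendered depth and normal images.

```python
import math
from typing import Sequence, Tuple

Normal = Tuple[float, float, float]

def masked_l1(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean |pred - target| over pixels with a valid target (target > 0, e.g. a LiDAR return)."""
    pairs = [(p, t) for p, t in zip(pred, target) if t > 0.0]
    if not pairs:
        return 0.0
    return sum(abs(p - t) for p, t in pairs) / len(pairs)

def normal_loss(pred: Sequence[Normal], target: Sequence[Normal]) -> float:
    """Mean (1 - cosine similarity) between predicted and reference normals."""
    total = 0.0
    for a, b in zip(pred, target):
        dot = sum(x * y for x, y in zip(a, b))
        norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        total += 1.0 - dot / norms
    return total / len(pred)

def teacher_geometry_loss(nerf_depth: Sequence[float], lidar_depth: Sequence[float],
                          w_lidar: float = 0.1) -> float:
    """Step 1: term regularizing the NeRF's rendered depth with sparse, noisy LiDAR depth."""
    return w_lidar * masked_l1(nerf_depth, lidar_depth)

def student_loss(photometric: float, gs_depth: Sequence[float], gs_normals: Sequence[Normal],
                 nerf_depth: Sequence[float], nerf_normals: Sequence[Normal],
                 w_depth: float = 0.5, w_normal: float = 0.1) -> float:
    """Step 2: 3DGS photometric loss plus depth and normal supervision from the NeRF teacher."""
    return (photometric
            + w_depth * masked_l1(gs_depth, nerf_depth)
            + w_normal * normal_loss(gs_normals, nerf_normals))
```

The design point is that the student never fits the sparse LiDAR directly: it inherits dense, smooth depth and normals from the teacher, which is what pulls textureless surfaces such as roads and sky toward consistent geometry.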

Large-scale block-based training

For city-scale scenes (>100,000 m²), a block-based strategy in BEV space is used, supporting multi-GPU parallel training. Adjacent blocks share overlap regions, which are used to eliminate seams between blocks.
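The block-based strategy can be sketched as a regular grid over the scene's bird's-eye-view extent, with every block padded by an overlap margin so that points near a boundary are trained in both neighboring blocks. The block size, overlap width, and round-robin GPU assignment below are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in meters, BEV plane

def bev_blocks(extent: Box, block_m: float, overlap_m: float) -> List[Box]:
    """Tile the extent into block_m x block_m cells, each padded by overlap_m and clipped to the extent."""
    x0, y0, x1, y1 = extent
    blocks = []
    y = y0
    while y < y1:
        x = x0
        while x < x1:
            blocks.append((max(x0, x - overlap_m), max(y0, y - overlap_m),
                           min(x1, x + block_m + overlap_m), min(y1, y + block_m + overlap_m)))
            x += block_m
        y += block_m
    return blocks

def points_in_block(points: Sequence[Tuple[float, float]], block: Box) -> List[int]:
    """Indices of BEV points inside a padded block; points in overlaps belong to several blocks."""
    bx0, by0, bx1, by1 = block
    return [i for i, (x, y) in enumerate(points) if bx0 <= x <= bx1 and by0 <= y <= by1]

def assign_to_gpus(num_blocks: int, num_gpus: int) -> List[int]:
    """Round-robin block-to-GPU assignment for parallel training."""
    return [i % num_gpus for i in range(num_blocks)]

if __name__ == "__main__":
    blocks = bev_blocks((0.0, 0.0, 400.0, 300.0), 200.0, 20.0)  # 120,000 m² scene
    for block, gpu in zip(blocks, assign_to_gpus(len(blocks), 4)):
        print(gpu, block)
```

Because each block is trained with its neighbors' border content, the independently trained blocks agree near the seams and can be merged by keeping, for each region, the block whose unpadded cell contains it.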

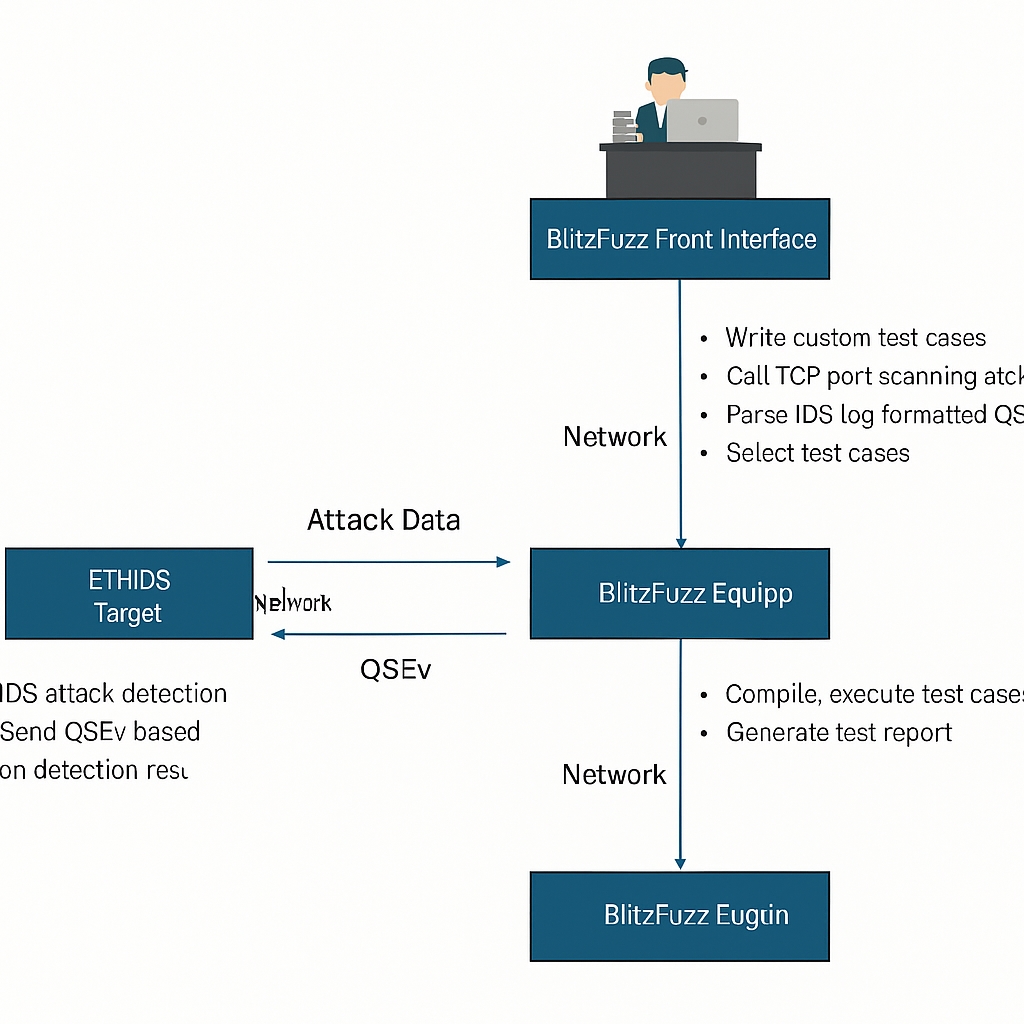

04 Hybrid rendering for closed-loop testing

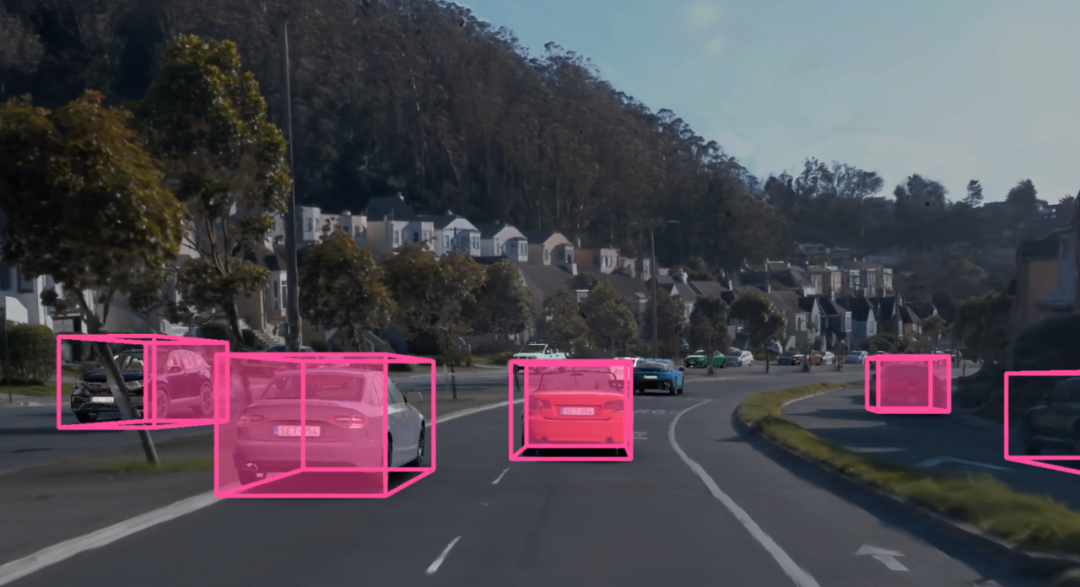

This is the solution's core technical moat. Pure 3DGS/NeRF reconstructions are visually realistic, but they are essentially "3D video": they replay what was recorded and are difficult to modify. To achieve closed-loop testing, Kangmou adopts a hybrid rendering approach.

Why static 3DGS plus dynamic OpenSCENARIO?

Existing 4DGS techniques can reproduce dynamic scenes but lack interactivity: you cannot control the braking or lane changes of vehicles captured in the recordings. Kangmou's strategy is to decouple background and foreground:

- Background: Use static 3DGS models generated by World Extractor to ensure absolute realism of environment textures, lighting, and geometry.

- Foreground: Use aiSim's physics engine to generate dynamic mesh objects such as vehicles and pedestrians. These objects are driven by scenarios described in the OpenSCENARIO format, enabling generalization and interaction.

This combination satisfies perception algorithms' need for out-of-distribution data while meeting control algorithms' requirements for interactive testing.

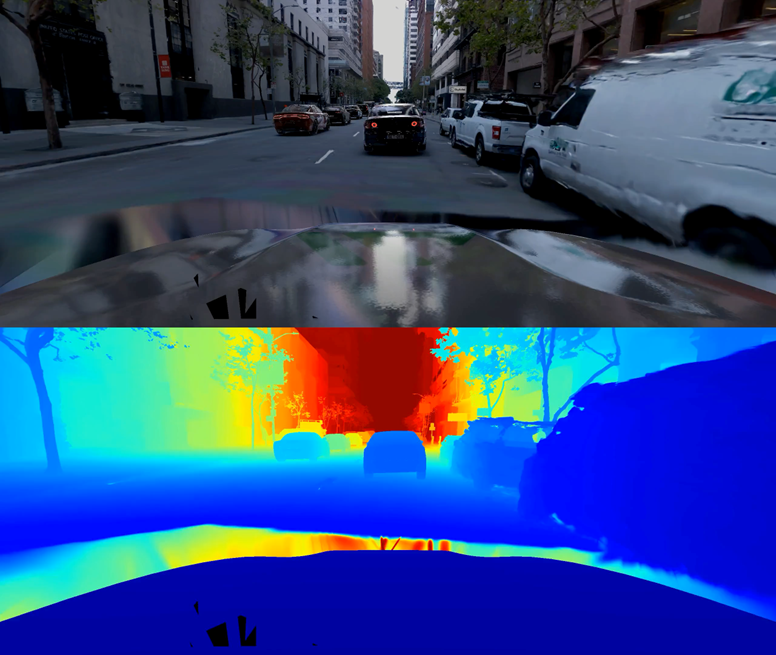

Depth compositing techniques

Making a virtual vehicle drive correctly on a real road and be occluded by a real tree requires precise depth compositing:

- The system computes the background depth map of the 3DGS model and the aiSim foreground Z-buffer in real time.

- Occlusion handling: Precisely handles occlusion relationships such as a virtual vehicle behind a real tree, with anti-aliased edges.

- Lighting blending: Extracts environment lighting from the neural field and applies it as image-based lighting (IBL) to illuminate virtual objects, so their shadows and reflections match the background.
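The per-pixel occlusion test and edge blending can be sketched as follows, assuming the 3DGS background supplies a color and depth per pixel and the aiSim foreground supplies a color, anti-aliased coverage (alpha), and Z-buffer depth in the same metric units. Replacing a hard depth comparison with a smooth sigmoid over the depth difference is one common way to avoid jagged occlusion boundaries; the `softness_m` value is a hypothetical tuning parameter, and lighting blending (IBL) is not modeled here.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]

def composite_pixel(bg_rgb: RGB, bg_depth_m: float, fg_rgb: RGB, fg_alpha: float,
                    fg_depth_m: float, softness_m: float = 0.05) -> RGB:
    """Blend a virtual foreground sample over a reconstructed background sample."""
    if fg_alpha <= 0.0 or math.isinf(fg_depth_m):
        return bg_rgb  # no virtual object rendered at this pixel
    # Soft depth test: ~1 when the virtual object is in front of the background, ~0 when behind.
    z = (bg_depth_m - fg_depth_m) / softness_m
    z = max(-60.0, min(60.0, z))  # clamp so math.exp cannot overflow
    visibility = 1.0 / (1.0 + math.exp(-z))
    a = fg_alpha * visibility
    return tuple(a * f + (1.0 - a) * b for f, b in zip(fg_rgb, bg_rgb))

if __name__ == "__main__":
    tree, car = (0.1, 0.5, 0.1), (0.8, 0.1, 0.1)
    print(composite_pixel(tree, 10.0, car, 1.0, 30.0))  # car behind the tree: tree color
    print(composite_pixel(tree, 20.0, car, 0.5, 5.0))   # car edge pixel in front: 50/50 blend
```

The alpha term handles anti-aliasing along the car's silhouette, while the sigmoid handles the depth boundary where the car passes behind real geometry.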

Multimodal consistency

For LiDAR simulation, Kangmou does not use simple depth projection. Instead, it ray-traces against 3D Gaussian spheres:

- Mechanism: The 3D Gaussian spheres serve as proxy geometry for building bounding volume hierarchy (BVH) acceleration structures, and LiDAR rays intersect these Gaussians directly.

- Multimodal outputs: The system recovers not only distance but also intensity by decoding neural features, synthesizing point clouds with realistic reflectance characteristics.

Because camera and LiDAR are both rendered from the same Gaussian scene, the two modalities remain strictly synchronized in time and space.
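A minimal sketch of LiDAR ray casting against 3D Gaussians, under several simplifying assumptions: Gaussians are axis-aligned (real 3DGS primitives also carry a rotation), a per-Gaussian scalar `intensity` stands in for the decoded neural features, and a brute-force loop replaces the BVH query. For each Gaussian the sketch finds the point of peak density along the ray, t* = dᵀΣ⁻¹(μ − o) / (dᵀΣ⁻¹d), then alpha-composites the hits front to back to obtain range and intensity.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Gaussian:
    mean: Vec3
    scale: Vec3       # per-axis standard deviation (axis-aligned simplification)
    opacity: float
    intensity: float  # hypothetical stand-in for a decoded reflectance feature

def peak_along_ray(g: Gaussian, origin: Vec3, direction: Vec3) -> Tuple[float, float]:
    """Return (t*, density at t*) for the ray origin + t * direction."""
    inv_var = [1.0 / (s * s) for s in g.scale]
    diff = [m - o for m, o in zip(g.mean, origin)]            # mu - o
    num = sum(d * iv * x for d, iv, x in zip(direction, inv_var, diff))
    den = sum(d * d * iv for d, iv in zip(direction, inv_var))
    t = num / den
    offset = [x - t * d for x, d in zip(diff, direction)]       # mu - (o + t * d)
    maha2 = sum(iv * v * v for iv, v in zip(inv_var, offset))   # squared Mahalanobis distance
    return t, math.exp(-0.5 * maha2)

def cast_lidar_ray(gaussians: Sequence[Gaussian], origin: Vec3, direction: Vec3,
                   min_weight: float = 0.5) -> Optional[Tuple[float, float]]:
    """Return (range_m, intensity) for one LiDAR ray, or None if nothing opaque enough is hit."""
    norm = math.sqrt(sum(c * c for c in direction))
    unit = tuple(c / norm for c in direction)
    hits = []
    for g in gaussians:  # a real renderer would traverse a BVH here
        t, density = peak_along_ray(g, origin, unit)
        if t > 0.0:
            hits.append((t, g.opacity * density, g.intensity))
    hits.sort()  # front to back
    transmittance, w_sum, range_acc, inten_acc = 1.0, 0.0, 0.0, 0.0
    for t, alpha, inten in hits:
        w = transmittance * alpha
        w_sum += w
        range_acc += w * t
        inten_acc += w * inten
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # early termination once the ray is effectively blocked
    if w_sum < min_weight:
        return None
    return range_acc / w_sum, inten_acc / w_sum
```

Using the same compositing weights as the camera renderer is what keeps the LiDAR range consistent with the depth the camera image implies.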

05 Scene generalization and engineering deployment

Based on the above architecture, Kangmou achieves a transition from reproduction to generalization.

Dynamic traffic flow generalization

In reconstructed high-fidelity static maps, testers can configure traffic flow freely via OpenSCENARIO. For example, in a reconstructed San Francisco road segment, congestion, cut-in, or accident scenarios can be generated algorithmically. This significantly expands ODD coverage and addresses the scarcity of corner cases that are rarely encountered in real road tests.
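Generalization on a fixed reconstructed map amounts to sweeping scenario parameters. A sketch of a cut-in sweep follows; the parameter names (`EgoSpeed`, `CutInGap`, `LaneChangeDuration`), value ranges, and the time-headway filter are hypothetical. The resulting dictionaries are the kind of values that could be substituted into an OpenSCENARIO file's parameter declarations, one concrete scenario per variant.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Sequence

@dataclass(frozen=True)
class CutInVariant:
    ego_speed_mps: float   # ego vehicle speed
    gap_m: float           # longitudinal gap when the other vehicle starts cutting in
    lane_change_s: float   # duration of the cut-in lane change

    @property
    def headway_s(self) -> float:
        """Time headway of the ego vehicle to the cutting-in vehicle."""
        return self.gap_m / self.ego_speed_mps

def sweep_cut_ins(speeds: Sequence[float], gaps: Sequence[float], durations: Sequence[float],
                  max_headway_s: float = 2.0) -> List[CutInVariant]:
    """Cartesian sweep over the parameters, keeping only variants tight enough to be challenging."""
    variants = (CutInVariant(s, g, d) for s, g, d in product(speeds, gaps, durations))
    return [v for v in variants if v.headway_s <= max_headway_s]

def to_parameters(v: CutInVariant) -> Dict[str, float]:
    """Hypothetical parameter names to substitute into an OpenSCENARIO template."""
    return {"EgoSpeed": v.ego_speed_mps, "CutInGap": v.gap_m, "LaneChangeDuration": v.lane_change_s}

if __name__ == "__main__":
    for v in sweep_cut_ins([10.0, 20.0], [10.0, 30.0], [2.0, 4.0]):
        print(to_parameters(v), round(v.headway_s, 2))
```

Filtering on headway is just one way to concentrate test effort on critical cases; the same pattern applies to congestion density or accident placement.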

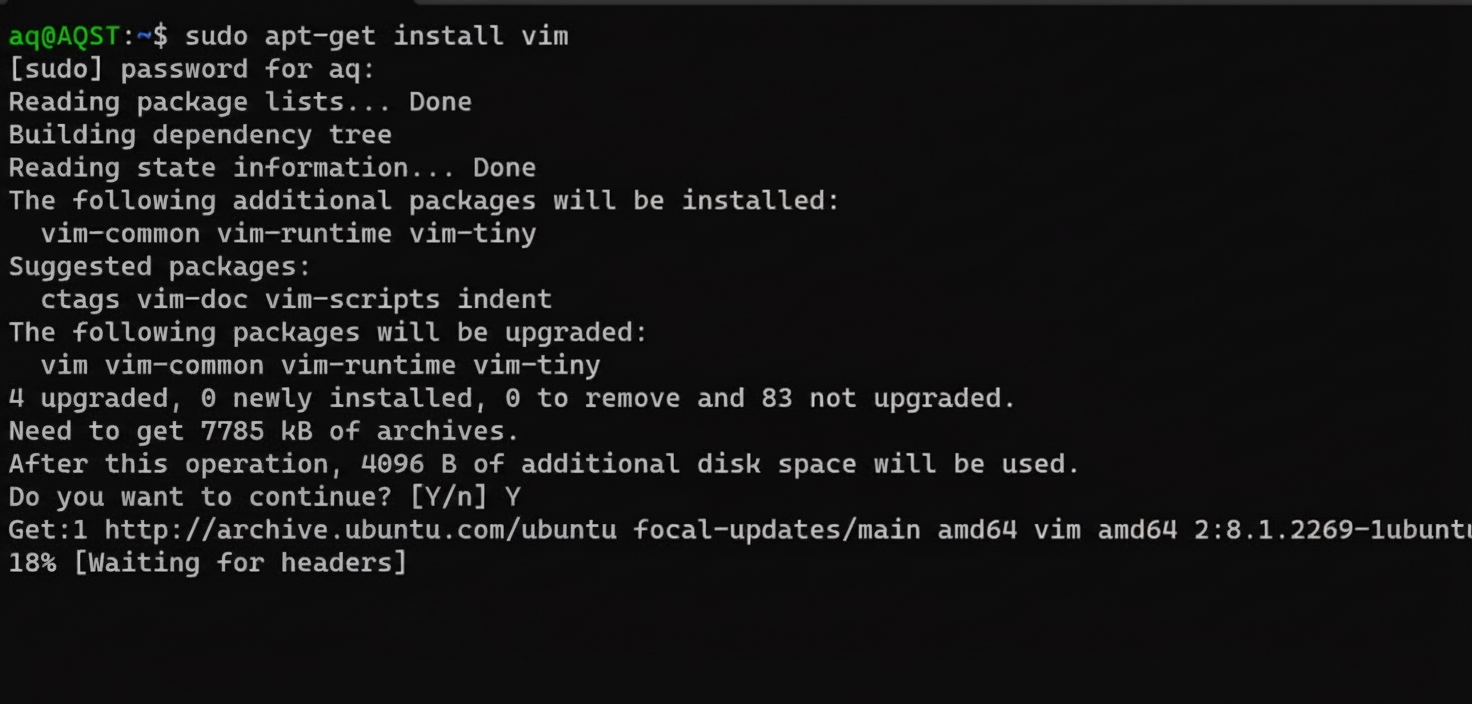

HiL integration and real-time performance

The toolchain has been validated with multiple OEMs and Tier 1 suppliers and supports hardware-in-the-loop integration with mainstream domain controllers:

- Video injection: Supports injection via HDMI/DP or GMSL capture cards, feeding mixed-rendered video streams directly into domain controllers such as NVIDIA Orin, NVIDIA Thor, and Horizon J6.

- Real-time capability: Single-node deployments (for example, a 4-GPU simulation workstation) can achieve high-frame-rate real-time simulation for configurations like 12 cameras plus LiDAR. Distributed cluster deployments can further increase performance and rendering quality.
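As a rough illustration of what "real time" implies for such a rig, the arithmetic below computes the per-camera render budget when cameras are split evenly across GPUs and rendered one after another, and the raw bandwidth of one injected video stream. The 30 Hz frame rate, 1920×1080 resolution, and 2 bytes per pixel (for example YUV 4:2:2) are assumptions, not figures from the solution; real pipelines may render sensors concurrently and use other resolutions.

```python
import math

def per_camera_budget_ms(num_cameras: int, num_gpus: int, frame_rate_hz: float,
                         reserved_fraction: float = 0.0) -> float:
    """Render time per camera if each GPU renders its cameras sequentially every frame.
    reserved_fraction: assumed share of frame time kept for other work such as LiDAR."""
    frame_ms = 1000.0 / frame_rate_hz
    cameras_per_gpu = math.ceil(num_cameras / num_gpus)
    return frame_ms * (1.0 - reserved_fraction) / cameras_per_gpu

def stream_bits_per_s(width: int, height: int, bytes_per_pixel: int, frame_rate_hz: int) -> int:
    """Uncompressed video bandwidth of one injected camera stream."""
    return width * height * bytes_per_pixel * frame_rate_hz * 8

if __name__ == "__main__":
    print(per_camera_budget_ms(12, 4, 30.0))            # about 11.1 ms per camera
    print(stream_bits_per_s(1920, 1080, 2, 30) / 1e9)   # about 0.995 Gbit/s per camera
```

With 12 cameras on a 4-GPU workstation, each GPU handles 3 cameras, leaving about 11.1 ms per camera at 30 Hz, and each uncompressed 1080p stream needs roughly 1 Gbit/s of injection bandwidth.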

Conclusion

The dual-modal simulation testing solution is not a simple stack of tools but an engineering decomposition of the challenges of autonomous driving testing. NeRF2GS brings real-world scenes into the simulator, and the aiSim physics engine makes those worlds dynamic. This hybrid rendering mode, combining static high-fidelity environments with fully generalized dynamic scenes, provides a data foundation for end-to-end closed-loop validation from perception to planning and control, and reduces reliance on high-cost road testing.

ALLPCB