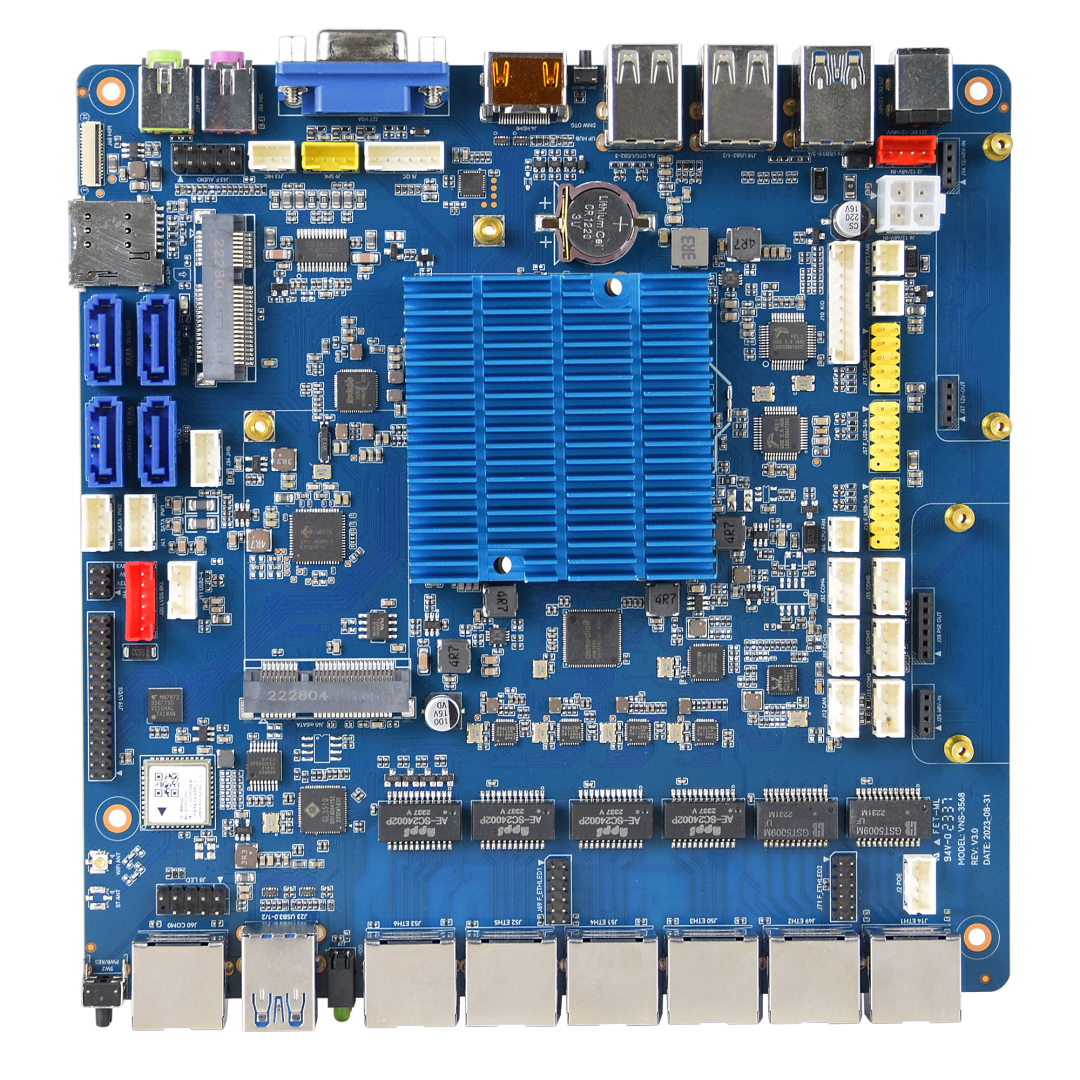

Embedded machine learning refers to deploying machine learning models on resource-constrained devices such as microcontrollers, IoT devices, and smart sensors. These devices typically have limited compute, storage, and power budgets. This article outlines the characteristics of embedded machine learning applications and the software and development environments commonly used to build them.

Characteristics and advantages of embedded machine learning

Embedded devices often must run on very little power to extend battery life, which is especially important for battery-powered edge nodes. Embedded machine learning must support local, real-time data processing and decision making to keep latency low. Because these devices usually have limited compute capacity (for example, low-clock-rate processors), storage, and memory, models must be highly optimized. Many embedded ML applications run entirely at the edge instead of sending data to the cloud, which reduces network bandwidth requirements and privacy risks. As a result, embedded ML is frequently tailored to specific application scenarios such as voice recognition, image classification, or equipment fault detection.
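To make the memory constraint concrete, the following Python sketch estimates the weight footprint of a small fully connected network at two numeric precisions. The layer sizes and the 256 KiB flash budget are hypothetical values chosen for illustration, and the estimate deliberately ignores activation buffers, runtime code, and per-layer metadata, all of which also consume memory on a real device.

```python
# Illustrative memory-footprint estimate for a small fully connected model.
# Layer sizes and the flash budget below are hypothetical, not from a real device.

FLASH_BUDGET_BYTES = 256 * 1024  # hypothetical flash space reserved for model weights


def dense_params(n_in: int, n_out: int) -> int:
    """Number of weights plus biases in one fully connected layer."""
    return n_in * n_out + n_out


def param_count(layer_sizes: list[int]) -> int:
    """Total parameters for a chain of fully connected layers."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


def weight_bytes(layer_sizes: list[int], bytes_per_param: int) -> int:
    """Storage needed for the weights alone (no activations or runtime overhead)."""
    return param_count(layer_sizes) * bytes_per_param


if __name__ == "__main__":
    layers = [490, 128, 64, 12]  # hypothetical: 490 input features, 12 output classes
    for precision, width in (("float32", 4), ("int8", 1)):
        size = weight_bytes(layers, width)
        print(f"{precision}: {size} bytes, fits budget: {size <= FLASH_BUDGET_BYTES}")
```

With these assumed sizes the network has 71,884 parameters: about 281 KiB of weights in float32, which exceeds the assumed budget, versus about 70 KiB in int8, which fits.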

By processing data locally, embedded ML can achieve very low response times, making it suitable for applications that require real-time reactions, such as autonomous driving or industrial automation. Edge processing also reduces the need to transmit data to cloud services, which can lower the risk of data leakage and reduce dependence on cloud infrastructure; at scale, this can also reduce operating costs. Additionally, embedded devices can run ML models offline without network connectivity, which is valuable in remote environments.

However, limited compute resources make running complex or large models difficult. Models typically require pruning, quantization, and other optimizations to run efficiently in constrained environments, which increases development complexity and time. Remote updates or model replacement can be challenging for field-deployed devices, requiring robust firmware update mechanisms. Storage constraints also mean embedded ML often works with smaller datasets or simplified processing pipelines, which can affect model performance.
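The two optimizations named above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming per-tensor affine (asymmetric) int8 post-training quantization and unstructured magnitude pruning; the function names and sample weights are hypothetical. Production toolchains typically add refinements such as per-channel scales, calibration on representative data, and fine-tuning after pruning.

```python
# Minimal sketches of post-training int8 quantization and magnitude pruning.
# Function names and sample weights are hypothetical, for illustration only.

QMIN, QMAX = -128, 127  # signed 8-bit integer range


def quantize_int8(values: list[float]) -> tuple[list[int], float, int]:
    """Per-tensor affine quantization: real ~= (q - zero_point) * scale."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (QMAX - QMIN) if hi > lo else 1.0
    zero_point = max(QMIN, min(QMAX, round(QMIN - lo / scale)))
    q = [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q: list[int], scale: float, zero_point: int) -> list[float]:
    """Map int8 codes back to approximate real values."""
    return [(qi - zero_point) * scale for qi in q]


def magnitude_prune(values: list[float], sparsity: float) -> list[float]:
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(values) * sparsity)
    smallest = sorted(range(len(values)), key=lambda i: abs(values[i]))[:k]
    pruned = list(values)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    weights = [0.82, -0.41, 0.05, -0.9, 0.33, -0.02, 0.6, 0.11]
    q, scale, zp = quantize_int8(weights)
    restored = dequantize(q, scale, zp)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 codes:", q)
    print(f"scale={scale:.6f} zero_point={zp} max_error={max_err:.6f}")
    print("50% pruned:", magnitude_prune(weights, 0.5))
```

Each stored weight shrinks from 4 bytes to 1, at the cost of a rounding error of at most half a quantization step for values inside the observed range; pruning adds sparsity that a runtime can exploit only if it supports sparse storage or kernels.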

Overall, embedded ML offers real-time, privacy-preserving, and low-power solutions while posing challenges due to resource limits. Advances in hardware and more efficient model optimization techniques are rapidly expanding embedded ML application scenarios.

Common machine learning software: features, advantages, and limitations

There are many machine learning frameworks and libraries, each with distinct characteristics, strengths, and weaknesses. The following summarizes several mainstream options.

1. TensorFlow

TensorFlow is an open-source machine learning framework developed by Google, primarily for building and training deep learning models. It supports multiple programming languages, most commonly Python, and also offers C++, Java, and Go interfaces. TensorFlow is widely used in industry and academia.

Earlier TensorFlow versions (1.x) used static computation graphs: the entire graph was defined before execution, which aids optimization and deployment. Since TensorFlow 2.0, eager execution is the default, making code more intuitive and easier to debug, while tf.function can still compile Python functions into graphs when performance matters. TensorFlow supports multiple hardware backends, including CPUs, GPUs, and TPUs, and runs in environments ranging from mobile devices to large servers. Its ecosystem includes TensorBoard for visualization, TensorFlow Lite for mobile and embedded deployment, and TensorFlow Serving for production deployment. Compilers such as XLA can further improve runtime performance.
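Why defining the whole graph before execution helps optimization can be illustrated with a toy example. The Python sketch below is not TensorFlow's API: the Node format and the constant_fold and run helpers are hypothetical. It declares a small graph for y = x * (2 + 3) as data, applies a constant-folding pass that precomputes the subexpression that does not depend on any input, and only then executes the graph.

```python
# Toy define-then-run graph with a constant-folding pass.
# Node format and helper names are hypothetical; this is not TensorFlow's API.
import operator
from dataclasses import dataclass

OPS = {"add": operator.add, "mul": operator.mul}


@dataclass(frozen=True)
class Node:
    name: str
    op: str              # "const", "input", "add", or "mul"
    inputs: tuple = ()   # names of input nodes (binary ops use exactly two)
    value: float = 0.0   # only meaningful for "const" nodes


def constant_fold(graph: list[Node]) -> list[Node]:
    """Replace each op whose inputs are all constants with a precomputed constant."""
    consts: dict[str, float] = {}
    folded = []
    for node in graph:  # nodes are assumed to be in topological order
        if node.op == "const":
            consts[node.name] = node.value
            folded.append(node)
        elif node.op in OPS and all(i in consts for i in node.inputs):
            a, b = (consts[i] for i in node.inputs)
            consts[node.name] = OPS[node.op](a, b)
            folded.append(Node(node.name, "const", value=consts[node.name]))
        else:
            folded.append(node)
    return folded


def run(graph: list[Node], feeds: dict[str, float]) -> dict[str, float]:
    """Execute the graph once, reading input nodes from `feeds`."""
    env: dict[str, float] = {}
    for node in graph:
        if node.op == "const":
            env[node.name] = node.value
        elif node.op == "input":
            env[node.name] = feeds[node.name]
        else:
            a, b = (env[i] for i in node.inputs)
            env[node.name] = OPS[node.op](a, b)
    return env


# y = x * (2 + 3), declared in full before anything runs
GRAPH = [
    Node("two", "const", value=2.0),
    Node("three", "const", value=3.0),
    Node("sum", "add", ("two", "three")),
    Node("x", "input"),
    Node("y", "mul", ("x", "sum")),
]

if __name__ == "__main__":
    optimized = constant_fold(GRAPH)
    print([n.op for n in optimized])        # "sum" is now a constant
    print(run(optimized, {"x": 4.0})["y"])  # 20.0
```

Because the optimizer sees the full graph up front, the addition runs once at optimization time instead of on every inference, which is the kind of saving that matters on a slow embedded processor.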

TensorFlow benefits from a mature ecosystem, extensive documentation, and large community support. It is well suited for large-scale, enterprise-grade applications and offers comprehensive solutions from development to deployment. The learning curve can be steeper compared with some frameworks, and earlier 1.x static graph concepts were less friendly to beginners, although 2.x has improved usability.

2. PyTorch

PyTorch is an open-source deep learning framework originally developed at Facebook AI Research (now part of Meta AI) and now governed by the PyTorch Foundation. It is known for its dynamic computation graphs and strong adoption in the research community. It is primarily Python-based and also provides a C++ interface.

PyTorch constructs computation graphs dynamically at runtime, which simplifies debugging and model modification. Its syntax is concise and Pythonic, making it well suited for rapid prototyping. PyTorch provides robust GPU acceleration and supports conversion to deployable formats via TorchScript. A vibrant ecosystem and active research community mean many new models and research prototypes appear first in PyTorch.
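To illustrate what building the graph dynamically at runtime means, the following plain-Python sketch records each operation as it executes and then backpropagates through whatever graph that run produced. It is a minimal scalar reverse-mode autodiff in the spirit of PyTorch's autograd, not PyTorch's API; the Value class and the function f are hypothetical. Because ordinary Python control flow decides which operations are recorded, different inputs can yield different graphs.

```python
# Minimal define-by-run (dynamic graph) scalar autodiff.
# Hypothetical illustration only; this is not PyTorch's API.


class Value:
    """A scalar that records the operations applied to it as they execute."""

    def __init__(self, data: float, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # Values this one was computed from
        self._local_grads = local_grads  # map upstream grad to each parent's grad

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    __radd__ = __add__
    __rmul__ = __mul__

    def backward(self):
        """Reverse-mode pass over the graph recorded during the forward run."""
        order, seen = [], set()

        def visit(v):  # depth-first topological sort
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, local in zip(v._parents, v._local_grads):
                parent.grad += local(v.grad)


def f(x: Value) -> Value:
    # The branch taken at runtime determines which operations get recorded.
    y = x * x
    if x.data > 0:
        y = y + 3 * x
    return y


if __name__ == "__main__":
    x = Value(2.0)
    out = f(x)
    out.backward()
    print(out.data, x.grad)  # 10.0 7.0  (d/dx of x^2 + 3x at x = 2)
```

For x = -1 the branch is skipped, so the recorded graph contains only the multiplication and the gradient is -2. The debugging benefit follows directly: the graph is just the Python code that ran, so standard breakpoints and print statements work inside it.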

PyTorch is praised for flexibility and ease of learning, especially for developers with Python experience. Production deployment support historically lagged behind TensorFlow's, but tools such as TorchScript have narrowed the gap. Its tooling for mobile and embedded targets has historically been less mature than TensorFlow's, and frequent releases can occasionally introduce compatibility or stability issues.

3. Other frameworks

scikit-learn is a Python library for traditional machine learning, built on NumPy and SciPy. It provides algorithms for classification, regression, and clustering, along with tools for data preprocessing and model evaluation.

Keras is a high-level neural network API originally created by François Chollet to facilitate rapid model construction and experimentation. Keras was initially backend-agnostic (supporting TensorFlow, Theano, and CNTK), was later integrated into TensorFlow as its high-level API, and with Keras 3 once again supports multiple backends (JAX, TensorFlow, and PyTorch).

Apache MXNet is a flexible and efficient open-source deep learning framework developed by multiple contributors; it has been adopted in some cloud services and supports multiple language interfaces, including Python, Scala, C++, R, and Java. The project was retired to the Apache Attic in 2023 and is no longer actively developed.

Choosing the right software depends on project requirements, developer familiarity, deployment environment, and resource constraints. For deep learning, TensorFlow and PyTorch are primary choices with distinct advantages. For traditional machine learning tasks, scikit-learn is commonly used. Keras suits rapid prototyping and beginners, while MXNet may be advantageous in specific scenarios.

Model, software, and hardware combinations for ML development environments

A machine learning development environment encompasses the software and hardware used to design, build, train, debug, and deploy models. These environments can be local desktop setups or cloud-based platforms. The following are common development environments with their characteristics, advantages, and limitations.

1. Jupyter Notebook

Jupyter Notebook is an open-source interactive notebook environment that allows code execution, data visualization, and inline documentation in a browser. It is widely used in data science and machine learning.

Jupyter supports interactive development where code and results coexist in a single document, making rapid iteration and experimentation convenient. While Python is the dominant language, Jupyter also supports R, Julia, and others. Extensions add features such as enhanced visualization and version control. Notebook files (.ipynb) are easy to share and can be rendered in web interfaces.

Jupyter is user-friendly for beginners and professionals, offering strong visualization capabilities via libraries like Matplotlib and Seaborn. Its limitations include constrained performance for large-scale or distributed computing, more challenging code management and version control for large projects, and dependency management complexities for projects with many external libraries.

2. Google Colab

Google Colab is a cloud-based environment built on Jupyter Notebook, provided by Google. It offers free access to GPUs and TPUs for machine learning experiments.

Colab runs on Google servers, so no local software installation is required. The platform provides free GPU/TPU acceleration suitable for training many deep learning models and integrates with Google Drive for storage and sharing. Colab supports real-time collaboration and easy sharing of notebooks.

Colab requires minimal setup and is suitable for small-scale deep learning experiments and collaborative work. Limitations include constrained resources in the free tier, potential interruptions during prolonged training, less convenient file management for large projects compared to local tools, and dependence on stable internet connectivity.

3. Other environments

Anaconda is an open-source Python and R distribution designed for data science and machine learning; it bundles many analysis tools and includes Jupyter Notebook.

Visual Studio Code is a free code editor from Microsoft, built on the open-source Code - OSS project; with Python extensions it can serve as a powerful machine learning development environment.

AWS SageMaker is a managed machine learning service from Amazon Web Services for building, training, and deploying models at scale.

PyCharm is a professional Python IDE from JetBrains that offers comprehensive coding, debugging, and testing tools commonly used in Python development and machine learning.

Each environment has trade-offs; the right choice depends on project needs, skill level, project scale, and hardware/resource requirements.

Conclusion

Embedded machine learning enables edge devices to perform complex data analysis and decision making with low power and real-time performance. Continued hardware improvements and more efficient development tools are expanding embedded ML applications across smart cities, medical devices, autonomous systems, and industrial IoT. As development environments become more accessible and open-source tools proliferate, integrating machine learning into embedded systems is becoming more feasible for developers and engineers.

ALLPCB