As artificial intelligence technology advances, the AI engineer role is increasingly sought after. To succeed in interviews, candidates should demonstrate both strong technical skills and problem-solving ability. Common interview topics include probability and statistics, machine learning algorithms, deep learning frameworks, model optimization, and recurrent neural networks.

1. What is a major problem when using a fully connected network to process sequential data?

- A. Requires a large amount of training data

- B. Data must be high-dimensional

- C. Cannot effectively capture temporal dependencies in the data

- D. Can only process static image data

Answer: C

Explanation: A fully connected network treats every input position as an independent feature and shares no weights across time steps, so it struggles to capture the temporal dependencies in sequential data. This is a key limitation for sequence modeling.

2. If you use a fully connected layer to handle long sequences, what problem are you most likely to encounter?

- A. Computation becomes too fast

- B. Input dimensionality becomes too high

- C. Model becomes too simple

- D. Data preprocessing becomes easier

Answer: B

Explanation: Flattening long sequences into vectors for fully connected layers causes input dimensionality to increase sharply, raising computational cost and risk of overfitting.
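To make the growth concrete, here is a rough sketch that counts the parameters of a single dense layer applied to a flattened sequence. The function name `dense_param_count` and the example sizes (128 features per time step, 256 hidden units) are illustrative assumptions, not values from any particular model.

```python
def dense_param_count(seq_len: int, feat_dim: int, hidden_units: int) -> int:
    """Weights plus biases of one dense layer fed a flattened sequence."""
    input_dim = seq_len * feat_dim  # flattening multiplies length by feature size
    return input_dim * hidden_units + hidden_units


# Illustrative sizes only: the count grows linearly with sequence length.
for seq_len in (10, 100, 1000):
    print(seq_len, dense_param_count(seq_len, feat_dim=128, hidden_units=256))
```

With these assumed sizes, a 1000-step sequence already needs more than 32 million parameters in the first layer alone.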

3. What is the main limitation of fully connected layers when handling variable-length sequences?

- A. They can automatically adjust input length

- B. They require consistent input lengths

- C. They can handle sequences of any length

- D. They require no preprocessing steps

Answer: B

Explanation: The weight matrix of a fully connected layer is sized for one specific input length, so the layer requires fixed-length inputs. Variable-length sequences must be padded or truncated to a common length before being fed to the network.
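A minimal sketch of bringing sequences to a common length is shown below. The helper name `pad_or_truncate`, the right-side padding, and the pad value 0 are all assumptions made for illustration; real pipelines may pad on the left or mask the padded positions.

```python
from typing import List


def pad_or_truncate(seq: List[int], target_len: int, pad_value: int = 0) -> List[int]:
    """Return a new list with exactly target_len elements."""
    if len(seq) >= target_len:
        return seq[:target_len]  # keep the first target_len items
    return seq + [pad_value] * (target_len - len(seq))  # pad on the right


batch = [[5, 3], [7, 1, 4, 9, 2], [8]]
fixed = [pad_or_truncate(s, target_len=4) for s in batch]
print(fixed)  # [[5, 3, 0, 0], [7, 1, 4, 9], [8, 0, 0, 0]]
```

Note that truncation discards information and padding adds values the model must learn to ignore, which is part of why fixed-length inputs are a limitation.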

4. One-hot encoding is mainly used to handle which type of data?

- A. Continuous numerical data

- B. Categorical data

- C. Time series data

- D. Image data

Answer: B

Explanation: One-hot encoding is commonly used to convert categorical data into a form suitable for machine learning algorithms.
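As a small illustration, the sketch below one-hot encodes a list of color labels using only the standard library. The helper names `build_vocab` and `one_hot` are hypothetical, and the column order (alphabetical here) is an arbitrary choice.

```python
from typing import Dict, List, Sequence


def build_vocab(values: Sequence[str]) -> Dict[str, int]:
    """Assign each distinct category a column index (sorted for determinism)."""
    return {cat: i for i, cat in enumerate(sorted(set(values)))}


def one_hot(value: str, vocab: Dict[str, int]) -> List[int]:
    """Return a vector with a single 1 at the category's column."""
    vec = [0] * len(vocab)
    vec[vocab[value]] = 1
    return vec


colors = ["red", "green", "blue", "green"]
vocab = build_vocab(colors)  # {'blue': 0, 'green': 1, 'red': 2}
encoded = [one_hot(c, vocab) for c in colors]
print(encoded)  # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```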

5. After applying one-hot encoding, how are relationships between original categories treated?

- A. They have numerical order relationships

- B. They are independent with no numerical order

- C. They form a continuous numerical range

- D. They have intrinsic weight differences

Answer: B

Explanation: One-hot encoding represents each category as an independent binary feature, removing any implied ordinal relationship between categories.
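One way to see this is to compare distances between categories under the two encodings. The sketch below uses an arbitrary integer assignment (red=1, green=2, blue=3) chosen purely for illustration.

```python
from itertools import combinations

categories = ["red", "green", "blue"]

# Integer (label) encoding: an arbitrary assignment that implies an order.
label = {"red": 1, "green": 2, "blue": 3}

# One-hot encoding: one column per category.
one_hot = {
    c: [1 if i == j else 0 for j in range(len(categories))]
    for i, c in enumerate(categories)
}


def sq_dist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


pairs = list(combinations(categories, 2))
label_dists = {(a, b): (label[a] - label[b]) ** 2 for a, b in pairs}
onehot_dists = {(a, b): sq_dist(one_hot[a], one_hot[b]) for a, b in pairs}

print(label_dists)   # red and blue appear farther apart than red and green
print(onehot_dists)  # every pair of distinct categories is equally far apart
```

Under the integer encoding a distance-based or linear model would treat red and blue as more different than red and green, even though no such ordering exists in the data.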

6. What is a significant drawback of one-hot encoding?

- A. Reduces data dimensionality

- B. Improves algorithm performance

- C. Produces a very sparse dataset

- D. Improves storage efficiency

Answer: C

Explanation: One-hot encoding produces highly sparse feature matrices in which every row contains a single 1 and all other entries are 0. Stored densely, this wastes memory, and it can slow down algorithms that do not exploit sparsity.
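The degree of sparsity follows directly from the number of categories. The short sketch below computes it for a single one-hot encoded feature; the function name `one_hot_zero_fraction` is a hypothetical helper for illustration.

```python
def one_hot_zero_fraction(num_categories: int) -> float:
    """Fraction of zero entries in the one-hot matrix of a single feature.

    Each row has exactly one 1 and (num_categories - 1) zeros, so the
    fraction does not depend on the number of rows.
    """
    return (num_categories - 1) / num_categories


for k in (3, 100, 10_000):
    print(k, one_hot_zero_fraction(k))
```

For this reason one-hot data is usually kept as category indices or in a sparse matrix format rather than as a dense array.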

7. For features with many categories, one-hot encoding can cause what problem?

- A. Dataset dimensionality decreases

- B. Dataset dimensionality increases significantly

- C. Data processing speed increases

- D. Data becomes denser

Answer: B

Explanation: One-hot encoding adds one column per category, so when the number of categories is large the feature space grows accordingly, increasing memory use and computation.

8. Which of the following is NOT an advantage of one-hot encoding?

- A. Avoids incorrect weighting between categories

- B. Makes data easier for machine learning algorithms to handle

- C. Automatically reduces dataset dimensionality

- D. Simplifies representation of categorical data

Answer: C

Explanation: One-hot encoding does not reduce dimensionality; on the contrary, it tends to increase it.

9. What is an alternative to one-hot encoding in deep learning?

- A. Embedding layer

- B. Pooling layer

- C. Normalization layer

- D. Convolutional layer

Answer: A

Explanation: Embedding layers map categorical inputs into continuous vector spaces, reducing dimensionality while preserving category information.
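Conceptually, an embedding lookup gives the same result as multiplying a one-hot vector by a weight matrix, without ever building the one-hot vector. The sketch below checks this with plain Python lists. The sizes and the random table values are assumptions for illustration; in a real model the table is learned during training.

```python
import random
from typing import List

random.seed(0)  # fixed seed so the sketch is repeatable

NUM_CATEGORIES = 5  # hypothetical vocabulary size
EMBED_DIM = 3       # hypothetical embedding width

# Embedding table: one row of EMBED_DIM floats per category.
table: List[List[float]] = [
    [random.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
    for _ in range(NUM_CATEGORIES)
]


def embed(index: int) -> List[float]:
    """Embedding lookup: select the row for this category index."""
    return table[index]


def one_hot_times_table(index: int) -> List[float]:
    """Same vector computed the long way: one-hot vector times the table."""
    one_hot = [1.0 if i == index else 0.0 for i in range(NUM_CATEGORIES)]
    return [
        sum(one_hot[i] * table[i][j] for i in range(NUM_CATEGORIES))
        for j in range(EMBED_DIM)
    ]


print(embed(2))
print(one_hot_times_table(2))
```

The lookup touches one row instead of the whole table, and each category is represented by `EMBED_DIM` dense values rather than `NUM_CATEGORIES` mostly-zero ones.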

10. What problems arise when using DNN fully connected layers for sequential tasks?

Using fully connected (dense) layers from deep neural networks to handle sequential data has several limitations:

- Lack of sequence modeling: Fully connected layers have no built-in notion of order and share no weights across time steps, so they struggle to capture temporal dependencies. For sequence tasks such as speech recognition, natural language processing, or time series forecasting, the order of data points matters. Feeding sequence data directly into a fully connected network prevents the model from learning those dependencies effectively.

- Curse of dimensionality: For long sequences, flattening the sequence into a vector for fully connected layers produces very high-dimensional inputs. The number of parameters grows with the input size, which can lead to overfitting and substantially higher computational cost.

- Inability to handle variable-length sequences: Fully connected layers require fixed-length inputs, which means variable-length sequences must be padded or truncated to a uniform length before being processed.

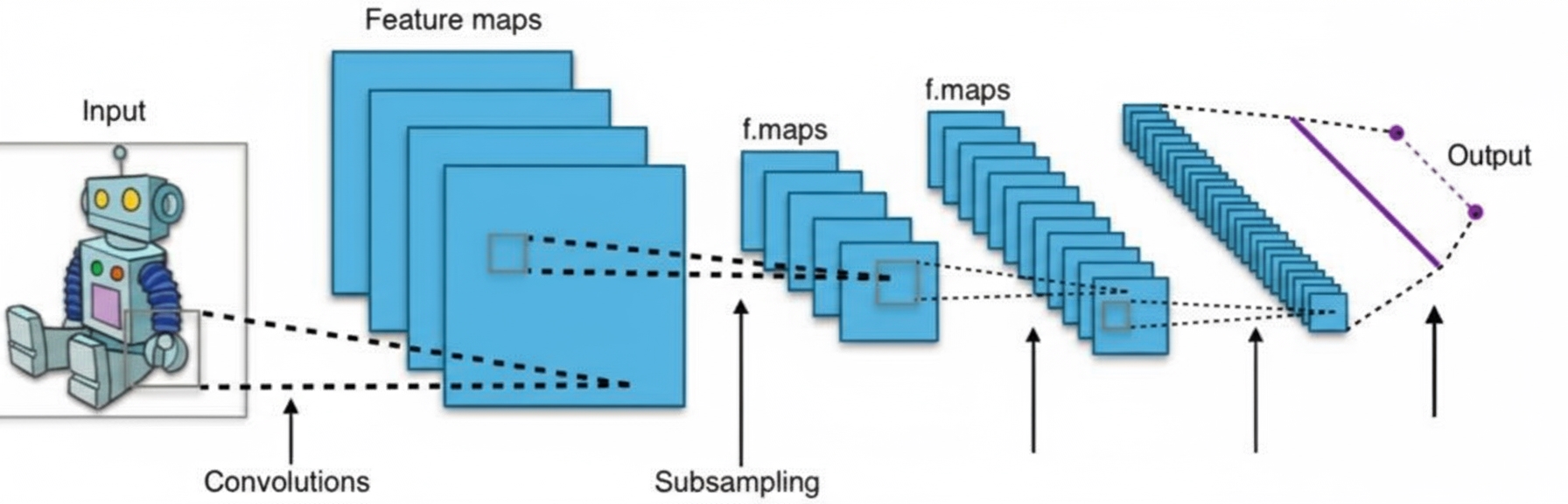

To address these issues, researchers use other network architectures such as recurrent neural networks (RNNs), especially long short-term memory (LSTM) and gated recurrent unit (GRU) networks, which are designed for sequential data and are better at capturing long-term dependencies. One-dimensional convolutional networks (1D CNNs) are also applied to sequences and can perform well on certain tasks.
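The sketch below shows the core idea of a plain (vanilla) recurrent cell in standard-library Python: one set of weights is reused at every time step, so sequences of any length produce a hidden state of the same size, and the result depends on input order. The dimensions and random weights are assumptions for illustration. This is not an LSTM or GRU, which add gating on top of this recurrence.

```python
import math
import random
from typing import List

random.seed(0)  # fixed seed so the sketch is repeatable

INPUT_DIM = 2   # hypothetical features per time step
HIDDEN_DIM = 3  # hypothetical hidden-state size


def rand_matrix(rows: int, cols: int) -> List[List[float]]:
    """Matrix of small random values standing in for learned weights."""
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# One set of weights, reused at every time step.
W_xh = rand_matrix(HIDDEN_DIM, INPUT_DIM)
W_hh = rand_matrix(HIDDEN_DIM, HIDDEN_DIM)
b_h = [0.0] * HIDDEN_DIM


def rnn_final_state(sequence: List[List[float]]) -> List[float]:
    """Run h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h) over the sequence."""
    h = [0.0] * HIDDEN_DIM
    for x in sequence:
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(INPUT_DIM))
                + sum(W_hh[i][k] * h[k] for k in range(HIDDEN_DIM))
                + b_h[i]
            )
            for i in range(HIDDEN_DIM)
        ]
    return h


short_seq = [[1.0, 0.0], [0.0, 1.0]]
long_seq = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 1.0], [0.0, 0.0]]

# Both lengths yield a hidden state of HIDDEN_DIM values.
print(len(rnn_final_state(short_seq)), len(rnn_final_state(long_seq)))
# Reversing the input order changes the final state.
print(rnn_final_state(short_seq) != rnn_final_state(short_seq[::-1]))
```

The parameter count here depends only on `INPUT_DIM` and `HIDDEN_DIM`, not on sequence length, which contrasts with a dense layer on a flattened sequence.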

11. How should one-hot encoding be understood?

Basic concept

- Definition: In one-hot encoding, each category value is converted into a separate binary column, with a 1 indicating the presence of that category and 0s elsewhere. For example, with three categories (red, green, blue), each category becomes its own feature column, and a sample has exactly one 1 among those columns.

- Example: A color attribute taking values "red", "green", "blue" could be encoded as "red" -> [1, 0, 0], "green" -> [0, 1, 0], "blue" -> [0, 0, 1].

- Advantages:

  - Avoids incorrect ordinal interpretation: Numeric encodings like red=1, green=2, blue=3 can imply an order. One-hot encoding prevents algorithms from inferring spurious ordinal relationships.

  - Algorithm compatibility: Many machine learning models, particularly linear models and tree-based models, can work directly with one-hot encoded features.

- Disadvantages:

  - Dimensionality expansion: Features with many categories produce a large number of new columns, which can create storage and computational challenges. For example, a postal code with thousands of unique values becomes thousands of features after one-hot encoding.

  - Sparsity: One-hot encoded data is typically highly sparse, with most entries being zero, which can complicate storage and processing.

To mitigate these limitations, embedding techniques are often used instead of one-hot encoding in deep learning, allowing a reduction in dimensionality while preserving category information.

ALLPCB
