Overview

This article explains basic memory concepts and algorithms for memory management in operating systems.

1 Basic concepts of memory

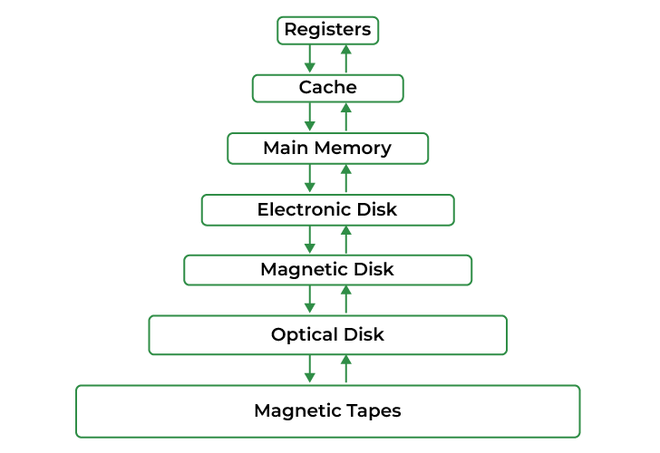

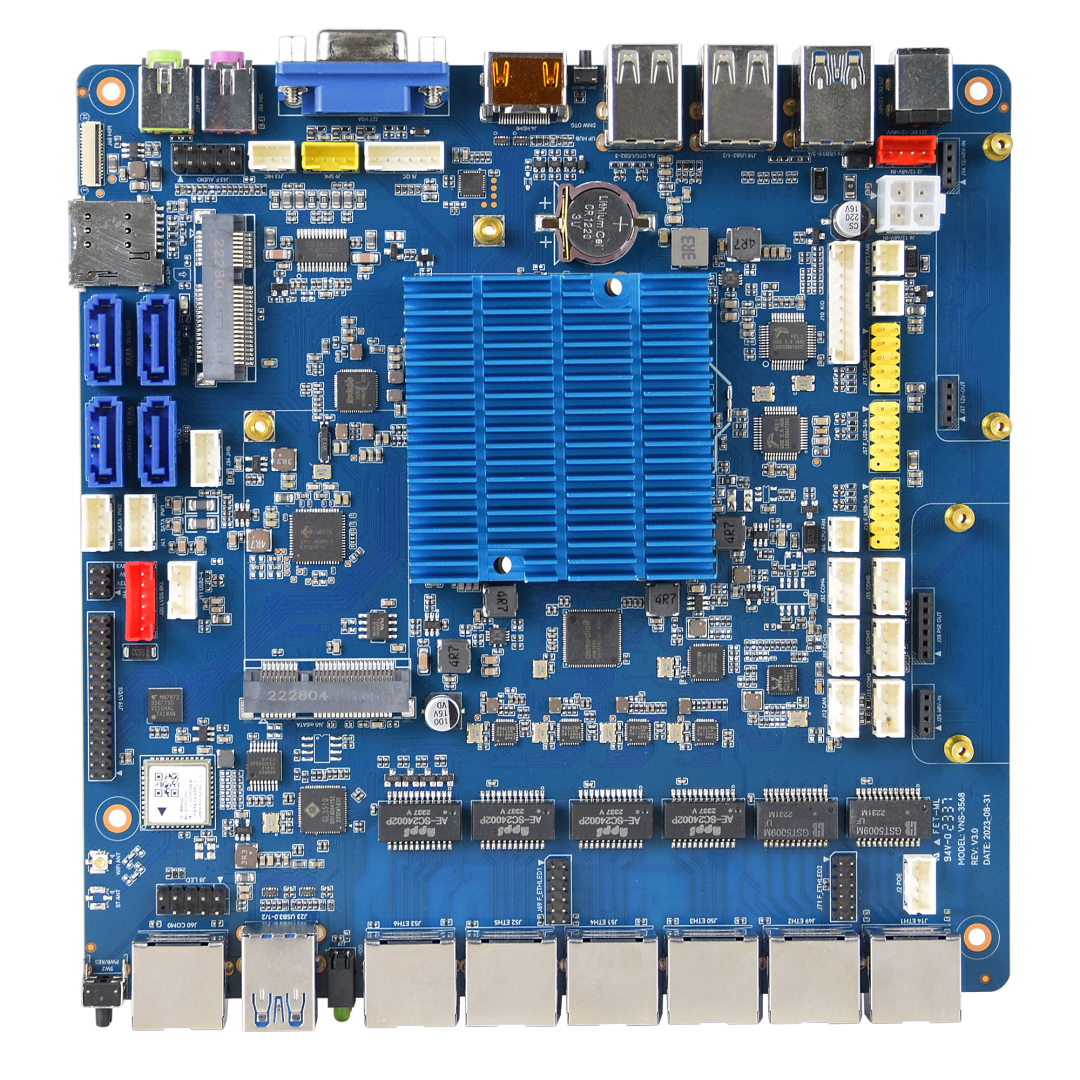

Memory is one of the most important resources in a computer system after the processor. It stores programs and data that are currently executing. Relative to the CPU, the storage directly addressable by the CPU is called memory; storage that the CPU can only access via drivers is called external storage.

2 ROM, RAM and Flash

Memory is typically implemented with semiconductor storage cells and is divided into read-only memory (ROM) and random access memory (RAM). ROM can generally only be read and not written, and its contents persist after power loss. RAM supports both reads and writes but loses its contents on power loss. The term "memory" normally refers to RAM. In embedded systems, ROM has traditionally been used to store bootloaders, operating systems, or program code, or as a substitute for a disk. In recent years, flash memory has largely replaced ROM in embedded systems because it combines the nonvolatile property of ROM with erasable and programmable characteristics, and it supports fast reads.

3 Two memory management approaches

The memory management module governs the system's memory resources and is a core part of the operating system. It handles initialization, allocation, and deallocation of memory. Based on whether allocated memory must be contiguous, two broad approaches exist:

- Contiguous memory management

- Noncontiguous memory management

Most modern operating systems use noncontiguous memory management. However, because allocation granularity can be large, embedded systems with limited memory often use contiguous management. This article focuses on partitioned contiguous memory management commonly used in embedded systems.

4 Partitioned memory management

Partitioned management can be either fixed partitioning or dynamic partitioning.

Fixed partitions

Memory is predivided into a number of fixed-size regions. Partition sizes may be equal or unequal. Fixed partitions are easy to implement but can waste space due to internal fragmentation, and the fixed number of partitions limits concurrent processes.

Dynamic partitions

Memory is allocated to processes dynamically according to their actual needs.

5 Dynamic partition memory management

Operation

Dynamic partition management commonly uses a free-list method based on a doubly linked list of free partitions. At initialization, the entire memory block is treated as one large free partition and added to the free list. When a process requests memory, an appropriate free partition is found in the free list. If the partition is larger than required, a block of the requested size is split off and assigned to the process; the allocated portion is removed from the free list, and the remaining fragment stays on the free list.

Data structures

There are various data structure implementations for the free-list method. A simple approach stores the size of each free partition along with pointers to the previous and next partitions so that free partitions form a doubly linked list.

Memory allocation algorithms

- First Fit

- Next Fit

- Best Fit

- Worst Fit

- Two-Level Segregated Fit (TLSF)

- Buddy systems

In TLSF, the first-level lists classify free blocks by power-of-two size classes. The second-level lists linearly subdivide each size class into ranges. For example, the 2^5 class can be divided by 2^3 (=8) into four ranges: [2^5, 2^5+8), [2^5+8, 2^5+16), [2^5+16, 2^5+24), [2^5+24, 2^5+32). The 2^16 class can be divided by 2^14 into four subranges: [2^16, 2^16+2^14), [2^16+2^14, 2^16+2*2^14), [2^16+2*2^14, 2^16+3*2^14), [2^16+3*2^14, 2^16+4*2^14). To enable fast lookup, each level maintains a bitmap to indicate whether a given list contains free blocks. For example, a first-level bitmap bit pattern ending with 010 indicates that the corresponding size class has free blocks; a second-level bitmap value of 0100 indicates that a specific subrange contains free blocks.

ALLPCB

ALLPCB