Introduction

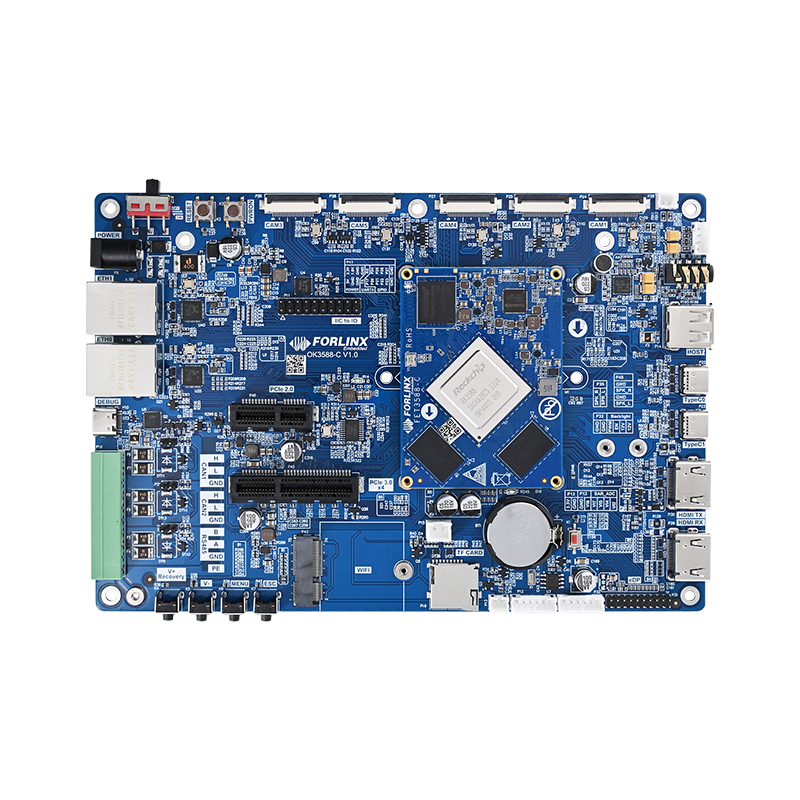

DeepSeek, a large language model developed in China, has drawn attention for its reasoning capability and efficient text generation. Rockchip's flagship RK3588 SoC combines a heterogeneous multi-core architecture with strong CPU, GPU, and NPU performance, which makes it a suitable platform for embedded AI applications. Running DeepSeek on the RK3588-based ELF 2 development board brings large-model inference to the edge. This addresses latency and privacy requirements and covers the full workflow from development to deployment.

Environment setup

1.1 Anaconda installation and usage

1.1.1 About Anaconda

Anaconda is an open-source package and environment management system for scientific computing, data analysis, and large-scale data tasks, primarily for Python. It bundles commonly used scientific and data-analysis packages and provides tools to manage installation, updates, and isolated environments.

Main features and uses:

- Package management

- Environment management

- Cross-platform support

- Integrated development environment (IDE)

- Large-data support

Anaconda simplifies development and deployment for scientific computing and data analysis.

1.1.2 Anaconda installation

The provided virtual machine already includes Python 3.10 and RKNN-Toolkit 2.1.0 for model conversion and quantization. If you need different Python versions or tools, install Anaconda inside the virtual machine to avoid environment conflicts and achieve full isolation.

The virtual machine uses the development environment from the ELF 2 development board package (path: ELF 2 development board package\08-development environment). You can download the Linux version of Miniconda from a mirror site.

Alternatively, the ELF 2 development board package contains the installer script Miniconda3-4.7.12.1-Linux-x86_64.sh under 06-common tools\06-2 Environment setup tool\AI installation script. Upload that script to the virtual machine and run it to install Miniconda.

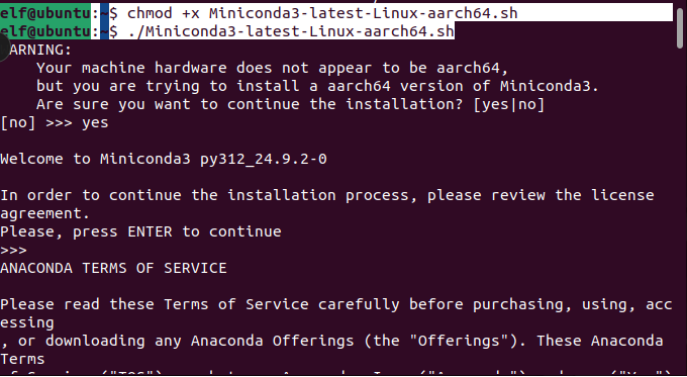

Grant execution permission and run the installer:

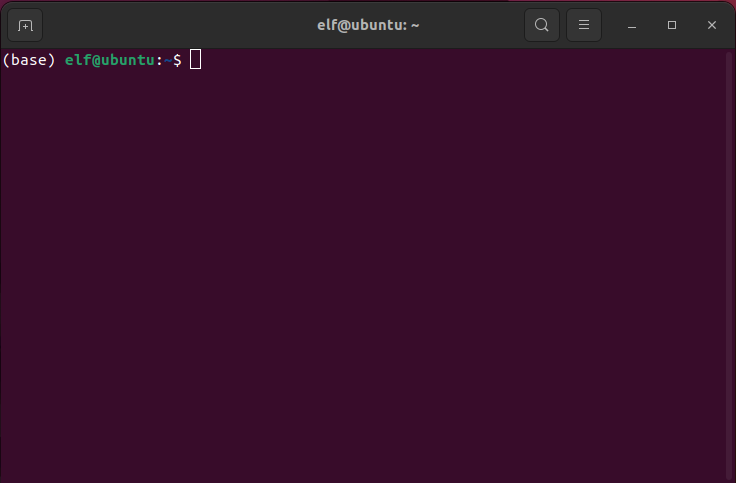

elf@ubuntu:~$ chmod +x Miniconda3-4.7.12.1-Linux-x86_64.sh
elf@ubuntu:~$ ./Miniconda3-4.7.12.1-Linux-x86_64.sh

After installation, reopen the terminal. A "(base)" prefix on the prompt indicates that Miniconda was installed successfully.

1.1.3 Basic Anaconda usage

The conda commands shown are cross-platform and can be used on Linux and Windows. The following examples use the virtual machine environment.

1. List environments:

conda env list

2. Create a new environment:

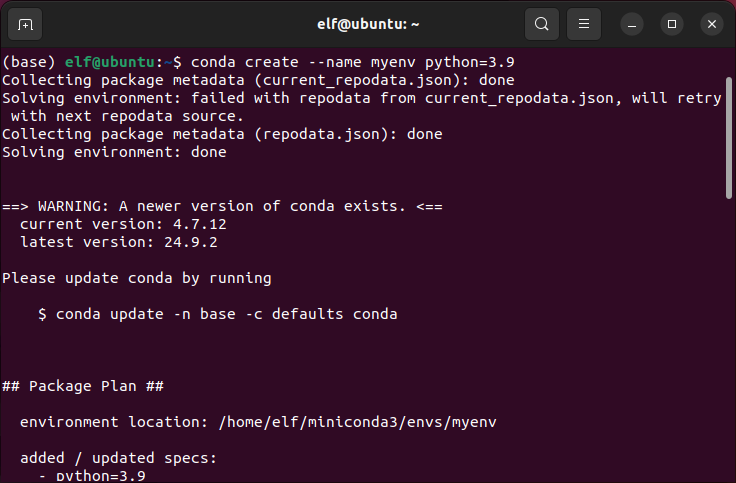

conda create --name <environment name> python=<version>

Example: conda create --name myenv python=3.9

3. Activate an environment:

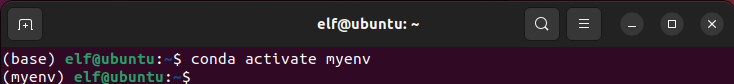

conda activate <environment name>

Example: conda activate myenv

4. Deactivate an environment:

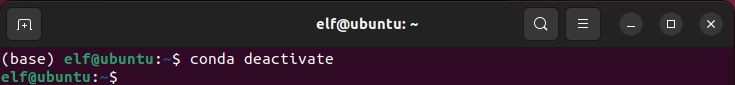

conda deactivate

5. Install packages into an environment:

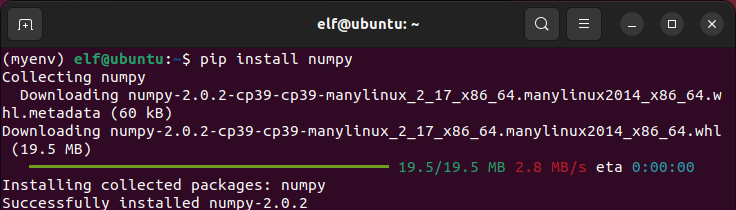

conda install <package name>

Example: conda install numpy (or pip install numpy)

6. Remove an environment:

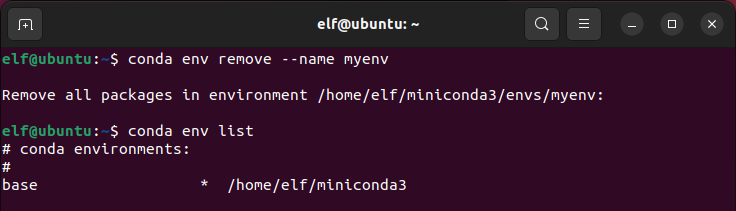

conda env remove --name <environment name>

Example: conda env remove --name myenv
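Because several environments can coexist, it is easy to install packages into the wrong one. As an optional safeguard, the minimal Python sketch below checks from inside the interpreter that the expected environment is active. It relies on the CONDA_DEFAULT_ENV variable, which conda sets on activation; the function name check_conda_env and the expected values are illustrative and not part of any tool.

```python
import os
import sys
from typing import List, Mapping, Optional, Tuple


def check_conda_env(
    expected_env: str,
    expected_python: Tuple[int, int],
    environ: Optional[Mapping[str, str]] = None,
    version: Optional[Tuple[int, int]] = None,
) -> List[str]:
    """Return a list of mismatches; an empty list means the environment matches.

    conda sets CONDA_DEFAULT_ENV to the name of the active environment.
    environ and version can be injected for testing.
    """
    env = os.environ if environ is None else environ
    ver = tuple(sys.version_info[:2]) if version is None else tuple(version)
    problems: List[str] = []
    active = env.get("CONDA_DEFAULT_ENV")
    if active != expected_env:
        problems.append(f"active environment is {active!r}, expected {expected_env!r}")
    if ver != tuple(expected_python):
        problems.append(
            f"Python {ver[0]}.{ver[1]} found, "
            f"expected {expected_python[0]}.{expected_python[1]}"
        )
    return problems


if __name__ == "__main__":
    # Illustrative usage: the deployment environment created in section 1.1.4.
    issues = check_conda_env("RKLLM-Toolkit-pyth3.10", (3, 10))
    print("\n".join(issues) if issues else "environment OK")
```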

1.1.4 Create the deployment environment

List environments:

elf@ubuntu:~$ conda env list

Create a new deployment environment:

elf@ubuntu:~$ conda create --name RKLLM-Toolkit-pyth3.10 python=3.10

Activate the environment:

elf@ubuntu:~$ conda activate RKLLM-Toolkit-pyth3.10

1.2 RKLLM-Toolkit installation and usage

1.2.1 About RKLLM-Toolkit

RKLLM-Toolkit provides model conversion and quantization. It allows converting Hugging Face or GGUF large language models into RKLLM format for execution on Rockchip NPUs.
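The two input formats look different on disk: a Hugging Face checkpoint is a directory (with config.json, tokenizer files, and weight files), while a GGUF model is a single file whose first four bytes are the ASCII magic GGUF. The hypothetical helper below uses only those two heuristics to classify a path before conversion. It does not call RKLLM-Toolkit, and a directory without config.json is simply reported as unknown.

```python
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # every GGUF file starts with these four bytes


def detect_model_format(path: str) -> str:
    """Classify a model path as 'huggingface', 'gguf', or 'unknown' (heuristic)."""
    p = Path(path)
    if p.is_dir():
        # Hugging Face checkpoints ship a config.json at the top level.
        return "huggingface" if (p / "config.json").is_file() else "unknown"
    if p.is_file():
        with p.open("rb") as f:
            return "gguf" if f.read(4) == GGUF_MAGIC else "unknown"
    return "unknown"
```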

1.2.2 RKLLM-Toolkit installation

Install RKLLM-Toolkit in the virtual environment. It converts the DeepSeek-R1 large language model to RKLLM format; the rknn-llm repository that ships it also contains the demo source used later to build the board-side executable. The released RKLLM toolchain archive contains a wheel package for RKLLM-Toolkit.

Alternatively, the ELF 2 development board package includes rknn-llm-main.zip under 03-Routine source code\03-4 AI routine source code.

Place the downloaded rknn-llm-main.zip into /home/elf/work/deepseek and unzip it:

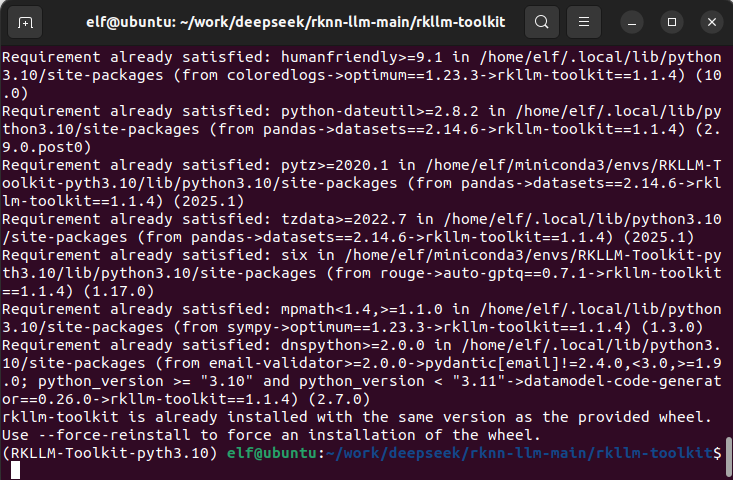

(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek$ unzip rknn-llm-main.zip

Change into rknn-llm-main/rkllm-toolkit/ and install RKLLM-Toolkit:

(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek$ cd rknn-llm-main/rkllm-toolkit/
(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek/rknn-llm-main/rkllm-toolkit$ pip install rkllm_toolkit-1.1.4-cp310-cp310-linux_x86_64.whl -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

If the installation reports package version conflicts, run the same install command again.
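The wheel file name itself encodes its requirements. Under the PEP 427 naming convention (name-version-python tag-ABI tag-platform tag.whl), cp310 means CPython 3.10 and linux_x86_64 means an x86-64 Linux host. That is why the environment is created with python=3.10 and the toolkit is installed on the Ubuntu virtual machine rather than on the board. The sketch below parses the name and performs a simplified compatibility check. The helper names are hypothetical, compound platform tags such as manylinux are not handled, and pip remains the final authority.

```python
import platform
import sys
from typing import NamedTuple, Optional, Tuple


class WheelTags(NamedTuple):
    name: str
    version: str
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelTags:
    """Split a wheel file name into PEP 427 fields (an optional build tag is skipped)."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) not in (5, 6):
        raise ValueError(f"unexpected wheel file name: {filename}")
    python_tag, abi_tag, platform_tag = parts[-3:]
    return WheelTags(parts[0], parts[1], python_tag, abi_tag, platform_tag)


def wheel_matches(
    filename: str,
    py_version: Optional[Tuple[int, int]] = None,
    machine: Optional[str] = None,
    system: Optional[str] = None,
) -> bool:
    """Simplified check: CPython tag and a plain '<os>_<arch>' platform tag only."""
    tags = parse_wheel_filename(filename)
    major, minor = py_version or tuple(sys.version_info[:2])
    machine = machine or platform.machine()
    system = system or platform.system()
    return (
        tags.python_tag == f"cp{major}{minor}"
        and tags.platform_tag == f"{system.lower()}_{machine}"
    )
```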

1.3 Cross-compilation toolchain

Use the cross-compiler gcc-arm-10.2-2020.11-x86_64-aarch64-none-linux-gnu. A newer toolchain can generally be used in its place, but an older one may not be compatible, so avoid versions older than 10.2.

The ELF 2 development board package also includes gcc-arm-10.2-2020.11-x86_64-aarch64-none-linux-gnu.tar.xz under 03-Routine source code\03-4 AI routine source code.
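To enforce the 10.2 minimum in a setup script, the version can be read from the toolchain directory or archive name. This is a sketch that assumes the gcc-arm-<major>.<minor>-... naming used by this toolchain. For toolchains packaged under other names, the helper returns None rather than guessing.

```python
import re
from typing import Optional, Tuple

MIN_VERSION = (10, 2)  # oldest toolchain version recommended in this guide


def toolchain_version(name: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from names like 'gcc-arm-10.2-2020.11-...'.

    Returns None for names that do not follow this pattern.
    """
    match = re.search(r"gcc-arm-(\d+)\.(\d+)", name)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def toolchain_ok(name: str, minimum: Tuple[int, int] = MIN_VERSION) -> bool:
    """True if the version is recognized and not older than minimum."""
    version = toolchain_version(name)
    return version is not None and version >= minimum
```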

Porting process

2.1 Model conversion

1. Download DeepSeek-R1 weights

On the Ubuntu virtual machine, download the DeepSeek-R1-Distill-Qwen-1.5B weights from the Hugging Face repository deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B (huggingface.co).

The ELF 2 development board package may also include DeepSeek-R1-Distill-Qwen-1.5B.zip under 03-Routine source code\03-4 AI routine source code.
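A quick sanity check after downloading helps catch an incomplete copy, for example from an interrupted transfer, before the slower conversion step. The file list in the sketch below is an assumption based on the usual layout of a Hugging Face checkpoint (a config, tokenizer files, and one or more .safetensors weight files). Compare it with the repository's file listing and adjust REQUIRED_FILES if needed.

```python
from pathlib import Path
from typing import List

# Assumed minimum contents of the checkpoint directory; adjust to the actual repo.
REQUIRED_FILES = ("config.json", "tokenizer.json", "tokenizer_config.json")


def missing_weight_files(model_dir: str) -> List[str]:
    """List what appears to be missing from a downloaded checkpoint directory."""
    root = Path(model_dir)
    missing = [name for name in REQUIRED_FILES if not (root / name).is_file()]
    # Weights are expected as one or more *.safetensors shards.
    if not any(root.glob("*.safetensors")):
        missing.append("*.safetensors")
    return missing
```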

2. Convert the model

Use RKLLM-Toolkit for conversion and quantization. RKLLM-Toolkit converts Hugging Face or GGUF models to RKLLM format for loading on Rockchip NPUs.

Change into rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/export and generate data_quant.json, the calibration data used during quantization. The calibration targets are the outputs of the fp16 model. A default data_quant.json is already included in the directory.

(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek$ cd rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/export
(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek/rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/export$ python generate_data_quant.py -m /home/elf/work/deepseek/DeepSeek-R1-Distill-Qwen-1.5B

Here, /home/elf/work/deepseek/DeepSeek-R1-Distill-Qwen-1.5B is the path to the weights.
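The calibration file pairs prompts with reference answers produced by the fp16 model. The sketch below writes such a file as a JSON list of objects with input and target fields. That layout is an assumption, so compare it with the default data_quant.json in the export directory before relying on it. The prompt template is hypothetical, and the answers are passed in rather than generated, since producing them requires running the fp16 model as generate_data_quant.py does.

```python
import json
from typing import Dict, Iterable, List, Tuple

# Hypothetical prompt template; the real one should follow the model's chat format.
PROMPT_TEMPLATE = "User: {question}\nAssistant: "


def build_calibration_records(
    pairs: Iterable[Tuple[str, str]], template: str = PROMPT_TEMPLATE
) -> List[Dict[str, str]]:
    """Turn (question, fp16_answer) pairs into {"input", "target"} records."""
    return [{"input": template.format(question=q), "target": a} for q, a in pairs]


def write_calibration_file(path: str, pairs: Iterable[Tuple[str, str]]) -> int:
    """Write the records as a JSON list and return how many were written."""
    records = build_calibration_records(pairs)
    with open(path, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps non-English prompts readable in the file.
        json.dump(records, f, ensure_ascii=False, indent=2)
    return len(records)
```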

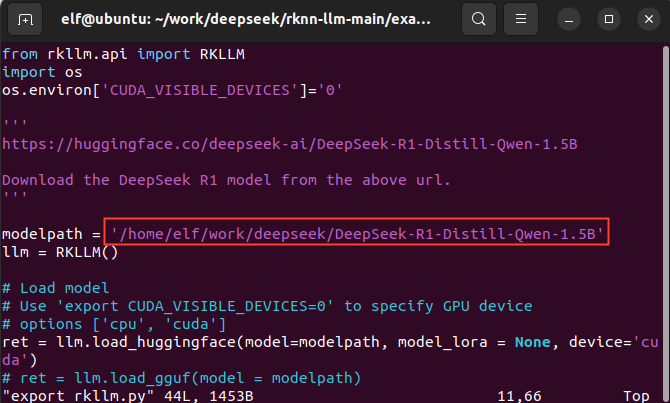

Edit export_rkllm.py to specify the weights path:

(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek/rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/export$ vi export_rkllm.py
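If you prefer a non-interactive edit to vi, for example in a setup script, the path can be substituted programmatically. The sketch below replaces the first top-level string assignment to a given variable and keeps any trailing comment. The variable name modelpath used in the test is an assumption; open export_rkllm.py and use the name it actually assigns the weights path to.

```python
import re


def set_string_assignment(source: str, var: str, value: str) -> str:
    """Replace the value of the first top-level `var = '...'` line in source.

    Handles single or double quotes and preserves a trailing # comment.
    Raises ValueError if no such assignment exists.
    """
    pattern = re.compile(
        r"^(" + re.escape(var) + r"""\s*=\s*)(['"]).*?\2([ \t]*(?:#.*)?)$""",
        re.MULTILINE,
    )
    # A function replacement avoids backslash escapes in value being interpreted.
    new_source, count = pattern.subn(
        lambda m: m.group(1) + m.group(2) + value + m.group(2) + m.group(3),
        source,
        count=1,
    )
    if count == 0:
        raise ValueError(f"no string assignment to {var!r} found")
    return new_source
```

The returned text can then be written back to export_rkllm.py.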

Export the RKLLM model:

(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek/rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/export$ python export_rkllm.py

The converted model is written to rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/export/DeepSeek-R1-Distill-Qwen-1.5B_W8A8_RK3588.rkllm.
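The output name records how the model was converted: W8A8 denotes 8-bit weights and 8-bit activations, and RK3588 is the target platform. The hypothetical helper below reproduces that <model directory>_<dtype>_<platform>.rkllm pattern, which is handy for predicting file names when converting other quantization variants. It mirrors the file name reported above rather than any RKLLM-Toolkit function.

```python
import os


def rkllm_output_name(
    model_path: str, quantized_dtype: str = "W8A8", target_platform: str = "RK3588"
) -> str:
    """Compose <model dir>_<DTYPE>_<PLATFORM>.rkllm (hypothetical naming helper).

    normpath strips a trailing slash so basename returns the directory name.
    """
    base = os.path.basename(os.path.normpath(model_path))
    return f"{base}_{quantized_dtype.upper()}_{target_platform.upper()}.rkllm"
```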

2.2 Cross-compilation

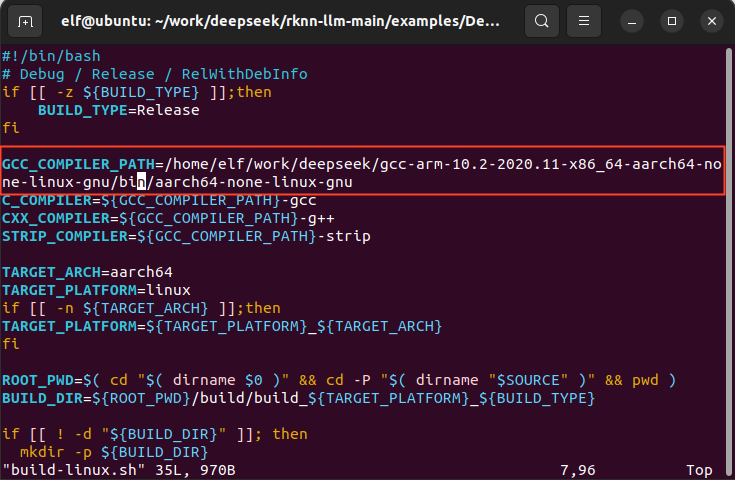

Change into rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/deploy and set the cross-compiler path in build-linux.sh. Update the path to match your local installation.

(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek/rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/deploy$ vi build-linux.sh

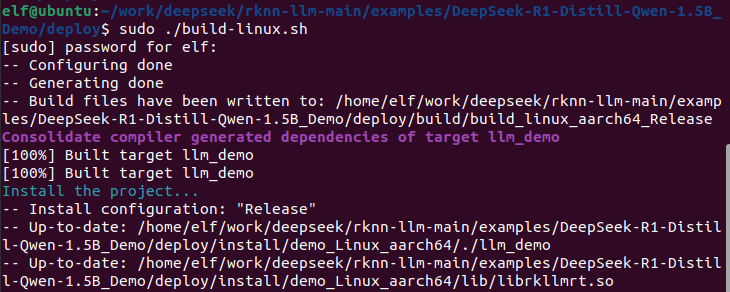
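Build scripts of this kind usually derive the C and C++ compilers from a single path prefix ending in bin/aarch64-none-linux-gnu, appending -gcc and -g++. Assuming your copy of build-linux.sh follows that convention, the sketch below derives both paths from the toolchain root and reports any that are missing or not executable, which catches a mistyped path before the build fails. The function names are hypothetical.

```python
import os
from pathlib import Path
from typing import Dict, List

# Target triplet used by gcc-arm-10.2-2020.11-x86_64-aarch64-none-linux-gnu.
TRIPLET = "aarch64-none-linux-gnu"


def compiler_paths(toolchain_root: str) -> Dict[str, str]:
    """Derive C and C++ cross-compiler paths from the toolchain root directory."""
    prefix = Path(toolchain_root).expanduser() / "bin" / TRIPLET
    return {"CC": f"{prefix}-gcc", "CXX": f"{prefix}-g++"}


def missing_compilers(toolchain_root: str) -> List[str]:
    """Return the compiler paths that do not exist or are not executable."""
    return [
        path
        for path in compiler_paths(toolchain_root).values()
        if not (os.path.isfile(path) and os.access(path, os.X_OK))
    ]
```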

After editing, build:

(RKLLM-Toolkit-pyth3.10) elf@ubuntu:~/work/deepseek/rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/deploy$ ./build-linux.sh

Executable: rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo/deploy/install/demo_Linux_aarch64/llm_demo

2.3 Local deployment on the board

Upload the converted .rkllm model, the compiled executable, and the library files to the target board, then run the demo to interact with DeepSeek-R1 offline.
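Before copying, it can be useful to stage everything in one directory and confirm nothing is missing. The sketch below assumes a hypothetical layout with llm_demo at the top level, shared libraries (such as the RKLLM runtime library from the install directory) under lib/, and the .rkllm model alongside them. Adjust the names to match your install/demo_Linux_aarch64 output.

```python
from pathlib import Path
from typing import List


def check_bundle(bundle_dir: str, model_name: str) -> List[str]:
    """Report what is missing from a directory staged for upload to the board.

    Assumed (hypothetical) layout: llm_demo, lib/*.so, and <model_name>.
    """
    root = Path(bundle_dir)
    problems: List[str] = []
    if not (root / "llm_demo").is_file():
        problems.append("llm_demo executable")
    # glob on a missing lib/ directory simply yields nothing.
    if not any((root / "lib").glob("*.so")):
        problems.append("shared libraries in lib/")
    if not (root / model_name).is_file():
        problems.append(model_name)
    return problems
```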

elf@elf2-desktop:~$ ./llm_demo DeepSeek-R1-Distill-Qwen-1.5B_W8A8_RK3588.rkllm 10000 10000

Sample runtime output:

Note: The desktop system used in this test ran NPU driver version 0.9.6. For driver updates, refer to the Rockchip SDK documentation.
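The llm_demo command takes the model file followed by two numbers. In the upstream demo these appear to be the maximum number of new tokens and the maximum context length, but check the usage message printed by your build to confirm. The hypothetical Python wrapper below assembles that command line and prepends a lib directory to LD_LIBRARY_PATH so the dynamic loader can find the uploaded runtime library. The ./lib location is an assumption about how the files were copied.

```python
import os
from typing import Dict, List, Mapping, Optional, Tuple


def llm_demo_command(
    model: str,
    max_new_tokens: int = 10000,
    max_context_len: int = 10000,
    exe: str = "./llm_demo",
    lib_dir: str = "./lib",
    base_env: Optional[Mapping[str, str]] = None,
) -> Tuple[List[str], Dict[str, str]]:
    """Build argv and environment for launching llm_demo (hypothetical wrapper)."""
    if not model.endswith(".rkllm"):
        raise ValueError(f"expected an .rkllm model file, got {model!r}")
    if max_new_tokens <= 0 or max_context_len <= 0:
        raise ValueError("token limits must be positive")
    env = dict(os.environ if base_env is None else base_env)
    # Prepend lib_dir so the dynamic loader searches it first.
    previous = env.get("LD_LIBRARY_PATH")
    env["LD_LIBRARY_PATH"] = f"{lib_dir}:{previous}" if previous else lib_dir
    argv = [exe, model, str(max_new_tokens), str(max_context_len)]
    return argv, env
```

On the board, the returned pair can be passed directly to subprocess.run(argv, env=env) to start the interactive session.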

Following this deployment flow enables efficient local execution of the DeepSeek model on the ELF 2 development board. This guide can serve as a technical reference for embedded AI developers and academic users working on edge computing and AI integration.

ALLPCB
