Overview

Large language models such as OpenAI's ChatGPT have accelerated demand for compute. Major technology companies are developing related tools, platforms, and applications. The AI-generated content (AIGC) ecosystem is forming around three layers: 1) an upstream infrastructure layer built on pretrained models; 2) a middle layer of vertical, scenario-specific, and personalized models and tooling; and 3) an application layer that delivers text, image, audio, and video generation services to end users.

Server Hardware and Logical Architecture

Typical server hardware includes processors, memory, chipsets, I/O devices (RAID cards, NICs, HBA cards), storage drives, and the chassis (power supplies, fans). For an ordinary server, the CPU and chipset account for roughly 50% of production cost, memory about 15%, external storage about 10%, and other hardware about 25%.

Server logical architecture is similar to that of a standard computer, but servers require higher performance and stronger guarantees of stability, reliability, security, scalability, and manageability. The CPU and memory are the core elements: the CPU performs logic and computation, while memory holds the data being worked on. Firmware typically includes BIOS or UEFI, BMC, and CMOS; operating systems come in 32-bit and 64-bit variants.

Model Growth and Compute Demand

GPT-family models have far more parameters than earlier models such as BERT and T5. GPT-3, with 175 billion parameters, is one of the largest well-known language models; before GPT-3, Microsoft’s Turing NLG had 17 billion parameters. As training datasets grow, compute requirements rise as well.

Both the GPT family and BERT-based models build on the Transformer architecture. GPT-1 was introduced in 2018 with 12 Transformer layers; GPT-3 increased this to 96 layers. GPT-1 combined unsupervised pretraining with supervised fine-tuning, while GPT-2 and GPT-3 relied purely on unsupervised pretraining. The main change from GPT-2 to GPT-3 was a large increase in data and parameter scale.
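
To make the link between layer count and parameter count concrete, the sketch below uses a common rule of thumb: each Transformer layer holds roughly 12 × d_model² weights (about 4 × d_model² for attention and 8 × d_model² for a feed-forward block with 4x expansion). The hidden sizes 768 and 12288 are assumptions taken from the published GPT-1 and GPT-3 configurations, not from this article, and the estimate ignores embeddings, biases, and layer norms.

```python
# Rough parameter estimate for a decoder-only Transformer.
# Assumption: ~12 * d_model^2 weights per layer; embeddings are ignored.

def approx_transformer_params(n_layers: int, d_model: int) -> int:
    attention = 4 * d_model * d_model   # Q, K, V and output projections
    mlp = 8 * d_model * d_model         # two linear layers with 4x expansion
    return n_layers * (attention + mlp)

# Hidden sizes below are assumed from the published model configurations.
gpt1_params = approx_transformer_params(n_layers=12, d_model=768)
gpt3_params = approx_transformer_params(n_layers=96, d_model=12288)

print(f"GPT-1 (non-embedding): ~{gpt1_params / 1e6:.0f} million parameters")
print(f"GPT-3 (non-embedding): ~{gpt3_params / 1e9:.0f} billion parameters")
```

Under these assumptions the GPT-3 estimate lands within about 1% of the 175 billion figure, which suggests that most of the growth comes from wider and deeper layers rather than from embeddings.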

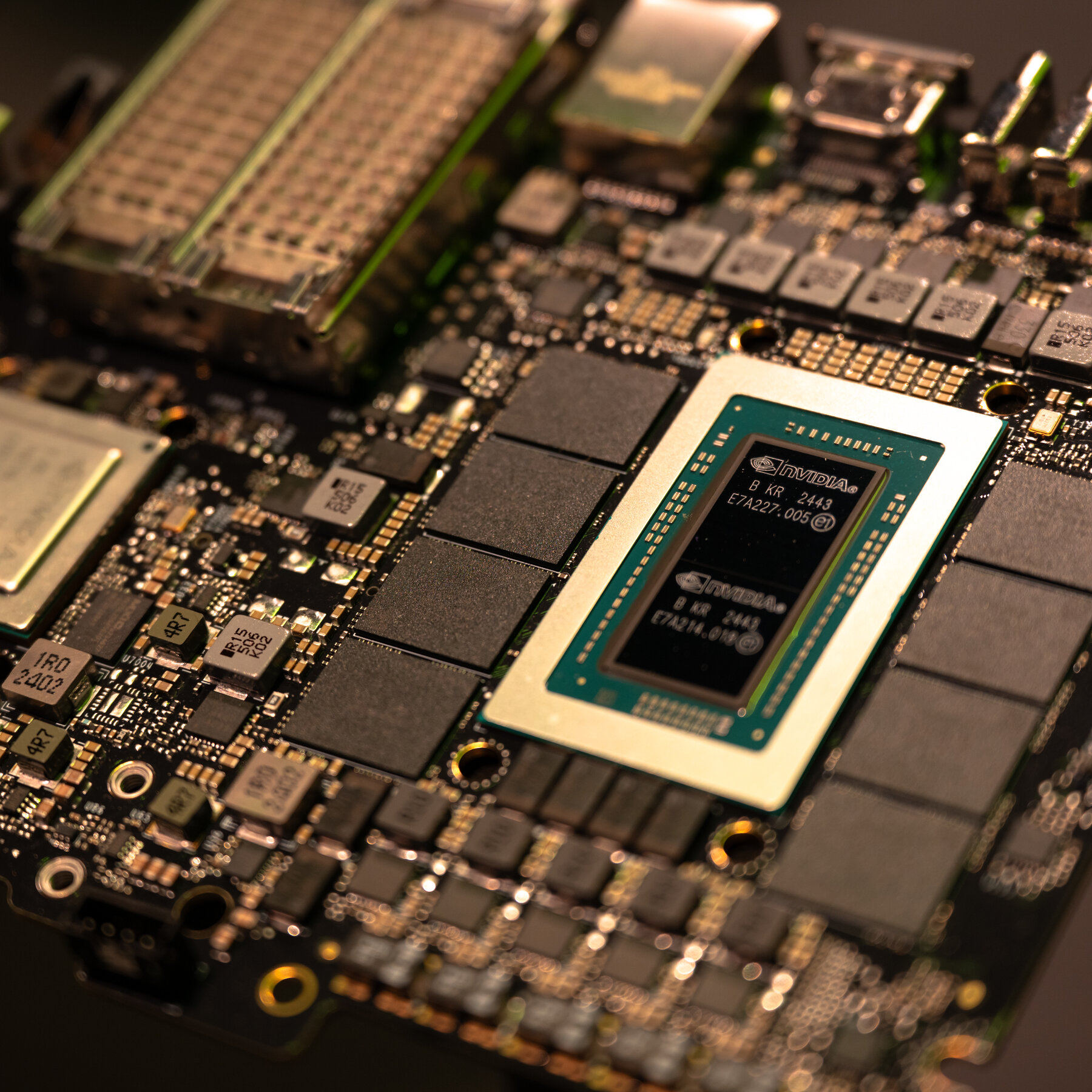

Heterogeneous Computing and CPU+GPU Architectures

Heterogeneous computing combines processing units with different instruction sets and architectures in a single system. Common configurations include GPU cloud servers, FPGA cloud servers, and elastic accelerated instances, which let specialized hardware serve specific workloads.

In CPU+GPU heterogeneous architectures, the GPU and CPU are connected via PCIe. The CPU acts as the host and the GPU as the device. Such platforms leverage complementary strengths: CPUs handle serial, control-heavy tasks while GPUs accelerate data-parallel workloads, enabling significant performance gains. Increasingly, AI computation uses heterogeneous setups for acceleration.

CPU vs GPU Roles

CPUs are suitable for a wide range of workloads, especially those requiring low latency and high per-core performance. A CPU concentrates its resources in a small number of powerful cores that complete individual tasks quickly, which suits serial computation and database workloads.

GPUs were originally developed as ASICs to accelerate 3D rendering. Over time they became more programmable and flexible. While graphics remain a primary function, GPUs have evolved into general-purpose parallel processors used in many applications, including AI.

Training vs Inference

Training and inference handle different data volumes and compute patterns.

Training is a learning process that uses large datasets to determine network weights and biases so the model can perform a target function. It requires iterative forward and backward passes through the network: each forward pass produces a prediction, the error against the expected output is measured, gradients of that error are backpropagated, and the parameters are adjusted. This repeats until the error stops improving. Modern neural networks can have millions to billions of parameters, so each adjustment involves extensive computation.

Inference uses a trained model to generate outputs from new input data. It performs a single forward pass without iterative parameter updates, so one inference request requires significantly less compute than a training run.
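
The difference between the two phases can be sketched with a deliberately tiny model. The example below is an illustration only: it fits a one-variable linear model `y = w*x + b` by gradient descent in pure Python, with a made-up dataset and hypothetical function names (`forward`, `train`). Training loops over the data many times and updates the parameters; inference is one call to `forward`.

```python
# Toy illustration of training (many forward/backward passes) versus
# inference (one forward pass). Model, data, and names are hypothetical.

def forward(w: float, b: float, x: float) -> float:
    """Forward pass of a one-variable linear model."""
    return w * x + b

def train(data, lr=0.05, epochs=1000):
    """Fit w and b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    losses = []
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = loss = 0.0
        for x, y in data:
            err = forward(w, b, x) - y      # forward pass and error
            loss += err * err / n
            grad_w += 2.0 * err * x / n     # backward pass: d(loss)/dw
            grad_b += 2.0 * err / n         # backward pass: d(loss)/db
        w -= lr * grad_w                    # parameter update
        b -= lr * grad_b
        losses.append(loss)
    return w, b, losses

# Synthetic data generated from y = 3x + 1.
data = [(x, 3.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0, 4.0)]

w, b, losses = train(data)          # training: 1000 passes over the data
prediction = forward(w, b, 5.0)     # inference: a single forward pass

print(f"learned w={w:.3f}, b={b:.3f}, prediction for x=5: {prediction:.3f}")
```

Even in this toy case, training evaluates the model thousands of times while inference evaluates it once, which is the asymmetry the two paragraphs above describe.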

Common Accelerators and Product Examples

NVIDIA T4 GPUs accelerate a variety of cloud workloads, including HPC, deep learning training and inference, machine learning, data analytics, and graphics. Turing Tensor Cores support mixed-precision computation from FP32 to FP16 and INT8/INT4, providing much higher throughput than CPUs for certain tasks.
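
One reason lower-precision formats matter is memory footprint. The sketch below is a back-of-the-envelope calculation, not a vendor figure: it assumes each weight occupies exactly the nominal width of its format (4 bytes for FP32 down to half a byte for INT4) and applies that to the 175 billion parameter count quoted for GPT-3 in this article. Activations, optimizer state, and quantization metadata are ignored.

```python
# Approximate memory needed just to hold model weights at each precision.
# Assumption: nominal storage width per weight, no overhead.

BYTES_PER_PARAM = {"FP32": 4.0, "FP16": 2.0, "INT8": 1.0, "INT4": 0.5}

def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return weight storage in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

GPT3_PARAMS = 175e9  # parameter count quoted in this article

footprint = {p: weight_memory_gb(GPT3_PARAMS, p) for p in BYTES_PER_PARAM}

for precision, gigabytes in footprint.items():
    print(f"{precision}: {gigabytes:,.1f} GB")
```

Halving the precision halves the weight storage, which is part of why reduced-precision inference fits on fewer or smaller accelerators.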

For training, NVIDIA A100 and H100 are widely used. The A100 80GB offers up to 1.3 TB of unified memory per node for very large models and up to 3x the throughput of the A100 40GB in some configurations. On advanced conversational AI models like BERT, A100 can increase inference throughput by up to 249x versus CPU.

The H100 provides stronger performance and architectural innovations. NVIDIA reports up to 9x faster AI training and up to 30x faster inference for very large models compared with the previous generation. The H100 also adds DPX instructions, which NVIDIA reports can run dynamic programming algorithms up to 7x faster than A100 Tensor Core GPUs and dramatically faster than dual-CPU servers.

Compute Example and Sensitivity Analysis

Assume an H100 server with 32 PFLOPS of AI compute and a maximum power of 10.2 kW. In a simplified model, the number of servers required for a training task equals the training compute demand divided by per-server AI performance and by the available training time. For a training demand of about 1.48×10^9 PFLOPS-seconds, dividing by 32 PFLOPS gives about 4.625×10^7 server-seconds. That corresponds to about 46.25 million servers running for 1 second, or about 535 servers running for one full day.
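
The arithmetic can be reproduced with a few lines of Python. This is a sketch of the simplified model only: it takes a training demand of 1.48×10^9 PFLOPS-seconds (the GPT-3 figure used in this section) together with the 32 PFLOPS and 10.2 kW server figures, and it assumes servers run at their rated performance for the whole period. The energy estimate is an upper bound because it uses maximum power.

```python
# Server count for a fixed training demand under the simplified model:
# servers = demand / (per-server performance * training time).

SECONDS_PER_DAY = 24 * 60 * 60

def servers_needed(demand_pflop: float, server_pflops: float,
                   duration_s: float) -> float:
    """Servers required to deliver demand_pflop within duration_s."""
    return demand_pflop / (server_pflops * duration_s)

DEMAND_PFLOP = 1.48e9        # total work, in PFLOPS-seconds
H100_SERVER_PFLOPS = 32.0    # per-server AI compute
H100_SERVER_KW = 10.2        # per-server maximum power

server_seconds = DEMAND_PFLOP / H100_SERVER_PFLOPS
servers_one_day = servers_needed(DEMAND_PFLOP, H100_SERVER_PFLOPS,
                                 SECONDS_PER_DAY)
# Upper-bound energy for the one-day run, assuming maximum power draw.
energy_kwh = servers_one_day * H100_SERVER_KW * 24

print(f"server-seconds:       {server_seconds:,.0f}")
print(f"servers for one day:  {servers_one_day:,.1f}")
print(f"energy (upper bound): {energy_kwh:,.0f} kWh")
```

Because the server count scales inversely with the time allowed, doubling the training window to two days halves the fleet to roughly 268 servers under the same assumptions.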

Using public estimates for GPT-3 (about 175 billion parameters and 45 TB of pretraining data, roughly 300 billion tokens) and an effective utilization factor of 21.3%, the implied training compute requirement is on the order of 1.48×10^9 PFLOPS-seconds. This is a total amount of floating-point work, not a rate.
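
The article does not show how this figure is derived. One widely used approximation that reproduces it is about 6 floating-point operations per parameter per training token, divided by the utilization factor. The sketch below applies that rule; the factor of 6 and the function name are assumptions made for illustration, not something stated in the source.

```python
# Estimate total hardware compute for training, in PFLOPS-seconds.
# Assumption: ~6 FLOPs per parameter per token (forward plus backward pass).

def training_compute_pflop(params: float, tokens: float, utilization: float,
                           flops_per_param_token: float = 6.0) -> float:
    ideal_flop = flops_per_param_token * params * tokens
    # Divide by utilization: hardware must offer more than the ideal work.
    return ideal_flop / utilization / 1e15

gpt3_demand = training_compute_pflop(params=175e9, tokens=300e9,
                                     utilization=0.213)

print(f"GPT-3 training demand: {gpt3_demand:.3e} PFLOPS-seconds")
```

With these inputs the result is close to 1.48×10^9, so the quoted figure is consistent with this rule of thumb, though the original estimate may have been derived differently.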

Sensitivity analysis for AI server training demand should consider two variables: 1) the number of large models trained in parallel, and 2) the time target for completing each model's training. For example, if ten large models must be trained in a single day, tens of thousands of A100 servers or several thousand H100 servers may be required, depending on assumptions.
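
A small grid over the two variables shows how quickly the server count moves. This sketch reuses the 1.48×10^9 PFLOPS-seconds per-model demand and the 32 PFLOPS H100 server figure from this section. The 5 PFLOPS value for an A100 server is an assumption added here for comparison, and the model and day counts are arbitrary sample points.

```python
# Sensitivity of server count to (a) models trained in parallel and
# (b) the time target for each training run.

SECONDS_PER_DAY = 86_400
DEMAND_PER_MODEL_PFLOP = 1.48e9   # PFLOPS-seconds per model

# Per-server AI compute in PFLOPS; the A100 value is an assumption.
SERVER_PFLOPS = {"A100 server": 5.0, "H100 server": 32.0}

def servers_required(num_models: int, days: float,
                     server_pflops: float) -> float:
    total_demand = num_models * DEMAND_PER_MODEL_PFLOP
    return total_demand / (server_pflops * days * SECONDS_PER_DAY)

grid = {
    (name, models, days): servers_required(models, days, pflops)
    for name, pflops in SERVER_PFLOPS.items()
    for models in (1, 10)
    for days in (1, 7, 30)
}

for (name, models, days), count in sorted(grid.items()):
    print(f"{name:12s} models={models:2d} days={days:2d} "
          f"servers={count:10,.0f}")
```

For ten models in one day, the grid gives roughly 34,000 A100 servers or roughly 5,400 H100 servers under these assumptions, in line with the ranges stated above.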

If future model sizes increase substantially (for example, a hypothetical model several times larger than GPT-3), the required AI server count would increase accordingly.

AI Server Demand and Market Estimates

AI servers are foundational compute infrastructure and are expected to grow rapidly with rising compute demand. TrendForce estimated that as of 2022, AI servers equipped with GPGPUs accounted for about 1% of overall server shipments, and that chatbot-related demand could accelerate growth. TrendForce projected year-over-year shipment growth of approximately 8%, with a 2022–2026 compound annual growth rate of around 10.8%.

AI servers are heterogeneous and can be configured in various combinations, such as CPU+GPU, CPU+TPU, or CPU plus other accelerator cards. IDC estimated that China’s AI server market was about $5.7 billion in 2021, up 61.6% year over year, and projected growth to $10.9 billion by 2025, a CAGR of 17.5%.
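
The growth rates in this section can be sanity-checked with the standard compound annual growth rate formula. The sketch below recomputes the CAGR implied by the IDC endpoints and shows what a 10.8% CAGR means over 2022 to 2026. The function names are illustrative, and the shipment baseline is normalized to 1.0 because no absolute shipment figure is given here.

```python
# Compound annual growth rate (CAGR) helpers.

def cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that takes start to end over the given years."""
    return (end / start) ** (1.0 / years) - 1.0

def compound(start: float, rate: float, years: int) -> float:
    """Value after growing start at a fixed annual rate."""
    return start * (1.0 + rate) ** years

# IDC endpoints: $5.7 billion (2021) to $10.9 billion (2025).
china_cagr = cagr(5.7, 10.9, 2025 - 2021)

# TrendForce: 10.8% CAGR over 2022-2026, baseline normalized to 1.0.
shipment_multiple = compound(1.0, 0.108, 2026 - 2022)

print(f"Implied China AI server CAGR: {china_cagr:.1%}")
print(f"Shipments in 2026 relative to 2022: {shipment_multiple:.2f}x")
```

The implied rate comes out within rounding of the quoted 17.5%, and a 10.8% CAGR compounds to roughly 1.5x shipments over four years.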

Example: Inspur NF5688M6

The Inspur NF5688M6 server uses NVSwitch for high-speed GPU peer-to-peer interconnects. A typical configuration uses eight NVIDIA Ampere GPUs connected via NVSwitch, two third-generation Intel Xeon Scalable processors, support for eight 2.5-inch NVMe SSDs or SATA/SAS SSDs, two onboard SATA M.2 slots, an optional PCIe 4.0 x16 OCP 3.0 NIC supporting 10G/25G/100G, up to 10 PCIe 4.0 x16 slots, and support for 32 DDR4 RDIMM/LRDIMM modules at up to 3200 MT/s. The chassis may include multiple hot-pluggable redundant power supplies and fans.

AI servers vary by GPU count: examples include 4-GPU systems, 8-GPU systems (such as A100 configurations with 640 GB of total GPU memory, that is, eight 80 GB GPUs), and 16-GPU systems like the NVIDIA DGX-2.

Key Components

Core AI server components include GPUs, DRAM, SSDs and RAID controllers, CPUs, NICs, PCBs, on-board high-speed interconnect chips, and cooling modules.

Market Share and Cloud Demand

TrendForce reports that in 2022 the four major North American cloud providers (Google, AWS, Meta, and Microsoft) accounted for about 66.2% of AI server procurement. In the Chinese market, the push toward localization has increased AI infrastructure investment. ByteDance had the largest procurement share at about 6.2% in 2022, followed by Tencent, Alibaba, and Baidu at roughly 2.3%, 1.5%, and 1.5% respectively.

ALLPCB
