Overview

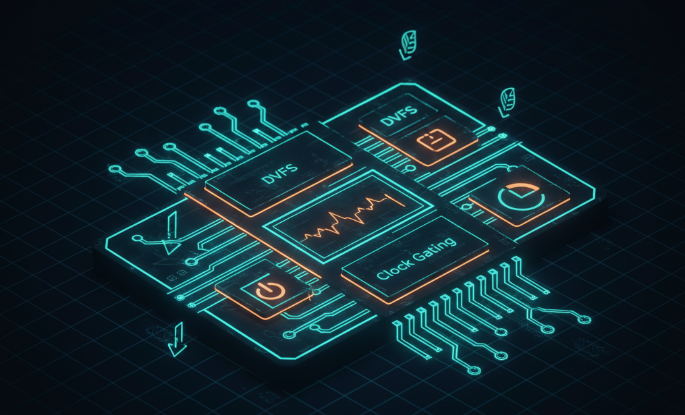

Pipeline design is a typical area-for-performance trade-off. By partitioning long functional paths appropriately, multiple requests for the same function can be processed in parallel, significantly increasing throughput. Splitting long paths into shorter ones also enables higher operating frequency; if higher frequency is not required, the additional timing margin can be used to reduce voltage and power.

1. Temporal Parallelism in Pipelines

Pipelining provides temporal parallelism. Each pipeline stage adds one clock cycle of latency, and all stages advance in lockstep on the same clock. Because different stages work on different requests in the same cycle, their latencies overlap: a pipeline with N stages can hold up to N requests in flight, giving up to N-fold parallelism.
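The overlap can be checked with a little arithmetic. The sketch below is illustrative only: it assumes one new request enters per cycle with no stalls, and it compares against a hypothetical non-pipelined block that takes N cycles per request at the same clock. The function names are made up for this example.

```python
def pipelined_cycles(num_stages, num_requests):
    """Cycles until the last result leaves an N-stage pipeline.

    Assumes one request enters per cycle and nothing stalls:
    N cycles to fill the pipeline, then one result per cycle.
    """
    if num_requests == 0:
        return 0
    return num_stages + num_requests - 1


def sequential_cycles(num_stages, num_requests):
    """Cycles for a hypothetical non-pipelined block that needs
    N cycles per request and handles one request at a time."""
    return num_stages * num_requests


def speedup(num_stages, num_requests):
    """Throughput gain of the pipeline over the sequential block."""
    return (sequential_cycles(num_stages, num_requests)
            / pipelined_cycles(num_stages, num_requests))


if __name__ == "__main__":
    for m in (1, 10, 100, 10_000):
        print(m, pipelined_cycles(4, m), round(speedup(4, m), 3))
```

With 4 stages, a single request gains nothing (4 cycles either way), while 100 requests take 103 cycles instead of 400. The speedup approaches N only when the pipeline is kept full.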

2. Pipeline Stage Partitioning

A pipeline boundary is implemented by a set of registers, and their placement is determined by the designer. When partitioning a pipeline, consider:

- When the logic at a candidate cut is described at a high level of abstraction, partition along complete functional units, for example the execution stages of a CPU.

- Place pipeline registers where datapath bit-widths are smaller to save register count and area.

- Aim for similar critical path delays across stages. The slowest stage sets the clock period, so balanced stages maximize timing margin and allow a higher clock frequency.
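The balancing guideline can be illustrated numerically. The following is a rough sketch, not a timing-analysis method: the stage delays are invented numbers, and `register_overhead_ns` is a hypothetical parameter standing in for register setup and clock-to-output delay.

```python
def max_clock_mhz(stage_delays_ns, register_overhead_ns=0.0):
    """Highest clock frequency the pipeline can run at, in MHz.

    The clock period must cover the slowest stage plus any
    per-register overhead (an assumed, simplified timing model).
    """
    period_ns = max(stage_delays_ns) + register_overhead_ns
    return 1000.0 / period_ns


if __name__ == "__main__":
    # The same 12 ns of logic, cut in two different ways (made-up delays).
    unbalanced = [6.0, 3.0, 3.0]
    balanced = [4.0, 4.0, 4.0]
    print(round(max_clock_mhz(unbalanced), 2))  # limited by the 6 ns stage
    print(round(max_clock_mhz(balanced), 2))    # limited by a 4 ns stage
```

Both partitions use three stages and the same total logic delay, but the unbalanced cut is limited to about 166.67 MHz by its 6 ns stage, while the balanced cut reaches 250 MHz.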

3. Simple Example of Pipeline Design

Structurally a pipeline is straightforward: insert several stages of registers into an otherwise monolithic function. Each stage performs a different portion of the function, and registers update once per clock.
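As a minimal software sketch of this structure, the model below splits a made-up function, ((x + 1) * 2) - 3, into three stages separated by registers. The function, the stage split, and the names `r1`, `r2`, `r3` are assumptions chosen for illustration; `None` stands for an empty slot (a bubble).

```python
def stage1(x):
    return x + 1


def stage2(x):
    return x * 2


def stage3(x):
    return x - 3


def run_pipeline(inputs):
    """Cycle-by-cycle model of a three-stage pipeline."""
    r1 = r2 = r3 = None          # pipeline registers, initially empty
    outputs = []
    # Three extra idle cycles flush the last requests out of the pipeline.
    for x in list(inputs) + [None] * 3:
        # Next values are computed from the *current* register contents...
        n1 = stage1(x) if x is not None else None
        n2 = stage2(r1) if r1 is not None else None
        n3 = stage3(r2) if r2 is not None else None
        # ...and all registers update together, once per clock.
        r1, r2, r3 = n1, n2, n3
        if r3 is not None:
            outputs.append(r3)
    return outputs


if __name__ == "__main__":
    print(run_pipeline([1, 2, 3]))  # [1, 3, 5]
```

Each result matches the monolithic function, but once the pipeline is full a new result appears every cycle, because the three stages work on three different inputs at once.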

4. Backpressure Propagation Between Pipeline Stages

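When the downstream side cannot accept data, the stall must propagate backward through the pipeline one stage at a time. For example, the last-stage valid signal vld_out must complete a handshake with the downstream backpressure signal rdy_in before the previous stage s2 can update the output register out.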
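One common way to realize this is a valid/ready chain, sketched below as a cycle-level model. This is an assumed scheme rather than necessarily the one the original design uses: a stage may load new data when it is empty or when the stage after it is accepting data in the same cycle. Only `vld_out` and `rdy_in` come from the text; `simulate` and the other names are hypothetical.

```python
def simulate(inputs, rdy_in_pattern, num_stages=3):
    """Model a pipeline with backward-propagating backpressure.

    regs[i] is None when that stage's valid bit is low.
    rdy_in_pattern gives the downstream rdy_in value for each cycle.
    Returns the data items accepted downstream, in order.
    """
    regs = [None] * num_stages
    pending = list(inputs)       # upstream always offers data if it has any
    received = []
    for rdy_in in rdy_in_pattern:
        # Ready is computed from the last stage backward.
        ready = [False] * num_stages
        next_ready = rdy_in
        for i in reversed(range(num_stages)):
            ready[i] = regs[i] is None or next_ready
            next_ready = ready[i]
        # Output handshake: vld_out (last register valid) and rdy_in.
        if regs[-1] is not None and rdy_in:
            received.append(regs[-1])
        # Clock edge: every ready stage loads from its predecessor.
        new_regs = list(regs)
        for i in range(num_stages):
            if ready[i]:
                if i == 0:
                    new_regs[i] = pending.pop(0) if pending else None
                else:
                    new_regs[i] = regs[i - 1]
        regs = new_regs
    return received


if __name__ == "__main__":
    pattern = [True, False, False, True, False, True, True, True]
    print(simulate([1, 2, 3, 4], pattern))  # [1, 2, 3, 4]
```

In this run the pipeline fills while rdy_in is low, freezes completely in the cycle where every stage is full and rdy_in is still low, and then drains in order. No item is lost or duplicated, which is the property the handshake is meant to guarantee.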

5. Other Backpressure Scenarios in Pipelines

Besides stage-to-stage backpressure originating from the final stage, any individual stage may be independently backpressured depending on the specific design.

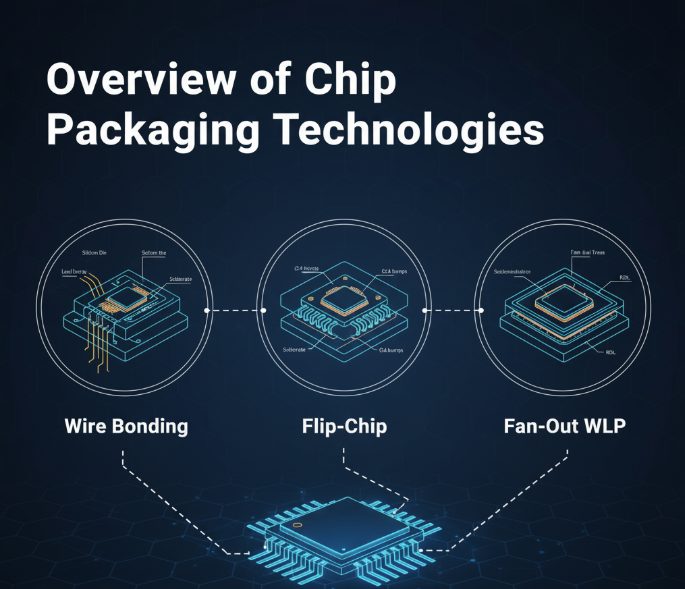

ALLPCB