Large AI models have proliferated, expanding opportunities for learning and exploration. Although AI tools are now common, concepts such as prompts, model throughput, distillation and quantization, and private knowledge bases can still be unfamiliar. This article summarizes ten core concepts in the AI field.

1. Model variants

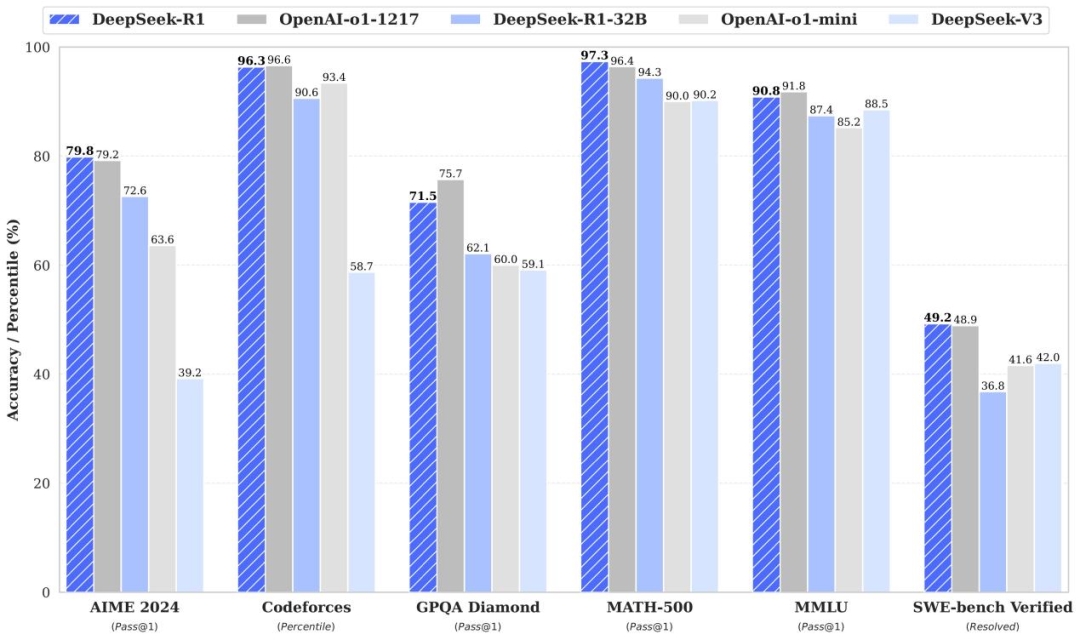

Each large model has a distinct design and set of capabilities. Some models perform well at language understanding, while others are stronger at image generation or domain-specific tasks. A single model family can also include several models, for example DeepSeek V3 (a general-purpose model) and DeepSeek R1 (a reasoning-focused model). Within one line of models, a higher version number typically indicates overall improvements, such as larger parameter counts, faster speed, longer context windows, or new multimodal features.

Many models are fine-tuned for specific tasks and carry names that reflect that, such as DeepSeek Coder V2 or DeepSeek Math. Naming conventions often add terms like "chat" for conversational models, "coder" for code generation, "math" for mathematical tasks, or "vision" for visual capabilities.

2. Model parameters

The number of model parameters is often very large, for example 7B, 14B, or 32B (7B = 7 billion = 7,000,000,000 parameters). A model with billions or trillions of parameters can be thought of as a very large "brain." Parameters determine how the model interprets input and generates output.

In general, larger models can learn more complex patterns. Larger parameter counts also increase cost in memory, compute, and response time, so it is important to choose a model size appropriate for the complexity of the task.
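To make the cost side concrete, here is a minimal sketch of how weight storage scales with parameter count. It assumes memory is roughly the parameter count times the bytes per parameter and ignores activations, caches, and runtime overhead; the function name and the byte table are illustrative, not taken from any particular framework.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: memory ~= parameter count x bytes per parameter.
# Activations, caches, and runtime overhead are ignored.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("7B", 7e9), ("14B", 14e9), ("32B", 32e9), ("70B", 70e9)]:
    print(f"{name}: about {weight_memory_gb(params):.0f} GB of weights in fp16")
```

Under these assumptions, a 7B model needs about 14 GB for its weights in 16-bit precision, which is one reason smaller models are attractive for low-latency or low-budget deployments.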

7B models

Typical use cases: Suitable for scenarios that require low latency and involve relatively simple tasks. Examples include basic text classification, such as quickly categorizing news articles into politics, economics, or entertainment, and simple customer service tasks that answer common, fixed questions like "What is the product price?" or "How do I register an account?"

14B models

Typical use cases: Capable of handling somewhat more complex natural language tasks. Examples include simple abstractive summarization to extract key points from an article, and customer service scenarios that can understand slightly more complex user issues, such as "A product I purchased is malfunctioning, how can I fix it?" and provide appropriate solutions.

32B models

Typical use cases: Higher-quality text generation, such as advertising copy or social media posts, where more creative and polished output is useful. In question-answering systems, they can address domain-related questions such as "What is the development history of artificial intelligence?"

70B models

Typical use cases: Stronger language understanding and generation abilities for complex dialogue systems. These models can handle multi-turn conversations, maintain context across turns, and generate coherent long-form text such as novels or technical documents. They can serve as writing assistants to help produce reports and proposals, offering content suggestions and higher-quality support for complex writing tasks.

671B models

Typical use cases: Extremely large models usually have comprehensive knowledge and strong language processing capabilities, suitable for very complex tasks. Examples include assisting researchers in analyzing academic literature and building knowledge graphs, and in finance, performing deep analysis of large datasets for risk assessment and investment forecasting. By analyzing large volumes of data, such models can provide valuable insights and support major business decisions.

3. Context length

Context length can be thought of as a memory window. When a large model processes text, it can only take into account a limited span of text, which covers the input and any output generated so far. This span is the context length. Short, simple questions work with short context lengths, while tasks that involve large volumes of text require longer context windows.

Common context lengths, measured in tokens, include:

- 2K (2048): Standard length for general conversations

- 4K (4096): Medium length for longer documents

- 8K (8192): Longer context for extended analysis

- 32K+: Very long context for processing book-length content

If the context window is too short, the model may "forget" earlier information and produce less accurate responses.
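The "forgetting" effect can be sketched with a simple truncation routine. This is only an illustration: it assumes the application drops the oldest messages when the history no longer fits, and it counts whitespace-separated words as a crude stand-in for real tokens. The function name is hypothetical.

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined length fits the window."""
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = len(msg.split())  # crude stand-in for a real token count
        if used + cost > max_tokens:
            break  # everything older than this point is dropped
        kept.append(msg)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["my name is Ada", "I like apples", "what is my name"]
print(fit_to_context(history, max_tokens=8))
```

With a window of 8 "tokens", the first message no longer fits, so the model would never see the name it is being asked about.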

4. Token

A token can be understood as a building block of text. When a model processes text, it splits the text into small units called tokens. For example, the sentence "I like apples" might be split into tokens such as "I", "like", and "apples". In practice, tokens are often subword units, so a long or rare word may be split into several tokens.

The model analyzes these tokens to derive the meaning of the whole text. Each token is mapped to an integer identifier and then to a vector representation, and the model operates on these representations to perform language processing.

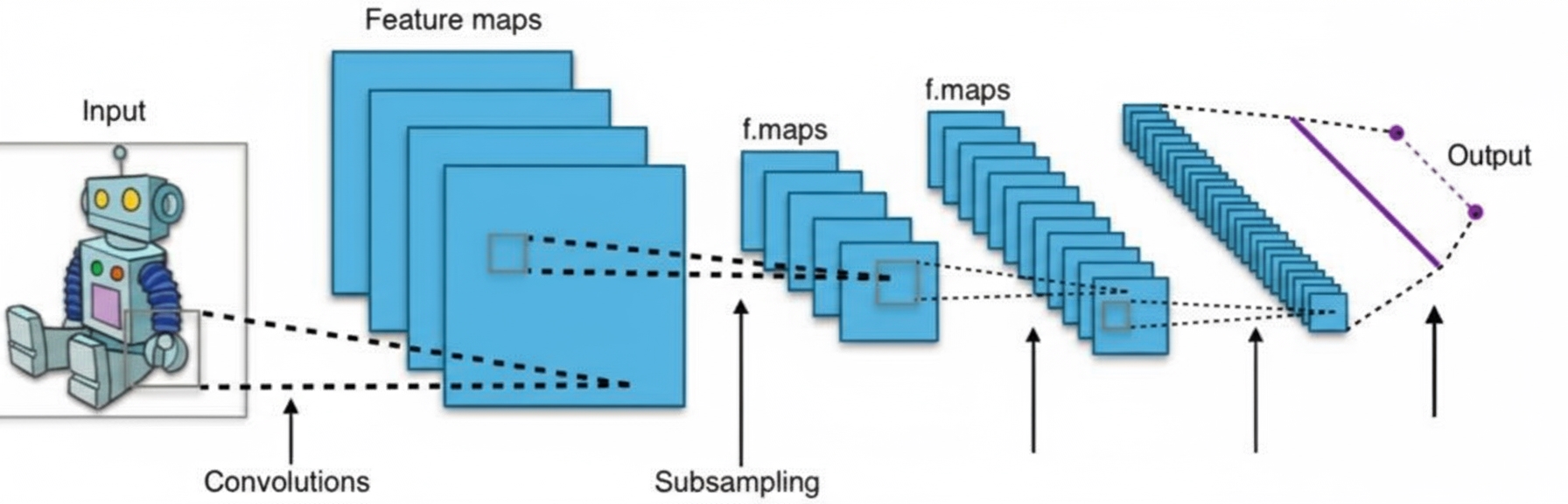
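As a toy illustration of the text-to-identifier step, the sketch below splits on whitespace and assigns each distinct word an integer ID. Real tokenizers typically use learned subword vocabularies, so the splitting rule, the `<unk>` placeholder, and the function names here are simplifying assumptions.

```python
def build_vocab(corpus: list[str]) -> dict[str, int]:
    """Assign an integer ID to every distinct whitespace-separated word."""
    vocab = {"<unk>": 0}  # ID 0 is reserved for words not seen before
    for sentence in corpus:
        for word in sentence.split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Convert text into a list of token IDs, using <unk> for unknown words."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.split()]


vocab = build_vocab(["I like apples"])
print(encode("I like apples", vocab))
print(encode("I like pears", vocab))
```

The second call shows why subword tokenizers are preferred in practice: a word-level vocabulary has no way to represent "pears" other than the unknown placeholder.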

5. Distillation

Distillation is like extracting the essence from a knowledgeable teacher (a large model) and teaching it to a student (a smaller model). Large models often contain extensive knowledge but can be complex and slow to run.

Through distillation, a smaller model learns to mimic the behavior and knowledge of a larger model. The result is a simpler, more efficient model that retains much of the original performance while being faster and more resource efficient.
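One common way to express "mimicking the teacher" is to compare the teacher's and the student's output probabilities and penalize the difference. The sketch below assumes a soft-label formulation with a temperature and a KL-divergence loss; the article does not specify a method, so treat this as one possible illustration with made-up logits.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float],
                      student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL divergence from the teacher's soft labels to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


teacher = [4.0, 1.0, 0.5]      # illustrative values only
good_student = [3.8, 1.1, 0.4]
poor_student = [0.5, 4.0, 1.0]
print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

Training would adjust the student's parameters to reduce this loss, so a student whose outputs track the teacher's closely ends up with a smaller value.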

6. Quantization

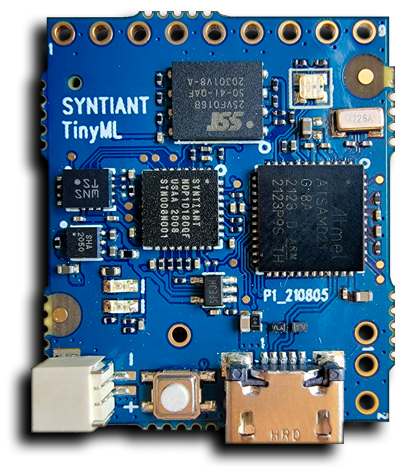

Model parameters are typically stored as high-precision numbers, which require substantial storage and compute resources. Quantization represents these precise numbers in a more compact form, for example converting 32-bit floating point values to 8-bit integers.

It is like reproducing a full-color painting with fewer colors. Reducing the number of distinct values can save computation and storage. Although quantization may introduce some loss of precision, it can significantly improve runtime performance and enable the model to run on resource-constrained devices.
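The float-to-integer conversion can be sketched as follows. This assumes a simple symmetric scheme with one scale factor for the whole list of weights; production schemes vary (per-channel scales, zero points, 4-bit formats), and the function names are illustrative.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using a single scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.0, 0.031, 0.0]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
for w, r in zip(weights, restored):
    print(f"original {w:+.4f}  restored {r:+.4f}  error {abs(w - r):.4f}")
```

Each weight now occupies one byte instead of four, and the printed errors show the small loss of precision that the trade involves.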

7. Knowledge base and RAG

A knowledge base is like a large library that stores various kinds of factual information, such as historical events, scientific knowledge, and cultural references.

RAG, or retrieval-augmented generation, is a method that retrieves relevant information from this "library" and provides it to the model so the model can generate answers grounded in accurate, retrieved information.
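The retrieve-then-generate flow can be sketched in a few lines. This toy version ranks documents by word overlap with the question and pastes the best match into the prompt; real systems usually rank with vector embeddings, and the knowledge-base contents, function names, and prompt wording below are all made up for illustration.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, knowledge_base: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the query."""
    q = words(query)
    ranked = sorted(knowledge_base, key=lambda doc: len(q & words(doc)), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(query: str, knowledge_base: list[str], top_k: int = 1) -> str:
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n".join(retrieve(query, knowledge_base, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


KNOWLEDGE_BASE = [
    "The return window is 30 days.",
    "Shipping takes 5 business days.",
    "Support is open on weekdays.",
]
print(build_rag_prompt("How long is the return window?", KNOWLEDGE_BASE))
```

The resulting prompt is what would be sent to the model, so the answer can draw on the retrieved passage rather than only on what the model learned during training.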

8. MoE (Mixture of Experts)

Some models adopt the MoE architecture, which contains multiple expert modules (sub-networks), each of which tends to specialize in a particular kind of data or task.

When presented with an input, a routing component (often called a gate or router) selects the most appropriate expert or combines several experts to produce the final result. This is similar to a team where different members have specialized skills and the most suitable member handles a given problem. Because only the selected experts run for a given input, and each one handles the data it is best suited for, this can improve both efficiency and accuracy.
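The select-and-combine step can be sketched as top-k routing. In a real model the experts are neural sub-networks and the scores come from a learned gating layer; here both are replaced by hypothetical stand-in functions so the control flow is easy to follow.

```python
import math
from typing import Callable, Sequence


def softmax(scores: Sequence[float]) -> list[float]:
    """Normalize scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float,
                experts: Sequence[Callable[[float], float]],
                router: Callable[[float], Sequence[float]],
                top_k: int = 2) -> float:
    """Run only the top_k highest-scoring experts and blend their outputs."""
    scores = router(x)
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in top])
    return sum(w * experts[i](x) for w, i in zip(weights, top))


# Hypothetical experts and router, for illustration only.
experts = [lambda x: x + 1.0, lambda x: x * 2.0, lambda x: -x]
router = lambda x: [0.0, 0.0, -10.0]  # strongly prefers the first two experts
print(moe_forward(3.0, experts, router))
```

Only two of the three experts are evaluated for this input, which is how an MoE model can hold many parameters in total while using a fraction of them per request.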

9. Prompt

A prompt is the instruction or input given to a large model. When you ask a model to perform a task or answer a question, the text you provide is the prompt. Prompt design is important because it directly influences the model's output.

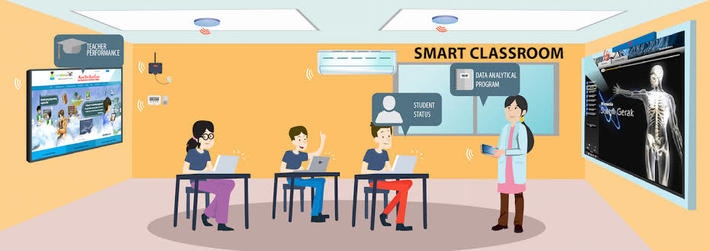
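A small sketch shows how much information a prompt can carry beyond the bare question. The field names (task, context, output format) are an illustrative convention assumed here, not a required structure.

```python
def make_prompt(task: str, context: str = "", output_format: str = "") -> str:
    """Assemble a prompt from a task plus optional context and format hints."""
    parts = [f"Task: {task}"]
    if context:
        parts.append(f"Context: {context}")
    if output_format:
        parts.append(f"Output format: {output_format}")
    return "\n".join(parts)


vague = make_prompt("Summarize this article.")
specific = make_prompt(
    "Summarize this article.",
    context="The reader is a hardware engineer new to AI.",
    output_format="Three bullet points, each under 20 words.",
)
print(vague)
print("---")
print(specific)
```

Both prompts ask for a summary, but the second tells the model who the audience is and what shape the answer should take, which usually narrows the range of outputs.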

10. Agent

An agent can autonomously perform tasks based on a model's capabilities and predefined rules, such as conducting conversations with users, processing information, or carrying out specific operations.

An agent behaves like a small autonomous robot that understands user requirements and uses the model's knowledge and its own functionality to help solve problems and provide assistance.
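The combination of model decisions and predefined operations can be sketched as a tiny loop: decide which tool to use, then run it. The `choose_action` function below is a hypothetical rule-based stand-in for a model call, and the two tools are made up for illustration.

```python
from typing import Callable


def choose_action(request: str) -> tuple[str, str]:
    """Hypothetical stand-in for a model call: map a request to (tool, argument)."""
    if request.lower().startswith("add "):
        return "add", request[4:]
    return "reply", request


def add_tool(argument: str) -> str:
    """Sum the whitespace-separated numbers in the argument."""
    return str(sum(float(n) for n in argument.split()))


def reply_tool(argument: str) -> str:
    """Fallback used when no specific operation applies."""
    return f"I have no tool for: {argument}"


TOOLS: dict[str, Callable[[str], str]] = {"add": add_tool, "reply": reply_tool}


def run_agent(request: str) -> str:
    """Pick a tool for the request, execute it, and return the result."""
    tool_name, argument = choose_action(request)
    return TOOLS[tool_name](argument)


print(run_agent("add 2 3"))
print(run_agent("book a flight"))
```

In a real agent the decision step would be made by the model and the loop would typically repeat, feeding each tool result back until the task is complete.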

ALLPCB
