In the context of rapid development in artificial intelligence and machine learning, efficient use of GPU computing resources has become a key performance metric. Optimized GPU allocation can significantly speed up model training while controlling compute costs. According to a 2024 industry survey by the AI Infrastructure Alliance, only 7% of organizations achieve over 85% GPU utilization during peak load, highlighting a substantial gap in AI infrastructure optimization. This article systematically analyzes 12 technical strategies to improve GPU compute efficiency and explains concrete implementation methods and tool choices for optimizing AI/ML workloads.

1. Implement mixed precision training

Mixed precision training uses both 16-bit and 32-bit floating point representations to reduce memory usage and improve GPU compute efficiency while maintaining model accuracy. This approach can significantly accelerate computational performance without negatively affecting final model convergence.

In practice, mixed precision can be enabled via automatic mixed precision (AMP) features provided by major deep learning frameworks, such as torch.cuda.amp in PyTorch or the tf.keras.mixed_precision module in TensorFlow. The core advantage is reduced data transfer between GPU memory and compute units: 16-bit values occupy half the memory of 32-bit values, allowing more data to be loaded into GPU caches per unit time and increasing overall compute throughput. Before deploying mixed precision in production, conduct thorough accuracy and performance testing to ensure stable model convergence.

2. Optimize data loading and preprocessing

An efficient data pipeline is critical to minimizing GPU idle time and ensuring compute resources remain busy. Configure tools like PyTorch DataLoader and tune the num_workers parameter to achieve parallel data loading. Increasing num_workers lets the system prepare the next batch in the background while the GPU processes the current batch, reducing delays caused by data loading.

For frequently accessed datasets, cache them in system memory or use high-speed NVMe SSDs to lower retrieval latency. Prioritize data prefetching strategies and, where possible, perform preprocessing directly on the GPU to minimize CPU-GPU communication overhead.

3. Make full use of Tensor Cores for matrix operations

Tensor Cores are specialized hardware units in modern NVIDIA GPUs optimized for matrix operations. To leverage this hardware acceleration, ensure the model uses data types compatible with Tensor Cores, such as float16 or bfloat16, which are optimized for Tensor Core execution.

Popular frameworks like PyTorch and TensorFlow will automatically use Tensor Cores when certain conditions are met. This hardware acceleration is especially effective for convolutional layers and large matrix multiplications, providing substantial performance gains.

4. Tune batch size appropriately

Selecting the right batch size is important for balancing memory efficiency and GPU utilization. In practice, incrementally increase batch size until it approaches but does not exceed GPU memory limits to avoid out-of-memory errors. Larger batch sizes increase parallel computation and can improve throughput.

If memory is constrained, consider gradient accumulation. This technique accumulates gradients over several smaller mini-batches before performing a weight update, effectively increasing the equivalent batch size without exceeding memory limits, thus improving computation efficiency while maintaining memory constraints.

5. GPU usage analysis and real-time monitoring

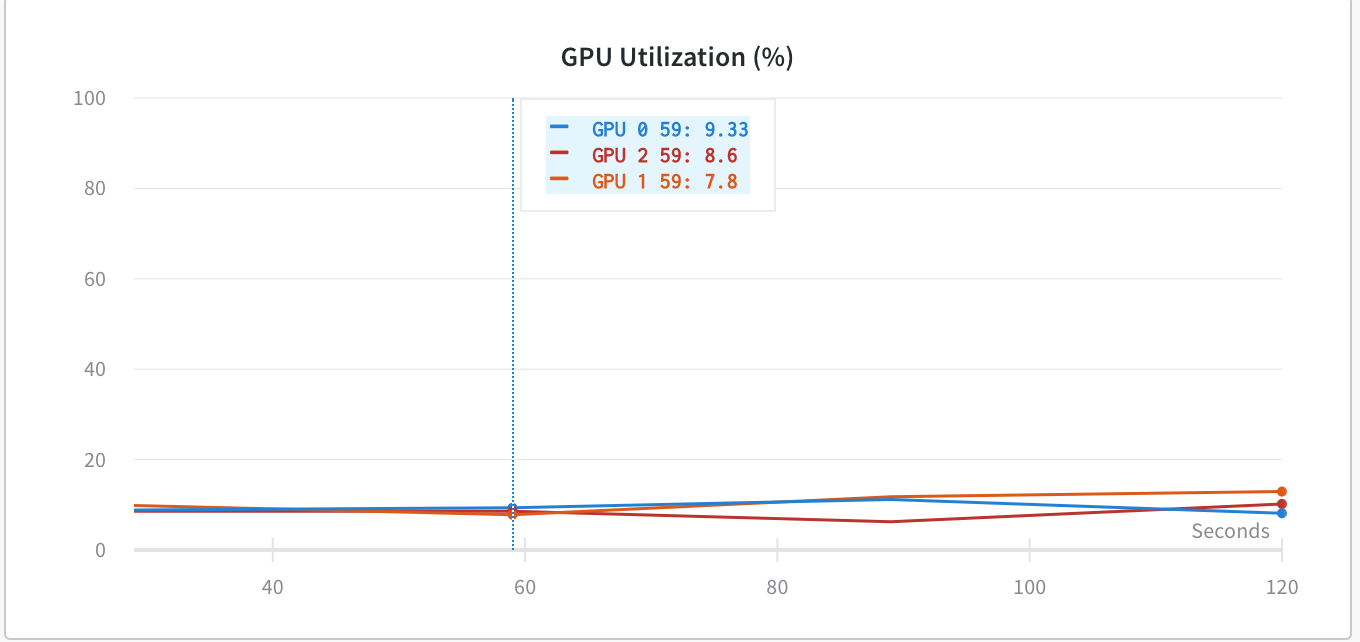

Performance monitoring tools are essential for identifying system bottlenecks and ensuring GPUs are fully utilized. Tools such as NVIDIA Nsight Systems, PyTorch Profiler, and TensorFlow Profiler provide deep performance analysis to locate inefficient code paths, memory bottlenecks, and GPU idle cycles.

Focus on key metrics like GPU memory usage, compute unit utilization, and data transfer efficiency. Use profiling results to refactor training workflows or adjust data flow patterns to improve overall system performance.

6. Optimize model architecture

Efficient model design can significantly reduce compute overhead and improve GPU performance. During model design, consider techniques such as depthwise separable convolutions, grouped convolutions, or efficient attention mechanisms to minimize computational cost while preserving accuracy.

For existing models, apply pruning or quantization to reduce model size and enhance compute efficiency. Pruning removes redundant neurons or connections, while quantization reduces numerical precision to lower memory and compute requirements.

For multi-stage or complex models, run systematic benchmarks to identify layers or operations that limit pipeline efficiency and target those hotspots for optimization.

7. Manage GPU memory efficiently

Poor memory management can cause out-of-memory errors or low GPU utilization. Use memory-efficient frameworks such as DeepSpeed or PyTorch Lightning, which help automate memory allocation and release unused tensor resources.

In practice, free unused tensors with functions like torch.cuda.empty_cache() or tf.keras.backend.clear_session() to release GPU memory, which is especially helpful when long training runs cause memory fragmentation. Another strategy is to preallocate large tensors early in training to reduce fragmentation and improve runtime stability.

8. Reduce CPU-GPU data transfer overhead

Frequent data transfers between CPU and GPU are a common performance bottleneck. Minimize data movement by keeping frequently used tensors resident in GPU memory. Repeatedly moving data between CPU and GPU increases processing latency.

Use asynchronous operations managed with torch.cuda.Stream() or tf.device() to overlap CPU-GPU communication with computation. Additionally, data prefetching that loads data onto the GPU ahead of use can reduce transfer latency during training.

9. Enable XLA optimizations

XLA optimizations improve TensorFlow performance by optimizing computation graph execution and reducing runtime overhead. In TensorFlow, enable XLA by annotating supported functions with tf.function(jit_compile=True). This lets TensorFlow compile parts of the computation graph for better execution efficiency.

Benchmark workloads before fully deploying XLA to verify performance gains. Note that while XLA often improves performance, some operations may perform better without XLA, so evaluate based on the specific workload.

10. Distributed training strategies for large workloads

For large models or datasets, distributed training improves scalability and performance. Use libraries such as Horovod, DeepSpeed, or PyTorch DistributedDataParallel to implement multi-GPU training. These tools handle gradient synchronization across multiple GPUs efficiently.

To optimize gradient communication, apply techniques like gradient compression or overlap communication with computation to minimize synchronization delays. Shard large datasets appropriately across GPUs to increase data parallelism and reduce per-GPU memory pressure.

11. Implement efficient checkpointing

A practical checkpoint strategy is essential for periodically saving model state and preventing data loss from failures. Use incremental checkpointing that saves only updated model state rather than the entire model to reduce I/O overhead and speed up recovery.

Frameworks like DeepSpeed provide optimized checkpointing methods that minimize interruptions to GPU computation during model saving, helping maintain training continuity and efficiency.

12. Maximize GPU cluster resource utilization

For large-scale training, GPU clusters offer significant throughput and reduced training time. When building a GPU cluster, consider using Kubernetes with GPU resource scheduling to manage multi-GPU nodes efficiently.

Use job schedulers such as Ray, Dask, or Slurm to optimize task scheduling and run parallel workloads across multiple GPUs. Ensure efficient cross-node data sharding strategies to minimize inter-node data transfer bottlenecks.

Summary

The strategies described here provide comprehensive technical guidance for improving GPU utilization in AI/ML workloads. Implementing data pipeline parallelism, memory management optimizations, and model design improvements can accelerate training and reduce operational cost. Regular performance analysis and system tuning are essential for identifying bottlenecks and improving compute efficiency, providing a solid technical foundation for AI system development.

ALLPCB

ALLPCB